Over the 2024 Spring Festival holiday, the AIGC world saw several more major, game-changing events.

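- OpenAI released Sora, its first text-to-video model. Put simply, AI video is about to change dramatically. Earlier models such as SVD and Google's Lumiere topped out at a few dozen frames, roughly ten seconds at most, whereas Sora is a SOTA-level text-to-video model that reaches 60 s clips.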

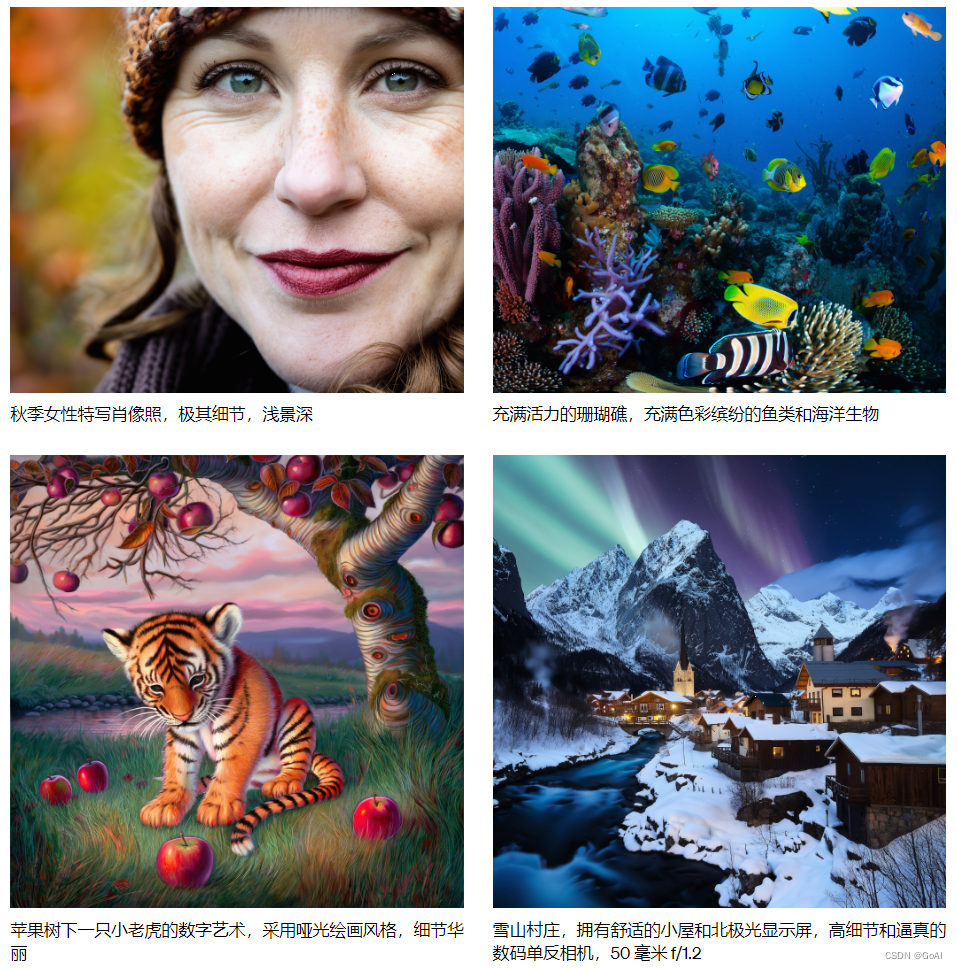

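- Stability AI open-sourced Stable Cascade, which reworks the diffusion architecture and runs faster than SDXL. For the models currently on Hugging Face, though, I still recommend more than 20 GB of VRAM. X user @GozukaraFurkan has posted that it needs only about 9 GB of GPU memory while keeping good speed; the details of that optimization will have to wait for the GitHub release.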

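- Apple began selling Vision Pro, another heavyweight metaverse contender after Meta Quest 3. The hype around Pico and Black Shark has cooled for now, but Silicon Valley's enthusiasm is as high as ever and is worth learning from.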

Deployment

    # Install `diffusers` from this branch while the PR is WIP
    pip install git+https://github.com/kashif/diffusers.git@wuerstchen-v3

The latest official version has a problem and reports: `If you want to instead overwrite randomly initialized weights, please make sure to pass both low_cpu_mem_usage=False and ignore_mismatched_sizes=True.`

    # See https://github.com/kijai/ComfyUI-DiffusersStableCascade/issues/13
    pip install --force-reinstall --no-deps git+https://github.com/huggingface/diffusers.git@a3dc21385b7386beb3dab3a9845962ede6765887
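
After reinstalling, it can be handy to confirm which `diffusers` build is active before loading any weights. The following is only a minimal sketch: the helper name `stable_cascade_available` is made up for illustration, and it checks only that the two pipeline names are exposed, not that they load or run correctly.

```python
import importlib
from importlib import metadata


def stable_cascade_available() -> bool:
    """Return True if the installed diffusers exposes both Stable Cascade pipelines.

    Hypothetical helper for illustration; it does not load any model weights.
    """
    try:
        version = metadata.version("diffusers")
    except metadata.PackageNotFoundError:
        print("diffusers is not installed")
        return False
    print(f"diffusers version: {version}")

    try:
        module = importlib.import_module("diffusers")
        # Look up both class names used in the code sections of this post.
        getattr(module, "StableCascadePriorPipeline")
        getattr(module, "StableCascadeDecoderPipeline")
    except Exception as exc:  # a class is missing, or the import itself failed
        print(f"Stable Cascade pipelines unavailable: {exc}")
        return False
    return True
```

Calling `stable_cascade_available()` prints the installed version and returns `False` when the build predates the Stable Cascade pipelines, which is a cheap check to run before downloading several gigabytes of weights.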

Code

    import torch
    from diffusers import StableCascadeDecoderPipeline, StableCascadePriorPipeline

    device = "cuda"
    dtype = torch.bfloat16
    num_images_per_prompt = 2

    # Load both stages in bfloat16 and move them to the GPU
    prior = StableCascadePriorPipeline.from_pretrained("stabilityai/stable-cascade-prior", torch_dtype=dtype).to(device)
    decoder = StableCascadeDecoderPipeline.from_pretrained("stabilityai/stable-cascade", torch_dtype=dtype).to(device)

    prompt = "Anthropomorphic cat dressed as a pilot"
    negative_prompt = ""

    with torch.cuda.amp.autocast(dtype=dtype):
        # The prior turns the prompt into image embeddings
        prior_output = prior(
            prompt=prompt,
            height=1024,
            width=1024,
            negative_prompt=negative_prompt,
            guidance_scale=4.0,
            num_images_per_prompt=num_images_per_prompt,
        )
        # The decoder turns the embeddings into PIL images
        decoder_output = decoder(
            image_embeddings=prior_output.image_embeddings,
            prompt=prompt,
            negative_prompt=negative_prompt,
            guidance_scale=0.0,
            output_type="pil",
        ).images
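
The script above keeps the prior and the decoder on the GPU at the same time, which is one reason for the high VRAM recommendation. One common way to lower the peak is to run the stages one after another and release the first before loading the second. The sketch below illustrates that idea only; `run_two_stage`, `load_prior`, and `load_decoder` are hypothetical names, it is not the optimization @GozukaraFurkan referred to, and whether it gets anywhere near 9 GB is untested.

```python
import gc


def _free_memory():
    """Collect garbage and, if PyTorch with CUDA is present, release cached GPU blocks."""
    gc.collect()
    try:
        import torch
    except ImportError:
        return
    if torch.cuda.is_available():
        torch.cuda.empty_cache()


def run_two_stage(load_prior, load_decoder, prompt, negative_prompt=""):
    """Run prior then decoder, holding only one pipeline in memory at a time.

    load_prior / load_decoder are zero-argument callables (hypothetical) that
    return a ready-to-call pipeline, e.g. a from_pretrained(...).to("cuda") call.
    """
    prior = load_prior()
    embeddings = prior(
        prompt=prompt,
        negative_prompt=negative_prompt,
        height=1024,
        width=1024,
        guidance_scale=4.0,
    ).image_embeddings

    # Drop the prior before the decoder is loaded so their weights never coexist.
    del prior
    _free_memory()

    decoder = load_decoder()
    images = decoder(
        image_embeddings=embeddings,
        prompt=prompt,
        negative_prompt=negative_prompt,
        guidance_scale=0.0,
        output_type="pil",
    ).images

    del decoder
    _free_memory()
    return images
```

With the pipelines from this post, `load_prior` could be something like `lambda: StableCascadePriorPipeline.from_pretrained("stabilityai/stable-cascade-prior", torch_dtype=torch.bfloat16).to("cuda")`, and `load_decoder` the matching decoder call. The trade-off is extra load time on every run, since neither pipeline stays resident.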

Code (bfloat16 prior, float16 decoder)

    import torch
    from diffusers import StableCascadeDecoderPipeline, StableCascadePriorPipeline

    device = "cuda"
    num_images_per_prompt = 2

    # The prior runs in bfloat16, the decoder in float16
    prior = StableCascadePriorPipeline.from_pretrained("stabilityai/stable-cascade-prior", torch_dtype=torch.bfloat16).to(device)
    decoder = StableCascadeDecoderPipeline.from_pretrained("stabilityai/stable-cascade", torch_dtype=torch.float16).to(device)

    prompt = "Anthropomorphic cat dressed as a pilot"
    negative_prompt = ""

    prior_output = prior(
        prompt=prompt,
        height=1024,
        width=1024,
        negative_prompt=negative_prompt,
        guidance_scale=4.0,
        num_images_per_prompt=num_images_per_prompt,
        num_inference_steps=20,
    )

    decoder_output = decoder(
        # Cast the bfloat16 embeddings to float16 to match the decoder
        image_embeddings=prior_output.image_embeddings.half(),
        prompt=prompt,
        negative_prompt=negative_prompt,
        guidance_scale=0.0,
        output_type="pil",
        num_inference_steps=10,
    ).images

    # decoder_output is now a list of PIL images
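
Since `decoder_output` is a plain list of PIL images, writing them to disk takes only a few lines. This is a minimal sketch: the helper name `save_images`, the `outputs` directory, and the `cascade` file prefix are all arbitrary choices for illustration.

```python
from pathlib import Path


def save_images(images, out_dir="outputs", prefix="cascade"):
    """Save each image as <prefix>_<index>.png in out_dir and return the paths."""
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)

    saved = []
    for index, image in enumerate(images):
        target = out_path / f"{prefix}_{index:02d}.png"
        image.save(target)  # PIL images accept a path and infer PNG from the suffix
        saved.append(target)
    return saved
```

After either script has run, `save_images(decoder_output)` would write one PNG per generated image into the `outputs` directory.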
