Prerequisites:

YOTO.h:

#pragma once

// For use by YOTO applications

#include "YOTO/Application.h"

#include"YOTO/Layer.h"

#include "YOTO/Log.h"

#include"YOTO/Core/Timestep.h"

#include"YOTO/Input.h"

#include"YOTO/KeyCode.h"

#include"YOTO/MouseButtonCodes.h"

#include "YOTO/OrthographicCameraController.h"

#include"YOTO/ImGui/ImGuiLayer.h"

//Renderer

#include"YOTO/Renderer/Renderer.h"

#include"YOTO/Renderer/RenderCommand.h"

#include"YOTO/Renderer/Buffer.h"

#include"YOTO/Renderer/Shader.h"

#include"YOTO/Renderer/Texture.h"

#include"YOTO/Renderer/VertexArray.h"

#include"YOTO/Renderer/OrthographicCamera.h"

// Entry point

#include"YOTO/EntryPoint.h"

Adding the methods:

OrthographicCamera.h:

#pragma once

#include <glm/glm.hpp>

namespace YOTO {

class OrthographicCamera

{

public:

OrthographicCamera(float left, float right, float bottom, float top);

void SetProjection(float left, float right, float bottom, float top);

const glm::vec3& GetPosition()const { return m_Position; }

void SetPosition(const glm::vec3& position) {

m_Position = position;

RecalculateViewMatrix();

}

float GetRotation()const { return m_Rotation; }

void SetRotation(float rotation) {

m_Rotation = rotation;

RecalculateViewMatrix();

}

const glm::mat4& GetProjectionMatrix()const { return m_ProjectionMatrix; }

const glm::mat4& GetViewMatrix()const { return m_ViewMatrix; }

const glm::mat4& GetViewProjectionMatrix()const { return m_ViewProjectionMatrix; }

private:

void RecalculateViewMatrix();

private:

glm::mat4 m_ProjectionMatrix;

glm::mat4 m_ViewMatrix;

glm::mat4 m_ViewProjectionMatrix;

glm::vec3 m_Position = { 0.0f ,0.0f ,0.0f };

float m_Rotation = 0.0f;

};

}
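`RecalculateViewMatrix`, declared above, builds the camera transform as a translation followed by a rotation about the Z axis and then inverts it. As a rough illustration of what that inverse does to a world-space point, here is a minimal sketch that avoids glm entirely. `Vec2` and `WorldToView` are hypothetical names used only for this example, not part of YOTO.

```cpp
#include <cmath>

// Hypothetical 2D stand-in for glm::vec3; not part of YOTO.
struct Vec2 {
    float x;
    float y;
};

// Applies the inverse of (translate(position) * rotateZ(degrees)) to a
// world-space point: first undo the translation, then undo the rotation.
inline Vec2 WorldToView(Vec2 world, Vec2 cameraPosition, float cameraRotationDegrees) {
    const float radians = cameraRotationDegrees * 3.14159265358979f / 180.0f;
    const float c = std::cos(radians);
    const float s = std::sin(radians);

    // Undo the translation.
    const float dx = world.x - cameraPosition.x;
    const float dy = world.y - cameraPosition.y;

    // Undo the rotation by rotating through the negative angle.
    return Vec2{ c * dx + s * dy, -s * dx + c * dy };
}
```

A point that coincides with the camera position maps to the view-space origin, which is why moving the camera right makes the scene appear to move left.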

OrthographicCamera.cpp:

#include "ytpch.h"

#include "OrthographicCamera.h"

#include <glm/gtc/matrix_transform.hpp>

namespace YOTO {

OrthographicCamera::OrthographicCamera(float left, float right, float bottom, float top)

:m_ProjectionMatrix(glm::ortho(left, right, bottom, top)), m_ViewMatrix(1.0f)

{

m_ViewProjectionMatrix = m_ProjectionMatrix * m_ViewMatrix;

}

void OrthographicCamera::SetProjection(float left, float right, float bottom, float top) {

m_ProjectionMatrix = glm::ortho(left, right, bottom, top);

m_ViewProjectionMatrix = m_ProjectionMatrix * m_ViewMatrix;

}

void OrthographicCamera::RecalculateViewMatrix()

{

glm::mat4 transform = glm::translate(glm::mat4(1.0f), m_Position) *

glm::rotate(glm::mat4(1.0f), glm::radians(m_Rotation), glm::vec3(0, 0, 1));

m_ViewMatrix = glm::inverse(transform);

m_ViewProjectionMatrix = m_ProjectionMatrix * m_ViewMatrix;

}

}

Wrapping the camera in a controller class:

OrthographicCameraController.h:

#pragma once

#include "YOTO/Renderer/OrthographicCamera.h"

#include"YOTO/Core/Timestep.h"

#include "YOTO/Event/ApplicationEvent.h"

#include "YOTO/Event/MouseEvent.h"

namespace YOTO {

class OrthographicCameraController {

public:

OrthographicCameraController(float aspectRatio, bool rotation=false );//0,1280

void OnUpdate(Timestep ts);

void OnEvent(Event& e);

OrthographicCamera& GetCamera() {

return m_Camera

;

}

const OrthographicCamera& GetCamera()const {

return m_Camera

;

}

private:

bool OnMouseScrolled(MouseScrolledEvent &e);

bool OnWindowResized(WindowResizeEvent& e);

private:

float m_AspectRatio;// aspect ratio (width / height)

float m_ZoomLevel = 1.0f;

OrthographicCamera m_Camera;

bool m_Rotation;

glm::vec3 m_CameraPosition = {0.0f,0.0f,0.0f};

float m_CameraRotation = 0.0f;

float m_CameraTranslationSpeed = 1.0f, m_CameraRotationSpeed = 180.0f;

};

}

OrthographicCameraController.cpp:

#include "ytpch.h"

#include "OrthographicCameraController.h"

#include"YOTO/Input.h"

#include <YOTO/KeyCode.h>

namespace YOTO {

OrthographicCameraController::OrthographicCameraController(float aspectRatio, bool rotation)

:m_AspectRatio(aspectRatio),m_Camera(-m_AspectRatio*m_ZoomLevel,m_AspectRatio*m_ZoomLevel,-m_ZoomLevel,m_ZoomLevel),m_Rotation(rotation)

{

}

void OrthographicCameraController::OnUpdate(Timestep ts)

{

if (Input::IsKeyPressed(YT_KEY_A)) {

m_CameraPosition.x -= m_CameraTranslationSpeed * ts;

}

else if (Input::IsKeyPressed(YT_KEY_D)) {

m_CameraPosition.x += m_CameraTranslationSpeed * ts;

}

if (Input::IsKeyPressed(YT_KEY_S)) {

m_CameraPosition.y -= m_CameraTranslationSpeed * ts;

}

else if (Input::IsKeyPressed(YT_KEY_W)) {

m_CameraPosition.y += m_CameraTranslationSpeed * ts;

}

if (m_Rotation) {

if (Input::IsKeyPressed(YT_KEY_Q)) {

m_CameraRotation += m_CameraRotationSpeed * ts;

}

else if (Input::IsKeyPressed(YT_KEY_E)) {

m_CameraRotation -= m_CameraRotationSpeed * ts;

}

m_Camera.SetRotation(m_CameraRotation);

}

m_Camera.SetPosition(m_CameraPosition);

m_CameraTranslationSpeed = m_ZoomLevel;

}

void OrthographicCameraController::OnEvent(Event& e)

{

EventDispatcher dispatcher(e);

dispatcher.Dispatch<MouseScrolledEvent>(YT_BIND_EVENT_FN(OrthographicCameraController::OnMouseScrolled));

dispatcher.Dispatch<WindowResizeEvent>(YT_BIND_EVENT_FN(OrthographicCameraController::OnWindowResized));

}

bool OrthographicCameraController::OnMouseScrolled(MouseScrolledEvent& e)

{

m_ZoomLevel -= e.GetYOffset()*0.5f;

m_ZoomLevel = std::max(m_ZoomLevel, 0.25f);

m_Camera.SetProjection(-m_AspectRatio * m_ZoomLevel, m_AspectRatio * m_ZoomLevel, -m_ZoomLevel, m_ZoomLevel);

return false;

}

bool OrthographicCameraController::OnWindowResized(WindowResizeEvent& e)

{

m_AspectRatio = (float)e.GetWidth()/(float) e.GetHeight();

m_Camera.SetProjection(-m_AspectRatio * m_ZoomLevel, m_AspectRatio * m_ZoomLevel, -m_ZoomLevel, m_ZoomLevel);

return false;

}

}
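The controller derives the orthographic bounds from the aspect ratio and the zoom level, and the scroll handler clamps the zoom so it never drops below 0.25. The arithmetic can be sketched on its own as follows. `OrthoBounds`, `BoundsFor` and `ZoomAfterScroll` are hypothetical helpers for illustration, not functions that exist in YOTO.

```cpp
#include <algorithm>

// Hypothetical helper type for illustration; not part of YOTO.
struct OrthoBounds {
    float left;
    float right;
    float bottom;
    float top;
};

// The visible half-height equals the zoom level; the half-width is
// scaled by the aspect ratio so that squares stay square on screen.
inline OrthoBounds BoundsFor(float aspectRatio, float zoomLevel) {
    return OrthoBounds{ -aspectRatio * zoomLevel, aspectRatio * zoomLevel,
                        -zoomLevel, zoomLevel };
}

// Mirrors the scroll handling above: a positive scroll offset zooms in,
// and the zoom level never drops below 0.25.
inline float ZoomAfterScroll(float zoomLevel, float yOffset) {
    return std::max(zoomLevel - yOffset * 0.5f, 0.25f);
}
```

Keeping the half-height equal to the zoom level means a window resize only changes how much is visible horizontally, while scrolling changes both axes together.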

SandboxApp.cpp:

#include<YOTO.h>

#include "imgui/imgui.h"

#include<stdio.h>

#include <glm/gtc/matrix_transform.hpp>

#include <Platform/OpenGL/OpenGLShader.h>

#include <glm/gtc/type_ptr.hpp>

class ExampleLayer:public YOTO::Layer

{

public:

ExampleLayer()

:Layer("Example"), m_CameraController(1280.0f/720.0f,true){

uint32_t indices[3] = { 0,1,2 };

float vertices[3 * 7] = {

-0.5f,-0.5f,0.0f, 0.8f,0.2f,0.8f,1.0f,

0.5f,-0.5f,0.0f, 0.2f,0.3f,0.8f,1.0f,

0.0f,0.5f,0.0f, 0.8f,0.8f,0.2f,1.0f,

};

m_VertexArray.reset(YOTO::VertexArray::Create());

YOTO::Ref<YOTO::VertexBuffer> m_VertexBuffer;

m_VertexBuffer.reset(YOTO::VertexBuffer::Create(vertices, sizeof(vertices)));

{

YOTO::BufferLayout setlayout = {

{YOTO::ShaderDataType::Float3,"a_Position"},

{YOTO::ShaderDataType::Float4,"a_Color"}

};

m_VertexBuffer->SetLayout(setlayout);

}

m_VertexArray->AddVertexBuffer(m_VertexBuffer);

YOTO::Ref<YOTO::IndexBuffer>m_IndexBuffer;

m_IndexBuffer.reset(YOTO::IndexBuffer::Create(indices, sizeof(indices) / sizeof(uint32_t)));

m_VertexArray->AddIndexBuffer(m_IndexBuffer);

std::string vertexSource = R"(

#version 330 core

layout(location = 0) in vec3 a_Position;

layout(location = 1) in vec4 a_Color;

uniform mat4 u_ViewProjection;

uniform mat4 u_Transform;

out vec3 v_Position;

out vec4 v_Color;

void main(){

v_Position=a_Position;

v_Color=a_Color;

gl_Position =u_ViewProjection *u_Transform* vec4( a_Position,1.0);

}

)";

// Fragment shader: output the color

std::string fragmentSource = R"(

#version 330 core

layout(location = 0) out vec4 color;

in vec3 v_Position;

in vec4 v_Color;

void main(){

color=vec4(v_Color);

}

)";

m_Shader=(YOTO::Shader::Create("VertexPosColor", vertexSource, fragmentSource));

// Test

m_SquareVA.reset(YOTO::VertexArray::Create());

float squareVertices[5 * 4] = {

-0.5f,-0.5f,0.0f, 0.0f,0.0f,

0.5f,-0.5f,0.0f, 1.0f,0.0f,

0.5f,0.5f,0.0f, 1.0f,1.0f,

-0.5f,0.5f,0.0f, 0.0f,1.0f,

};

YOTO::Ref<YOTO::VertexBuffer> squareVB;

squareVB.reset(YOTO::VertexBuffer::Create(squareVertices, sizeof(squareVertices)));

squareVB->SetLayout({

{YOTO::ShaderDataType::Float3,"a_Position"},

{YOTO::ShaderDataType::Float2,"a_TexCoord"}

});

m_SquareVA->AddVertexBuffer(squareVB);

uint32_t squareIndices[6] = { 0,1,2,2,3,0 };

YOTO::Ref<YOTO::IndexBuffer> squareIB;

squareIB.reset((YOTO::IndexBuffer::Create(squareIndices, sizeof(squareIndices) / sizeof(uint32_t))));

m_SquareVA->AddIndexBuffer(squareIB);

// Test:

std::string BlueShaderVertexSource = R"(

#version 330 core

layout(location = 0) in vec3 a_Position;

uniform mat4 u_ViewProjection;

uniform mat4 u_Transform;

out vec3 v_Position;

void main(){

v_Position=a_Position;

gl_Position =u_ViewProjection*u_Transform*vec4( a_Position,1.0);

}

)";

// Fragment shader: output the color

std::string BlueShaderFragmentSource = R"(

#version 330 core

layout(location = 0) out vec4 color;

in vec3 v_Position;

uniform vec3 u_Color;

void main(){

color=vec4(u_Color,1.0);

}

)";

m_BlueShader=(YOTO::Shader::Create("FlatColor", BlueShaderVertexSource, BlueShaderFragmentSource));

auto textureShader= m_ShaderLibrary.Load("assets/shaders/Texture.glsl");

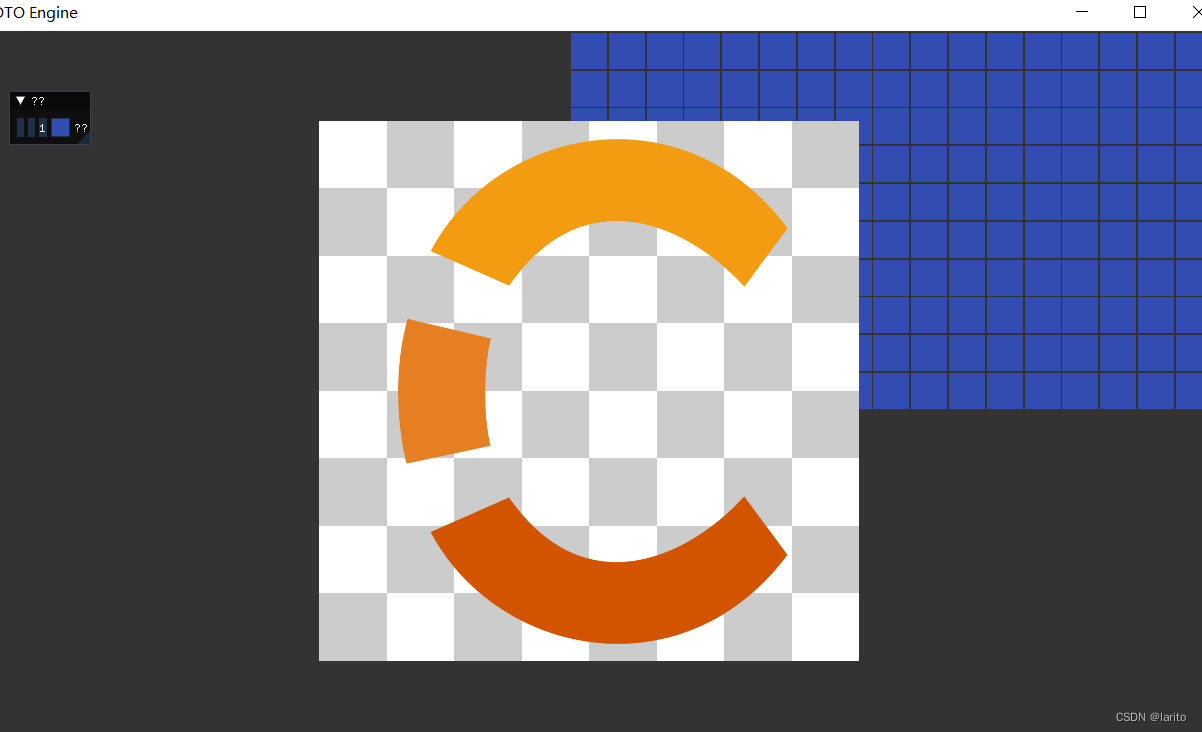

m_Texture=YOTO::Texture2D::Create("assets/textures/Checkerboard.png");

m_ChernoLogo= YOTO::Texture2D::Create("assets/textures/ChernoLogo.png");

std::dynamic_pointer_cast<YOTO::OpenGLShader>(textureShader)->Bind();

std::dynamic_pointer_cast<YOTO::OpenGLShader>(textureShader)->UploadUniformInt("u_Texture", 0);

}

void OnImGuiRender() override {

ImGui::Begin("设置");

ImGui::ColorEdit3("正方形颜色", glm::value_ptr(m_SquareColor));

ImGui::End();

}

void OnUpdate(YOTO::Timestep ts)override {

//update

m_CameraController.OnUpdate(ts);

//YT_CLIENT_TRACE("delta time {0}s ({1}ms)", ts.GetSeconds(), ts.GetMilliseconds());

//Render

YOTO::RenderCommand::SetClearColor({ 0.2f, 0.2f, 0.2f, 1.0f });

YOTO::RenderCommand::Clear();

YOTO::Renderer::BeginScene(m_CameraController.GetCamera());

{

static glm::mat4 scale = glm::scale(glm::mat4(1.0f), glm::vec3(0.1f));

glm::vec4 redColor(0.8f, 0.3f, 0.3f, 1.0f);

glm::vec4 blueColor(0.2f, 0.3f, 0.8f, 1.0f);

/* YOTO::MaterialRef material = new YOTO::MaterialRef(m_FlatColorShader);

YOTO::MaterialInstaceRef mi = new YOTO::MaterialInstaceRef(material);

mi.setValue("u_Color",redColor);

mi.setTexture("u_AlbedoMap", texture);

squreMesh->SetMaterial(mi);*/

std::dynamic_pointer_cast<YOTO::OpenGLShader>(m_BlueShader)->Bind();

std::dynamic_pointer_cast<YOTO::OpenGLShader>(m_BlueShader)->UploadUniformFloat3("u_Color",m_SquareColor);

for (int y = 0; y < 20; y++) {

for (int x = 0; x <20; x++)

{

glm::vec3 pos(x * 0.105f,y* 0.105f, 0.0);

glm::mat4 transform = glm::translate(glm::mat4(1.0f), pos) * scale;

/* if (x % 2 == 0) {

m_BlueShader->UploadUniformFloat4("u_Color", redColor);

}

else {

m_BlueShader->UploadUniformFloat4("u_Color", blueColor);

}*/

YOTO::Renderer::Submit(m_BlueShader, m_SquareVA, transform);

}

}

auto textureShader = m_ShaderLibrary.Get("Texture");

m_Texture->Bind();

YOTO::Renderer::Submit(textureShader, m_SquareVA, glm::scale(glm::mat4(1.0f), glm::vec3(1.5f)));

m_ChernoLogo->Bind();

YOTO::Renderer::Submit(textureShader, m_SquareVA, glm::scale(glm::mat4(1.0f), glm::vec3(1.5f)));

//YOTO::Renderer::Submit(m_Shader, m_VertexArray);

}

YOTO::Renderer::EndScene();

//YOTO::Renderer3D::BeginScene(m_Scene);

//YOTO::Renderer2D::BeginScene(m_Scene);

}

void OnEvent(YOTO::Event& e)override {

m_CameraController.OnEvent(e);

/*if (event.GetEventType() == YOTO::EventType::KeyPressed) {

YOTO:: KeyPressedEvent& e = (YOTO::KeyPressedEvent&)event;

YT_CLIENT_TRACE("ExampleLayer:{0}",(char)e.GetKeyCode());

if (e.GetKeyCode()==YT_KEY_TAB) {

YT_CLIENT_INFO("ExampleLayerOnEvent:TAB按下了");

}}*/

//YT_CLIENT_TRACE("SandBoxApp:测试event{0}", event);

}

private:

YOTO::ShaderLibrary m_ShaderLibrary;

YOTO::Ref<YOTO::Shader> m_Shader;

YOTO::Ref<YOTO::VertexArray> m_VertexArray;

YOTO::Ref<YOTO::Shader> m_BlueShader;

YOTO::Ref<YOTO::VertexArray> m_SquareVA;

YOTO::Ref<YOTO::Texture2D> m_Texture,m_ChernoLogo;

YOTO::OrthographicCameraController m_CameraController;

glm::vec3 m_SquareColor = { 0.2f,0.3f,0.7f };

};

class Sandbox:public YOTO::Application

{

public:

Sandbox(){

PushLayer(new ExampleLayer());

//PushLayer(new YOTO::ImGuiLayer());

}

~Sandbox() {

}

private:

};

YOTO::Application* YOTO::CreateApplication() {

printf("helloworld");

return new Sandbox();

}
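The nested loop in `OnUpdate` lays out a 20 x 20 grid of squares: each square is scaled to 0.1 and placed 0.105 apart, which leaves a thin gap between neighbours. The placement can be sketched without the renderer as below. `TilePos` and `GridPositions` are hypothetical names introduced only for this example.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical 2D point used only for this sketch.
struct TilePos {
    float x;
    float y;
};

// Computes the translation of every tile in a rows x cols grid,
// iterating row by row like the nested loop in OnUpdate.
inline std::vector<TilePos> GridPositions(int rows, int cols, float spacing) {
    std::vector<TilePos> positions;
    positions.reserve(static_cast<std::size_t>(rows) * static_cast<std::size_t>(cols));
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            positions.push_back(TilePos{ x * spacing, y * spacing });
        }
    }
    return positions;
}
```

In the listing above, each of these positions becomes a `glm::translate` matrix multiplied by the shared scale matrix before being passed to `Renderer::Submit`.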

Test:

cool

Changes:

Add a method for changing the viewport:

Renderer.h:

#pragma once

#include"RenderCommand.h"

#include "OrthographicCamera.h"

#include"Shader.h"

namespace YOTO {

class Renderer {

public:

static void Init();

static void OnWindowResize(uint32_t width,uint32_t height);

static void BeginScene(OrthographicCamera& camera);

static void EndScene();

static void Submit(const Ref<Shader>& shader, const Ref<VertexArray>& vertexArray,const glm::mat4&transform = glm::mat4(1.0f));

inline static RendererAPI::API GetAPI() {

return RendererAPI::GetAPI();

}

private:

struct SceneData {

glm::mat4 ViewProjectionMatrix;

};

static SceneData* m_SceneData;

};

}

Renderer.cpp:

#include"ytpch.h"

#include"Renderer.h"

#include <Platform/OpenGL/OpenGLShader.h>

namespace YOTO {

Renderer::SceneData* Renderer::m_SceneData = new Renderer::SceneData;

void Renderer::Init()

{

RenderCommand::Init();

}

void Renderer::OnWindowResize(uint32_t width, uint32_t height)

{

RenderCommand::SetViewport(0, 0, width, height);

}

void Renderer::BeginScene(OrthographicCamera& camera)

{

m_SceneData->ViewProjectionMatrix = camera.GetViewProjectionMatrix();

}

void Renderer::EndScene()

{

}

void Renderer::Submit(const Ref<Shader>& shader, const Ref<VertexArray>& vertexArray, const glm::mat4& transform)

{

shader->Bind();

std::dynamic_pointer_cast<OpenGLShader>(shader)->UploadUniformMat4("u_ViewProjection", m_SceneData->ViewProjectionMatrix);

std::dynamic_pointer_cast<OpenGLShader>(shader)->UploadUniformMat4("u_Transform", transform);

/* mi.Bind();*/

vertexArray->Bind();

RenderCommand::DrawIndexed(vertexArray);

}

}

RenderCommand.h:

#pragma once

#include"RendererAPI.h"

namespace YOTO {

class RenderCommand

{

public:

inline static void Init() {

s_RendererAPI->Init();

}

inline static void SetViewport(uint32_t x, uint32_t y, uint32_t width, uint32_t height) {

s_RendererAPI->SetViewport(x,y,width,height);

}

inline static void SetClearColor(const glm::vec4& color) {

s_RendererAPI->SetClearColor(color);

}

inline static void Clear() {

s_RendererAPI->Clear();

}

inline static void DrawIndexed(const Ref<VertexArray>& vertexArray) {

s_RendererAPI->DrawIndexed(vertexArray);

}

private:

static RendererAPI* s_RendererAPI;

};

}

RendererAPI.h:

#pragma once

#include<glm/glm.hpp>

#include "VertexArray.h"

namespace YOTO {

class RendererAPI

{

public:

enum class API {

None = 0,

OpenGL = 1

};

public:

virtual void Init() = 0;

virtual void SetClearColor(const glm::vec4& color)=0;

virtual void SetViewport(uint32_t x, uint32_t y, uint32_t width, uint32_t height) = 0;

virtual void Clear() = 0;

virtual void DrawIndexed(const Ref<VertexArray>& vertexArray)=0;

inline static API GetAPI() { return s_API; }

private:

static API s_API;

};

}

OpenGLRendererAPI.h:

#pragma once

#include"YOTO/Renderer/RendererAPI.h"

namespace YOTO {

class OpenGLRendererAPI:public RendererAPI

{

public:

virtual void Init()override;

virtual void SetViewport(uint32_t x, uint32_t y, uint32_t width, uint32_t height) override;

virtual void SetClearColor(const glm::vec4& color)override;

virtual void Clear()override;

virtual void DrawIndexed(const Ref<VertexArray>& vertexArray) override;

};

}
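`RenderCommand` forwards every call to whichever `RendererAPI` implementation is installed, and `OpenGLRendererAPI` is one such implementation. The sketch below shows the same pattern in isolation, reduced to the viewport call and using a fake backend that records its arguments instead of calling OpenGL. `ViewportAPI`, `RecordingAPI` and `ViewportCommand` are hypothetical names, and the `Install` function is an assumption of this example (the listings above do not show how `s_RendererAPI` is assigned).

```cpp
#include <cstdint>
#include <memory>
#include <utility>

// Simplified stand-in for the RendererAPI interface; only the viewport call is modeled.
class ViewportAPI {
public:
    virtual ~ViewportAPI() = default;
    virtual void SetViewport(std::uint32_t x, std::uint32_t y,
                             std::uint32_t width, std::uint32_t height) = 0;
};

// Hypothetical backend that records the last viewport instead of calling glViewport.
class RecordingAPI : public ViewportAPI {
public:
    void SetViewport(std::uint32_t x, std::uint32_t y,
                     std::uint32_t width, std::uint32_t height) override {
        lastX = x;
        lastY = y;
        lastWidth = width;
        lastHeight = height;
        ++callCount;
    }

    std::uint32_t lastX = 0, lastY = 0, lastWidth = 0, lastHeight = 0;
    int callCount = 0;
};

// Facade in the spirit of RenderCommand: callers never see the concrete backend.
class ViewportCommand {
public:
    // Assumption of this sketch: the backend is installed explicitly.
    static void Install(std::shared_ptr<ViewportAPI> api) { Backend() = std::move(api); }

    static void SetViewport(std::uint32_t x, std::uint32_t y,
                            std::uint32_t width, std::uint32_t height) {
        if (Backend()) {
            Backend()->SetViewport(x, y, width, height);
        }
    }

private:
    static std::shared_ptr<ViewportAPI>& Backend() {
        static std::shared_ptr<ViewportAPI> backend;
        return backend;
    }
};
```

Because callers only touch the facade, swapping OpenGL for another graphics API would in principle require a new backend class and nothing else.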

OpenGLRendererAPI.cpp:

#include "ytpch.h"

#include "OpenGLRendererAPI.h"

#include <glad/glad.h>

namespace YOTO {

void OpenGLRendererAPI::Init()

{

// Enable blending

glEnable(GL_BLEND);

// Set the blend function

glBlendFunc(GL_SRC_ALPHA,GL_ONE_MINUS_SRC_ALPHA);

}

void OpenGLRendererAPI::SetViewport(uint32_t x, uint32_t y, uint32_t width, uint32_t height)

{

glViewport(x, y, width, height);

}

void OpenGLRendererAPI::SetClearColor(const glm::vec4& color)

{

glClearColor(color.r, color.g, color.b, color.a);

}

void OpenGLRendererAPI::Clear()

{

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

}

void OpenGLRendererAPI::DrawIndexed(const Ref<VertexArray>& vertexArray)

{

glDrawElements(GL_TRIANGLES, vertexArray->GetIndexBuffer()->GetCount(), GL_UNSIGNED_INT, nullptr);

}

}

OrthographicCameraController.h:

#pragma once

#include "YOTO/Renderer/OrthographicCamera.h"

#include"YOTO/Core/Timestep.h"

#include "YOTO/Event/ApplicationEvent.h"

#include "YOTO/Event/MouseEvent.h"

namespace YOTO {

class OrthographicCameraController {

public:

OrthographicCameraController(float aspectRatio, bool rotation=false );//0,1280

void OnUpdate(Timestep ts);

void OnEvent(Event& e);

OrthographicCamera& GetCamera() {

return m_Camera

;

}

const OrthographicCamera& GetCamera()const {

return m_Camera

;

}

float GetZoomLevel() { return m_ZoomLevel; }

void SetZoomLevel(float level) { m_ZoomLevel = level; }

private:

bool OnMouseScrolled(MouseScrolledEvent &e);

bool OnWindowResized(WindowResizeEvent& e);

private:

float m_AspectRatio;// aspect ratio (width / height)

float m_ZoomLevel = 1.0f;

OrthographicCamera m_Camera;

bool m_Rotation;

glm::vec3 m_CameraPosition = {0.0f,0.0f,0.0f};

float m_CameraRotation = 0.0f;

float m_CameraTranslationSpeed = 1.0f, m_CameraRotationSpeed = 180.0f;

};

}

OrthographicCameraController.cpp:

#include "ytpch.h"

#include "OrthographicCameraController.h"

#include"YOTO/Input.h"

#include <YOTO/KeyCode.h>

namespace YOTO {

OrthographicCameraController::OrthographicCameraController(float aspectRatio, bool rotation)

:m_AspectRatio(aspectRatio),m_Camera(-m_AspectRatio*m_ZoomLevel,m_AspectRatio*m_ZoomLevel,-m_ZoomLevel,m_ZoomLevel),m_Rotation(rotation)

{

}

void OrthographicCameraController::OnUpdate(Timestep ts)

{

if (Input::IsKeyPressed(YT_KEY_A)) {

m_CameraPosition.x -= m_CameraTranslationSpeed * ts;

}

else if (Input::IsKeyPressed(YT_KEY_D)) {

m_CameraPosition.x += m_CameraTranslationSpeed * ts;

}

if (Input::IsKeyPressed(YT_KEY_S)) {

m_CameraPosition.y -= m_CameraTranslationSpeed * ts;

}

else if (Input::IsKeyPressed(YT_KEY_W)) {

m_CameraPosition.y += m_CameraTranslationSpeed * ts;

}

if (m_Rotation) {

if (Input::IsKeyPressed(YT_KEY_Q)) {

m_CameraRotation += m_CameraRotationSpeed * ts;

}

else if (Input::IsKeyPressed(YT_KEY_E)) {

m_CameraRotation -= m_CameraRotationSpeed * ts;

}

m_Camera.SetRotation(m_CameraRotation);

}

m_Camera.SetPosition(m_CameraPosition);

m_CameraTranslationSpeed = m_ZoomLevel;

}

void OrthographicCameraController::OnEvent(Event& e)

{

EventDispatcher dispatcher(e);

dispatcher.Dispatch<MouseScrolledEvent>(YT_BIND_EVENT_FN(OrthographicCameraController::OnMouseScrolled));

dispatcher.Dispatch<WindowResizeEvent>(YT_BIND_EVENT_FN(OrthographicCameraController::OnWindowResized));

}

bool OrthographicCameraController::OnMouseScrolled(MouseScrolledEvent& e)

{

m_ZoomLevel -= e.GetYOffset()*0.5f;

m_ZoomLevel = std::max(m_ZoomLevel, 0.25f);

m_Camera.SetProjection(-m_AspectRatio * m_ZoomLevel, m_AspectRatio * m_ZoomLevel, -m_ZoomLevel, m_ZoomLevel);

return false;

}

bool OrthographicCameraController::OnWindowResized(WindowResizeEvent& e)

{

m_AspectRatio = (float)e.GetWidth()/(float) e.GetHeight();

m_Camera.SetProjection(-m_AspectRatio * m_ZoomLevel, m_AspectRatio * m_ZoomLevel, -m_ZoomLevel, m_ZoomLevel);

return false;

}

}
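`OnUpdate` multiplies the movement speed by the timestep, so the camera covers the same distance per second regardless of frame rate, and it ties the translation speed to the zoom level so a zoomed-out camera pans faster. A minimal one-axis sketch of that idea follows. `CameraAxis` and `Advance` are hypothetical names, and the sketch reads the zoom level directly rather than caching it for the next frame as the controller does.

```cpp
// Hypothetical 1D camera state used only for this sketch.
struct CameraAxis {
    float position = 0.0f;
    float zoomLevel = 1.0f;
};

// Advances the position by speed * elapsed time. The translation speed is
// taken to equal the zoom level, so a zoomed-out camera pans faster.
// direction is -1, 0, or +1 depending on which key is held.
inline void Advance(CameraAxis& camera, int direction, float deltaSeconds) {
    const float speed = camera.zoomLevel;
    camera.position += static_cast<float>(direction) * speed * deltaSeconds;
}
```

Two updates of 0.25 s move the camera exactly as far as one update of 0.5 s, which is the property that makes the motion frame-rate independent.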

Application.h:

#pragma once

#include"Core.h"

#include"Event/Event.h"

#include"Event/ApplicationEvent.h"

#include "YOTO/Window.h"

#include"YOTO/LayerStack.h"

#include"YOTO/ImGui/ImGuiLayer.h"

#include "YOTO/Core/Timestep.h"

#include "YOTO/Renderer/OrthographicCamera.h"

namespace YOTO {

class YOTO_API Application

{

public:

Application();

virtual ~Application();

void Run();

void OnEvent(Event& e);

void PushLayer(Layer* layer);

void PushOverlay(Layer* layer);

inline static Application& Get() { return *s_Instance; }

inline Window& GetWindow() { return *m_Window; }

private:

bool OnWindowClosed(WindowCloseEvent& e);

bool OnWindowResize(WindowResizeEvent& e);

private:

std::unique_ptr<Window> m_Window;

ImGuiLayer* m_ImGuiLayer;

bool m_Running = true;

bool m_Minimized = false;

LayerStack m_LayerStack;

Timestep m_Timestep;

float m_LastFrameTime = 0.0f;

private:

static Application* s_Instance;

};

// Defined by the client application

Application* CreateApplication();

}
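`Application::OnEvent`, declared above, hands each event to the layer stack from the topmost layer downwards and stops as soon as a layer marks the event as handled. The traversal can be sketched in isolation like this. `SimpleEvent`, `SimpleLayer` and `Propagate` are hypothetical simplifications; YOTO's real `Event` and `Layer` classes are richer.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical minimal event type; only the handled flag is modeled.
struct SimpleEvent {
    bool handled = false;
};

// Hypothetical minimal layer: a name plus an event callback.
struct SimpleLayer {
    std::string name;
    std::function<void(SimpleEvent&)> onEvent;
};

// Delivers the event from the last-pushed layer down to the first, stopping
// once a layer marks it handled. Returns the names of the layers that saw it.
inline std::vector<std::string> Propagate(std::vector<SimpleLayer>& stack, SimpleEvent& event) {
    std::vector<std::string> visited;
    for (auto it = stack.rbegin(); it != stack.rend(); ++it) {
        it->onEvent(event);
        visited.push_back(it->name);
        if (event.handled) {
            break;
        }
    }
    return visited;
}
```

Walking the stack in reverse lets overlays such as the ImGui layer consume input before the layers underneath ever see it.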

Application.cpp:

#include"ytpch.h"

#include "Application.h"

#include"Log.h"

#include "YOTO/Renderer/Renderer.h"

#include"Input.h"

#include <GLFW/glfw3.h>

namespace YOTO {

#define BIND_EVENT_FN(x) std::bind(&x, this, std::placeholders::_1)

Application* Application::s_Instance = nullptr;

Application::Application()

{

YT_CORE_ASSERT(!s_Instance, "Application需要为空!")

s_Instance = this;

// Smart pointer that owns the window

m_Window = std::unique_ptr<Window>(Window::Creat());

// Set the event callback

m_Window->SetEventCallback(BIND_EVENT_FN(Application::OnEvent));

m_Window->SetVSync(false);

Renderer::Init();

// Create the ImGui layer and push it as an overlay so it is rendered last

m_ImGuiLayer = new ImGuiLayer();

PushOverlay(m_ImGuiLayer);

}

Application::~Application() {

}

/// <summary>

/// All window events are triggered here and arrive as the parameter e

/// </summary>

/// <param name="e"></param>

void Application::OnEvent(Event& e) {

// Dispatch to the matching handler based on the event type

EventDispatcher dispatcher(e);

dispatcher.Dispatch<WindowCloseEvent>(BIND_EVENT_FN(Application::OnWindowClosed));

dispatcher.Dispatch<WindowResizeEvent>(BIND_EVENT_FN(Application::OnWindowResize));

// Log the event

YT_CORE_INFO("Application:{0}", e);

for (auto it = m_LayerStack.end(); it != m_LayerStack.begin();) {

(*--it)->OnEvent(e);

if (e.m_Handled)

break;

}

}

bool Application::OnWindowClosed(WindowCloseEvent& e) {

m_Running = false;

return true;

}

bool Application::OnWindowResize(WindowResizeEvent& e)

{

if (e.GetWidth()==0||e.GetHeight()==0) {

m_Minimized = true;

return false;

}

m_Minimized = false;

// Resize the viewport

Renderer::OnWindowResize(e.GetWidth(), e.GetHeight());

return false;

}

void Application::Run() {

WindowResizeEvent e(1280, 720);

if (e.IsInCategory(EventCategoryApplication)) {

YT_CORE_TRACE(e);

}

if (e.IsInCategory(EventCategoryInput)) {

YT_CORE_ERROR(e);

}

while (m_Running)

{

float time = (float)glfwGetTime();// platform-specific (Windows)

Timestep timestep = time - m_LastFrameTime;

m_LastFrameTime = time;

if (!m_Minimized) {

for (Layer* layer : m_LayerStack) {

layer->OnUpdate(timestep);

}

}

// ImGui rendering is driven from the application, separately from OnUpdate

m_ImGuiLayer->Begin();

for (Layer* layer : m_LayerStack) {

layer->OnImGuiRender();

}

m_ImGuiLayer->End();

m_Window->OnUpdate();

}

}

void Application::PushLayer(Layer* layer) {

m_LayerStack.PushLayer(layer);

layer->OnAttach();

}

void Application::PushOverlay(Layer* layer) {

m_LayerStack.PushOverlay(layer);

layer->OnAttach();

}

}

SandboxApp.cpp:

#include<YOTO.h>

#include "imgui/imgui.h"

#include<stdio.h>

#include <glm/gtc/matrix_transform.hpp>

#include <Platform/OpenGL/OpenGLShader.h>

#include <glm/gtc/type_ptr.hpp>

class ExampleLayer:public YOTO::Layer

{

public:

ExampleLayer()

:Layer("Example"), m_CameraController(1280.0f/720.0f,true){

uint32_t indices[3] = { 0,1,2 };

float vertices[3 * 7] = {

-0.5f,-0.5f,0.0f, 0.8f,0.2f,0.8f,1.0f,

0.5f,-0.5f,0.0f, 0.2f,0.3f,0.8f,1.0f,

0.0f,0.5f,0.0f, 0.8f,0.8f,0.2f,1.0f,

};

m_VertexArray.reset(YOTO::VertexArray::Create());

YOTO::Ref<YOTO::VertexBuffer> m_VertexBuffer;

m_VertexBuffer.reset(YOTO::VertexBuffer::Create(vertices, sizeof(vertices)));

{

YOTO::BufferLayout setlayout = {

{YOTO::ShaderDataType::Float3,"a_Position"},

{YOTO::ShaderDataType::Float4,"a_Color"}

};

m_VertexBuffer->SetLayout(setlayout);

}

m_VertexArray->AddVertexBuffer(m_VertexBuffer);

YOTO::Ref<YOTO::IndexBuffer>m_IndexBuffer;

m_IndexBuffer.reset(YOTO::IndexBuffer::Create(indices, sizeof(indices) / sizeof(uint32_t)));

m_VertexArray->AddIndexBuffer(m_IndexBuffer);

std::string vertexSource = R"(

#version 330 core

layout(location = 0) in vec3 a_Position;

layout(location = 1) in vec4 a_Color;

uniform mat4 u_ViewProjection;

uniform mat4 u_Transform;

out vec3 v_Position;

out vec4 v_Color;

void main(){

v_Position=a_Position;

v_Color=a_Color;

gl_Position =u_ViewProjection *u_Transform* vec4( a_Position,1.0);

}

)";

// Fragment shader: output the color

std::string fragmentSource = R"(

#version 330 core

layout(location = 0) out vec4 color;

in vec3 v_Position;

in vec4 v_Color;

void main(){

color=vec4(v_Color);

}

)";

m_Shader=(YOTO::Shader::Create("VertexPosColor", vertexSource, fragmentSource));

// Test

m_SquareVA.reset(YOTO::VertexArray::Create());

float squareVertices[5 * 4] = {

-0.5f,-0.5f,0.0f, 0.0f,0.0f,

0.5f,-0.5f,0.0f, 1.0f,0.0f,

0.5f,0.5f,0.0f, 1.0f,1.0f,

-0.5f,0.5f,0.0f, 0.0f,1.0f,

};

YOTO::Ref<YOTO::VertexBuffer> squareVB;

squareVB.reset(YOTO::VertexBuffer::Create(squareVertices, sizeof(squareVertices)));

squareVB->SetLayout({

{YOTO::ShaderDataType::Float3,"a_Position"},

{YOTO::ShaderDataType::Float2,"a_TexCoord"}

});

m_SquareVA->AddVertexBuffer(squareVB);

uint32_t squareIndices[6] = { 0,1,2,2,3,0 };

YOTO::Ref<YOTO::IndexBuffer> squareIB;

squareIB.reset((YOTO::IndexBuffer::Create(squareIndices, sizeof(squareIndices) / sizeof(uint32_t))));

m_SquareVA->AddIndexBuffer(squareIB);

// Test:

std::string BlueShaderVertexSource = R"(

#version 330 core

layout(location = 0) in vec3 a_Position;

uniform mat4 u_ViewProjection;

uniform mat4 u_Transform;

out vec3 v_Position;

void main(){

v_Position=a_Position;

gl_Position =u_ViewProjection*u_Transform*vec4( a_Position,1.0);

}

)";

// Fragment shader: output the color

std::string BlueShaderFragmentSource = R"(

#version 330 core

layout(location = 0) out vec4 color;

in vec3 v_Position;

uniform vec3 u_Color;

void main(){

color=vec4(u_Color,1.0);

}

)";

m_BlueShader=(YOTO::Shader::Create("FlatColor", BlueShaderVertexSource, BlueShaderFragmentSource));

auto textureShader= m_ShaderLibrary.Load("assets/shaders/Texture.glsl");

m_Texture=YOTO::Texture2D::Create("assets/textures/Checkerboard.png");

m_ChernoLogo= YOTO::Texture2D::Create("assets/textures/ChernoLogo.png");

std::dynamic_pointer_cast<YOTO::OpenGLShader>(textureShader)->Bind();

std::dynamic_pointer_cast<YOTO::OpenGLShader>(textureShader)->UploadUniformInt("u_Texture", 0);

}

void OnImGuiRender() override {

ImGui::Begin("设置");

ImGui::ColorEdit3("正方形颜色", glm::value_ptr(m_SquareColor));

ImGui::End();

}

void OnUpdate(YOTO::Timestep ts)override {

//update

m_CameraController.OnUpdate(ts);

//YT_CLIENT_TRACE("delta time {0}s ({1}ms)", ts.GetSeconds(), ts.GetMilliseconds());

//Render

YOTO::RenderCommand::SetClearColor({ 0.2f, 0.2f, 0.2f, 1.0f });

YOTO::RenderCommand::Clear();

YOTO::Renderer::BeginScene(m_CameraController.GetCamera());

{

static glm::mat4 scale = glm::scale(glm::mat4(1.0f), glm::vec3(0.1f));

glm::vec4 redColor(0.8f, 0.3f, 0.3f, 1.0f);

glm::vec4 blueColor(0.2f, 0.3f, 0.8f, 1.0f);

/* YOTO::MaterialRef material = new YOTO::MaterialRef(m_FlatColorShader);

YOTO::MaterialInstaceRef mi = new YOTO::MaterialInstaceRef(material);

mi.setValue("u_Color",redColor);

mi.setTexture("u_AlbedoMap", texture);

squreMesh->SetMaterial(mi);*/

std::dynamic_pointer_cast<YOTO::OpenGLShader>(m_BlueShader)->Bind();

std::dynamic_pointer_cast<YOTO::OpenGLShader>(m_BlueShader)->UploadUniformFloat3("u_Color",m_SquareColor);

for (int y = 0; y < 20; y++) {

for (int x = 0; x <20; x++)

{

glm::vec3 pos(x * 0.105f,y* 0.105f, 0.0);

glm::mat4 transform = glm::translate(glm::mat4(1.0f), pos) * scale;

/* if (x % 2 == 0) {

m_BlueShader->UploadUniformFloat4("u_Color", redColor);

}

else {

m_BlueShader->UploadUniformFloat4("u_Color", blueColor);

}*/

YOTO::Renderer::Submit(m_BlueShader, m_SquareVA, transform);

}

}

auto textureShader = m_ShaderLibrary.Get("Texture");

m_Texture->Bind();

YOTO::Renderer::Submit(textureShader, m_SquareVA, glm::scale(glm::mat4(1.0f), glm::vec3(1.5f)));

m_ChernoLogo->Bind();

YOTO::Renderer::Submit(textureShader, m_SquareVA, glm::scale(glm::mat4(1.0f), glm::vec3(1.5f)));

//YOTO::Renderer::Submit(m_Shader, m_VertexArray);

}

YOTO::Renderer::EndScene();

//YOTO::Renderer3D::BeginScene(m_Scene);

//YOTO::Renderer2D::BeginScene(m_Scene);

}

void OnEvent(YOTO::Event& e)override {

m_CameraController.OnEvent(e);

if (e.GetEventType() == YOTO::EventType::WindowResize) {

auto& re = (YOTO::WindowResizeEvent&)e;

/* float zoom = re.GetWidth() / 1280.0f;

m_CameraController.SetZoomLevel(zoom);*/

}

}

private:

YOTO::ShaderLibrary m_ShaderLibrary;

YOTO::Ref<YOTO::Shader> m_Shader;

YOTO::Ref<YOTO::VertexArray> m_VertexArray;

YOTO::Ref<YOTO::Shader> m_BlueShader;

YOTO::Ref<YOTO::VertexArray> m_SquareVA;

YOTO::Ref<YOTO::Texture2D> m_Texture,m_ChernoLogo;

YOTO::OrthographicCameraController m_CameraController;

glm::vec3 m_SquareColor = { 0.2f,0.3f,0.7f };

};

class Sandbox:public YOTO::Application

{

public:

Sandbox(){

PushLayer(new ExampleLayer());

//PushLayer(new YOTO::ImGuiLayer());

}

~Sandbox() {

}

private:

};

YOTO::Application* YOTO::CreateApplication() {

printf("helloworld");

return new Sandbox();

}

This adds the new feature: window resizing is now handled in OnEvent.
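One detail worth keeping in mind: `Application::OnWindowResize` returns false for a zero-sized window, so the event still appears to reach the layers, and the controller's `OnWindowResized` then divides the width by a height of 0. A guard along the following lines would avoid that. This is only a sketch under that reading of the listings above; `ResizeState` and `OnResize` are hypothetical names, not part of YOTO.

```cpp
#include <cstdint>

// Hypothetical state holder for illustration; not part of YOTO.
struct ResizeState {
    float aspectRatio = 16.0f / 9.0f;
    bool minimized = false;
};

// Updates the aspect ratio from a new window size. A zero width or height
// (for example when the window is minimized) is flagged and leaves the
// previous aspect ratio untouched, which avoids a division by zero.
inline void OnResize(ResizeState& state, std::uint32_t width, std::uint32_t height) {
    if (width == 0 || height == 0) {
        state.minimized = true;
        return;
    }
    state.minimized = false;
    state.aspectRatio = static_cast<float>(width) / static_cast<float>(height);
}
```

With a guard like this, restoring the window simply resumes from the last valid aspect ratio until the next real resize event arrives.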