Domain-Specific Paper Subscription

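Categories:

- Large language models (LLM)

- Vision models (VLM)

- Diffusion models

- Vision-language navigation (VLN)

- Reinforcement learning (RL)

- Imitation learning (IL)

- Robotics

- Open-vocabulary detection and segmentation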

== RLHF ==

Title: Curriculum-Based Reinforcement Learning for Quadrupedal Jumping: A Reference-free Design

Authors: Vassil Atanassov, Jiatao Ding, Jens Kober

PubTime: 2024-01-29

Downlink: http://arxiv.org/abs/2401.16337v1

Project: https://youtu.be/nRaMCrwU5X8

Abstract: Deep reinforcement learning (DRL) has emerged as a promising solution to mastering explosive and versatile quadrupedal jumping skills. However, current DRL-based frameworks usually rely on well-defined reference trajectories, which are obtained by capturing animal motions or transferring experience from existing controllers. This work explores the possibility of learning dynamic jumping without imitating a reference trajectory. To this end, we incorporate a curriculum design into DRL so as to accomplish challenging tasks progressively. Starting from a vertical in-place jump, we then generalize the learned policy to forward and diagonal jumps and, finally, learn to jump across obstacles. Conditioned on the desired landing location, orientation, and obstacle dimensions, the proposed approach contributes to a wide range of jumping motions, including omnidirectional jumping and robust jumping, alleviating the effort to extract references in advance. Particularly, without constraints from the reference motion, a 90cm forward jump is achieved, exceeding previous records for similar robots reported in the existing literature. Additionally, continuous jumping on the soft grassy floor is accomplished, even when it is not encountered in the training stage. A supplementary video showing our results can be found at https://youtu.be/nRaMCrwU5X8.
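The staged progression described in this abstract (vertical in-place jump, then forward and diagonal jumps, then obstacles) can be pictured with a small scheduler. This is only a sketch under assumptions: the stage names, the success-rate threshold, and the window size are hypothetical and are not taken from the paper, which may advance its curriculum by a different rule.

```python
from dataclasses import dataclass, field

# Hypothetical stage names that mirror the order given in the abstract.
STAGES = ("vertical_in_place", "forward_and_diagonal", "over_obstacle")


@dataclass
class Curriculum:
    threshold: float = 0.8  # assumed success rate required to advance
    window: int = 20        # assumed number of recent episodes to average
    stage: int = 0          # index into STAGES
    recent: list = field(default_factory=list)

    def record(self, success: bool) -> str:
        """Log one episode outcome and return the stage for the next episode."""
        # Keep only the most recent `window` outcomes.
        self.recent = (self.recent + [1.0 if success else 0.0])[-self.window:]
        window_full = len(self.recent) == self.window
        mastered = window_full and sum(self.recent) / self.window >= self.threshold
        if mastered and self.stage < len(STAGES) - 1:
            self.stage += 1   # move on to the harder task
            self.recent = []  # start measuring the new stage from scratch
        return STAGES[self.stage]
```

In a training loop, `record` would be called once per episode, and its return value would select which jumping task the environment samples next.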

Title: SPRINT: Scalable Policy Pre-Training via Language Instruction Relabeling

Authors: Jesse Zhang, Karl Pertsch, Jiahui Zhang

PubTime: 2024-01-29

Downlink: http://arxiv.org/abs/2306.11886v3

Project: https://clvrai.com/sprint

Abstract: Pre-training robot policies with a rich set of skills can substantially accelerate the learning of downstream tasks. Prior works have defined pre-training tasks via natural language instructions, but doing so requires tedious human annotation of hundreds of thousands of instructions. Thus, we propose SPRINT, a scalable offline policy pre-training approach which substantially reduces the human effort needed for pre-training a diverse set of skills. Our method uses two core ideas to automatically expand a base set of pre-training tasks: instruction relabeling via large language models and cross-trajectory skill chaining through offline reinforcement learning. As a result, SPRINT pre-training equips robots with a much richer repertoire of skills. Experimental results in a household simulator and on a real robot kitchen manipulation task show that SPRINT leads to substantially faster learning of new long-horizon tasks than previous pre-training approaches. Website at https://clvrai.com/sprint.

Title: Enhancing End-to-End Multi-Task Dialogue Systems: A Study on Intrinsic Motivation Reinforcement Learning Algorithms for Improved Training and Adaptability

Authors: Navin Kamuni, Hardik Shah, Sathishkumar Chintala

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.18040v1

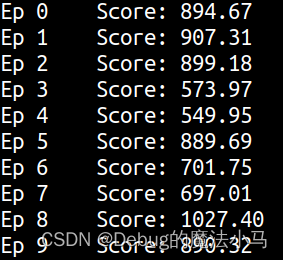

Abstract: End-to-end multi-task dialogue systems are usually designed with separate modules for the dialogue pipeline. Among these, the policy module is essential for deciding what to do in response to user input. This policy is trained by reinforcement learning algorithms by taking advantage of an environment in which an agent receives feedback in the form of a reward signal. The current dialogue systems, however, only provide meagre and simplistic rewards. Investigating intrinsic motivation reinforcement learning algorithms is the goal of this study. Through this, the agent can quickly accelerate training and improve its capacity to judge the quality of its actions by teaching it an internal incentive system. In particular, we adapt techniques for random network distillation and curiosity-driven reinforcement learning to measure the frequency of state visits and encourage exploration by using semantic similarity between utterances. Experimental results on MultiWOZ, a heterogeneous dataset, show that intrinsic motivation-based dialogue systems outperform policies that depend on extrinsic incentives. By adopting random network distillation, for example, which is trained using semantic similarity between user-system dialogues, an astounding average success rate of 73% is achieved. This is a significant improvement over the baseline Proximal Policy Optimization (PPO), which has an average success rate of 60%. In addition, performance indicators such as booking rates and completion rates show a 10% rise over the baseline. Furthermore, these intrinsic incentive models help improve the resilience of the system’s policy in an increasing number of domains. This implies that they could be useful in scaling up to settings that cover a wider range of domains.
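Random network distillation, mentioned in this abstract, scores a state by how badly a trainable predictor matches a fixed random target network, so the bonus shrinks as a state is revisited. The sketch below shows that mechanism in plain Python. It makes several assumptions: the states are generic feature vectors rather than the utterance embeddings the paper describes, both networks are single linear layers, and the class name `RND` and its parameters are invented for illustration.

```python
import math
import random


class RND:
    """Minimal random network distillation bonus (illustrative only)."""

    def __init__(self, dim_in, dim_out=8, lr=0.5, seed=0):
        rng = random.Random(seed)
        # Fixed, randomly initialised target network (never trained).
        self.target = [[rng.gauss(0, 1) for _ in range(dim_in)] for _ in range(dim_out)]
        # Trainable predictor, starts at zero.
        self.pred = [[0.0] * dim_in for _ in range(dim_out)]
        self.lr = lr

    def _target(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in self.target]

    def _predict(self, x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.pred]

    def reward_and_update(self, x):
        """Return the intrinsic reward for state x, then train the predictor on x."""
        err = [p - t for p, t in zip(self._predict(x), self._target(x))]
        reward = sum(e * e for e in err) / len(err)  # mean squared prediction error
        for row, e in zip(self.pred, err):
            for j, xj in enumerate(x):
                # Gradient step on the mean squared error.
                row[j] -= self.lr * 2.0 * e * xj / len(err)
        return reward
```

A state that is visited repeatedly yields a steadily smaller bonus, while an unseen state still yields a large one. That difference is the signal the agent can use for exploration when the extrinsic reward is sparse.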

Title: Causal Coordinated Concurrent Reinforcement Learning

Authors: Tim Tse, Isaac Chan, Zhitang Chen

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.18012v1

Abstract: In this work, we propose a novel algorithmic framework for data sharing and coordinated exploration for the purpose of learning more data-efficient and better performing policies under a concurrent reinforcement learning (CRL) setting. In contrast to other work which makes the assumption that all agents act under identical environments, we relax this restriction and instead consider the formulation where each agent acts within an environment which shares a global structure but also exhibits individual variations. Our algorithm leverages a causal inference algorithm in the form of Additive Noise Model - Mixture Model (ANM-MM) in extracting model parameters governing individual differentials via independence enforcement. We propose a new data sharing scheme based on a similarity measure of the extracted model parameters and demonstrate superior learning speeds on a set of autoregressive, pendulum and cart-pole swing-up tasks. Finally, we show the effectiveness of diverse action selection between common agents under a sparse reward setting. To the best of our knowledge, this is the first work in considering non-identical environments in CRL and one of the few works which seek to integrate causal inference with reinforcement learning (RL).

Title: Circuit Partitioning for Multi-Core Quantum Architectures with Deep Reinforcement Learning

Authors: Arnau Pastor, Pau Escofet, Sahar Ben Rached

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17976v1

Abstract: Quantum computing holds immense potential for solving classically intractable problems by leveraging the unique properties of quantum mechanics. The scalability of quantum architectures remains a significant challenge. Multi-core quantum architectures are proposed to solve the scalability problem, raising a new set of challenges in hardware, communications and compilation, among others. One of these challenges is to adapt a quantum algorithm to fit within the different cores of the quantum computer. This paper presents a novel approach for circuit partitioning using Deep Reinforcement Learning, contributing to the advancement of both quantum computing and graph partitioning. This work is the first step in integrating Deep Reinforcement Learning techniques into Quantum Circuit Mapping, opening the door to a new paradigm of solutions to such problems.

Title: Attention Graph for Multi-Robot Social Navigation with Deep Reinforcement Learning

Authors: Erwan Escudie, Laetitia Matignon, Jacques Saraydaryan

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17914v1

Abstract: Learning robot navigation strategies among pedestrians is crucial for domain-based applications. Combining perception, planning and prediction allows us to model the interactions between robots and pedestrians, resulting in impressive outcomes especially with recent approaches based on deep reinforcement learning (RL). However, these works do not consider multi-robot scenarios. In this paper, we present MultiSoc, a new method for learning multi-agent socially aware navigation strategies using RL. Inspired by recent works on multi-agent deep RL, our method leverages graph-based representation of agent interactions, combining the positions and fields of view of entities (pedestrians and agents). Each agent uses a model based on two Graph Neural Networks combined with attention mechanisms. First, an edge-selector produces a sparse graph, then a crowd coordinator applies node attention to produce a graph representing the influence of each entity on the others. This is incorporated into a model-free RL framework to learn multi-agent policies. We evaluate our approach in simulation and provide a series of experiments in a set of various conditions (number of agents / pedestrians). Empirical results show that our method learns faster than social navigation deep RL mono-agent techniques, and enables efficient multi-agent implicit coordination in challenging crowd navigation with multiple heterogeneous humans. Furthermore, by incorporating customizable meta-parameters, we can adjust the neighborhood density taken into account in our navigation strategy.

== Imitation Learning ==

Title: LeTO: Learning Constrained Visuomotor Policy with Differentiable Trajectory Optimization

Authors: Zhengtong Xu, Yu She

PubTime: 2024-01-30

Downlink: http://arxiv.org/abs/2401.17500v1

GitHub: https://github.com/ZhengtongXu/LeTO

Abstract: This paper introduces LeTO, a method for learning constrained visuomotor policy via differentiable trajectory optimization. Our approach uniquely integrates a differentiable optimization layer into the neural network. By formulating the optimization layer as a trajectory optimization problem, we enable the model to end-to-end generate actions in a safe and controlled fashion without extra modules. Our method allows for the introduction of constraint information during the training process, thereby balancing the training objectives of satisfying constraints, smoothing the trajectories, and minimizing errors with demonstrations. This “gray box” method marries the optimization-based safety and interpretability with the powerful representational abilities of neural networks. We quantitatively evaluate LeTO in simulation and on the real robot. In simulation, LeTO achieves a success rate comparable to state-of-the-art imitation learning methods, but the generated trajectories are of less uncertainty, higher quality, and smoother. In real-world experiments, we deployed LeTO to handle constraints-critical tasks. The results show the effectiveness of LeTO compared with state-of-the-art imitation learning approaches. We release our code at https://github.com/ZhengtongXu/LeTO.

Title: R$\times$R: Rapid eXploration for Reinforcement Learning via Sampling-based Reset Distributions and Imitation Pre-training

Authors: Gagan Khandate, Tristan L. Saidi, Siqi Shang

PubTime: 2024-01-27

Downlink: http://arxiv.org/abs/2401.15484v1

Project: https://sbrl.cs.columbia.edu

Abstract: We present a method for enabling Reinforcement Learning of motor control policies for complex skills such as dexterous manipulation. We posit that a key difficulty for training such policies is the difficulty of exploring the problem state space, as the accessible and useful regions of this space form a complex structure along manifolds of the original high-dimensional state space. This work presents a method to enable and support exploration with Sampling-based Planning. We use a generally applicable non-holonomic Rapidly-exploring Random Trees algorithm and present multiple methods to use the resulting structure to bootstrap model-free Reinforcement Learning. Our method is effective at learning various challenging dexterous motor control skills of higher difficulty than previously shown. In particular, we achieve dexterous in-hand manipulation of complex objects while simultaneously securing the object without the use of passive support surfaces. These policies also transfer effectively to real robots. A number of example videos can also be found on the project website: https://sbrl.cs.columbia.edu

Title: Cognitive TransFuser: Semantics-guided Transformer-based Sensor Fusion for Improved Waypoint Prediction

Authors: Hwan-Soo Choi, Jongoh Jeong, Young Hoo Cho

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2308.02126v2

Abstract: Sensor fusion approaches for intelligent self-driving agents remain key to driving scene understanding given visual global contexts acquired from input sensors. Specifically, for the local waypoint prediction task, single-modality networks are still limited by strong dependency on the sensitivity of the input sensor, and thus recent works promote the use of multiple sensors with feature-level fusion in practice. While it is well known that multiple data modalities encourage mutual contextual exchange, it requires global 3D scene understanding in real-time with minimal computation upon deployment to practical driving scenarios, thereby placing greater significance on the training strategy given a limited number of practically usable sensors. In this light, we exploit carefully selected auxiliary tasks that are highly correlated with the target task of interest (e.g., traffic light recognition and semantic segmentation) by fusing auxiliary task features and also using auxiliary heads for waypoint prediction based on imitation learning. Our RGB-LIDAR-based multi-task feature fusion network, coined Cognitive TransFuser, augments and exceeds the baseline network by a significant margin for safer and more complete road navigation in the CARLA simulator. We validate the proposed network on the Town05 Short and Town05 Long Benchmark through extensive experiments, achieving up to 44.2 FPS real-time inference time.

Title: Bi-ACT: Bilateral Control-Based Imitation Learning via Action Chunking with Transformer

Authors: Thanpimon Buamanee, Masato Kobayashi, Yuki Uranishi

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17698v1

Abstract: Autonomous manipulation in robot arms is a complex and evolving field of study in robotics. This work stands at the intersection of two innovative approaches in the field of robotics and machine learning. Inspired by the Action Chunking with Transformer (ACT) model, which employs joint location and image data to predict future movements, our work integrates principles of Bilateral Control-Based Imitation Learning to enhance robotic control. Our objective is to synergize these techniques, thereby creating a more robust and efficient control mechanism. In our approach, the data collected from the environment are images from the gripper and overhead cameras, along with the joint angles, angular velocities, and forces of the follower robot using bilateral control. The model is designed to predict the subsequent steps for the joint angles, angular velocities, and forces of the leader robot. This predictive capability is crucial for implementing effective bilateral control in the follower robot, allowing for more nuanced and responsive maneuvering.

Title: Zero-shot Imitation Policy via Search in Demonstration Dataset

Authors: Federico Malato, Florian Leopold, Andrew Melnik

PubTime: 2024-01-29

Downlink: http://arxiv.org/abs/2401.16398v1

Abstract: Behavioral cloning uses a dataset of demonstrations to learn a policy. To overcome computationally expensive training procedures and address the policy adaptation problem, we propose to use latent spaces of pre-trained foundation models to index a demonstration dataset, instantly access similar relevant experiences, and copy behavior from these situations. Actions from a selected similar situation can be performed by the agent until representations of the agent’s current situation and the selected experience diverge in the latent space. Thus, we formulate our control problem as a dynamic search problem over a dataset of experts’ demonstrations. We test our approach on the BASALT MineRL dataset in the latent representation of a Video Pre-Training model. We compare our model to state-of-the-art, Imitation Learning-based Minecraft agents. Our approach can effectively recover meaningful demonstrations and show human-like behavior of an agent in the Minecraft environment in a wide variety of scenarios. Experimental results reveal that our search-based approach clearly outperforms learning-based models in terms of accuracy and perceptual evaluation.
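The control loop described in this abstract (find the most similar demonstrated situation, copy its actions, search again once the latents diverge) can be sketched as follows. Everything concrete here is an assumption made for illustration: latents are short tuples compared by Euclidean distance, the divergence test is a fixed threshold, and the class name `SearchPolicy` is hypothetical. The paper itself indexes the dataset with latents from a Video Pre-Training model.

```python
import math


class SearchPolicy:
    """Copy actions from the nearest demonstration until the latents diverge."""

    def __init__(self, demos, max_divergence):
        # demos: list of trajectories, each a list of (latent, action) pairs.
        self.demos = demos
        self.max_divergence = max_divergence  # assumed divergence threshold
        self.cursor = None  # (trajectory index, step index) being copied

    def _search(self, latent):
        # Exhaustive nearest-neighbour search over every demonstrated step.
        candidates = (
            (ti, si)
            for ti, traj in enumerate(self.demos)
            for si in range(len(traj))
        )
        return min(candidates, key=lambda c: math.dist(latent, self.demos[c[0]][c[1]][0]))

    def act(self, latent):
        if self.cursor is not None:
            ti, si = self.cursor
            ended = si >= len(self.demos[ti])
            if ended or math.dist(latent, self.demos[ti][si][0]) > self.max_divergence:
                self.cursor = None  # reference ran out or no longer matches
        if self.cursor is None:
            self.cursor = self._search(latent)
        ti, si = self.cursor
        action = self.demos[ti][si][1]
        self.cursor = (ti, si + 1)  # follow the same trajectory next step
        return action
```

No gradient step appears anywhere in this loop, which is the point of the zero-shot framing. The exhaustive search is only practical for small datasets, so a larger one would need an approximate nearest-neighbour index.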

Title: Safe and Generalized end-to-end Autonomous Driving System with Reinforcement Learning and Demonstrations

Authors: Zuojin Tang, Xiaoyu Chen, YongQiang Li

PubTime: 2024-01-29

Downlink: http://arxiv.org/abs/2401.11792v4

Abstract: An intelligent driving system should be capable of dynamically formulating appropriate driving strategies based on the current environment and vehicle status, while ensuring the security and reliability of the system. However, existing methods based on reinforcement learning and imitation learning suffer from low safety, poor generalization, and inefficient sampling. Additionally, they cannot accurately predict future driving trajectories, and the accurate prediction of future driving trajectories is a precondition for making optimal decisions. To solve these problems, in this paper, we introduce a Safe and Generalized end-to-end Autonomous Driving System (SGADS) for complex and various scenarios. Our SGADS incorporates variational inference with normalizing flows, enabling the intelligent vehicle to accurately predict future driving trajectories. Moreover, we propose the formulation of robust safety constraints. Furthermore, we combine reinforcement learning with demonstrations to augment the search process of the agent. The experimental results demonstrate that our SGADS can significantly improve safety performance, exhibit strong generalization, and enhance the training efficiency of intelligent vehicles in complex urban scenarios compared to existing methods.

== Robotic Agent ==

Title: RABBIT: A Robot-Assisted Bed Bathing System with Multimodal Perception and Integrated Compliance

Authors: Rishabh Madan, Skyler Valdez, David Kim

PubTime: 2024-01-26

Downlink: http://arxiv.org/abs/2401.15159v1

Project: https://emprise.cs.cornell.edu/rabbit

Abstract: This paper introduces RABBIT, a novel robot-assisted bed bathing system designed to address the growing need for assistive technologies in personal hygiene tasks. It combines multimodal perception and dual (software and hardware) compliance to perform safe and comfortable physical human-robot interaction. Using RGB and thermal imaging to segment dry, soapy, and wet skin regions accurately, RABBIT can effectively execute washing, rinsing, and drying tasks in line with expert caregiving practices. Our system includes custom-designed motion primitives inspired by human caregiving techniques, and a novel compliant end-effector called Scrubby, optimized for gentle and effective interactions. We conducted a user study with 12 participants, including one participant with severe mobility limitations, demonstrating the system’s effectiveness and perceived comfort. Supplementary material and videos can be found on our website https://emprise.cs.cornell.edu/rabbit.

Title: CARPE-ID: Continuously Adaptable Re-identification for Personalized Robot Assistance

Authors: Federico Rollo, Andrea Zunino, Nikolaos Tsagarakis

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2310.19413v2

Abstract: In today’s Human-Robot Interaction (HRI) scenarios, a prevailing tendency exists to assume that the robot shall cooperate with the closest individual or that the scene involves merely a singular human actor. However, in realistic scenarios, such as shop floor operations, such an assumption may not hold and personalized target recognition by the robot in crowded environments is required. To fulfil this requirement, in this work, we propose a person re-identification module based on continual visual adaptation techniques that ensure the robot’s seamless cooperation with the appropriate individual even subject to varying visual appearances or partial or complete occlusions. We test the framework singularly using recorded videos in a laboratory environment and an HRI scenario, i.e., a person-following task by a mobile robot. The targets are asked to change their appearance during tracking and to disappear from the camera field of view to test the challenging cases of occlusion and outfit variations. We compare our framework with one of the state-of-the-art Multi-Object Tracking (MOT) methods and the results show that the CARPE-ID can accurately track each selected target throughout the experiments in all the cases (except two limit cases). At the same time, the state-of-the-art MOT method has a mean of 4 tracking errors for each video.

Title: Smart Driver Monitoring Robotic System to Enhance Road Safety: A Comprehensive Review

Authors: Farhin Farhad Riya, Shahinul Hoque, Xiaopeng Zhao

PubTime: 2024-01-28

Downlink: http://arxiv.org/abs/2401.15762v1

Abstract: The future of transportation is being shaped by technology, and one revolutionary step in improving road safety is the incorporation of robotic systems into driver monitoring infrastructure. This literature review explores the current landscape of driver monitoring systems, ranging from traditional physiological parameter monitoring to advanced technologies such as facial recognition and steering analysis. Exploring the challenges faced by existing systems, the review then investigates the integration of robots as intelligent entities within this framework. These robotic systems, equipped with artificial intelligence and sophisticated sensors, not only monitor but actively engage with the driver, addressing cognitive and emotional states in real-time. The synthesis of existing research reveals a dynamic interplay between human and machine, offering promising avenues for innovation in adaptive, personalized, and ethically responsible human-robot interactions for driver monitoring. This review establishes a groundwork for comprehending the intricacies and potential avenues within this dynamic field. It encourages further investigation and advancement at the intersection of human-robot interaction and automotive safety, introducing a novel direction. This involves various sections detailing technological enhancements that can be integrated to propose an innovative and improved driver monitoring system.

Title: HRI Challenges Influencing Low Usage of Robotic Systems in Disaster Response and Rescue Operations

Authors: Shahinul Hoque, Farhin Farhad Riya, Jinyuan Sun

PubTime: 2024-01-28

Downlink: http://arxiv.org/abs/2401.15760v1

Abstract: The breakthrough in AI and Machine Learning has brought a new revolution in robotics, resulting in the construction of more sophisticated robotic systems. Not only can these robotic systems benefit all domains, but they can also accomplish tasks that seemed to be unimaginable a few years ago. From swarms of autonomous small robots working together to move very heavy and large objects, to seemingly indestructible robots capable of going to the harshest environments, we can see robotic systems designed for every task imaginable. Among them, a key scenario where robotic systems can benefit is in disaster response scenarios and rescue operations. Robotic systems are capable of successfully conducting tasks such as removing heavy materials, utilizing multiple advanced sensors for finding objects of interest, moving through debris and various inhospitable environments, and, not least, flying. Even with so much potential, we rarely see the utilization of robotic systems in disaster response scenarios and rescue missions. Many factors could be responsible for the low utilization of robotic systems in such scenarios. One of the key factors involves challenges related to Human-Robot Interaction (HRI) issues. Therefore, in this paper, we try to understand the HRI challenges involving the utilization of robotic systems in disaster response and rescue operations. Furthermore, we go through some of the proposed robotic systems designed for disaster response scenarios and identify the HRI challenges of those systems. Finally, we try to address the challenges by introducing ideas from various proposed research works.

Title: Large Language Models for Multi-Modal Human-Robot Interaction

Authors: Chao Wang, Stephan Hasler, Daniel Tanneberg

PubTime: 2024-01-26

Downlink: http://arxiv.org/abs/2401.15174v1

Abstract: This paper presents an innovative large language model (LLM)-based robotic system for enhancing multi-modal human-robot interaction (HRI). Traditional HRI systems relied on complex designs for intent estimation, reasoning, and behavior generation, which were resource-intensive. In contrast, our system empowers researchers and practitioners to regulate robot behavior through three key aspects: providing high-level linguistic guidance, creating “atomics” for actions and expressions the robot can use, and offering a set of examples. Implemented on a physical robot, it demonstrates proficiency in adapting to multi-modal inputs and determining the appropriate manner of action to assist humans with its arms, following researchers’ defined guidelines. Simultaneously, it coordinates the robot’s lid, neck, and ear movements with speech output to produce dynamic, multi-modal expressions. This showcases the system’s potential to revolutionize HRI by shifting from conventional, manual state-and-flow design methods to an intuitive, guidance-based, and example-driven approach.

== Object Detection, Segmentation, Open-Vocabulary Detection, SAM ==

Title: Collaborative Multi-Object Tracking with Conformal Uncertainty Propagation

Authors: Sanbao Su, Songyang Han, Yiming Li

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2303.14346v2

Project: https://coperception.github.io/MOT-CUP/

中文摘要: 物体检测和多物体跟踪(MOT)是自动驾驶系统的重要组成部分。准确的检测和不确定性量化对于车载模块(如感知、预测和规划)都至关重要,以提高自动驾驶汽车的安全性和鲁棒性。协作目标检测(COD)已经被提出来通过利用多个智能体的观点来提高检测精度和减少不确定性。然而,很少有人关注如何利用COD的不确定性量化来提高MOT性能。在本文中,作为解决这一挑战的第一次尝试,我们设计了一个称为MOT-CUP的不确定性传播框架。我们的框架首先通过直接建模和保角预测量化COD的不确定性,并将这些不确定性信息传播到运动预测和关联步骤中。MOT-CUP设计用于不同的协作对象检测器和基线MOT算法。我们在V2X-Sim(一个全面的协作感知数据集)上评估了MOT-CUP,并证明与基线(如SORT和ByteTrack)相比,准确性提高了2%,不确定性降低了2.67倍。在以高遮挡水平为特征的场景中,我们的MOT-CUP在准确性方面表现出了值得注意的4.01美元的改进。MOT-CUP展示了不确定性量化在COD和MOT中的重要性,并首次尝试通过不确定性传播来提高基于COD的MOT的准确性和降低不确定性。我们的代码在https://copperception.github.io/MOT-CUP/。上是公开的

摘要: Object detection and multiple object tracking (MOT) are essential components of self-driving systems. Accurate detection and uncertainty quantification are both critical for onboard modules, such as perception, prediction, and planning, to improve the safety and robustness of autonomous vehicles. Collaborative object detection (COD) has been proposed to improve detection accuracy and reduce uncertainty by leveraging the viewpoints of multiple agents. However, little attention has been paid to how to leverage the uncertainty quantification from COD to enhance MOT performance. In this paper, as the first attempt to address this challenge, we design an uncertainty propagation framework called MOT-CUP. Our framework first quantifies the uncertainty of COD through direct modeling and conformal prediction, and propagates this uncertainty information into the motion prediction and association steps. MOT-CUP is designed to work with different collaborative object detectors and baseline MOT algorithms. We evaluate MOT-CUP on V2X-Sim, a comprehensive collaborative perception dataset, and demonstrate a 2% improvement in accuracy and a 2.67X reduction in uncertainty compared to the baselines, e.g. SORT and ByteTrack. In scenarios characterized by high occlusion levels, our MOT-CUP demonstrates a noteworthy 4.01% improvement in accuracy. MOT-CUP demonstrates the importance of uncertainty quantification in both COD and MOT, and provides the first attempt to improve the accuracy and reduce the uncertainty in MOT based on COD through uncertainty propagation. Our code is public on https://coperception.github.io/MOT-CUP/.
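
For readers unfamiliar with the conformal prediction mentioned in the abstract above, the following is a minimal sketch of generic split conformal prediction for a scalar regression output. It is not the MOT-CUP implementation: the function names (`conformal_quantile`, `conformal_interval`) and the toy calibration numbers are assumptions made purely for illustration.

```python
import math


def conformal_quantile(residuals, alpha):
    """Return the split-conformal quantile of absolute calibration residuals.

    residuals: absolute errors |y - y_hat| on a held-out calibration set.
    alpha: target miscoverage level (e.g. 0.25 for roughly 75% coverage).
    """
    n = len(residuals)
    if n == 0:
        raise ValueError("need at least one calibration residual")
    # Rank of the order statistic, with the (n + 1) finite-sample correction.
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration points to support this coverage level.
        return math.inf
    return sorted(residuals)[rank - 1]


def conformal_interval(prediction, q):
    """Symmetric prediction interval around a point prediction."""
    return prediction - q, prediction + q


# Hypothetical calibration data (illustrative values only).
calib_pred = [10.0, 12.0, 9.5, 11.0, 10.5, 13.0, 8.0, 9.0, 12.5]
calib_true = [10.4, 11.5, 9.9, 11.1, 10.0, 13.8, 8.2, 8.7, 12.4]
residuals = [abs(t - p) for p, t in zip(calib_pred, calib_true)]

q = conformal_quantile(residuals, alpha=0.25)
low, high = conformal_interval(11.0, q)
print(f"interval for a new prediction of 11.0: [{low:.2f}, {high:.2f}]")
```

The width of the resulting interval is one simple way to express detection uncertainty as a number that a downstream tracker could consume. How MOT-CUP actually propagates such quantities is described in the paper and its code, not here.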

标题: Source-free Domain Adaptive Object Detection in Remote Sensing Images

作者: Weixing Liu, Jun Liu, Xin Su

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17916v1

Project: https://weixliu.github.io/|

中文摘要: 最近的研究使用无监督域自适应对象检测(UDAOD)方法来弥合遥感(RS)图像中的域差距。然而,UDAOD方法通常假设源域数据可以在域自适应过程中被访问。由于RS数据隐私和传输困难,这种设置在现实世界中通常是不切实际的。为了解决这一挑战,我们提出了一种实用的遥感图像无源目标检测(SFOD)设置,其目的是仅使用源预训练模型来执行目标域自适应。我们提出了一种新的遥感图像SFOD方法,包括两个部分:扰动域生成和对准。所提出的多级扰动通过根据颜色和风格偏差在图像级别和特征级别扰动域变体特征,以简单而有效的形式构建扰动域。所提出的多级对齐计算整个师生网络中扰动域和目标域之间的特征和标签一致性,并引入特征原型的蒸馏来减轻伪标签的噪声。通过要求检测器在扰动域和目标域中保持一致,检测器被迫关注域不变特征。三个合成到真实实验和三个跨传感器实验的广泛结果验证了我们的方法的有效性,该方法不需要访问源域RS图像。此外,在计算机视觉数据集上的实验表明,我们的方法也可以扩展到其他领域。我们的代码将在以下网址提供:https://weixliu.github.io/。

摘要: Recent studies have used unsupervised domain adaptive object detection (UDAOD) methods to bridge the domain gap in remote sensing (RS) images. However, UDAOD methods typically assume that the source domain data can be accessed during the domain adaptation process. This setting is often impractical in the real world due to RS data privacy and transmission difficulty. To address this challenge, we propose a practical source-free object detection (SFOD) setting for RS images, which aims to perform target domain adaptation using only the source pre-trained model. We propose a new SFOD method for RS images consisting of two parts: perturbed domain generation and alignment. The proposed multilevel perturbation constructs the perturbed domain in a simple yet efficient form by perturbing the domain-variant features at the image level and feature level according to the color and style bias. The proposed multilevel alignment calculates feature and label consistency between the perturbed domain and the target domain across the teacher-student network, and introduces the distillation of feature prototype to mitigate the noise of pseudo-labels. By requiring the detector to be consistent in the perturbed domain and the target domain, the detector is forced to focus on domain-invariant features. Extensive results of three synthetic-to-real experiments and three cross-sensor experiments have validated the effectiveness of our method which does not require access to source domain RS images. Furthermore, experiments on computer vision datasets show that our method can be extended to other fields as well. Our code will be available at: https://weixliu.github.io/ .

标题: SubPipe: A Submarine Pipeline Inspection Dataset for Segmentation and Visual-inertial Localization

作者: Olaya Álvarez-Tuñón, Luiza Ribeiro Marnet, László Antal

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17907v1

GitHub: https://github.com/remaro-network/SubPipe-dataset|

中文摘要: 本文介绍了SubPipe,这是一个用于SLAM、目标检测和图像分割的水下数据集。SubPipe使用由OceanScan MST运营的LAUV录制,该AUV搭载了一套传感器,包括两个摄像机、一个侧扫声纳和一个惯性导航系统等。AUV被部署在管道检查环境中,其中的海底管道部分被沙子覆盖。AUV的位姿真值由导航传感器估计。侧扫声纳图像和RGB图像分别带有目标检测和分割标注。最先进的分割、目标检测和SLAM方法在SubPipe上进行了基准测试,以展示该数据集给计算机视觉算法带来的挑战和机遇。据作者所知,这是第一个提供真实管道检查场景的带标注水下数据集。数据集和实验公开于 https://github.com/remaro-network/SubPipe-dataset

摘要: This paper presents SubPipe, an underwater dataset for SLAM, object detection, and image segmentation. SubPipe has been recorded using an LAUV, operated by OceanScan MST, and carrying a sensor suite including two cameras, a side-scan sonar, and an inertial navigation system, among other sensors. The AUV has been deployed in a pipeline inspection environment with a submarine pipe partially covered by sand. The AUV’s pose ground truth is estimated from the navigation sensors. The side-scan sonar and RGB images include object detection and segmentation annotations, respectively. State-of-the-art segmentation, object detection, and SLAM methods are benchmarked on SubPipe to demonstrate the dataset’s challenges and opportunities for leveraging computer vision algorithms. To the authors’ knowledge, this is the first annotated underwater dataset providing a real pipeline inspection scenario. The dataset and experiments are publicly available online at https://github.com/remaro-network/SubPipe-dataset

标题: Hi-SAM: Marrying Segment Anything Model for Hierarchical Text Segmentation

作者: Maoyuan Ye, Jing Zhang, Juhua Liu

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17904v1

GitHub: https://github.com/ymy-k/Hi-SAM|

中文摘要: Segment Anything Model(SAM)是一个在大规模数据集上预训练的、影响深远的视觉基础模型,它打破了通用分割的界限,并催生了各种下游应用。本文介绍了利用SAM进行分层文本分割的统一模型Hi-SAM。Hi-SAM擅长笔画、单词、文本行和段落四个层次的文本分割,同时还实现了版面分析。具体来说,我们首先通过参数高效的微调方法将SAM转化为高质量的文本笔画分割(TSS)模型。我们使用这个TSS模型以半自动的方式迭代生成文本笔画标签,从而统一HierText数据集中四个文本层次的标签。随后,有了这些完整的标签,我们基于TSS架构并配合定制的分层掩码解码器,推出了端到端可训练的Hi-SAM。在推理过程中,Hi-SAM提供自动掩码生成(AMG)模式和可提示分割模式。在AMG模式下,Hi-SAM首先分割文本笔画前景掩码,然后采样前景点以生成分层文本掩码,并顺带完成版面分析。在可提示模式下,Hi-SAM只需单次点击即可提供单词、文本行和段落掩码。实验结果显示了我们的TSS模型的最先进性能:在文本笔画分割任务上,Total-Text的fgIOU为84.86%,TextSeg的fgIOU为88.96%。此外,与此前在HierText上进行联合分层检测和版面分析的专用模型相比,Hi-SAM取得了显著提升:文本行级别的PQ提高4.73%、F1提高5.39%,段落级别版面分析的PQ提高5.49%、F1提高7.39%,而所需的训练轮数减少为原来的1/20。代码公开于 https://github.com/ymy-k/Hi-SAM 。

摘要: The Segment Anything Model (SAM), a profound vision foundation model pre-trained on a large-scale dataset, breaks the boundaries of general segmentation and sparks various downstream applications. This paper introduces Hi-SAM, a unified model leveraging SAM for hierarchical text segmentation. Hi-SAM excels in text segmentation across four hierarchies, including stroke, word, text-line, and paragraph, while realizing layout analysis as well. Specifically, we first turn SAM into a high-quality text stroke segmentation (TSS) model through a parameter-efficient fine-tuning approach. We use this TSS model to iteratively generate the text stroke labels in a semi-automatical manner, unifying labels across the four text hierarchies in the HierText dataset. Subsequently, with these complete labels, we launch the end-to-end trainable Hi-SAM based on the TSS architecture with a customized hierarchical mask decoder. During inference, Hi-SAM offers both automatic mask generation (AMG) mode and promptable segmentation mode. In terms of the AMG mode, Hi-SAM segments text stroke foreground masks initially, then samples foreground points for hierarchical text mask generation and achieves layout analysis in passing. As for the promptable mode, Hi-SAM provides word, text-line, and paragraph masks with a single point click. Experimental results show the state-of-the-art performance of our TSS model: 84.86% fgIOU on Total-Text and 88.96% fgIOU on TextSeg for text stroke segmentation. Moreover, compared to the previous specialist for joint hierarchical detection and layout analysis on HierText, Hi-SAM achieves significant improvements: 4.73% PQ and 5.39% F1 on the text-line level, 5.49% PQ and 7.39% F1 on the paragraph level layout analysis, requiring 20x fewer training epochs. The code is available at https://github.com/ymy-k/Hi-SAM.
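
The fgIOU figures quoted above are foreground intersection-over-union scores. As a minimal sketch, assuming binary masks stored as nested lists of 0/1 values, the per-image metric could be computed as follows. The function name `foreground_iou` and the convention of returning 1.0 when both masks are empty are assumptions for illustration; the benchmark's official evaluation script may aggregate differently.

```python
def foreground_iou(pred, target):
    """Intersection-over-union of the foreground (non-zero) pixels of two masks.

    pred, target: equally sized 2D lists of 0/1 values.
    """
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# Toy example: 2 overlapping foreground pixels out of 4 in the union.
pred_mask = [[1, 1, 0],
             [0, 1, 0]]
true_mask = [[1, 0, 0],
             [0, 1, 1]]
score = foreground_iou(pred_mask, true_mask)
print(f"fgIoU = {score:.2f}")
```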

标题: SimAda: A Simple Unified Framework for Adapting Segment Anything Model in Underperformed Scenes

作者: Yiran Song, Qianyu Zhou, Xuequan Lu

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.17803v1

GitHub: https://github.com/zongzi13545329/SimAda|

中文摘要: Segment Anything Model(SAM)在常见视觉场景中表现出出色的泛化能力,但缺乏对专业数据的理解。尽管许多工作都致力于针对下游任务优化SAM,但这些特定于任务的方法通常难以推广到其他下游任务。在本文中,我们旨在研究通用视觉模块对微调SAM的影响,并使它们能够推广到所有下游任务。我们提出了一个简单的统一框架SimAda,用于在表现不佳的场景中适配SAM。具体来说,我们的框架将不同方法的通用模块抽象为基本设计元素,并基于共享的理论框架设计了四种变体。SimAda简单而有效,它去除了所有特定于数据集的设计,只专注于通用优化,确保SimAda可以应用于所有基于SAM甚至基于Transformer的模型。我们在六个下游任务的九个数据集上进行了广泛的实验。结果表明,SimAda显著提高了SAM在多个下游任务上的性能,并在大多数任务上实现了最先进的性能,而不需要特定任务的设计。代码可在以下网址获得:https://github.com/zongzi13545329/SimAda

摘要: Segment anything model (SAM) has demonstrated excellent generalization capabilities in common vision scenarios, yet lacking an understanding of specialized data. Although numerous works have focused on optimizing SAM for downstream tasks, these task-specific approaches usually limit the generalizability to other downstream tasks. In this paper, we aim to investigate the impact of the general vision modules on finetuning SAM and enable them to generalize across all downstream tasks. We propose a simple unified framework called SimAda for adapting SAM in underperformed scenes. Specifically, our framework abstracts the general modules of different methods into basic design elements, and we design four variants based on a shared theoretical framework. SimAda is simple yet effective, which removes all dataset-specific designs and focuses solely on general optimization, ensuring that SimAda can be applied to all SAM-based and even Transformer-based models. We conduct extensive experiments on nine datasets of six downstream tasks. The results demonstrate that SimAda significantly improves the performance of SAM on multiple downstream tasks and achieves state-of-the-art performance on most of them, without requiring task-specific designs. Code is available at: https://github.com/zongzi13545329/SimAda

标题: SAMF: Small-Area-Aware Multi-focus Image Fusion for Object Detection

作者: Xilai Li, Xiaosong Li, Haishu Tan

PubTime: 2024-01-31

Downlink: http://arxiv.org/abs/2401.08357v2

GitHub: https://github.com/ixilai/SAMF|

摘要: Existing multi-focus image fusion (MFIF) methods often fail to preserve the uncertain transition region and detect small focus areas within large defocused regions accurately. To address this issue, this study proposes a new small-area-aware MFIF algorithm for enhancing object detection capability. First, we enhance the pixel attributes within the small focus and boundary regions, which are subsequently combined with visual saliency detection to obtain the pre-fusion results used to discriminate the distribution of focused pixels. To accurately ensure pixel focus, we consider the source image as a combination of focused, defocused, and uncertain regions and propose a three-region segmentation strategy. Finally, we design an effective pixel selection rule to generate segmentation decision maps and obtain the final fusion results. Experiments demonstrated that the proposed method can accurately detect small and smooth focus areas while improving object detection performance, outperforming existing methods in both subjective and objective evaluations. The source code is available at https://github.com/ixilai/SAMF.
