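1. Introduction

We previously set up a single-node HBase environment (for the standalone setup, see https://blog.csdn.net/a15835774652/article/details/135569456).

To simulate a cluster environment, this time we will set up a pseudo-distributed cluster.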

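2. Prerequisites

- JDK 1.8 or later

- HDFS (a Hadoop environment, optional); for setup see https://blog.csdn.net/a15835774652/article/details/135572760

3. Configuration

After setting up Hadoop, HBase turns out to need surprisingly little configuration, probably because Hadoop simply has more moving parts.

- Configure the JDK by editing conf/hbase-env.sh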

# Change this to your own JDK

export JAVA_HOME=/usr/local/develop/java/zulu-jdk17.0.7

# Optional: needed when running on a JDK newer than 1.8

export HBASE_OPTS="${HBASE_SHELL_OPTS} --add-opens java.base/java.lang=ALL-UNNAMED --add-opens java.base/sun.nio.ch=ALL-UNNAMED --add-opens java.base/java.io=ALL-UNNAMED"

- Edit conf/hbase-site.xml and add the following properties

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- HBase storage directory; this variant uses HDFS -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:8020/hbase</value>

</property>

<!-- Alternatively, use a local directory; pick one of the two -->

<!-- <property>

<name>hbase.rootdir</name>

<value>file:///usr/local/develop/hbase</value>

</property> -->

<property>

<name>hbase.master.ipc.address</name>

<value>0.0.0.0</value>

</property>

<property>

<name>hbase.regionserver.ipc.address</name>

<value>0.0.0.0</value>

</property>

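- Remove the hbase.tmp.dir and hbase.unsafe.stream.capability.enforce settings

4. Startup

Yes, you read that right: that is all the configuration there is. Now on to startup.

Run the following command to start HBase: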

bin/start-hbase.sh

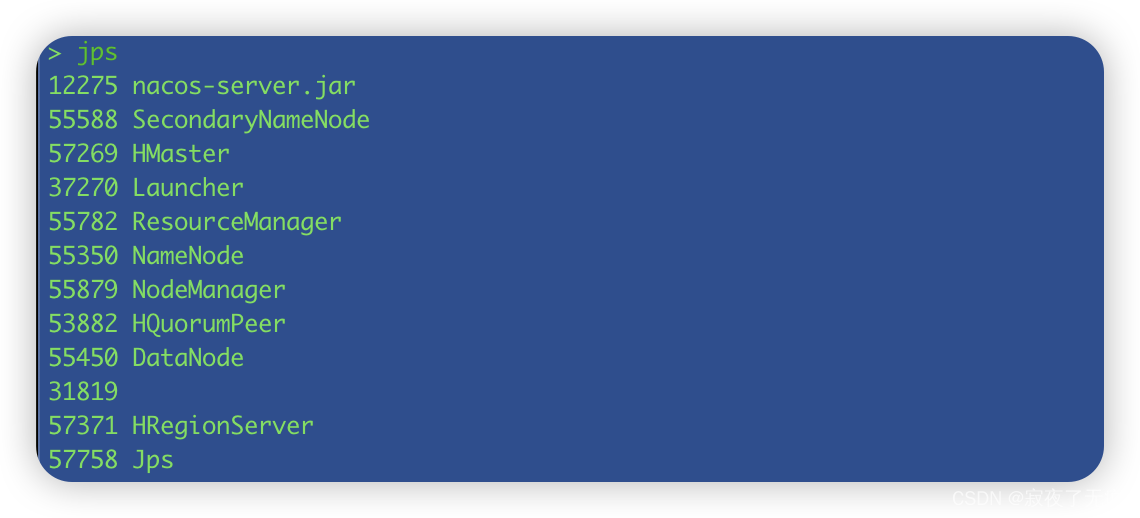

If the configuration is correct, jps should show the HMaster and HRegionServer processes running.

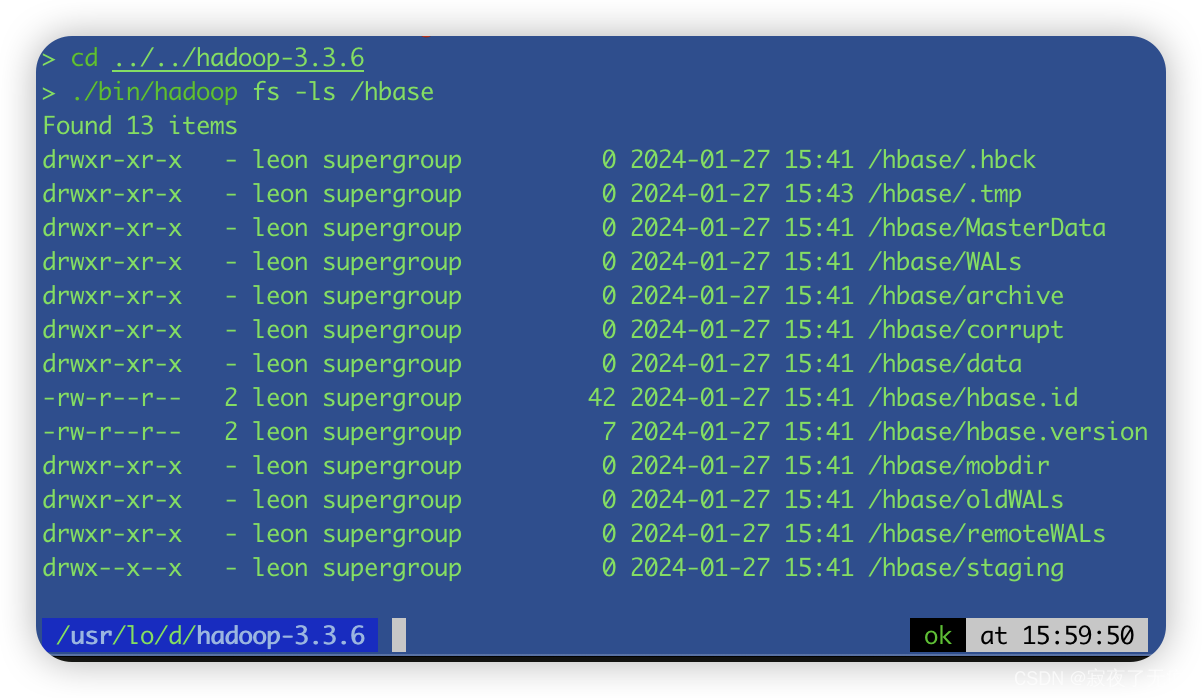
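Instead of reading the jps output by eye, the check can be scripted. The following is a minimal sketch, not part of the original setup: the helper name has_process is hypothetical, and it assumes jps prints one "PID ClassName" pair per line.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given jps output contains a process
# whose class name (second column) is exactly $1.
# $1 = expected class name, $2 = captured jps output
has_process() {
    printf '%s\n' "$2" | awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Usage sketch: only runs when jps is available on PATH.
if command -v jps >/dev/null 2>&1; then
    out=$(jps 2>/dev/null || true)
    for p in HMaster HRegionServer; do
        if has_process "$p" "$out"; then
            echo "$p: running"
        else
            echo "$p: NOT running"
        fi
    done
fi
```

Matching on the whole second column, rather than using a plain grep, avoids a false positive from a class name that merely contains the expected one.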

5. Check the hbase directory in HDFS

./bin/hadoop fs -ls /hbase

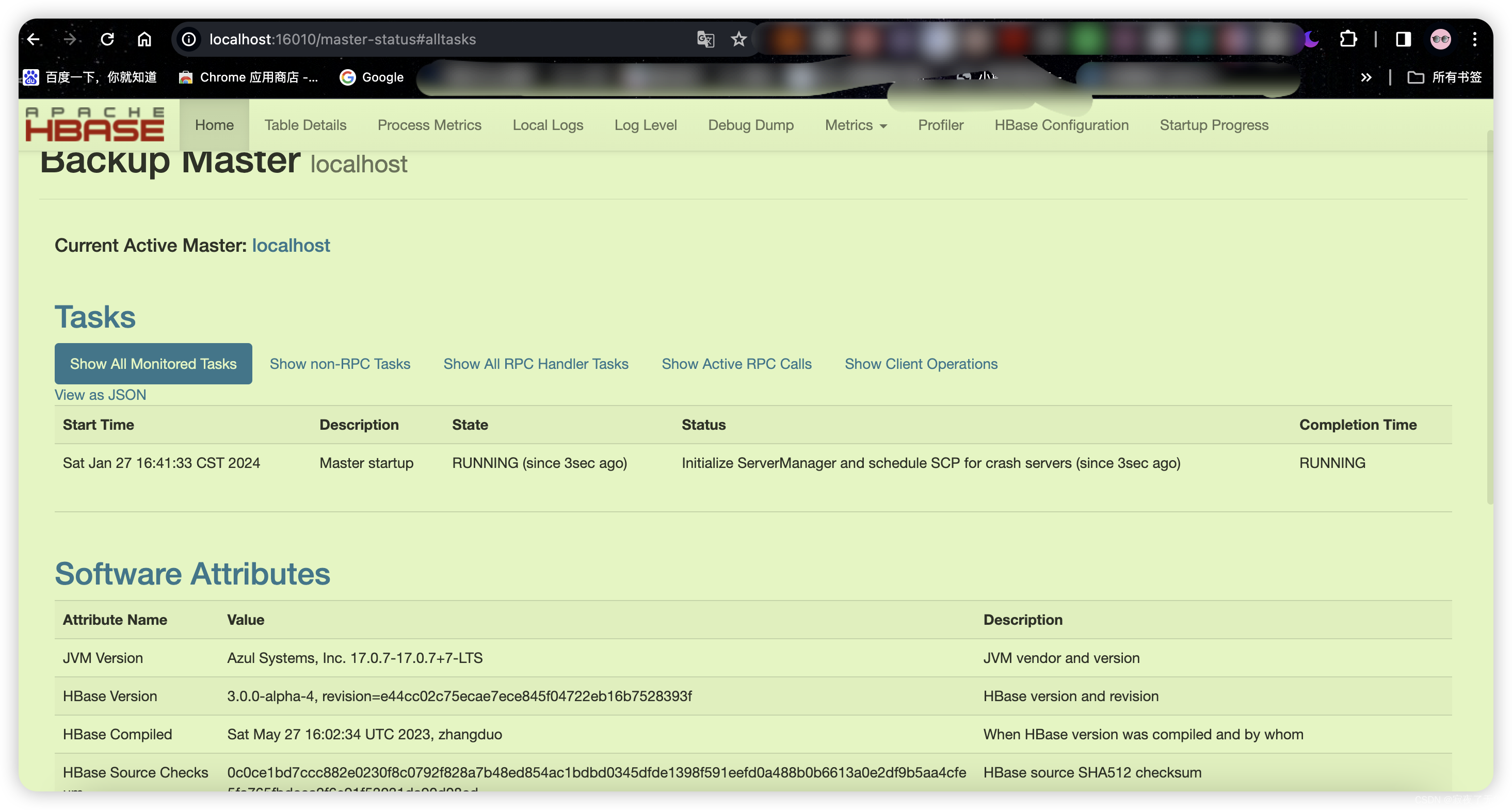

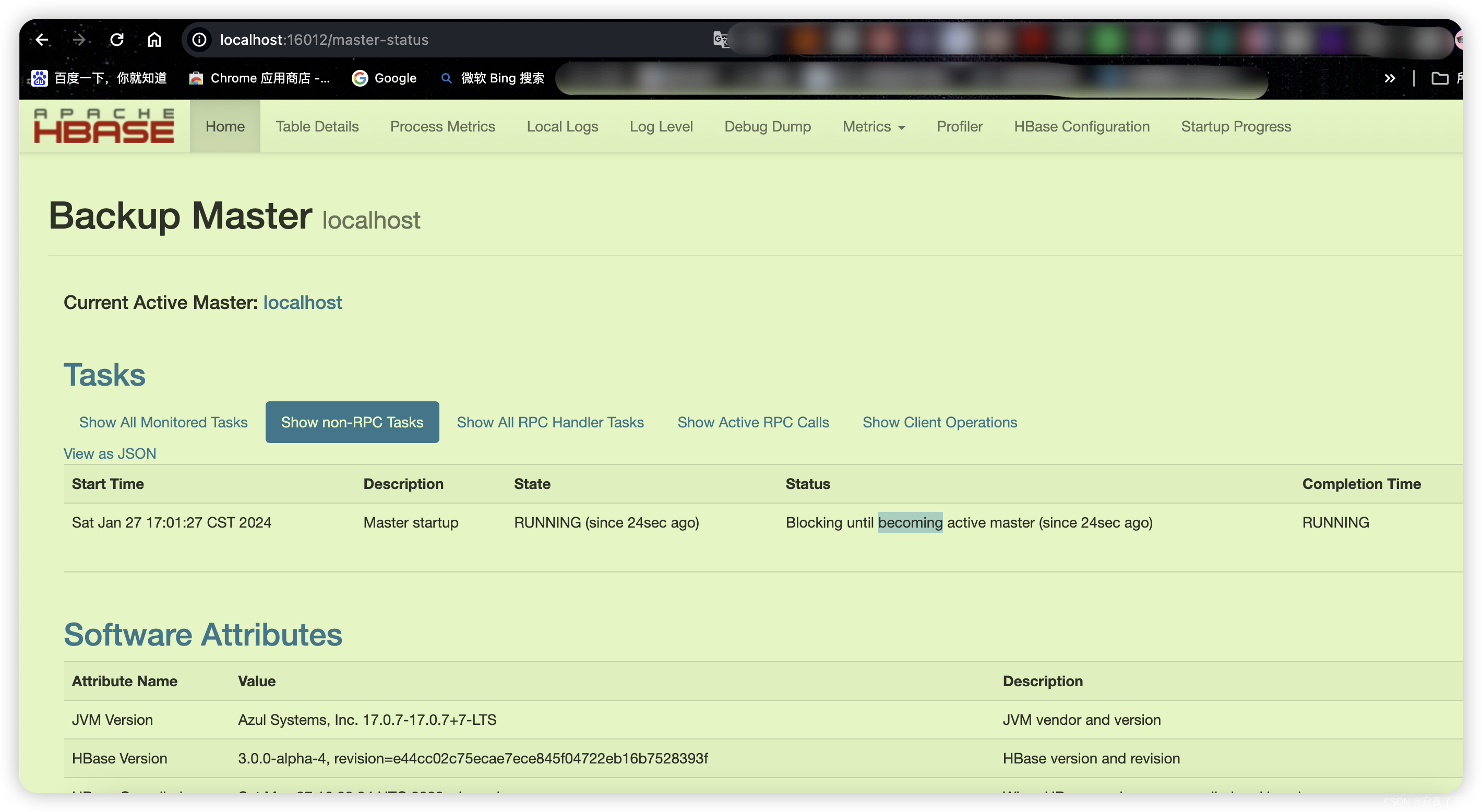

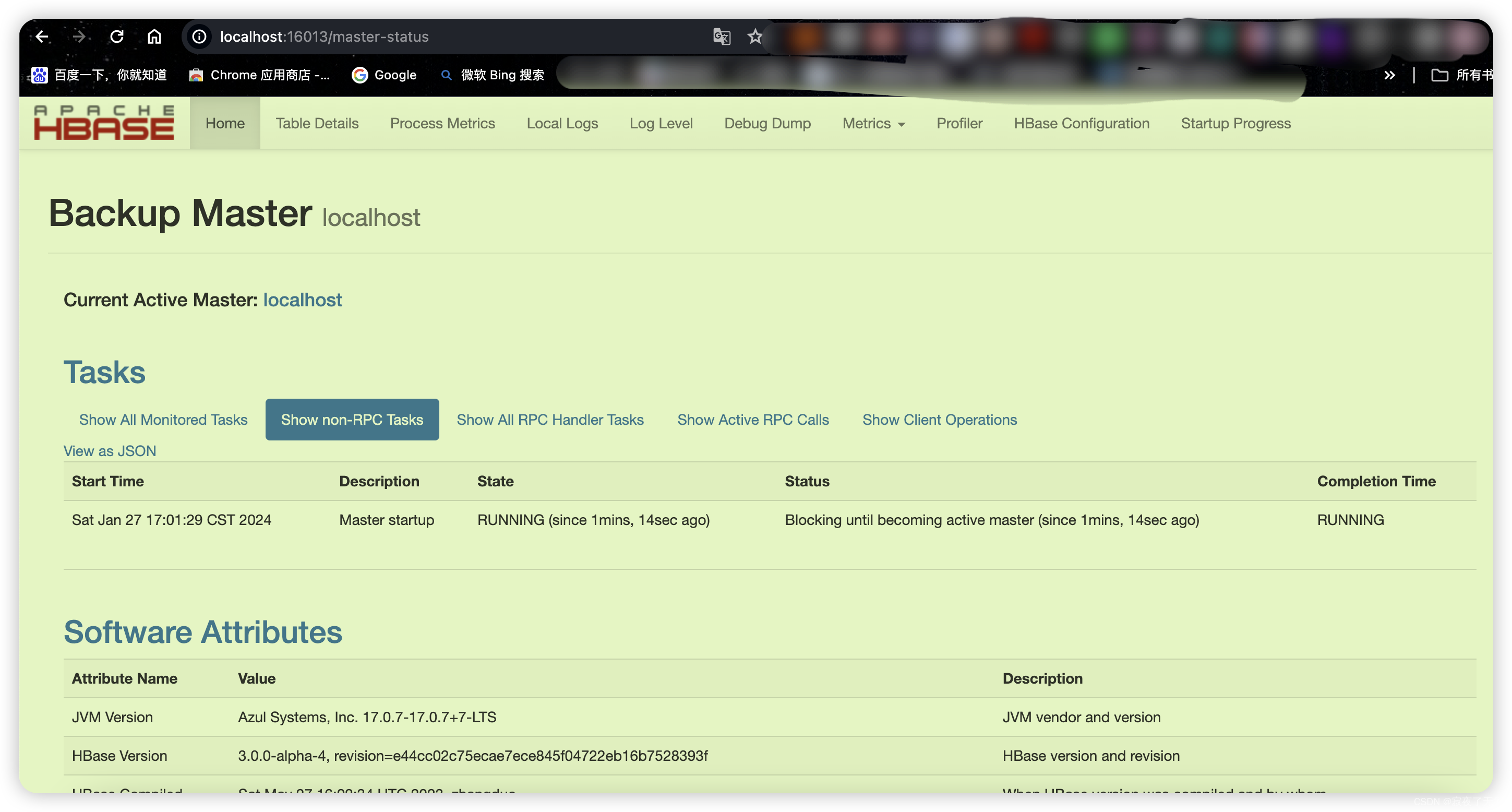

6. Start backup HBase Masters

# Start 3 backup masters using ports 16002/16012, 16003/16013, and 16005/16015. Each of the arguments 2, 3, and 5 is an offset from the default ports 16000/16010.

bin/local-master-backup.sh start 2 3 5

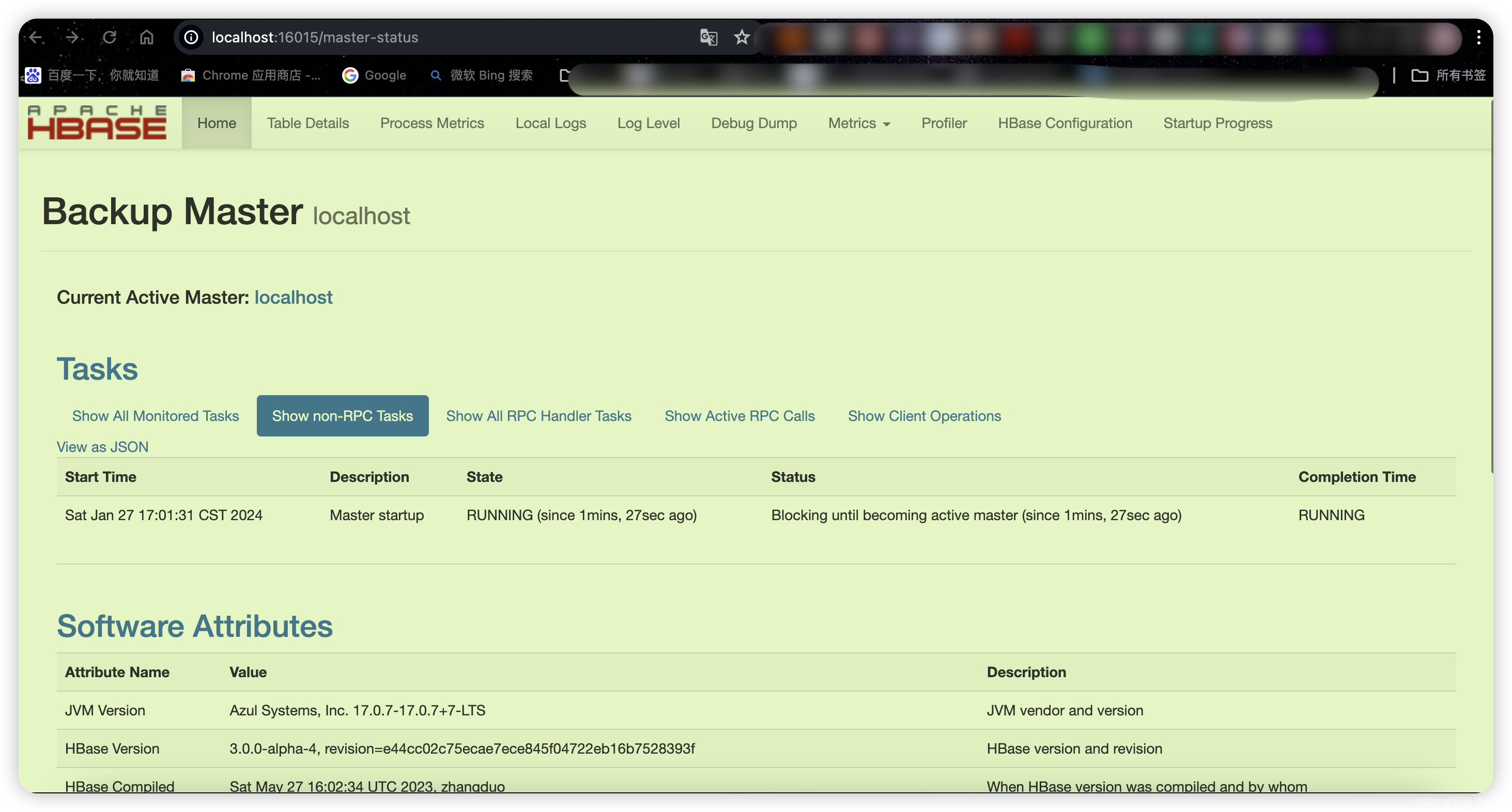

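Now we can open the local HBase web UI to see the result.

The default master is at localhost:16010

The backup masters are at localhost:16012, localhost:16013, and localhost:16015

That gives us 4 nodes: one active master and 3 backup masters. Combined with a component such as keepalive, this provides a multi-node, highly available setup.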

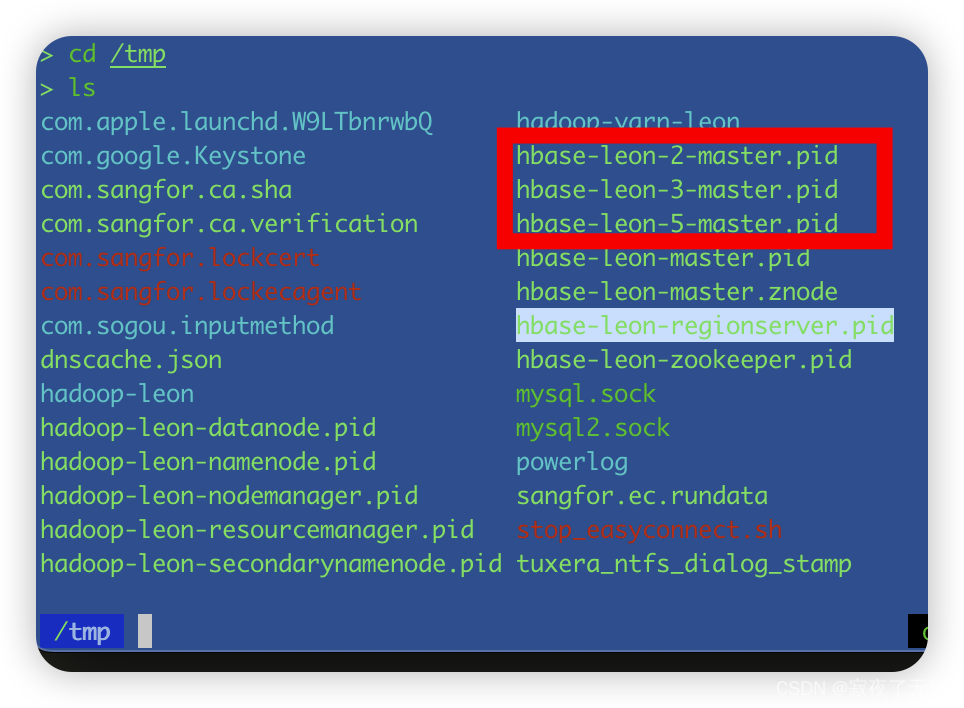
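The mapping from offset to web UI address is plain addition, so it is easy to script. This is a small sketch under the assumption that the default master info port is 16010 and everything runs on localhost; the function name master_ui_url is hypothetical.

```shell
#!/bin/sh
# Assumed default master web UI port; each backup master adds its offset to it.
MASTER_INFO_PORT=16010

# Hypothetical helper: print the web UI URL for a master started with the
# given port offset (0 means the primary master).
master_ui_url() {
    echo "http://localhost:$((MASTER_INFO_PORT + $1))/"
}

# List the UI addresses for the primary master and the three backups above.
for offset in 0 2 3 5; do
    echo "offset $offset -> $(master_ui_url "$offset")"
done
```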

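7. Stop a backup HBase Master

To kill a backup master without killing the entire cluster, you need to find its process ID (PID). The PID is stored in a file with a name like /tmp/hbase-USER-X-master.pid, and the PID is the only content of that file. You can kill that PID with kill -9. The command below kills the backup master with port offset 2 but leaves the cluster running.

First, list the HBase backup master pid files under /tmp.

Then pick the matching file and run the following command to kill that backup master.

# Note: replace hbase-leon-2-master.pid with the name of your own pid file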

cat /tmp/hbase-leon-2-master.pid |xargs kill -9
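kill -9 gives the process no chance to shut down cleanly. A gentler variant is sketched below; it is an assumption of mine rather than part of the original steps. It sends a plain SIGTERM first and only falls back to kill -9 if the process is still alive after a grace period. The function name stop_by_pidfile and the 10-second wait are hypothetical choices.

```shell
#!/bin/sh
# Hypothetical helper: stop the process whose PID is stored in a pid file.
# Sends SIGTERM first and uses SIGKILL only if the process does not exit.
# $1 = path to the pid file
stop_by_pidfile() {
    pidfile=$1
    [ -f "$pidfile" ] || { echo "no such pid file: $pidfile" >&2; return 1; }
    pid=$(cat "$pidfile")
    kill "$pid" 2>/dev/null || { echo "process $pid is not running" >&2; return 1; }
    # Wait up to roughly 10 seconds (arbitrary) for a clean exit.
    i=0
    while kill -0 "$pid" 2>/dev/null && [ "$i" -lt 10 ]; do
        sleep 1
        i=$((i + 1))
    done
    # Still alive after the grace period: force it.
    if kill -0 "$pid" 2>/dev/null; then
        kill -9 "$pid"
    fi
}

# Example (use the name of your own pid file):
# stop_by_pidfile /tmp/hbase-leon-2-master.pid
```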

8. Start additional RegionServers

Set the environment variables in conf/hbase-env.sh

export HBASE_RS_BASE_PORT=16020

export HBASE_RS_INFO_BASE_PORT=16030

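The HRegionServer manages the data in its StoreFiles as directed by the HMaster. Generally, one HRegionServer runs on each node in the cluster. Running multiple HRegionServers on the same system is useful for testing in pseudo-distributed mode. The local-regionservers.sh command lets you run multiple RegionServers. It works much like the local-master-backup.sh command, in that each argument you provide is the port offset for one instance.

Each RegionServer needs two ports, and the default ports are 16020 and 16030. Since HBase 1.1.0 the HMaster no longer uses the RegionServer ports, which leaves 10 ports (16020 to 16029 and 16030 to 16039) for RegionServers. To support more RegionServers, set the environment variables HBASE_RS_BASE_PORT and HBASE_RS_INFO_BASE_PORT before running local-regionservers.sh. For example, with base ports 16200 and 16300, 99 additional RegionServers can be supported on one server. The following command starts three additional RegionServers, starting at 16022/16032 (base ports 16020/16030 plus 2).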

Start 3 RegionServers

# The default RegionServer ports are 16020 and 16030

# The arguments 2 3 5 are offsets from the default ports

./bin/local-regionservers.sh start 2 3 5
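To see which ports a given offset ends up on, the arithmetic described above can be sketched as follows. This only mirrors the description in the text (base port plus offset, with HBASE_RS_BASE_PORT and HBASE_RS_INFO_BASE_PORT overriding the defaults); the function name regionserver_ports is hypothetical and the sketch does not call the HBase scripts.

```shell
#!/bin/sh
# Hypothetical helper: print "rpc_port/info_port" for a RegionServer started
# with the given offset. The base ports fall back to 16020 and 16030 when the
# environment variables are not set.
regionserver_ports() {
    base=${HBASE_RS_BASE_PORT:-16020}
    info=${HBASE_RS_INFO_BASE_PORT:-16030}
    echo "$((base + $1))/$((info + $1))"
}

# Ports used by the three RegionServers started above.
for offset in 2 3 5; do
    echo "offset $offset -> $(regionserver_ports "$offset")"
done
```

With the base ports exported as 16200 and 16300, offset 99 maps to 16299/16399, which is where the figure of 99 additional RegionServers comes from.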

9. Stop a local RegionServer

./bin/local-regionservers.sh stop 3

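That completes the pseudo-distributed HBase setup. The backup masters are easy to configure, while running multiple RegionServers takes slightly more configuration.

10. Troubleshooting notes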

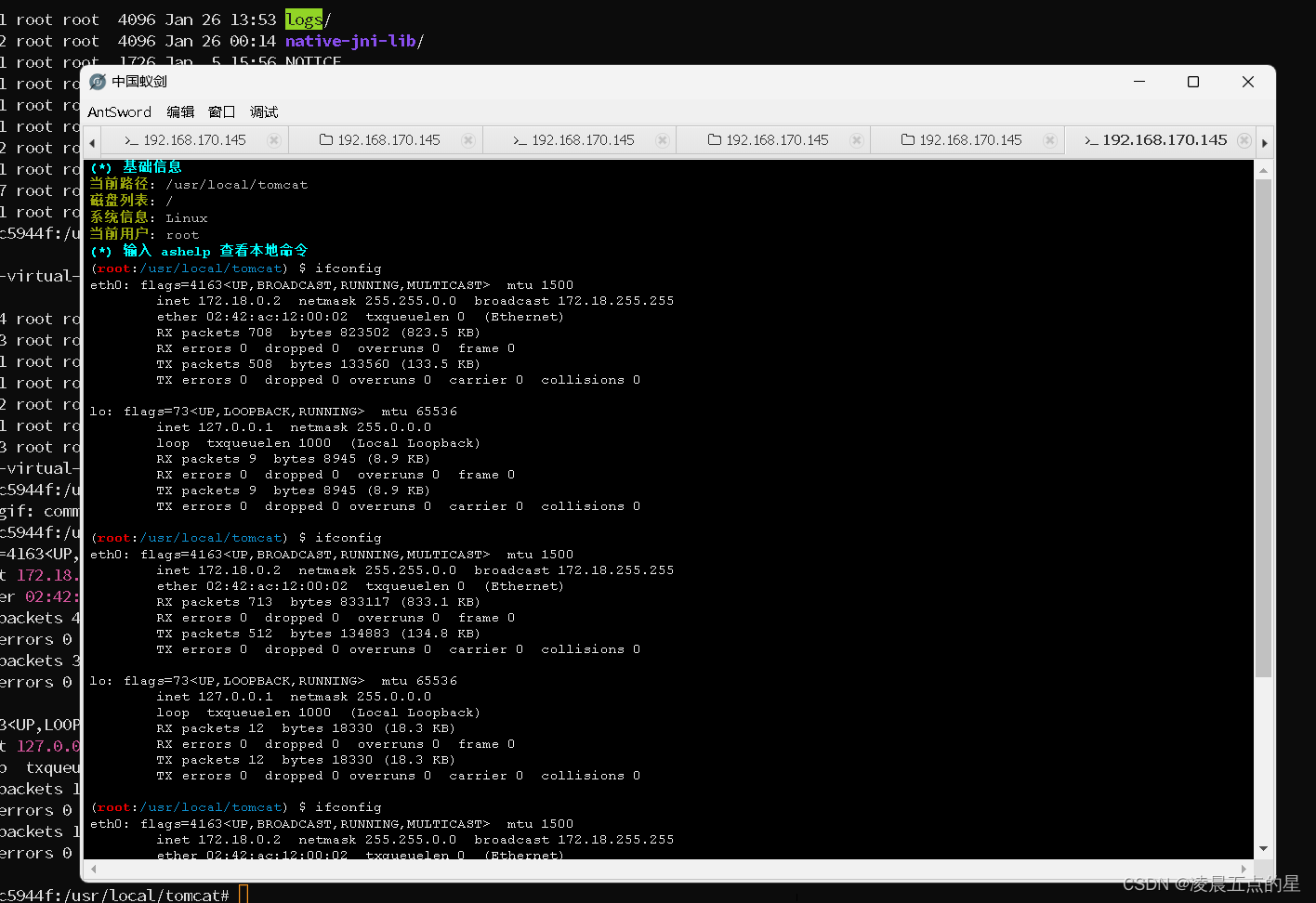

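- Issue 1: IP binding

By default HBase binds its services to the machine's own IP address rather than to localhost. The hbase shell tool, however, connects to localhost by default, so it fails with an error saying it cannot connect to port 16000 on localhost: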

list 'test'

TABLE

2024-01-27 16:16:12,963 WARN [RPCClient-NioEventLoopGroup-1-1] ipc.NettyRpcConnection (NettyRpcConnection.java:fail(318)) - Exception encountered while connecting to the server localhost:16000

org.apache.hbase.thirdparty.io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: localhost/127.0.0.1:16000

Caused by: java.net.ConnectException: Connection refused

at java.base/sun.nio.ch.Net.pollConnect(Native Method)

at java.base/sun.nio.ch.Net.pollConnectNow(Net.java:672)

at java.base/sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:946)

at org.apache.hbase.thirdparty.io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:337)

at org.apache.hbase.thirdparty.io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:334)

at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:776)

at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:724)

at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:650)

at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:562)

at org.apache.hbase.thirdparty.io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:997)

at org.apache.hbase.thirdparty.io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at org.apache.hbase.thirdparty.io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.base/java.lang.Thread.run(Thread.java:833)

2024-01-27 16:16:12,978 INFO [Registry-endpoints-refresh-end-points] client.RegistryEndpointsRefresher (RegistryEndpointsRefresher.java:mainLoop(78)) - Registry end points refresher loop exited.

ERROR: Failed contacting masters after 1 attempts.

Exceptions:

java.net.ConnectException: Call to address=localhost:16000 failed on connection exception: org.apache.hbase.thirdparty.io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: localhost/127.0.0.1:16000

For usage try 'help "list"'

Took 0.8411 seconds

hbase:002:0> exit

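Checking the port on the local machine, however, shows that it is bound to the machine's own IP on port 16000.

Yet after changing the HBase bind address to 0.0.0.0, a different error appears: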

hbase:002:0> create 'test', 'cf'

2024-01-27 16:44:46,414 WARN [RPCClient-NioEventLoopGroup-1-2] client.AsyncRpcRetryingCaller (AsyncRpcRetryingCaller.java:onError(184)) - Call to master failed, tries = 6, maxAttempts = 7, timeout = 1200000 ms, time elapsed = 2217 ms org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3235)

at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3241)

at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:2380)

at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:778)

at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)

at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)

at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException(org.apache.hadoop.hbase.ipc.ServerNotRunningYetException): org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3235)

at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3241)

at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:2380)

at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:778)

at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)

at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)

at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

at org.apache.hadoop.hbase.ipc.AbstractRpcClient.onCallFinished(AbstractRpcClient.java:388)

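Does this mean HBase cannot bind to 0.0.0.0? That is not certain: the ServerNotRunningYetException above comes from the master itself (the trace passes through HMaster.checkServiceStarted), so the shell did reach the master, which apparently had not finished starting.

As a workaround, bind the IP to localhost or 127.0.0.1 first, and then test with the hbase shell.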
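To confirm which address the master port is actually bound to, the listening sockets can be inspected. The sketch below is an assumption-laden helper, not something from the original troubleshooting: the function name accepts_localhost is hypothetical, it only considers IPv4-style addresses, and it assumes lsof prints the listening address as the second-to-last field of each row.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a listening address such as
# "192.168.1.5:16000" would accept an IPv4 connection made to localhost.
# "*" and 0.0.0.0 are wildcard binds.
accepts_localhost() {
    case "${1%:*}" in
        127.0.0.1|localhost|0.0.0.0|'*') return 0 ;;
        *) return 1 ;;
    esac
}

# Usage sketch: only runs when lsof is available on PATH.
if command -v lsof >/dev/null 2>&1; then
    listeners=$(lsof -nP -iTCP:16000 -sTCP:LISTEN 2>/dev/null || true)
    # Skip the header row; the address is assumed to precede "(LISTEN)".
    printf '%s\n' "$listeners" | awk 'NR > 1 { print $(NF-1) }' | while read -r addr; do
        if accepts_localhost "$addr"; then
            echo "$addr: reachable via localhost"
        else
            echo "$addr: NOT reachable via localhost"
        fi
    done
fi
```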

- Issue 2: when HBase uses a local directory for storage, startup fails with the following error: org.apache.hadoop.hbase.util.CommonFSUtils$StreamLacksCapabilityException: hflush

2024-01-27 14:59:46,330 WARN [master/localhost:16000:becomeActiveMaster] wal.AbstractProtobufLogWriter (AbstractProtobufLogWriter.java:init(195)) - Init output failed, path=file:/usr/local/develop/hbase/MasterData/WALs/localhost,16000,1706338777398/localhost%2C16000%2C1706338777398.1706338786302

org.apache.hadoop.hbase.util.CommonFSUtils$StreamLacksCapabilityException: hflush

at org.apache.hadoop.hbase.io.asyncfs.AsyncFSOutputHelper.createOutput(AsyncFSOutputHelper.java:72)

at org.apache.hadoop.hbase.regionserver.wal.AsyncProtobufLogWriter.initOutput(AsyncProtobufLogWriter.java:183)

at org.apache.hadoop.hbase.regionserver.wal.AbstractProtobufLogWriter.init(AbstractProtobufLogWriter.java:167)

at org.apache.hadoop.hbase.wal.AsyncFSWALProvider.createAsyncWriter(AsyncFSWALProvider.java:114)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.createAsyncWriter(AsyncFSWAL.java:749)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.createWriterInstance(AsyncFSWAL.java:755)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.createWriterInstance(AsyncFSWAL.java:127)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.rollWriterInternal(AbstractFSWAL.java:953)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.lambda$rollWriter$8(AbstractFSWAL.java:985)

at org.apache.hadoop.hbase.trace.TraceUtil.trace(TraceUtil.java:216)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.rollWriter(AbstractFSWAL.java:985)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.rollWriter(AbstractFSWAL.java:595)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.init(AbstractFSWAL.java:536)

at org.apache.hadoop.hbase.wal.AbstractFSWALProvider.getWAL(AbstractFSWALProvider.java:163)

at org.apache.hadoop.hbase.wal.AbstractFSWALProvider.getWAL(AbstractFSWALProvider.java:65)

at org.apache.hadoop.hbase.wal.WALFactory.getWAL(WALFactory.java:391)

at org.apache.hadoop.hbase.master.region.MasterRegion.createWAL(MasterRegion.java:211)

at org.apache.hadoop.hbase.master.region.MasterRegion.open(MasterRegion.java:271)

at org.apache.hadoop.hbase.master.region.MasterRegion.create(MasterRegion.java:432)

at org.apache.hadoop.hbase.master.region.MasterRegionFactory.create(MasterRegionFactory.java:135)

at org.apache.hadoop.hbase.master.HMaster.finishActiveMasterInitialization(HMaster.java:981)

at org.apache.hadoop.hbase.master.HMaster.startActiveMasterManager(HMaster.java:2478)

at org.apache.hadoop.hbase.master.HMaster.lambda$run$0(HMaster.java:602)

at org.apache.hadoop.hbase.trace.TraceUtil.lambda$tracedRunnable$2(TraceUtil.java:155)

at java.base/java.lang.Thread.run(Thread.java:833)

2024-01-27 14:59:46,330 ERROR [master/localhost:16000:becomeActiveMaster] wal.AsyncFSWALProvider (AsyncFSWALProvider.java:createAsyncWriter(118)) - The RegionServer async write ahead log provider relies on the ability to call hflush for proper operation during component failures, but the current FileSystem does not support doing so. Please check the config value of 'hbase.wal.dir' and ensure it points to a FileSystem mount that has suitable capabilities for output streams.

2024-01-27 14:59:46,335 ERROR [master/localhost:16000:becomeActiveMaster] master.HMaster (MarkerIgnoringBase.java:error(159)) - Failed to become active master

java.io.IOException: java.lang.NullPointerException

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.shutdown(AbstractFSWAL.java:1056)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.close(AbstractFSWAL.java:1083)

at org.apache.hadoop.hbase.wal.AbstractFSWALProvider.getWAL(AbstractFSWALProvider.java:167)

at org.apache.hadoop.hbase.wal.AbstractFSWALProvider.getWAL(AbstractFSWALProvider.java:65)

at org.apache.hadoop.hbase.wal.WALFactory.getWAL(WALFactory.java:391)

at org.apache.hadoop.hbase.master.region.MasterRegion.createWAL(MasterRegion.java:211)

at org.apache.hadoop.hbase.master.region.MasterRegion.open(MasterRegion.java:271)

at org.apache.hadoop.hbase.master.region.MasterRegion.create(MasterRegion.java:432)

at org.apache.hadoop.hbase.master.region.MasterRegionFactory.create(MasterRegionFactory.java:135)

at org.apache.hadoop.hbase.master.HMaster.finishActiveMasterInitialization(HMaster.java:981)

at org.apache.hadoop.hbase.master.HMaster.startActiveMasterManager(HMaster.java:2478)

at org.apache.hadoop.hbase.master.HMaster.lambda$run$0(HMaster.java:602)

at org.apache.hadoop.hbase.trace.TraceUtil.lambda$tracedRunnable$2(TraceUtil.java:155)

at java.base/java.lang.Thread.run(Thread.java:833)

Caused by: java.lang.NullPointerException

at java.base/java.util.concurrent.ConcurrentHashMap.putVal(ConcurrentHashMap.java:1011)

at java.base/java.util.concurrent.ConcurrentHashMap.put(ConcurrentHashMap.java:1006)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.closeWriter(AsyncFSWAL.java:778)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.doShutdown(AsyncFSWAL.java:835)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL$2.call(AbstractFSWAL.java:1028)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL$2.call(AbstractFSWAL.java:1023)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

... 1 more

2024-01-27 14:59:46,336 ERROR [master/localhost:16000:becomeActiveMaster] master.HMaster (MarkerIgnoringBase.java:error(159)) - ***** ABORTING master localhost,16000,1706338777398: Unhandled exception. Starting shutdown. *****

java.io.IOException: java.lang.NullPointerException

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.shutdown(AbstractFSWAL.java:1056)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL.close(AbstractFSWAL.java:1083)

at org.apache.hadoop.hbase.wal.AbstractFSWALProvider.getWAL(AbstractFSWALProvider.java:167)

at org.apache.hadoop.hbase.wal.AbstractFSWALProvider.getWAL(AbstractFSWALProvider.java:65)

at org.apache.hadoop.hbase.wal.WALFactory.getWAL(WALFactory.java:391)

at org.apache.hadoop.hbase.master.region.MasterRegion.createWAL(MasterRegion.java:211)

at org.apache.hadoop.hbase.master.region.MasterRegion.open(MasterRegion.java:271)

at org.apache.hadoop.hbase.master.region.MasterRegion.create(MasterRegion.java:432)

at org.apache.hadoop.hbase.master.region.MasterRegionFactory.create(MasterRegionFactory.java:135)

at org.apache.hadoop.hbase.master.HMaster.finishActiveMasterInitialization(HMaster.java:981)

at org.apache.hadoop.hbase.master.HMaster.startActiveMasterManager(HMaster.java:2478)

at org.apache.hadoop.hbase.master.HMaster.lambda$run$0(HMaster.java:602)

at org.apache.hadoop.hbase.trace.TraceUtil.lambda$tracedRunnable$2(TraceUtil.java:155)

at java.base/java.lang.Thread.run(Thread.java:833)

Caused by: java.lang.NullPointerException

at java.base/java.util.concurrent.ConcurrentHashMap.putVal(ConcurrentHashMap.java:1011)

at java.base/java.util.concurrent.ConcurrentHashMap.put(ConcurrentHashMap.java:1006)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.closeWriter(AsyncFSWAL.java:778)

at org.apache.hadoop.hbase.regionserver.wal.AsyncFSWAL.doShutdown(AsyncFSWAL.java:835)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL$2.call(AbstractFSWAL.java:1028)

at org.apache.hadoop.hbase.regionserver.wal.AbstractFSWAL$2.call(AbstractFSWAL.java:1023)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

... 1 more

2024-01-27 14:59:46,337 INFO [master/localhost:16000:becomeActiveMaster] master.HMaster (HMaster.java:stop(3222)) - ***** STOPPING master 'localhost,16000,1706338777398' *****

2024-01-27 14:59:46,337 INFO [master/localhost:16000:becomeActiveMaster] master.HMaster (HMaster.java:stop(3224)) - STOPPED: Stopped by master/localhost:16000:becomeActiveMaster

2024-01-27 14:59:46,338 INFO [master/localhost:16000] hbase.HBaseServerBase (HBaseServerBase.java:stopInfoServer(465)) - Stop info server

2024-01-27 14:59:46,359 INFO [master/localhost:16000] handler.ContextHandler (ContextHandler.java:doStop(1159)) - Stopped o.a.h.t.o.e.j.w.WebAppContext@5a3cf878{master,/,null,STOPPED}{file:/usr/local/develop/hbase-3.0.0-alpha-4/hbase-webapps/master}

2024-01-27 14:59:46,365 INFO [master/localhost:16000] server.AbstractConnector (AbstractConnector.java:doStop(383)) - Stopped ServerConnector@43d9f1a2{HTTP/1.1, (http/1.1)}{0.0.0.0:16010}

2024-01-27 14:59:46,366 INFO [master/localhost:16000] server.session (HouseKeeper.java:stopScavenging(149)) - node0 Stopped scavenging

2024-01-27 14:59:46,366 INFO [master/localhost:16000] handler.ContextHandler (ContextHandler.java:doStop(1159)) - Stopped o.a.h.t.o.e.j.s.ServletContextHandler@181b8c4b{static,/static,file:///usr/local/develop/hbase-3.0.0-alpha-4/hbase-webapps/static/,STOPPED}

2024-01-27 14:59:46,366 INFO [master/localhost:16000] handler.ContextHandler (ContextHandler.java:doStop(1159)) - Stopped o.a.h.t.o.e.j.s.ServletContextHandler@4c1bdcc2{logs,/logs,file:///usr/local/develop/hbase-3.0.0-alpha-4/logs/,STOPPED}

2024-01-27 14:59:46,371 INFO [master/localhost:16000] hbase.HBaseServerBase (HBaseServerBase.java:stopChoreService(435)) - Shutdown chores and chore service

2024-01-27 14:59:46,371 INFO [master/localhost:16000] hbase.ChoreService (ChoreService.java:shutdown(370)) - Chore service for: master/localhost:16000 had [] on shutdown

2024-01-27 14:59:46,371 INFO [master/localhost:16000] hbase.HBaseServerBase (HBaseServerBase.java:stopExecutorService(445)) - Shutdown executor service

2024-01-27 14:59:46,373 WARN [master/localhost:16000] master.ActiveMasterManager (ActiveMasterManager.java:stop(344)) - Failed get of master address: java.io.IOException: Can't get master address from ZooKeeper; znode data == null

2024-01-27 14:59:46,373 INFO [master/localhost:16000] ipc.NettyRpcServer (NettyRpcServer.java:stop(202)) - Stopping server on /127.0.0.1:16000

2024-01-27 14:59:46,380 INFO [master/localhost:16000] hbase.HBaseServerBase (HBaseServerBase.java:closeZooKeeper(476)) - Close zookeeper

2024-01-27 14:59:46,488 INFO [master/localhost:16000] zookeeper.ZooKeeper (ZooKeeper.java:close(1422)) - Session: 0x1002ae462b30006 closed

2024-01-27 14:59:46,488 INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(524)) - EventThread shut down for session: 0x1002ae462b30006

2024-01-27 14:59:46,489 INFO [master/localhost:16000] hbase.HBaseServerBase (HBaseServerBase.java:closeTableDescriptors(483)) - Close table descriptors

2024-01-27 14:59:46,489 ERROR [main] master.HMasterCommandLine (HMasterCommandLine.java:startMaster(260)) - Master exiting

java.lang.RuntimeException: HMaster Aborted

at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:255)

at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:140)

at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3363)

2024-01-27 14:59:46,492 INFO [shutdown-hook-0] regionserver.ShutdownHook (ShutdownHook.java:run(105)) - Shutdown hook starting; hbase.shutdown.hook=true; fsShutdownHook=org.apache.hadoop.fs.FileSystem$Cache$ClientFinalizer@181e72d3

2024-01-27 14:59:46,493 INFO [shutdown-hook-0] regionserver.ShutdownHook (ShutdownHook.java:run(114)) - Starting fs shutdown hook thread.

2024-01-27 14:59:46,493 INFO [shutdown-hook-0] regionserver.ShutdownHook (ShutdownHook.java:run(129)) - Shutdown hook finished

This problem prevented HBase from finishing startup, so I had no choice but to switch to HDFS.

good day !!!