Data warehouse interview: writing zipper table SQL by hand and analyzing how many jobs it runs

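Definition of a zipper table

A zipper table (拉链表) keeps both the historical states and the latest state of each record. Depending on its granularity (for example, one day), it is effectively an optimized snapshot table: rows that have not changed are not stored again. From a zipper table you can easily reconstruct the customer records as of any point in time at that granularity.

A zipper table records the life cycle of each piece of information. When the life cycle of a row ends, a new row is started, and the current date is written into its effective start date.

If a row is still valid today, its effective end date is filled with a very large sentinel value (for example 9999-99-99).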
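Because every row carries its validity interval, a point-in-time snapshot is just a range filter. The sketch below assumes a table shaped like the dim_user_info zipper table created later in this article, with string columns start_date and end_date in yyyy-MM-dd form. The date 2020-06-14 is only an example.

```sql
-- Rows that were valid on 2020-06-14 (as-of snapshot).
-- Plain string comparison is enough here because the dates are
-- zero-padded yyyy-MM-dd strings and '9999-99-99' sorts after any real date.
select *
from dim_user_info
where start_date <= '2020-06-14'
  and end_date   >= '2020-06-14';

-- Rows that are still valid now (open-ended records).
select *
from dim_user_info
where end_date = '9999-99-99';
```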

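User table

Rows in the user table may be added or modified every day, but modifications are infrequent, so the table is a slowly changing dimension. Here the user dimension is stored as a zipper table.

How a zipper table works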

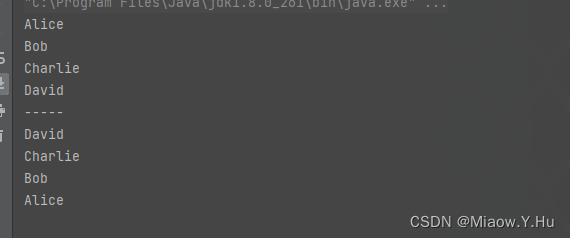

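Step 1: on 2020-01-01 we load the initial user data into the data warehouse table user. The user table has start_time and end_time fields that mark the life cycle of each row. The detail rows are as shown in the figure.

Step 2: on 2020-01-02, user 104 is deleted, users 106 and 107 are added, and the name of user 103 is changed to 小吴. The detail rows are as shown in the figure.

Step 3: on 2020-01-03, user 107 is deleted, the name of user 106 is changed to 八王爷, the name of user 103 is changed to 吴王, and user 108 is added. The detail rows are as shown in the figure.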
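To follow the life cycle described in these steps, one can list every version of a user in start order. This is only a sketch: it assumes the user table from step 1 has an id column next to start_time and end_time (the column name id is an assumption, since the article shows the table only as an image). The backticks are there because user may be a reserved word, depending on the Hive version.

```sql
-- List all versions of users 103 and 106, oldest first.
select *
from `user`
where id in ('103', '106')
order by id, start_time;
```

After step 3 one would expect three versions of user 103 (the initial row plus two renames) and two versions of user 106 (the insert plus one rename).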

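When to use a zipper table

A zipper table fits the case where the data volume is fairly large, some fields change but not often (slowly changing data), and the business still needs statistics on those changes. Storing a full copy of the data every day is impractical: it wastes storage and can also make the statistics awkward to compute. A zipper table saves space and still meets the requirement. Take a user table with 100 million users: storing a full copy every day for 365 days a year is very inefficient, and a user zipper table can be used instead.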
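A rough row count makes the difference concrete. The 1% daily change rate below is a hypothetical figure chosen only for illustration. The article does not give one.

```latex
\begin{aligned}
\text{daily full snapshots:}\quad & 10^{8}\ \text{rows/day} \times 365\ \text{days} = 3.65 \times 10^{10}\ \text{rows} \\
\text{zipper table (assumed 1\% of users change per day):}\quad & 10^{8} + 365 \times \left(0.01 \times 10^{8}\right) = 4.65 \times 10^{8}\ \text{rows}
\end{aligned}
```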

Implementation steps

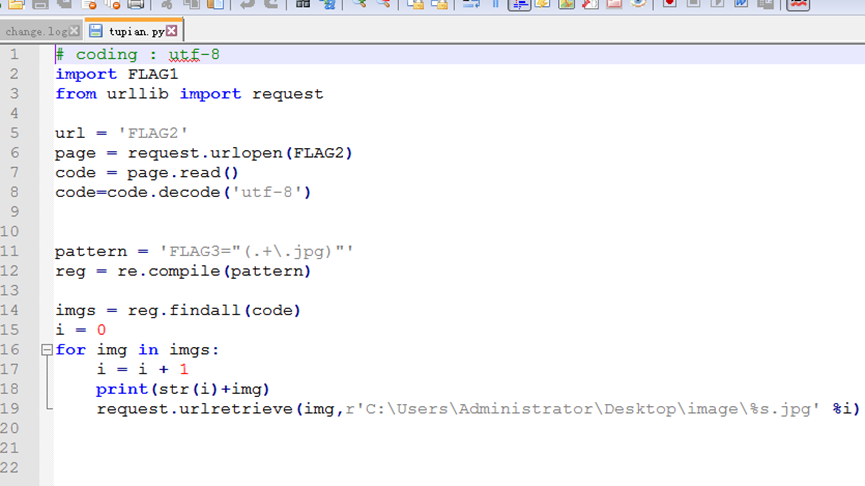

Daily update (the screenshot below is from 尚硅谷)

Source user table (ODS)

DROP TABLE IF EXISTS ods_user_info;

CREATE EXTERNAL TABLE ods_user_info(

`id` STRING COMMENT 'User id',

`login_name` STRING COMMENT 'Login name',

`nick_name` STRING COMMENT 'Nickname',

`name` STRING COMMENT 'Real name',

`phone_num` STRING COMMENT 'Phone number',

`email` STRING COMMENT 'Email',

`user_level` STRING COMMENT 'User level',

`birthday` STRING COMMENT 'Birthday',

`gender` STRING COMMENT 'Gender',

`create_time` STRING COMMENT 'Creation time',

`operate_time` STRING COMMENT 'Operation time'

) COMMENT 'User table'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

Mock data:

ALTER TABLE ods_user_info DROP PARTITION (dt='2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (1, 'user1', 'nick1', '张三', '13800138000', 'zhangsan@example.com', 1, '2000-01-01', '男', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (2, 'user2', 'nick2', '李四', '13900139000', 'lisi@example.com', 2, '2001-01-01', '女', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (3, 'user3', 'nick3', '王五', '13700137000', 'wangwu@example.com', 3, '2002-01-01', '男', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (4, 'user4', 'nick4', '赵六', '13600136000', 'zhaoliu@example.com', 4, '2003-01-01', '女', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (5, 'user5', 'nick5', '孙七', '13500135000', 'sunqi@example.com', 5, '2004-01-01', '男', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (6, 'user6', 'nick6', '周八', '13400134000', 'zhouba@example.com', 6, '2005-01-01', '女', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (7, 'user7', 'nick7', '吴九', '13300133000', 'wujiu@example.com', 7, '2006-01-01', '男', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (8, 'user8', 'nick8', '郑十', '13200132000', 'zhengshi@example.com', 8, '2007-01-01', '女', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (9, 'user9', 'nick9', '冯十一', '13100131000', 'fengshiyi@example.com', 9, '2008-01-01', '男', '2020-06-14', '2020-06-14');

INSERT INTO ods_user_info PARTITION (dt='2020-06-14')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (10, 'user10', 'nick10', '陈十二', '13000130000', 'chenshier@example.com', 10, '2009-01-01', '女', '2020-06-14', '2020-06-14');

select * from ods_user_info;

ALTER TABLE ods_user_info DROP PARTITION (dt='2020-06-15');

INSERT INTO ods_user_info PARTITION (dt='2020-06-15')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (8, 'user8', 'nick8', '郑十san', '13200132000', 'zhengshi@example.com', 8, '2007-01-01', '女', '2020-06-15', '2020-06-15');

INSERT INTO ods_user_info PARTITION (dt='2020-06-15')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (9, 'user9', 'nick9', '冯十wu', '13100131000', 'fengshiyi@example.com', 9, '2008-01-01', '男', '2020-06-15', '2020-06-15');

INSERT INTO ods_user_info PARTITION (dt='2020-06-15')(id, login_name, nick_name, name, phone_num, email, user_level, birthday, gender, create_time, operate_time)

VALUES (10, 'user10', 'nick10', '陈yi二', '13000130000', 'chenshier@example.com', 10, '2009-01-01', '女', '2020-06-15', '2020-06-15');
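A quick sanity check on the mock data, assuming the table was empty before the statements above were run and has no other partitions:

```sql
-- Count rows per partition of the source table.
select dt, count(*) as cnt
from ods_user_info
group by dt;
-- With the inserts above this should give 10 rows for 2020-06-14
-- and 3 rows for 2020-06-15 (the changed users 8, 9 and 10).
```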

Create the zipper table

DROP TABLE IF EXISTS dim_user_info;

CREATE EXTERNAL TABLE dim_user_info(

`id` STRING COMMENT 'User id',

`login_name` STRING COMMENT 'Login name',

`nick_name` STRING COMMENT 'Nickname',

`name` STRING COMMENT 'Real name',

`phone_num` STRING COMMENT 'Phone number',

`email` STRING COMMENT 'Email',

`user_level` STRING COMMENT 'User level',

`birthday` STRING COMMENT 'Birthday',

`gender` STRING COMMENT 'Gender',

`create_time` STRING COMMENT 'Creation time',

`operate_time` STRING COMMENT 'Operation time',

`start_date` STRING COMMENT 'Start date',

`end_date` STRING COMMENT 'End date'

) COMMENT 'User zipper table'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

Initialize the zipper table

insert overwrite table dim_user_info partition(dt='9999-99-99')

select

id,

login_name,

nick_name,

md5(name),

md5(phone_num),

md5(email),

user_level,

birthday,

gender,

create_time,

operate_time,

'2020-06-14',

'9999-99-99'

from ods_user_info

where dt='2020-06-14';
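To confirm the initial load, one could look at the open-ended partition. The check below assumes the mock data from this article and a freshly created dim_user_info table.

```sql
-- Every user from the 2020-06-14 source partition should appear once,
-- with start_date 2020-06-14 and end_date 9999-99-99.
select id, start_date, end_date, dt
from dim_user_info
where dt = '9999-99-99'
order by cast(id as int);
```

With the ten mock users this should return ten rows. The name, phone_num and email columns hold md5 hashes rather than the original values, because the load statement masks them.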

Zipper table SQL implementation
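The statement in this section writes to dim_user_info with a fully dynamic partition (partition(dt) with no static value). Depending on the cluster defaults, Hive may reject that in strict mode, so a session would typically enable non-strict dynamic partitioning first. These settings are not shown in the original article and are given here as an assumption about the environment:

```sql
-- Allow dynamic partitions.
set hive.exec.dynamic.partition=true;
-- Allow all partition columns to be dynamic (no static partition value required).
set hive.exec.dynamic.partition.mode=nonstrict;
```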

with

tmp as

(

select

old.id old_id,

old.login_name old_login_name,

old.nick_name old_nick_name,

old.name old_name,

old.phone_num old_phone_num,

old.email old_email,

old.user_level old_user_level,

old.birthday old_birthday,

old.gender old_gender,

old.create_time old_create_time,

old.operate_time old_operate_time,

old.start_date old_start_date,

old.end_date old_end_date,

new.id new_id,

new.login_name new_login_name,

new.nick_name new_nick_name,

new.name new_name,

new.phone_num new_phone_num,

new.email new_email,

new.user_level new_user_level,

new.birthday new_birthday,

new.gender new_gender,

new.create_time new_create_time,

new.operate_time new_operate_time,

new.start_date new_start_date,

new.end_date new_end_date

from

(

select

id,

login_name,

nick_name,

name,

phone_num,

email,

user_level,

birthday,

gender,

create_time,

operate_time,

start_date,

end_date

from dim_user_info

where dt='9999-99-99'

)old

full outer join

(

select

id,

login_name,

nick_name,

md5(name) name,

md5(phone_num) phone_num,

md5(email) email,

user_level,

birthday,

gender,

create_time,

operate_time,

'2020-06-15' start_date,

'9999-99-99' end_date

from ods_user_info

where dt='2020-06-15'

)new

on old.id=new.id

)

insert overwrite table dim_user_info partition(dt)

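-- Branch 1: for every id keep the newest version (new values win over old ones). These rows stay open in partition 9999-99-99.

select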

nvl(new_id,old_id),

nvl(new_login_name,old_login_name),

nvl(new_nick_name,old_nick_name),

nvl(new_name,old_name),

nvl(new_phone_num,old_phone_num),

nvl(new_email,old_email),

nvl(new_user_level,old_user_level),

nvl(new_birthday,old_birthday),

nvl(new_gender,old_gender),

nvl(new_create_time,old_create_time),

nvl(new_operate_time,old_operate_time),

nvl(new_start_date,old_start_date),

nvl(new_end_date,old_end_date),

nvl(new_end_date,old_end_date) dt

from tmp

union all

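-- Branch 2: ids present on both sides changed on 2020-06-15, so the old version is closed with end_date 2020-06-14 and written to that partition.

select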

old_id,

old_login_name,

old_nick_name,

old_name,

old_phone_num,

old_email,

old_user_level,

old_birthday,

old_gender,

old_create_time,

old_operate_time,

old_start_date,

cast(date_add('2020-06-15',-1) as string),

cast(date_add('2020-06-15',-1) as string) dt

from tmp

where new_id is not null and old_id is not null;

Query the final zipper table data

select * from dim_user_info where dt = '9999-99-99';

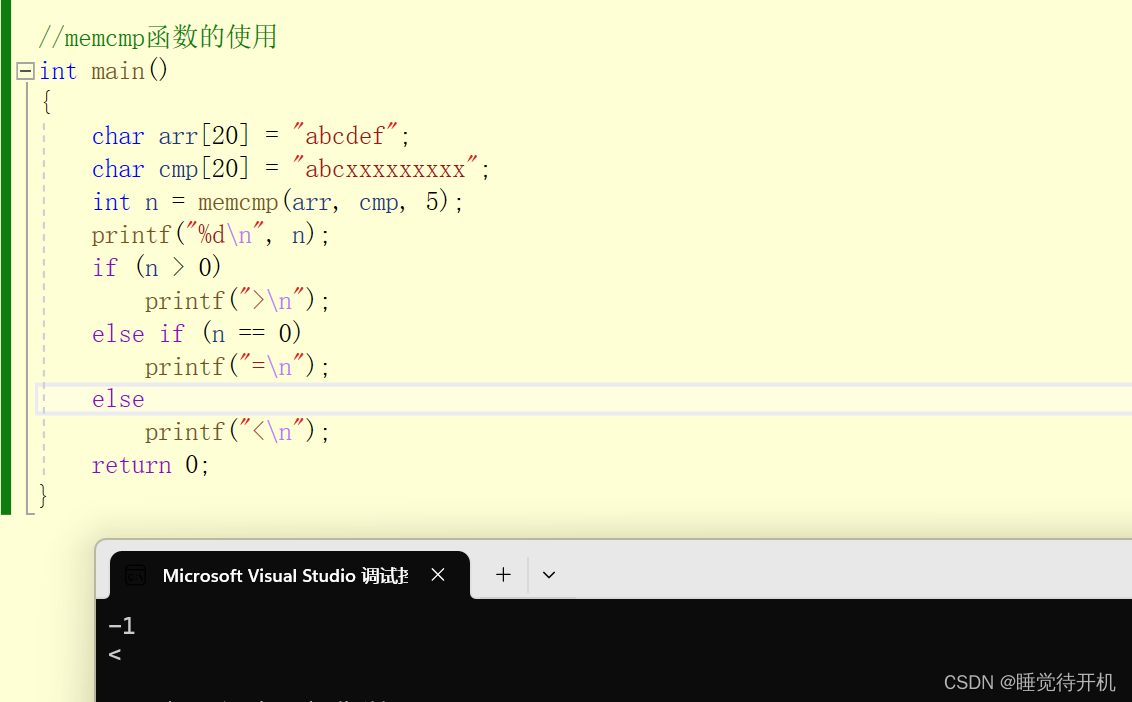
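Beyond the open-ended partition, it can help to check the expired partition as well. The sketch below assumes the table held only the initial load before the zipper statement ran, with the mock data from this article.

```sql
-- Both partitions should now exist.
show partitions dim_user_info;

-- Expected with the mock data: 10 rows in dt=9999-99-99 (current versions)
-- and 3 rows in dt=2020-06-14 (closed versions of users 8, 9 and 10).
select dt, count(*) as cnt
from dim_user_info
group by dt;

-- Full history of one changed user, oldest version first.
select id, name, start_date, end_date
from dim_user_info
where id = '8'
order by start_date;
```

For user 8 this should list two versions: one valid from 2020-06-14 to 2020-06-14 and one valid from 2020-06-15 to 9999-99-99.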

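How stages relate to jobs in Hive

1) EXPLAIN shows clearly how a statement is split into stages.

2) After automatic optimization, some stages are never executed. These are empty stages.

3) To work out the number of jobs from the stage plan, look at which stages actually run. Only stages that need to run are submitted to YARN, where each one becomes a concrete job (application).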
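As a small illustration of point 1, EXPLAIN can be put in front of any query to print its stage plan without running it. The query below is only an example against the ods_user_info table from this article.

```sql
-- Print the stage dependencies and stage plans. Nothing is executed.
explain
select user_level, count(*) as cnt
from ods_user_info
where dt = '2020-06-15'
group by user_level;
```

On the MapReduce engine, the stages labeled Map Reduce in such output are the candidates for YARN jobs. Stages such as Move Operator, Stats-Aggr Operator and Map Reduce Local Work are generally handled without a separate MapReduce job.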

Explain

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-13 depends on stages: Stage-1

Stage-2 depends on stages: Stage-13

Stage-8 depends on stages: Stage-2 , consists of Stage-5, Stage-4, Stage-6

Stage-5

Stage-0 depends on stages: Stage-5, Stage-4, Stage-7

Stage-3 depends on stages: Stage-0

Stage-4

Stage-6

Stage-7 depends on stages: Stage-6

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: dim_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), name (type: string), phone_num (type: string), email (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), start_date (type: string), end_date (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string)

sort order: +

Map-reduce partition columns: _col0 (type: string)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

value expressions: _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), _col12 (type: string)

TableScan

alias: ods_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (dt = '2020-06-15') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), md5(name) (type: string), md5(phone_num) (type: string), md5(email) (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), '2020-06-15' (type: string), '9999-99-99' (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string)

sort order: +

Map-reduce partition columns: _col0 (type: string)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

value expressions: _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), _col12 (type: string)

Reduce Operator Tree:

Join Operator

condition map:

Outer Join 0 to 1

keys:

0 _col0 (type: string)

1 _col0 (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13, _col14, _col15, _col16, _col17, _col18, _col19, _col20, _col21, _col22, _col23, _col24, _col25

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: NVL(_col13,_col0) (type: string), NVL(_col14,_col1) (type: string), NVL(_col15,_col2) (type: string), NVL(_col16,_col3) (type: string), NVL(_col17,_col4) (type: string), NVL(_col18,_col5) (type: string), NVL(_col19,_col6) (type: string), NVL(_col20,_col7) (type: string), NVL(_col21,_col8) (type: string), NVL(_col22,_col9) (type: string), NVL(_col23,_col10) (type: string), NVL(_col24,_col11) (type: string), NVL(_col25,_col12) (type: string), NVL(_col25,_col12) (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazybinary.LazyBinarySerDe

Stage: Stage-13

Map Reduce Local Work

Alias -> Map Local Tables:

null-subquery2:$hdt$_0-subquery2:$hdt$_0:dim_user_info

Fetch Operator

limit: -1

Alias -> Map Local Operator Tree:

null-subquery2:$hdt$_0-subquery2:$hdt$_0:dim_user_info

TableScan

alias: dim_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: id is not null (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), name (type: string), phone_num (type: string), email (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), start_date (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

HashTable Sink Operator

keys:

0 _col0 (type: string)

1 _col0 (type: string)

Stage: Stage-2

Map Reduce

Map Operator Tree:

TableScan

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: zmm.dim_user_info

TableScan

alias: ods_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '2020-06-15') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Map Join Operator

condition map:

Inner Join 0 to 1

keys:

0 _col0 (type: string)

1 _col0 (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: _col0 (type: string), _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), '2020-06-14' (type: string), '2020-06-14' (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: zmm.dim_user_info

Local Work:

Map Reduce Local Work

Stage: Stage-8

Conditional Operator

Stage: Stage-5

Move Operator

files:

hdfs directory: true

destination: hdfs://192.168.0.122:9000/user/hive/warehouse/zmm.db/dim_user_info/.hive-staging_hive_2023-09-28_16-48-39_357_1852508236922488629-1/-ext-10000

Stage: Stage-0

Move Operator

tables:

partition:

dt

replace: true

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: zmm.dim_user_info

Stage: Stage-3

Stats-Aggr Operator

Stage: Stage-4

Map Reduce

Map Operator Tree:

TableScan

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: zmm.dim_user_info

Stage: Stage-6

Map Reduce

Map Operator Tree:

TableScan

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: zmm.dim_user_info

Stage: Stage-7

Move Operator

files:

hdfs directory: true

destination: hdfs://192.168.0.122:9000/user/hive/warehouse/zmm.db/dim_user_info/.hive-staging_hive_2023-09-28_16-48-39_357_1852508236922488629-1/-ext-10000
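Reading the plan above with those three points in mind: the stages marked Map Reduce are Stage-1, Stage-2, Stage-4 and Stage-6. Stage-4 and Stage-6 sit behind the Conditional Operator of Stage-8 together with Stage-5, so at most one of those branches is expected to run, and Stage-13 is Map Reduce Local Work. One way to see how much of this comes from automatic optimization is to switch the related features off and run EXPLAIN on the zipper statement again. The sketch below assumes the conditional branches come from Hive's map-join conversion and small-file merging. That is the usual cause but is not stated in the original article.

```sql
-- Keep joins as common (reduce-side) joins instead of converting them to map joins.
set hive.auto.convert.join=false;
-- Do not add a merge step after map-only jobs.
set hive.merge.mapfiles=false;
-- Do not add a merge step after map-reduce jobs.
set hive.merge.mapredfiles=false;
```

Comparing the stage list before and after these switches shows which stages exist only because of the optimizer.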

Explain

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-2 depends on stages: Stage-1, Stage-10, Stage-12, Stage-13

Stage-8 depends on stages: Stage-2 , consists of Stage-5, Stage-4, Stage-6

Stage-5

Stage-0 depends on stages: Stage-5, Stage-4, Stage-7

Stage-3 depends on stages: Stage-0

Stage-4

Stage-6

Stage-7 depends on stages: Stage-6

Stage-14 is a root stage , consists of Stage-15, Stage-16, Stage-10

Stage-15 has a backup stage: Stage-10

Stage-12 depends on stages: Stage-15

Stage-16 has a backup stage: Stage-10

Stage-13 depends on stages: Stage-16

Stage-10

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: dim_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (dt = '9999-99-99') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), name (type: string), phone_num (type: string), email (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), start_date (type: string), end_date (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string)

sort order: +

Map-reduce partition columns: _col0 (type: string)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

value expressions: _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), _col12 (type: string)

TableScan

alias: ods_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (dt = '2020-06-15') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), md5(name) (type: string), md5(phone_num) (type: string), md5(email) (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), '2020-06-15' (type: string), '9999-99-99' (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string)

sort order: +

Map-reduce partition columns: _col0 (type: string)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

value expressions: _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), _col12 (type: string)

Reduce Operator Tree:

Join Operator

condition map:

Outer Join 0 to 1

keys:

0 _col0 (type: string)

1 _col0 (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13, _col14, _col15, _col16, _col17, _col18, _col19, _col20, _col21, _col22, _col23, _col24, _col25

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: NVL(_col13,_col0) (type: string), NVL(_col14,_col1) (type: string), NVL(_col15,_col2) (type: string), NVL(_col16,_col3) (type: string), NVL(_col17,_col4) (type: string), NVL(_col18,_col5) (type: string), NVL(_col19,_col6) (type: string), NVL(_col20,_col7) (type: string), NVL(_col21,_col8) (type: string), NVL(_col22,_col9) (type: string), NVL(_col23,_col10) (type: string), NVL(_col24,_col11) (type: string), NVL(_col25,_col12) (type: string), NVL(_col25,_col12) (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazybinary.LazyBinarySerDe

Stage: Stage-2

Map Reduce

Map Operator Tree:

TableScan

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

output format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

serde: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

name: zmm.dim_user_info

TableScan

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

output format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

serde: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

name: zmm.dim_user_info

Stage: Stage-8

Conditional Operator

Stage: Stage-5

Move Operator

files:

hdfs directory: true

destination: hdfs://192.168.0.122:9000/warehouse/gmall/dim/dim_user_info/.hive-staging_hive_2023-09-28_16-23-09_282_7816820202500856515-1/-ext-10000

Stage: Stage-0

Move Operator

tables:

partition:

dt

replace: true

table:

input format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

output format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

serde: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

name: zmm.dim_user_info

Stage: Stage-3

Stats-Aggr Operator

Stage: Stage-4

Map Reduce

Map Operator Tree:

TableScan

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

output format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

serde: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

name: zmm.dim_user_info

Stage: Stage-6

Map Reduce

Map Operator Tree:

TableScan

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

output format: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

serde: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

name: zmm.dim_user_info

Stage: Stage-7

Move Operator

files:

hdfs directory: true

destination: hdfs://192.168.0.122:9000/warehouse/gmall/dim/dim_user_info/.hive-staging_hive_2023-09-28_16-23-09_282_7816820202500856515-1/-ext-10000

Stage: Stage-14

Conditional Operator

Stage: Stage-15

Map Reduce Local Work

Alias -> Map Local Tables:

null-subquery2:$hdt$_0-subquery2:$hdt$_1:ods_user_info

Fetch Operator

limit: -1

Alias -> Map Local Operator Tree:

null-subquery2:$hdt$_0-subquery2:$hdt$_1:ods_user_info

TableScan

alias: ods_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '2020-06-15') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

HashTable Sink Operator

keys:

0 _col0 (type: string)

1 _col0 (type: string)

Stage: Stage-12

Map Reduce

Map Operator Tree:

TableScan

alias: dim_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '9999-99-99') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), name (type: string), phone_num (type: string), email (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), start_date (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Map Join Operator

condition map:

Inner Join 0 to 1

keys:

0 _col0 (type: string)

1 _col0 (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: _col0 (type: string), _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), '2020-06-14' (type: string), '2020-06-14' (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazybinary.LazyBinarySerDe

Local Work:

Map Reduce Local Work

Stage: Stage-16

Map Reduce Local Work

Alias -> Map Local Tables:

null-subquery2:$hdt$_0-subquery2:$hdt$_0:dim_user_info

Fetch Operator

limit: -1

Alias -> Map Local Operator Tree:

null-subquery2:$hdt$_0-subquery2:$hdt$_0:dim_user_info

TableScan

alias: dim_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '9999-99-99') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), name (type: string), phone_num (type: string), email (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), start_date (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

HashTable Sink Operator

keys:

0 _col0 (type: string)

1 _col0 (type: string)

Stage: Stage-13

Map Reduce

Map Operator Tree:

TableScan

alias: ods_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '2020-06-15') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Map Join Operator

condition map:

Inner Join 0 to 1

keys:

0 _col0 (type: string)

1 _col0 (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: _col0 (type: string), _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), '2020-06-14' (type: string), '2020-06-14' (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazybinary.LazyBinarySerDe

Local Work:

Map Reduce Local Work

Stage: Stage-10

Map Reduce

Map Operator Tree:

TableScan

alias: dim_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '9999-99-99') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string), login_name (type: string), nick_name (type: string), name (type: string), phone_num (type: string), email (type: string), user_level (type: string), birthday (type: string), gender (type: string), create_time (type: string), operate_time (type: string), start_date (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string)

sort order: +

Map-reduce partition columns: _col0 (type: string)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

value expressions: _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string)

TableScan

alias: ods_user_info

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: ((dt = '2020-06-15') and id is not null) (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string)

sort order: +

Map-reduce partition columns: _col0 (type: string)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Reduce Operator Tree:

Join Operator

condition map:

Inner Join 0 to 1

keys:

0 _col0 (type: string)

1 _col0 (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: _col0 (type: string), _col1 (type: string), _col2 (type: string), _col3 (type: string), _col4 (type: string), _col5 (type: string), _col6 (type: string), _col7 (type: string), _col8 (type: string), _col9 (type: string), _col10 (type: string), _col11 (type: string), '2020-06-14' (type: string), '2020-06-14' (type: string)

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7, _col8, _col9, _col10, _col11, _col12, _col13

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazybinary.LazyBinarySerDe
