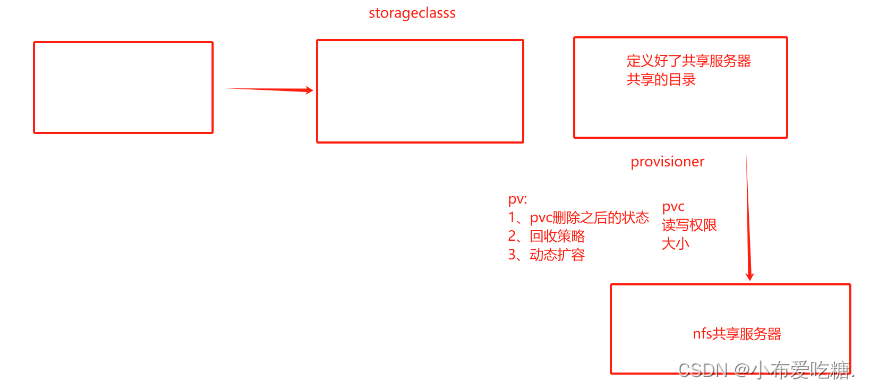

Dynamic PV provisioning needs two components:

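1. A volume plugin. Kubernetes' built-in dynamic PV provisioning does not cover NFS, so an external plugin has to be declared and installed.

The provisioner (storage allocator) creates PVs dynamically. The PVs are then bound to and used by PVCs according to the PVCs' requests.

2. A StorageClass, which defines the properties of the PVs: the storage type (through its provisioner and parameters), the reclaim policy, and so on. The size is not part of the StorageClass; each PVC requests its own.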

Here NFS is used to implement dynamic PVs. For NFS the provisioner works in nfs-client mode, i.e. the volume plugin is nfs-client-provisioner.
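To make the division of labor concrete, here is a toy, in-memory model of one provisioning pass in Python. It is a sketch, not the real controller, and the class and field names are hypothetical. In a real cluster the provisioner creates a PV that points at the claim, and the PV controller in kube-controller-manager completes the binding; this model collapses both into a single step. What it does show is how the two components cooperate: the StorageClass names a provisioner, only that provisioner creates PVs for claims of that class, and each new PV takes the class's reclaim policy.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class StorageClass:
    name: str
    provisioner: str                # must equal the provisioner's own name
    reclaim_policy: str = "Delete"  # Kubernetes default when unset


@dataclass
class PVC:
    namespace: str
    name: str
    storage_class: str
    size: str
    volume: Optional[str] = None    # bound PV name; None while Pending


@dataclass
class PV:
    name: str
    size: str
    reclaim_policy: str
    claim: str                      # "namespace/name" of the claim it serves


def provision(pvcs: List[PVC], classes: Dict[str, StorageClass],
              my_name: str) -> List[PV]:
    """One pass of a toy provisioner: create a PV for each pending claim
    whose StorageClass names this provisioner, and bind the two."""
    created = []
    for pvc in pvcs:
        sc = classes.get(pvc.storage_class)
        # ignore bound claims and claims meant for other provisioners
        if pvc.volume is not None or sc is None or sc.provisioner != my_name:
            continue
        pv = PV(name=f"pvc-{uuid.uuid4()}",  # real PVs use the claim's UID
                size=pvc.size,
                reclaim_policy=sc.reclaim_policy,
                claim=f"{pvc.namespace}/{pvc.name}")
        pvc.volume = pv.name                  # bind claim and volume
        created.append(pv)
    return created


classes = {"nfs-client-storageclass":
           StorageClass("nfs-client-storageclass", "nfs-storage")}
claims = [PVC("default", "nfs-pvc", "nfs-client-storageclass", "2Gi"),
          PVC("default", "other", "some-other-class", "1Gi")]
for pv in provision(claims, classes, my_name="nfs-storage"):
    print(pv.name, pv.size, pv.reclaim_policy, pv.claim)
```

A second pass over the same claims creates nothing, because the first claim is already bound and the second belongs to a different class.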

Deploying dynamic PVs

1. Set up the NFS share on the Harbor host

Go to the /opt directory:

mkdir k8s

chmod 777 k8s

vim /etc/exports

/opt/k8s 20.0.0.0/24(rw,no_root_squash,sync)

systemctl restart rpcbind

systemctl restart nfs
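The export line gives the 20.0.0.0/24 network three things. `rw` grants read-write access. `no_root_squash` lets root on a client act as root on the share instead of being mapped to an anonymous user. `sync` makes the server commit writes before it replies. The Python sketch below is a hypothetical helper, not part of any NFS tooling. It splits this simple one-client form of an exports entry into its parts and checks whether a node address falls inside the exported network, which shows why a client outside 20.0.0.0/24 would be refused. It does not handle the full exports(5) grammar (multiple clients, wildcards, hostnames).

```python
import ipaddress


def parse_export(line: str):
    """Split a 'path network(opt,opt,...)' exports entry into its parts."""
    path, client = line.split(None, 1)
    host, _, opts = client.partition("(")
    options = opts.rstrip(")").split(",") if opts else []
    return path, ipaddress.ip_network(host.strip()), options


def client_allowed(line: str, client_ip: str) -> bool:
    """True if client_ip lies inside the network the entry exports to."""
    _, network, _ = parse_export(line)
    return ipaddress.ip_address(client_ip) in network


entry = "/opt/k8s 20.0.0.0/24(rw,no_root_squash,sync)"
print(parse_export(entry))
print(client_allowed(entry, "20.0.0.73"))     # inside 20.0.0.0/24
print(client_allowed(entry, "192.168.1.10"))  # outside, would be refused
```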

Test on the NFS server itself:

[root@k8s4 opt]# showmount -e

Export list for k8s4:

/opt/k8s 20.0.0.0/24

Test from a node:

[root@node02 ~]# showmount -e 20.0.0.73

Export list for 20.0.0.73:

/opt/k8s 20.0.0.0/24

2. On the master node, deploy the ServiceAccount, the NFS provisioner, and the StorageClass

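Deploying the ServiceAccount

The NFS provisioner is a plugin. Without permissions it cannot obtain information from the cluster, so it must be allowed to watch the API server and to get and list resources.

RBAC (Role-Based Access Control) defines which permissions a role may use in the cluster.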

vim nfs-client-rbac.yaml

```yaml
# ServiceAccount the provisioner pod runs as
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
# Permissions granted to the role
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-client-provisioner-role
rules:
  # apiGroups selects the API group the rule applies to;
  # the empty string "" means the core API group
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    # verbs are the actions the rule allows
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["watch", "get", "list", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  # access to events
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nfs-client-provisioner-bind
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-role
  apiGroup: rbac.authorization.k8s.io
```
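RBAC rules only ever grant. A request is allowed when at least one rule matches its API group, resource, and verb, and anything not listed is denied. The Python sketch below copies the ClusterRole rules above as data to illustrate that evaluation. It is a model, not the API server's authorizer. On a live cluster the same question can be asked with `kubectl auth can-i`, e.g. `kubectl auth can-i create persistentvolumes --as=system:serviceaccount:default:nfs-client-provisioner`.

```python
# Rules copied from the ClusterRole above: (apiGroups, resources, verbs)
RULES = [
    ([""], ["persistentvolumes"], ["get", "list", "watch", "create", "delete"]),
    ([""], ["persistentvolumeclaims"], ["watch", "get", "list", "update"]),
    (["storage.k8s.io"], ["storageclasses"], ["get", "list", "watch"]),
    ([""], ["events"], ["list", "watch", "create", "update", "patch"]),
    ([""], ["endpoints"],
     ["create", "delete", "get", "list", "watch", "patch", "update"]),
]


def allowed(group: str, resource: str, verb: str) -> bool:
    """Allow-only RBAC model: pass if any single rule matches the API group,
    resource and verb ("*" acts as a wildcard in any field)."""
    def hit(values, item):
        return "*" in values or item in values
    return any(hit(g, group) and hit(r, resource) and hit(v, verb)
               for g, r, v in RULES)


print(allowed("", "persistentvolumes", "create"))   # provisioner creates PVs
print(allowed("", "persistentvolumes", "update"))   # not granted above
print(allowed("storage.k8s.io", "storageclasses", "watch"))
```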

Deploying the NFS provisioner (the plugin):

The NFS provisioner is deployed as a Deployment, which creates the plugin pod.

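Since Kubernetes 1.20 there is a new behavior: selfLink is removed by default.

selfLink is a field of API resource objects that gives the object's own link in the cluster. It is a unique identifier for each resource object in the cluster. Its value is a URL path pointing to the object in the Kubernetes API, which makes objects easier to look up and reference.

The nfs-client-provisioner image deployed below still relies on selfLink, so on 1.20 and later it cannot provision volumes until selfLink is turned back on with the feature gate shown next. This workaround applies only up to 1.23: from 1.24 the RemoveSelfLink gate is locked to true and can no longer be disabled.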
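The path that selfLink carried follows the standard layout of the Kubernetes REST API. Core-group objects live under /api/v1, objects of every other group live under /apis/<group>/<version>, and namespaced objects get a namespaces/<ns> segment. A small Python sketch that rebuilds such a path (the helper name is hypothetical):

```python
from typing import Optional


def self_link(group: str, version: str, plural: str, name: str,
              namespace: Optional[str] = None) -> str:
    """Build the API path that an object's selfLink used to contain."""
    # The core group ("") is served under /api, all others under /apis/<group>
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    # Namespaced objects get /namespaces/<ns>; cluster-scoped ones do not
    if namespace:
        base += f"/namespaces/{namespace}"
    return f"{base}/{plural}/{name}"


print(self_link("", "v1", "persistentvolumeclaims", "nfs-pvc", "default"))
print(self_link("storage.k8s.io", "v1", "storageclasses",
                "nfs-client-storageclass"))
```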

vim /etc/kubernetes/manifests/kube-apiserver.yaml

```yaml
...
spec:
  containers:
  - command:
    - kube-apiserver
    - --feature-gates=RemoveSelfLink=false
    - --advertise-address=20.0.0.70
    ...
```

The line to add is:

- --feature-gates=RemoveSelfLink=false

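Feature gates are a mechanism for introducing new features or changing existing ones without breaking existing rules and functionality. Setting RemoveSelfLink to false turns the selfLink removal off, so the earlier behavior is kept.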
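The flag value is a comma-separated list of Name=true|false pairs, so several gates can be set with one flag. Below is a hypothetical Python helper that reads such a flag into a dictionary. It is a sketch that accepts only the words true and false, which is narrower than what kube-apiserver itself parses.

```python
def parse_feature_gates(flag: str) -> dict:
    """Turn '--feature-gates=A=true,B=false' into {'A': True, 'B': False}."""
    _, _, value = flag.partition("--feature-gates=")
    gates = {}
    for pair in filter(None, value.split(",")):
        name, _, state = pair.partition("=")
        state = state.strip().lower()
        if state not in ("true", "false"):
            raise ValueError(f"gate {name!r} needs true or false, got {state!r}")
        gates[name.strip()] = state == "true"
    return gates


print(parse_feature_gates("--feature-gates=RemoveSelfLink=false"))
```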

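Create a new API server:

kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml

Delete the old API server:

kubectl delete pod -n kube-system kube-apiserver

Note that kube-apiserver runs as a static pod. The kubelet watches /etc/kubernetes/manifests and recreates kube-apiserver-<node name> by itself once the edited file is saved. The apply command creates an additional, ordinary pod named kube-apiserver from the same manifest, which is why that pod is deleted again afterwards.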

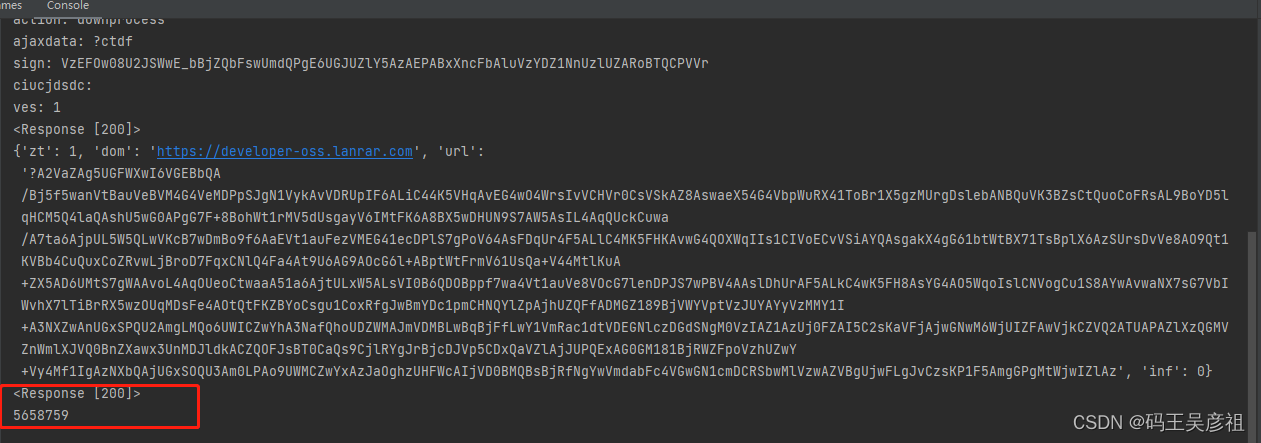

```text
[root@master01 opt]# kubectl get pod -n kube-system
NAME                               READY   STATUS    RESTARTS   AGE
coredns-7f89b7bc75-vhmhn           1/1     Running   1          2d1h
coredns-7f89b7bc75-vrsqz           1/1     Running   0          2d2h
etcd-master01                      1/1     Running   1          13d
kube-apiserver-master01            1/1     Running   0          3h45m
kube-controller-manager-master01   1/1     Running   11         13d
kube-flannel-ds-btmh8              1/1     Running   1          13d
kube-flannel-ds-kpfhw              1/1     Running   0          2d1h
kube-flannel-ds-nn558              1/1     Running   1          2d2h
kube-proxy-46rbj                   1/1     Running   1          13d
kube-proxy-khngm                   1/1     Running   1          13d
kube-proxy-lq8lh                   1/1     Running   1          13d
kube-scheduler-master01            1/1     Running   11         13d
```

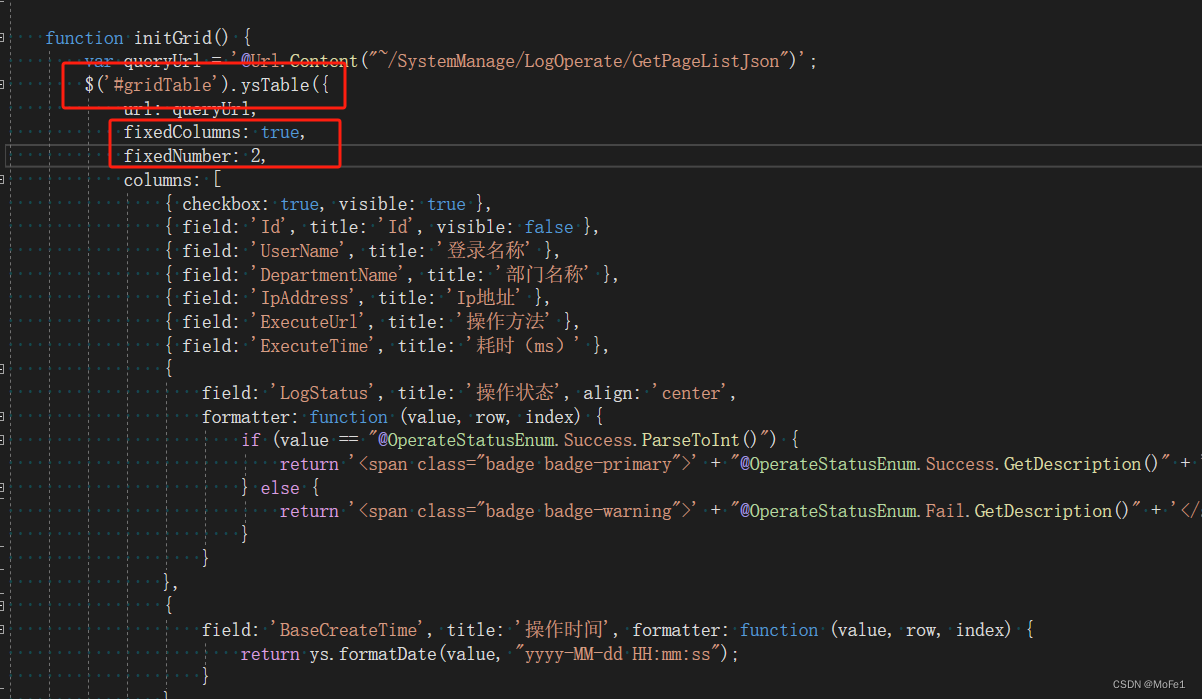

The provisioner's YAML file:

vim nfs-client-provisioner.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-provisioner
  labels:
    app: nfs1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs1
  template:
    metadata:
      labels:
        app: nfs1
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs1
        image: quay.io/external_storage/nfs-client-provisioner:latest
        volumeMounts:
        - name: nfs
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          # name this provisioner registers under; it must match the
          # `provisioner` field of the StorageClass
          value: nfs-storage
        - name: NFS_SERVER
          # address of the NFS server
          value: 20.0.0.73
        - name: NFS_PATH
          value: /opt/k8s
      volumes:
      - name: nfs
        nfs:
          server: 20.0.0.73
          path: /opt/k8s
```

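Deploying the nfs-provisioner plugin:

The NFS provisioner client runs in the cluster as a pod. It watches the cluster for PV requests (PVCs) and dynamically creates PVs backed by the NFS server.

The configuration the container uses is defined as environment variables in the provisioner Deployment and passed to the container. These are the provisioner name (which the StorageClass refers to), the NFS server address, and the NFS directory.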
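Two settings have to line up. PROVISIONER_NAME must equal the `provisioner` field of the StorageClass created in the next step. NFS_SERVER and NFS_PATH should describe the same export as the nfs volume mounted at /persistentvolumes. A quick consistency check can therefore catch typos before deploying. The sketch below is hypothetical and works on trimmed Python dicts copied from this post's manifests. In practice the dicts could be loaded from the YAML files with a parser such as PyYAML's `yaml.safe_load_all`. The function returns a list of mismatches.

```python
def check_provisioner(deployment: dict, storage_class: dict) -> list:
    """List mismatches between the provisioner Deployment and a StorageClass."""
    pod = deployment["spec"]["template"]["spec"]
    env = {e["name"]: e.get("value") for e in pod["containers"][0].get("env", [])}
    # the nfs volume that the container mounts at /persistentvolumes
    nfs = next(v["nfs"] for v in pod.get("volumes", []) if "nfs" in v)
    problems = []
    if env.get("PROVISIONER_NAME") != storage_class.get("provisioner"):
        problems.append("PROVISIONER_NAME != StorageClass.provisioner")
    if env.get("NFS_SERVER") != nfs["server"]:
        problems.append("NFS_SERVER != volumes[].nfs.server")
    if env.get("NFS_PATH") != nfs["path"]:
        problems.append("NFS_PATH != volumes[].nfs.path")
    return problems


# Trimmed copies of the two manifests in this post, as Python dicts
deployment = {"spec": {"template": {"spec": {
    "containers": [{"name": "nfs1", "env": [
        {"name": "PROVISIONER_NAME", "value": "nfs-storage"},
        {"name": "NFS_SERVER", "value": "20.0.0.73"},
        {"name": "NFS_PATH", "value": "/opt/k8s"},
    ]}],
    "volumes": [{"name": "nfs",
                 "nfs": {"server": "20.0.0.73", "path": "/opt/k8s"}}],
}}}}
storage_class = {"metadata": {"name": "nfs-client-storageclass"},
                 "provisioner": "nfs-storage"}

print(check_provisioner(deployment, storage_class))
```

Pointing `provisioner` at the StorageClass's own name (nfs-client-storageclass) instead of nfs-storage is exactly the kind of mismatch this reports.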

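Deploying the StorageClass (which defines the PVs' storage):

vim nfs-client-storageclass.yaml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client-storageclass
# must match PROVISIONER_NAME of the provisioner
provisioner: nfs-storage
parameters:
  # what nfs-client-provisioner does with the backing directory when it
  # deletes a PV: "false" removes the directory, "true" keeps the data
  # in a directory renamed to archived-<name>
  archiveOnDelete: "false"
# reclaim policy of the provisioned PVs: Delete or Retain
# (Recycle is not supported for dynamically provisioned PVs)
reclaimPolicy: Delete
# allow PVCs of this class to be expanded later (shrinking is not supported)
allowVolumeExpansion: true
```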
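The archiveOnDelete setting can be made concrete. As far as I know, nfs-client-provisioner (a Go program) handles a PV deletion by either removing the PV's directory or renaming it with an `archived-` prefix. The Python below only mimics that behavior on a temporary directory with made-up directory names. Note that the provisioner reaches this step only when the reclaim policy is Delete; with Retain, the PV and its data are left alone.

```python
import os
import shutil
import tempfile


def delete_volume(root: str, dir_name: str, archive_on_delete: bool) -> None:
    """Mimic what happens to a PV's directory under the export root."""
    path = os.path.join(root, dir_name)
    if not os.path.exists(path):
        return  # nothing to clean up
    if archive_on_delete:
        # keep the data, just move it aside under an "archived-" prefix
        os.rename(path, os.path.join(root, "archived-" + dir_name))
    else:
        shutil.rmtree(path)  # data is removed for good


with tempfile.TemporaryDirectory() as root:
    for name in ("default-a-pvc-1", "default-b-pvc-2"):
        os.makedirs(os.path.join(root, name))
    delete_volume(root, "default-a-pvc-1", archive_on_delete=False)
    delete_volume(root, "default-b-pvc-2", archive_on_delete=True)
    print(sorted(os.listdir(root)))
```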

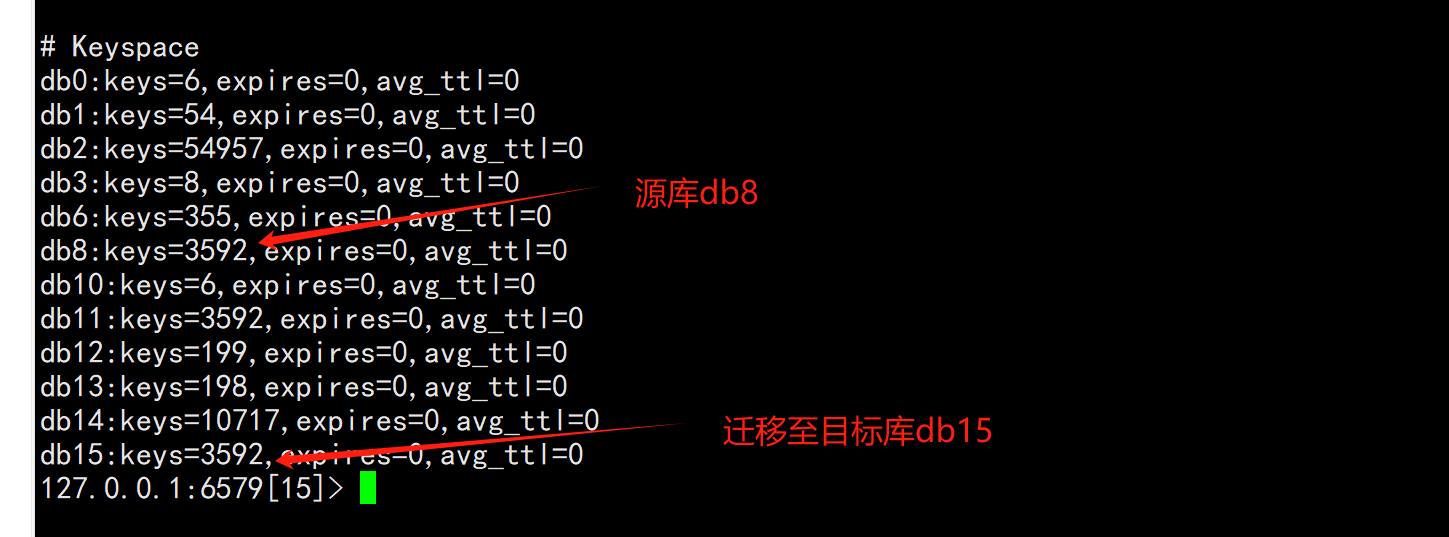

Check the StorageClass:

```text
[root@master01 opt]# kubectl get storageclasses.storage.k8s.io
NAME                      PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-client-storageclass   nfs-storage   Retain          Immediate           true                   16s
```

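NAME: the name of the StorageClass.

PROVISIONER: the provisioner plugin that creates PVs for this class.

RECLAIMPOLICY: the reclaim policy, Retain (keep) in this output. The manifest above sets Delete, so this class was evidently created with reclaimPolicy: Retain.

VOLUMEBINDINGMODE: the volume binding mode. Immediate (the default, since the manifest does not set volumeBindingMode) provisions and binds a PV as soon as the PVC is created. WaitForFirstConsumer waits until the first pod that uses the PVC is created before binding.

ALLOWVOLUMEEXPANSION: true means the PV can be expanded while it is in use.

The relationship among the three: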

The default reclaim policy for dynamically provisioned PVs (when the StorageClass does not set one) is Delete.
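The PVC that triggered provisioning is not shown in this post. The PV listing that follows shows claim default/nfs-pvc, 2Gi, RWX, and class nfs-client-storageclass, so the PVC would look roughly like the manifest this Python sketch prints. The sketch prints JSON, which kubectl also accepts, for example `python3 make_pvc.py | kubectl apply -f -` (make_pvc.py is a hypothetical file name for this script). The storageClassName field is what routes the claim to the nfs-storage provisioner.

```python
import json


def pvc_manifest(name: str, size: str, storage_class: str,
                 namespace: str = "default",
                 access_mode: str = "ReadWriteMany") -> dict:
    """Build a PersistentVolumeClaim that asks a StorageClass for a new PV."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": [access_mode],
            # the class name selects which provisioner creates the PV
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_manifest("nfs-pvc", "2Gi", "nfs-client-storageclass"),
                     indent=2))
```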

Check the PV:

```text
[root@master01 opt]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS              REASON   AGE
pvc-da0b10f1-be7c-4553-9eb6-edd07b573058   2Gi        RWX            Retain           Bound    default/nfs-pvc   nfs-client-storageclass            169m
```

Check the shared directory on the NFS server:

[root@k8s4 k8s]# ls

default-nfs-pvc-pvc-da0b10f1-be7c-4553-9eb6-edd07b573058

[root@k8s4 default-nfs-pvc-pvc-da0b10f1-be7c-4553-9eb6-edd07b573058]# echo 123 > index.html
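The directory name follows the pattern <namespace>-<pvc name>-<pv name>, which is how nfs-client-provisioner names the subdirectory it creates for each PV under the export. A one-line Python sketch (the helper is hypothetical) reproduces the directory listed above from the values in the PV table:

```python
def nfs_dir_name(namespace: str, pvc_name: str, pv_name: str) -> str:
    """Subdirectory name nfs-client-provisioner uses for a PV."""
    return "-".join([namespace, pvc_name, pv_name])


print(nfs_dir_name("default", "nfs-pvc", "pvc-da0b10f1-be7c-4553-9eb6-edd07b573058"))
```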

Access the pod that mounts nfs-pvc (its manifest is not shown here; 10.244.2.63 is its pod IP):

[root@master01 opt]# curl 10.244.2.63

123