Preface

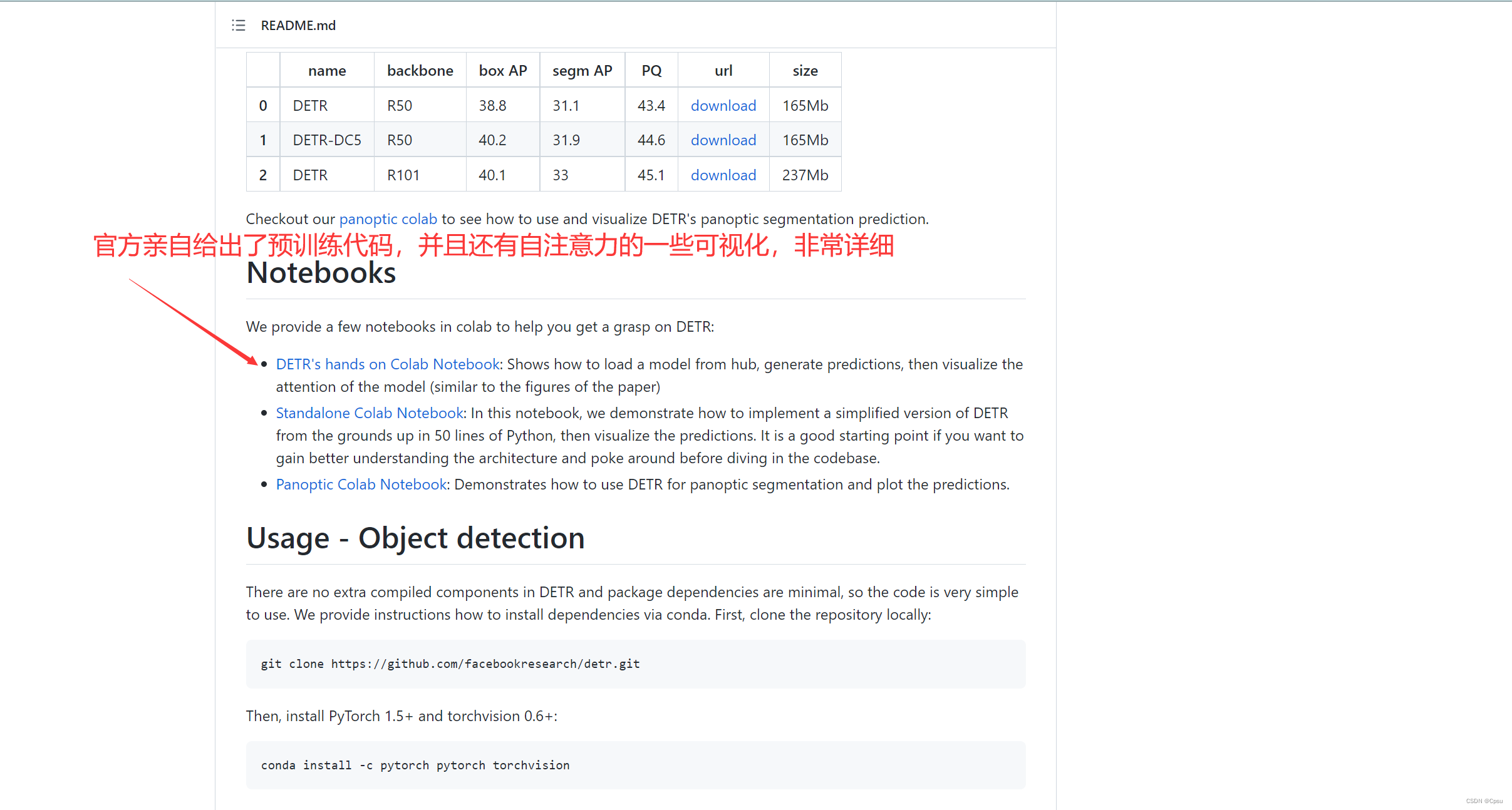

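Here I share the official DETR inference code, which is the Jupyter notebook published in the official DETR GitHub repository. Reaching it may require a proxy, so I reproduce it here. Because DETR is hard to train, I think it is worth using the official pretrained model to see how it performs.

If you want to run inference with a model you trained yourself, see my article: Training DETR on Your Own Dataset.

Note that this is the simplified demo model, i.e. the code printed on the last page of the DETR paper. Its mAP is roughly 2-3 points lower than the full source implementation, but it is still strong. About 50 lines of code are enough to lay out the whole DETR architecture, which makes it a remarkably concise model.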

1. Running the code in Jupyter Notebook

If you are using Jupyter Notebook, use the code below; you only need to change the image path at the end.

```python
%config InlineBackend.figure_format = 'svg'

import cv2
import matplotlib.pyplot as plt
import torch
import torchvision.transforms as T
from PIL import Image
from torch import nn
from torchvision.models import resnet50

# inference only, so gradient tracking is switched off globally
torch.set_grad_enabled(False)
```

```python
# COCO classes
CLASSES = [
    'N/A', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',
    'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A',
    'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse',
    'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack',
    'umbrella', 'N/A', 'N/A', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis',
    'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove',
    'skateboard', 'surfboard', 'tennis racket', 'bottle', 'N/A', 'wine glass',
    'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich',
    'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake',
    'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table', 'N/A',
    'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard',
    'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A',
    'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier',
    'toothbrush'
]

# colors for visualization
COLORS = [[0.000, 0.447, 0.741], [0.850, 0.325, 0.098], [0.929, 0.694, 0.125],
          [0.494, 0.184, 0.556], [0.466, 0.674, 0.188], [0.301, 0.745, 0.933]]

# standard PyTorch mean-std input image normalization
transform = T.Compose([
    T.Resize(800),
    T.ToTensor(),
    T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])
```

```python
# for output bounding box post-processing
def box_cxcywh_to_xyxy(x):
    x_c, y_c, w, h = x.unbind(1)
    b = [(x_c - 0.5 * w), (y_c - 0.5 * h),
         (x_c + 0.5 * w), (y_c + 0.5 * h)]
    return torch.stack(b, dim=1)

def rescale_bboxes(out_bbox, size):
    img_w, img_h = size
    b = box_cxcywh_to_xyxy(out_bbox)
    b = b * torch.tensor([img_w, img_h, img_w, img_h], dtype=torch.float32)
    return b
```
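
To make the box post-processing concrete, here is a small pure-Python sketch of the same arithmetic for a single box. The names `cxcywh_to_xyxy_single` and `rescale_box` are hypothetical helpers written for this illustration, not part of the official notebook; the model's boxes are normalized `(center_x, center_y, width, height)` values in [0, 1].

```python
def cxcywh_to_xyxy_single(box):
    """Convert one (center_x, center_y, width, height) box to (xmin, ymin, xmax, ymax)."""
    x_c, y_c, w, h = box
    return (x_c - 0.5 * w, y_c - 0.5 * h, x_c + 0.5 * w, y_c + 0.5 * h)


def rescale_box(box, size):
    """Scale a normalized box to pixel coordinates; size is (width, height) as in PIL."""
    img_w, img_h = size
    xmin, ymin, xmax, ymax = cxcywh_to_xyxy_single(box)
    return (xmin * img_w, ymin * img_h, xmax * img_w, ymax * img_h)


# A box centered in a 640x480 image, a quarter of the width and half of the height
pixel_box = rescale_box((0.5, 0.5, 0.25, 0.5), (640, 480))
print(pixel_box)  # (240.0, 120.0, 400.0, 360.0)
```

The tensor versions above do the same thing for all kept queries at once.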

```python
def plot_results(pil_img, prob, boxes):
    plt.figure(figsize=(16, 10))
    plt.imshow(pil_img)
    ax = plt.gca()
    colors = COLORS * 100
    for p, (xmin, ymin, xmax, ymax), c in zip(prob, boxes.tolist(), colors):
        ax.add_patch(plt.Rectangle((xmin, ymin), xmax - xmin, ymax - ymin,
                                   fill=False, color=c, linewidth=3))
        cl = p.argmax()
        text = f'{CLASSES[cl]}: {p[cl]:0.2f}'
        ax.text(xmin, ymin, text, fontsize=15,
                bbox=dict(facecolor='yellow', alpha=0.5))
    plt.axis('off')
    plt.show()
```

```python
def detect(im, model, transform):
    # mean-std normalize the input image (batch-size: 1)
    img = transform(im).unsqueeze(0)

    # demo model only support by default images with aspect ratio between 0.5 and 2
    # if you want to use images with an aspect ratio outside this range
    # rescale your image so that the maximum size is at most 1333 for best results
    assert img.shape[-2] <= 1600 and img.shape[-1] <= 1600, \
        'demo model only supports images up to 1600 pixels on each side'

    # propagate through the model
    outputs = model(img)

    # keep only predictions with 0.7+ confidence
    probas = outputs['pred_logits'].softmax(-1)[0, :, :-1]
    keep = probas.max(-1).values > 0.7

    # convert boxes from [0; 1] to image scales
    bboxes_scaled = rescale_bboxes(outputs['pred_boxes'][0, keep], im.size)
    return probas[keep], bboxes_scaled
```
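
The filtering step in `detect` can be sketched without PyTorch. The toy logits and the helper names `softmax` and `keep_confident` below are made up for illustration; the only things taken from the code above are the idea that the last logit of each query is the extra "no object" slot, and the 0.7 threshold.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def keep_confident(query_logits, threshold=0.7):
    """Return (query index, class index, probability) for confident queries."""
    kept = []
    for q, logits in enumerate(query_logits):
        probs = softmax(logits)[:-1]  # drop the trailing "no object" slot
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] > threshold:
            kept.append((q, best, probs[best]))
    return kept


# Three toy queries over two real classes plus the "no object" slot
toy_logits = [
    [4.0, 0.0, 0.0],  # confident about class 0
    [0.0, 0.0, 4.0],  # mostly "no object"
    [1.0, 1.0, 1.0],  # undecided
]
kept = keep_confident(toy_logits)
print(kept)  # only query 0 survives, with probability of about 0.96
```

Because the softmax is taken before the last slot is dropped, a query that mostly predicts "no object" ends up with low probabilities for every real class and is filtered out.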

```python
class DETRdemo(nn.Module):
    """
    Demo DETR implementation.

    Demo implementation of DETR in minimal number of lines, with the
    following differences wrt DETR in the paper:
    * learned positional encoding (instead of sine)
    * positional encoding is passed at input (instead of attention)
    * fc bbox predictor (instead of MLP)
    The model achieves ~40 AP on COCO val5k and runs at ~28 FPS on Tesla V100.
    Only batch size 1 supported.
    """
    def __init__(self, num_classes, hidden_dim=256, nheads=8,
                 num_encoder_layers=6, num_decoder_layers=6):
        super().__init__()

        # create ResNet-50 backbone
        self.backbone = resnet50()
        del self.backbone.fc

        # create conversion layer
        self.conv = nn.Conv2d(2048, hidden_dim, 1)

        # create a default PyTorch transformer
        self.transformer = nn.Transformer(
            hidden_dim, nheads, num_encoder_layers, num_decoder_layers)

        # prediction heads, one extra class for predicting non-empty slots
        # note that in baseline DETR linear_bbox layer is 3-layer MLP
        self.linear_class = nn.Linear(hidden_dim, num_classes + 1)
        self.linear_bbox = nn.Linear(hidden_dim, 4)

        # output positional encodings (object queries)
        self.query_pos = nn.Parameter(torch.rand(100, hidden_dim))

        # spatial positional encodings
        # note that in baseline DETR we use sine positional encodings
        self.row_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))
        self.col_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))

    def forward(self, inputs):
        # propagate inputs through ResNet-50 up to avg-pool layer
        x = self.backbone.conv1(inputs)
        x = self.backbone.bn1(x)
        x = self.backbone.relu(x)
        x = self.backbone.maxpool(x)

        x = self.backbone.layer1(x)
        x = self.backbone.layer2(x)
        x = self.backbone.layer3(x)
        x = self.backbone.layer4(x)

        # convert from 2048 to 256 feature planes for the transformer
        h = self.conv(x)

        # construct positional encodings
        H, W = h.shape[-2:]
        pos = torch.cat([
            self.col_embed[:W].unsqueeze(0).repeat(H, 1, 1),
            self.row_embed[:H].unsqueeze(1).repeat(1, W, 1),
        ], dim=-1).flatten(0, 1).unsqueeze(1)

        # propagate through the transformer
        h = self.transformer(pos + 0.1 * h.flatten(2).permute(2, 0, 1),
                             self.query_pos.unsqueeze(1)).transpose(0, 1)

        # finally project transformer outputs to class labels and bounding boxes
        return {'pred_logits': self.linear_class(h),
                'pred_boxes': self.linear_bbox(h).sigmoid()}
```
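
The 1600-pixel limit in `detect` and the 50-entry `row_embed` / `col_embed` tables appear to be two sides of the same constraint. The sketch below works through the arithmetic under two assumptions that the code itself does not state: that the ResNet-50 backbone shrinks each side by a factor of 32 (rounding up), and that `T.Resize(800)` truncates the longer side to an integer. The helper names are hypothetical.

```python
import math


def resized_size(width, height, target_short=800):
    """Size after scaling the shorter side to target_short, keeping the aspect ratio."""
    short, long = min(width, height), max(width, height)
    new_long = int(target_short * long / short)  # assumed truncation
    return (target_short, new_long) if width <= height else (new_long, target_short)


def feature_grid(width, height, stride=32):
    """Approximate backbone output grid (W, H), assuming an overall stride of 32."""
    return math.ceil(width / stride), math.ceil(height / stride)


def fits_demo_model(width, height, max_positions=50):
    """True if the grid fits the 50 learned row/column position embeddings."""
    grid_w, grid_h = feature_grid(*resized_size(width, height))
    return grid_w <= max_positions and grid_h <= max_positions


print(resized_size(640, 480))      # (1066, 800)
print(feature_grid(1066, 800))     # (34, 25)
print(fits_demo_model(640, 480))   # True
print(fits_demo_model(1000, 400))  # False: aspect ratio 2.5 resizes to 2000x800
```

With these assumptions, 1600 / 32 = 50, so an image whose aspect ratio stays between 0.5 and 2 never needs more than 50 rows or columns of positional embeddings after resizing.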

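```python
if __name__ == "__main__":
    detr = DETRdemo(num_classes=91)
    state_dict = torch.hub.load_state_dict_from_url(
        url='https://dl.fbaipublicfiles.com/detr/detr_demo-da2a99e9.pth',
        map_location='cpu', check_hash=True)
    detr.load_state_dict(state_dict)
    detr.eval()

    # Change this to your own image path. Avoid Chinese (non-ASCII) characters
    # in the path; otherwise an extra decoding step is needed to read the file.
    im = cv2.imread("test4.JPG")
    # cv2.imread returns None instead of raising when the file cannot be read
    assert im is not None, 'could not read the image, check the path'
    im = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR, PIL expects RGB
    im = Image.fromarray(im)

    scores, boxes = detect(im, detr, transform)
    plot_results(im, scores, boxes)
```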
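
Since a wrong or unreadable path is the most likely thing to go wrong here, a small pre-flight check can help. This is only a sketch using the standard library; `is_ascii_path` and `check_image_path` are hypothetical helpers, not part of the official notebook.

```python
from pathlib import Path


def is_ascii_path(path):
    """True if the path contains only ASCII characters."""
    return str(path).isascii()


def check_image_path(path):
    """Fail early with a clear error, and warn about non-ASCII paths."""
    p = Path(path)
    if not p.is_file():
        raise FileNotFoundError(f"image not found: {p}")
    if not is_ascii_path(p):
        print(f"warning: non-ASCII path, cv2.imread may fail on some platforms: {p}")
    return p


print(is_ascii_path("test4.JPG"))       # True
print(is_ascii_path("图片/test4.JPG"))  # False
```

If the path cannot be made ASCII-only, one option is to skip OpenCV for loading and use `Image.open(path).convert("RGB")` from PIL, which already yields the RGB image that `detect` expects.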

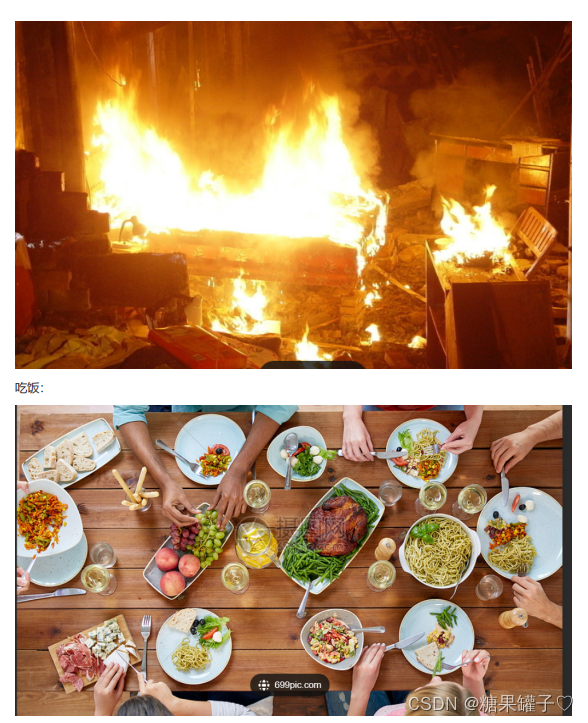

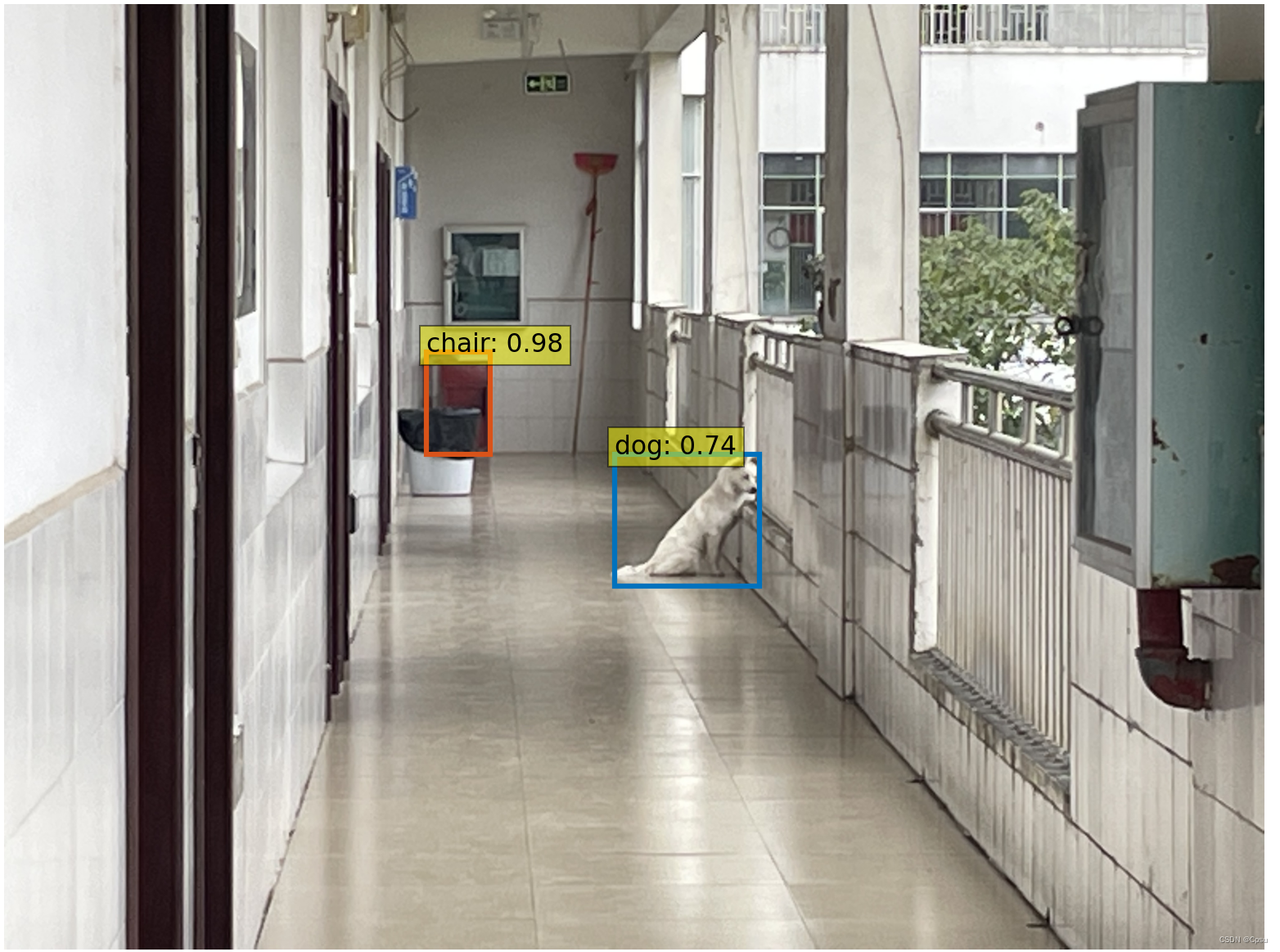

Above is the result of running it on a photo I took myself.

2. PyCharm (or any IDE other than Jupyter Notebook)

```python
import cv2
import matplotlib.pyplot as plt
import torch
import torchvision.transforms as T
from PIL import Image
from torch import nn
from torchvision.models import resnet50

# inference only, so gradient tracking is switched off globally
torch.set_grad_enabled(False)

# COCO classes
CLASSES = [
    'N/A', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',
    'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A',
    'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse',
    'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack',
    'umbrella', 'N/A', 'N/A', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis',
    'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove',
    'skateboard', 'surfboard', 'tennis racket', 'bottle', 'N/A', 'wine glass',
    'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich',
    'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake',
    'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table', 'N/A',
    'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard',
    'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A',
    'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier',
    'toothbrush'
]

# colors for visualization
COLORS = [[0.000, 0.447, 0.741], [0.850, 0.325, 0.098], [0.929, 0.694, 0.125],
          [0.494, 0.184, 0.556], [0.466, 0.674, 0.188], [0.301, 0.745, 0.933]]

# standard PyTorch mean-std input image normalization
transform = T.Compose([
    T.Resize(800),
    T.ToTensor(),
    T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])


# for output bounding box post-processing
def box_cxcywh_to_xyxy(x):
    x_c, y_c, w, h = x.unbind(1)
    b = [(x_c - 0.5 * w), (y_c - 0.5 * h),
         (x_c + 0.5 * w), (y_c + 0.5 * h)]
    return torch.stack(b, dim=1)


def rescale_bboxes(out_bbox, size):
    img_w, img_h = size
    b = box_cxcywh_to_xyxy(out_bbox)
    b = b * torch.tensor([img_w, img_h, img_w, img_h], dtype=torch.float32)
    return b


def plot_results(pil_img, prob, boxes):
    plt.figure(figsize=(16, 10))
    plt.imshow(pil_img)
    ax = plt.gca()
    colors = COLORS * 100
    for p, (xmin, ymin, xmax, ymax), c in zip(prob, boxes.tolist(), colors):
        ax.add_patch(plt.Rectangle((xmin, ymin), xmax - xmin, ymax - ymin,
                                   fill=False, color=c, linewidth=3))
        cl = p.argmax()
        text = f'{CLASSES[cl]}: {p[cl]:0.2f}'
        ax.text(xmin, ymin, text, fontsize=15,
                bbox=dict(facecolor='yellow', alpha=0.5))
    plt.axis('off')
    plt.show()


def detect(im, model, transform):
    # mean-std normalize the input image (batch-size: 1)
    img = transform(im).unsqueeze(0)

    # demo model only support by default images with aspect ratio between 0.5 and 2
    # if you want to use images with an aspect ratio outside this range
    # rescale your image so that the maximum size is at most 1333 for best results
    assert img.shape[-2] <= 1600 and img.shape[-1] <= 1600, \
        'demo model only supports images up to 1600 pixels on each side'

    # propagate through the model
    outputs = model(img)

    # keep only predictions with 0.7+ confidence
    probas = outputs['pred_logits'].softmax(-1)[0, :, :-1]
    keep = probas.max(-1).values > 0.7

    # convert boxes from [0; 1] to image scales
    bboxes_scaled = rescale_bboxes(outputs['pred_boxes'][0, keep], im.size)
    return probas[keep], bboxes_scaled


class DETRdemo(nn.Module):
    """
    Demo DETR implementation.

    Demo implementation of DETR in minimal number of lines, with the
    following differences wrt DETR in the paper:
    * learned positional encoding (instead of sine)
    * positional encoding is passed at input (instead of attention)
    * fc bbox predictor (instead of MLP)
    The model achieves ~40 AP on COCO val5k and runs at ~28 FPS on Tesla V100.
    Only batch size 1 supported.
    """
    def __init__(self, num_classes, hidden_dim=256, nheads=8,
                 num_encoder_layers=6, num_decoder_layers=6):
        super().__init__()

        # create ResNet-50 backbone
        self.backbone = resnet50()
        del self.backbone.fc

        # create conversion layer
        self.conv = nn.Conv2d(2048, hidden_dim, 1)

        # create a default PyTorch transformer
        self.transformer = nn.Transformer(
            hidden_dim, nheads, num_encoder_layers, num_decoder_layers)

        # prediction heads, one extra class for predicting non-empty slots
        # note that in baseline DETR linear_bbox layer is 3-layer MLP
        self.linear_class = nn.Linear(hidden_dim, num_classes + 1)
        self.linear_bbox = nn.Linear(hidden_dim, 4)

        # output positional encodings (object queries)
        self.query_pos = nn.Parameter(torch.rand(100, hidden_dim))

        # spatial positional encodings
        # note that in baseline DETR we use sine positional encodings
        self.row_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))
        self.col_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))

    def forward(self, inputs):
        # propagate inputs through ResNet-50 up to avg-pool layer
        x = self.backbone.conv1(inputs)
        x = self.backbone.bn1(x)
        x = self.backbone.relu(x)
        x = self.backbone.maxpool(x)

        x = self.backbone.layer1(x)
        x = self.backbone.layer2(x)
        x = self.backbone.layer3(x)
        x = self.backbone.layer4(x)

        # convert from 2048 to 256 feature planes for the transformer
        h = self.conv(x)

        # construct positional encodings
        H, W = h.shape[-2:]
        pos = torch.cat([
            self.col_embed[:W].unsqueeze(0).repeat(H, 1, 1),
            self.row_embed[:H].unsqueeze(1).repeat(1, W, 1),
        ], dim=-1).flatten(0, 1).unsqueeze(1)

        # propagate through the transformer
        h = self.transformer(pos + 0.1 * h.flatten(2).permute(2, 0, 1),
                             self.query_pos.unsqueeze(1)).transpose(0, 1)

        # finally project transformer outputs to class labels and bounding boxes
        return {'pred_logits': self.linear_class(h),
                'pred_boxes': self.linear_bbox(h).sigmoid()}


if __name__ == "__main__":
    detr = DETRdemo(num_classes=91)
    state_dict = torch.hub.load_state_dict_from_url(
        url='https://dl.fbaipublicfiles.com/detr/detr_demo-da2a99e9.pth',
        map_location='cpu', check_hash=True)
    detr.load_state_dict(state_dict)
    detr.eval()

    # change this to your own image path
    im = cv2.imread("test4.JPG")
    # cv2.imread returns None instead of raising when the file cannot be read
    assert im is not None, 'could not read the image, check the path'
    im = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR, PIL expects RGB
    im = Image.fromarray(im)

    scores, boxes = detect(im, detr, transform)
    plot_results(im, scores, boxes)
```
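
When the code runs as a plain script, editing the hard-coded image path each time gets tedious. One possible tweak, sketched here with the standard `argparse` module, is to read the path from the command line. The `--image` option and the `parse_args` helper are my own additions for illustration; the default simply mirrors the `test4.JPG` used above.

```python
import argparse


def parse_args(argv=None):
    """Parse the command line; argv=None means 'use sys.argv'."""
    parser = argparse.ArgumentParser(description="Run the DETR demo on one image.")
    parser.add_argument("--image", default="test4.JPG",
                        help="path to the input image")
    return parser.parse_args(argv)


# An explicit list is passed here so the example runs anywhere;
# in the real script you would call parse_args() with no arguments.
args = parse_args(["--image", "my_photo.jpg"])
print(args.image)  # my_photo.jpg
```

In the script, `cv2.imread("test4.JPG")` would then become `cv2.imread(args.image)`, and in PyCharm the argument can be set in the run configuration.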

Official GitHub repository: DETR