Requirements

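- In practice, this project cares more about precision and recall at a specific threshold than about mAP;

- Besides bounding boxes, the GT in this task also contains polygon annotations, so IoU is computed between masks instead of between boxes;

The code in this article computes PR results from MMDetection predictions against COCO-format GT. The procedure is: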

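- Obtain the predictions and save them to a json file;

- Parse the predictions and the GT;

- Group the predictions and GT of each image by image_id;

- Compute the mask-based IoU matrix between the predictions and the GT;

- Derive tp, fp and num_gt from the IoU matrix;

- Iterate over all images, accumulate tp, fp and num_gt, and compute precision and recall from the totals.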
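Written out, the last step uses the standard definitions, with the counts summed over all images $i$ before dividing:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{N_{gt}}, \qquad TP = \sum_i TP_i,\; FP = \sum_i FP_i,\; N_{gt} = \sum_i N_{gt,i}
$$

Both values depend on the chosen confidence threshold and IoU threshold.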

Implementation

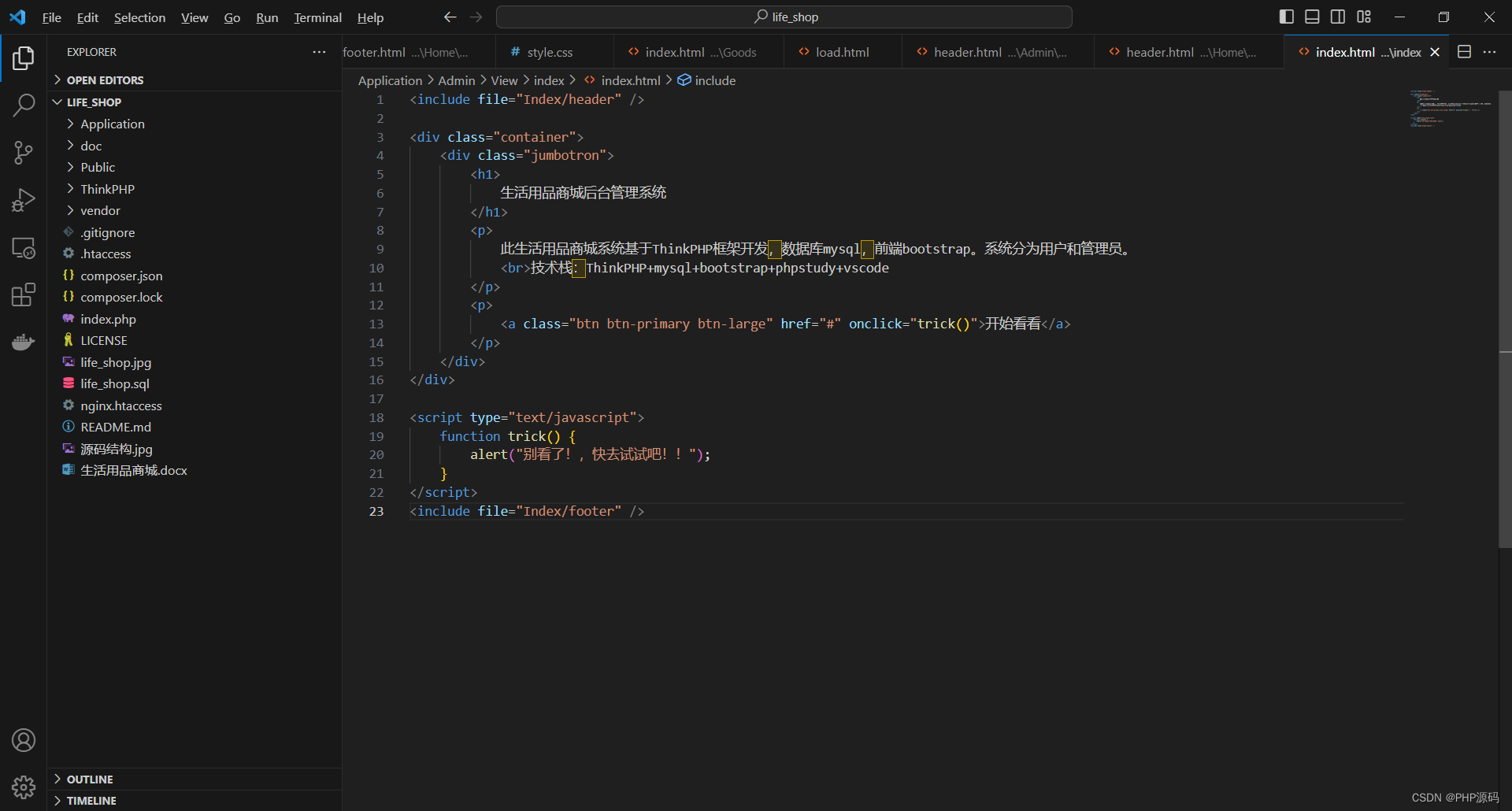

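Obtaining the predictions

In MMDetection, a model is usually evaluated with a command like the following (config file, checkpoint, number of GPUs):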

```bash
bash tools/dist_test.sh configs/aaaa/gaotie_cascade_rcnn_r50_fpn_1x.py work_dirs/gaotie_cascade_rcnn_r50_fpn_1x/epoch_20.pth 8 --eval bbox
```

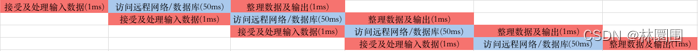

This produces mAP results like those shown in the figure.


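We also need to save the predictions somewhere along the way so that PR can be computed from them later. One way is to add the following code around line 438 of mmdet/datasets/coco.py: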

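```python
# fragment: goes inside the existing try block of coco.py where cocoGt.loadRes is called
try:
    import shutil
    cocoDt = cocoGt.loadRes(result_files[metric])
    # added: copy the raw prediction file so the PR script can read it later
    shutil.copyfile(result_files[metric], "results.bbox.json")
    # ... the rest of the existing try/except block is unchanged
```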

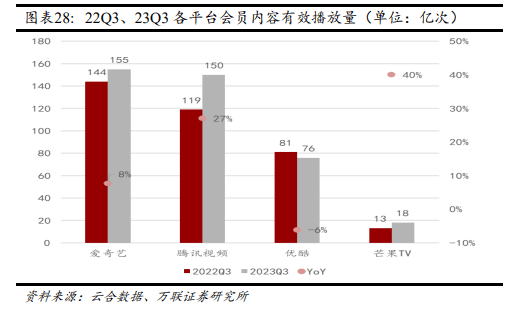

This produces a results.bbox.json file containing the model's predictions, as shown in the figure.
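The file is a JSON list in the standard COCO detection-results format: each entry has `image_id`, `category_id`, `bbox` as `[x, y, width, height]`, and `score`. A minimal sketch with two made-up entries (the values and the helper name `xywh_to_xyxy` are illustrative only) showing how one entry maps to the corner form used by the parsing code later:

```python
import json

# Two made-up entries in the COCO detection-results format
# (bbox is [x, y, width, height]; the values are illustrative only)
RESULTS_JSON = """[
  {"image_id": 1, "category_id": 1, "bbox": [10.0, 20.0, 30.0, 40.0], "score": 0.92},
  {"image_id": 1, "category_id": 2, "bbox": [50.0, 60.0, 5.0, 5.0], "score": 0.31}
]"""


def xywh_to_xyxy(bbox):
    """Convert a COCO [x, y, w, h] box to [x1, y1, x2, y2] corners."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


detections = json.loads(RESULTS_JSON)
for det in detections:
    # prints e.g. "1 0.92 [10.0, 20.0, 40.0, 60.0]"
    print(det["image_id"], det["score"], xywh_to_xyxy(det["bbox"]))
```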


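Obtaining the GT

The annotations come in two forms, rectangles and polygons, so the COCO-format GT file is built as follows: for a rectangle, its four corners are written into the segmentation field as a polygon; for a polygon, its enclosing rectangle is computed and written into the bbox field.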
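The conversion script that builds this GT file is not shown here. The two conversions it performs can be sketched as follows (the helper names `rect_to_polygon` and `polygon_to_bbox` are hypothetical):

```python
def rect_to_polygon(x1, y1, x2, y2):
    """Rectangle corners -> COCO polygon segmentation with a single part."""
    return [[x1, y1, x2, y1, x2, y2, x1, y2]]


def polygon_to_bbox(segmentation):
    """COCO polygon segmentation (list of parts) -> [x, y, w, h] enclosing box."""
    xs = [v for part in segmentation for v in part[0::2]]
    ys = [v for part in segmentation for v in part[1::2]]
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


# a rectangle annotation becomes a 4-vertex polygon ...
print(rect_to_polygon(10, 20, 40, 60))         # [[10, 20, 40, 20, 40, 60, 10, 60]]
# ... and a polygon annotation gets its enclosing box
print(polygon_to_bbox([[0, 0, 10, 0, 5, 8]]))  # [0, 0, 10, 8]
```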


The script that loads the GT is implemented as follows:

```python
from pycocotools.coco import COCO


def construct_gt_results(gt_json_path):
    results = dict()       # img_id -> list of segmentations (polygons)
    bbox_results = dict()  # img_id -> list of [x1, y1, x2, y2], for visualization
    cocoGt = COCO(annotation_file=gt_json_path)
    img_ids = cocoGt.getImgIds()
    for img_id in img_ids:
        anno_ids = cocoGt.getAnnIds(imgIds=[img_id])
        annotations = cocoGt.loadAnns(ids=anno_ids)
        for info in annotations:
            if img_id not in results:
                results[img_id] = list()
                bbox_results[img_id] = list()
            # COCO bbox is [x, y, w, h]; convert to corner form
            bbox = info["bbox"]
            x1, y1, x2, y2 = bbox[0], bbox[1], bbox[0] + bbox[2], bbox[1] + bbox[3]
            results[img_id].append(info["segmentation"])
            bbox_results[img_id].append([x1, y1, x2, y2])
    return results, img_ids, cocoGt, bbox_results
```

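It takes the path of the GT json file and returns the segmentations of every image, the image_ids, the COCO object, and the boxes (used later for visualization).

Parsing the predictions

The model predicts boxes only. As with the GT, the four corners of each box are used as a polygon segmentation. The parsing script is: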

```python
import json


def construct_det_results(det_json_path):
    results = dict()       # img_id -> list of box polygons
    bbox_results = dict()  # img_id -> list of [x1, y1, x2, y2], for visualization
    scores = dict()        # img_id -> list of confidence scores
    with open(det_json_path) as f:
        json_data = json.load(f)
    # note: category_id is ignored, so matching is class-agnostic
    for info in json_data:
        img_id = info["image_id"]
        if img_id not in results:
            results[img_id] = list()
            scores[img_id] = list()
            bbox_results[img_id] = list()
        bbox = info["bbox"]
        x1, y1, x2, y2 = bbox[0], bbox[1], bbox[0] + bbox[2], bbox[1] + bbox[3]
        # use the four corners of the box as a single-part polygon
        segm = [[x1, y1, x2, y1, x2, y2, x1, y2]]
        results[img_id].append(segm)
        bbox_results[img_id].append([x1, y1, x2, y2])
        scores[img_id].append(info["score"])
    return results, scores, bbox_results
```

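It takes the path of the prediction json file and returns, per image, the segmentations, the scores, and the boxes.

Computing TP and FP for a single image by image_id

This step consists of:

- filtering the predictions by the confidence threshold;

- converting all polygons to masks for the IoU computation;

- computing tp and fp;

- visualizing the FP and FN results;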

Converting polygons to masks

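```python
# excerpt from eval_pr(); det_results, det_scores, det_tmp_bboxes, gt_results,
# gt_tmp_bboxes and conf_thrs are module-level globals (see the full code)
if img_id in det_results:
    for idx, score in enumerate(det_scores[img_id]):
        if score > conf_thrs:  # keep only predictions above the confidence threshold
            # the image size is hard-coded for this dataset (height 1544, width 2064)
            mask = _poly2mask(det_results[img_id][idx], img_h=1544, img_w=2064)
            det_bboxes.append(mask)
            det_thrs_scores.append(score)
            plot_det_bboxes.append(det_tmp_bboxes[img_id][idx])
if img_id in gt_results:
    for segm in gt_results[img_id]:
        mask = _poly2mask(segm, img_h=1544, img_w=2064)
        gt_bboxes.append(mask)
    plot_gt_bboxes = gt_tmp_bboxes[img_id]
```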

The _poly2mask function converts polygons to masks; it is implemented as follows.

```python
from pycocotools import mask as maskUtils


def _poly2mask(mask_ann, img_h, img_w):
    """Private function to convert masks represented with polygon to
    bitmaps.

    Args:
        mask_ann (list | dict): Polygon mask annotation input.
        img_h (int): The height of output mask.
        img_w (int): The width of output mask.

    Returns:
        numpy.ndarray: The decoded bitmap mask of shape (img_h, img_w).
    """
    if isinstance(mask_ann, list):
        # polygon -- a single object might consist of multiple parts
        # we merge all parts into one mask rle code
        rles = maskUtils.frPyObjects(mask_ann, img_h, img_w)
        rle = maskUtils.merge(rles)
    elif isinstance(mask_ann['counts'], list):
        # uncompressed RLE
        rle = maskUtils.frPyObjects(mask_ann, img_h, img_w)
    else:
        # rle
        rle = mask_ann
    mask = maskUtils.decode(rle)
    return mask
```
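Since every prediction here is an axis-aligned rectangle, its mask could also be built directly with NumPy slicing, without pycocotools. A minimal sketch, assuming the half-open pixel convention (columns in [x1, x2), rows in [y1, y2)) after rounding; `box_to_mask` is a hypothetical helper, and pycocotools' polygon rasterization may differ slightly at the borders, so treat it as an approximation rather than a drop-in replacement for `_poly2mask`:

```python
import numpy as np


def box_to_mask(x1, y1, x2, y2, img_h, img_w):
    """Rasterize an axis-aligned box [x1, y1, x2, y2] into an (img_h, img_w) uint8 mask.

    Pixels with column in [x1, x2) and row in [y1, y2) are set to 1, after
    rounding the coordinates and clipping them to the image.
    """
    c1, c2 = np.clip([round(x1), round(x2)], 0, img_w).astype(int)
    r1, r2 = np.clip([round(y1), round(y2)], 0, img_h).astype(int)
    mask = np.zeros((img_h, img_w), dtype=np.uint8)
    mask[r1:r2, c1:c2] = 1  # empty slice if the box is degenerate or off-image
    return mask


m = box_to_mask(2, 3, 6, 5, img_h=10, img_w=10)
print(m.sum())  # 8: 2 rows x 4 columns
```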

Computing TP and FP for one image

This article uses the tpfp_default function for this step:

```python
import numpy as np


def tpfp_default(det_bboxes,
                 gt_bboxes,
                 gt_bboxes_ignore=None,
                 det_thrs_scores=None,
                 iou_thr=0.5,
                 area_ranges=None):
    """Check if detected masks are true positive or false positive.

    Args:
        det_bboxes (ndarray): Detected masks of this image, of shape (m, H, W).
        gt_bboxes (ndarray): GT masks of this image, of shape (n, H, W).
        gt_bboxes_ignore (ndarray): Ignored gt bboxes of this image,
            of shape (k, 4). Default: None
        det_thrs_scores (ndarray): Scores of the detections, of shape (m,).
        iou_thr (float): IoU threshold to be considered as matched.
            Default: 0.5.
        area_ranges (list[tuple] | None): Range of mask areas (in pixels) to be
            evaluated, in the format [(min1, max1), (min2, max2), ...].
            Default: None.

    Returns:
        tuple[np.ndarray]: (tp, fp) whose elements are 0 and 1. The shape of
            each array is (num_scales, m).
    """
    if gt_bboxes_ignore is None:
        gt_bboxes_ignore = np.empty((0, 4), dtype=np.float32)
    # an indicator of ignored gts; ignored gts are NOT stacked onto gt_bboxes,
    # so gt_bboxes_ignore is expected to be empty here
    gt_ignore_inds = np.concatenate(
        (np.zeros(gt_bboxes.shape[0], dtype=bool),
         np.ones(gt_bboxes_ignore.shape[0], dtype=bool)))
    num_dets = det_bboxes.shape[0]
    num_gts = gt_bboxes.shape[0]
    if area_ranges is None:
        area_ranges = [(None, None)]
    num_scales = len(area_ranges)
    # tp and fp are of shape (num_scales, num_dets), each row is tp or fp of
    # a certain scale
    tp = np.zeros((num_scales, num_dets), dtype=np.float32)
    fp = np.zeros((num_scales, num_dets), dtype=np.float32)
    # the inputs are masks, so areas are pixel counts
    det_areas = det_bboxes.reshape(num_dets, -1).sum(axis=1) if num_dets else np.zeros(0)
    # if there is no gt in this image, then all dets
    # within area range are false positives
    if num_gts == 0:
        if area_ranges == [(None, None)]:
            fp[...] = 1
        else:
            for i, (min_area, max_area) in enumerate(area_ranges):
                fp[i, (det_areas >= min_area) & (det_areas < max_area)] = 1
        return tp, fp
    # IoU matrix of shape (num_dets, num_gts)
    ious = mask_wraper(det_bboxes, gt_bboxes)
    # for each det, the max iou with all gts
    ious_max = ious.max(axis=1)
    # for each det, which gt overlaps most with it
    ious_argmax = ious.argmax(axis=1)
    # sort all dets in descending order by scores
    sort_inds = np.argsort(-det_thrs_scores)
    for k, (min_area, max_area) in enumerate(area_ranges):
        gt_covered = np.zeros(num_gts, dtype=bool)
        # if no area range is specified, gt_area_ignore is all False
        if min_area is None:
            gt_area_ignore = np.zeros_like(gt_ignore_inds, dtype=bool)
        else:
            gt_areas = gt_bboxes.reshape(num_gts, -1).sum(axis=1)
            gt_area_ignore = (gt_areas < min_area) | (gt_areas >= max_area)
        for i in sort_inds:
            if ious_max[i] >= iou_thr:
                matched_gt = ious_argmax[i]  # index of the matched GT
                if not (gt_ignore_inds[matched_gt]
                        or gt_area_ignore[matched_gt]):
                    if not gt_covered[matched_gt]:
                        gt_covered[matched_gt] = True  # mark this GT as taken
                        tp[k, i] = 1
                    else:
                        fp[k, i] = 1
                # otherwise ignore this detection, tp = 0, fp = 0
            elif min_area is None:
                fp[k, i] = 1
            elif min_area <= det_areas[i] < max_area:
                fp[k, i] = 1
    return tp, fp
```

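The function first computes the IoU matrix between the predictions and the GT, then visits the predictions in descending order of confidence and assigns each one to the GT it overlaps most: the prediction is a TP if that IoU reaches the threshold and the GT has not been matched yet; otherwise it is an FP.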
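To make the assignment rule concrete, here is a stripped-down sketch on a precomputed IoU matrix. `greedy_match` is a hypothetical name; it mirrors the per-detection loop of tpfp_default but omits area ranges and ignored GT. Note that a detection whose best GT is already taken becomes an FP even if it also overlaps another GT above the threshold, because only the argmax GT is considered:

```python
import numpy as np


def greedy_match(ious, scores, iou_thr=0.5):
    """ious: (num_dets, num_gts); scores: (num_dets,). Returns 0/1 arrays tp, fp."""
    num_dets, num_gts = ious.shape
    tp = np.zeros(num_dets, dtype=np.int64)
    fp = np.zeros(num_dets, dtype=np.int64)
    if num_gts == 0:
        fp[:] = 1  # nothing to match: every detection is a false positive
        return tp, fp
    best_iou = ious.max(axis=1)      # best IoU of each detection
    best_gt = ious.argmax(axis=1)    # index of that GT
    covered = np.zeros(num_gts, dtype=bool)
    for i in np.argsort(-scores):    # highest score first
        g = best_gt[i]
        if best_iou[i] >= iou_thr and not covered[g]:
            covered[g] = True
            tp[i] = 1
        else:
            fp[i] = 1
    return tp, fp


# det 1 also overlaps GT 1 (0.6), but its best GT 0 is already taken -> FP
ious = np.array([[0.8, 0.1], [0.7, 0.6], [0.0, 0.05]])
tp, fp = greedy_match(ious, np.array([0.9, 0.8, 0.7]))
print(tp, fp)  # [1 0 0] [0 1 1]
```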

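Computing mask IoU

The definition of IoU is the same as for boxes; for two masks $A$ and $B$:

$$\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}$$

where $|\cdot|$ is the number of foreground pixels. Implementing IoU on masks is also straightforward: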

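```python
import numpy as np


def mask_overlaps(bboxes1, bboxes2, mode='iou'):
    """IoU (or IoF, normalized by the area of bboxes1) between two binary masks."""
    assert mode in ['iou', 'iof']
    bboxes1 = bboxes1.astype(np.bool_)
    bboxes2 = bboxes2.astype(np.bool_)
    intersection_area = np.sum(np.logical_and(bboxes1, bboxes2))
    if mode == 'iou':
        denom = np.sum(np.logical_or(bboxes1, bboxes2))
    else:
        denom = np.sum(bboxes1)
    # guard against empty masks (e.g. a box lying entirely outside the image)
    return intersection_area / denom if denom > 0 else 0.0
```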
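A box is just a special mask, so box IoU is a special case of this formula. A toy check, with masks built by NumPy slicing and box IoU in the continuous convention (without the +1 used by bbox_overlaps in the full code); `mask_iou` and `box_iou` are hypothetical helper names for this illustration:

```python
import numpy as np


def mask_iou(a, b):
    """IoU of two binary masks; 0.0 if both are empty."""
    a, b = a.astype(bool), b.astype(bool)
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union > 0 else 0.0


def box_iou(b1, b2):
    """IoU of two [x1, y1, x2, y2] boxes, continuous convention (no +1)."""
    iw = max(0, min(b1[2], b2[2]) - max(b1[0], b2[0]))
    ih = max(0, min(b1[3], b2[3]) - max(b1[1], b2[1]))
    inter = iw * ih
    area1 = (b1[2] - b1[0]) * (b1[3] - b1[1])
    area2 = (b2[2] - b2[0]) * (b2[3] - b2[1])
    return inter / (area1 + area2 - inter)


m1 = np.zeros((10, 10), np.uint8)
m1[0:4, 0:4] = 1  # pixels of box [0, 0, 4, 4]
m2 = np.zeros((10, 10), np.uint8)
m2[2:6, 2:6] = 1  # pixels of box [2, 2, 6, 6]
print(mask_iou(m1, m2), box_iou([0, 0, 4, 4], [2, 2, 6, 6]))  # both 4 / 28
```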

The IoU matrix between the predictions and the GT is then computed as follows:

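```python
import numpy as np


def mask_wraper(bboxes1, bboxes2, mode='iou'):
    """IoU matrix between two stacks of masks: (n, H, W) x (k, H, W) -> (n, k)."""
    rows = bboxes1.shape[0]  # detections, as called from tpfp_default
    cols = bboxes2.shape[0]  # GTs, as called from tpfp_default
    ious = np.zeros((rows, cols), dtype=np.float32)
    if rows * cols == 0:
        return ious
    for i in range(rows):
        for j in range(cols):
            ious[i, j] = mask_overlaps(bboxes1[i], bboxes2[j], mode)
    return ious
```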
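The double loop calls mask_overlaps once per pair, which can be slow on 1544×2064 masks. One possible vectorized alternative (a sketch, not part of the original code; `mask_iou_matrix` is a hypothetical name) flattens the masks and gets all intersections from a single matrix product. It trades memory for speed: each mask becomes H·W float32 values, roughly 12 MB at this image size:

```python
import numpy as np


def mask_iou_matrix(masks1, masks2):
    """IoU matrix between binary masks: (n, H, W) x (k, H, W) -> (n, k), float32."""
    n, k = masks1.shape[0], masks2.shape[0]
    if n == 0 or k == 0:
        return np.zeros((n, k), dtype=np.float32)
    # flatten each mask to a 0/1 row; float32 counts stay exact below 2**24 pixels
    a = masks1.reshape(n, -1).astype(bool).astype(np.float32)
    b = masks2.reshape(k, -1).astype(bool).astype(np.float32)
    inter = a @ b.T  # (n, k) intersection pixel counts
    union = a.sum(axis=1)[:, None] + b.sum(axis=1)[None, :] - inter
    ious = np.where(union > 0, inter / np.maximum(union, 1.0), 0.0)
    return ious.astype(np.float32)
```

Called as `mask_iou_matrix(det_bboxes, gt_bboxes)`, it returns the same (num_dets, num_gts) layout that tpfp_default expects from mask_wraper.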

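With the steps above, we obtain tp and fp for a single image.

Visualizing FP and FN results

We also want to analyze the model's bad cases, so the FP and FN results are visualized. Here, every image that has a problem is saved with all of its predicted boxes and GT boxes drawn on it.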

```python
# excerpt from eval_pr(): save every image with at least one FP or FN
if VIS and (fp > 0 or tp < gt):
    img_data, path = draw_bbox(img_id=img_id, cocoGt=cocoGt,
                               det_bboxes=plot_det_bboxes, gt_bboxes=plot_gt_bboxes)
    if fp > 0:
        save_dir = os.path.join(VIS_ROOT, "tmp/FP/")
        os.makedirs(save_dir, exist_ok=True)
        cv2.imwrite(os.path.join(save_dir, os.path.basename(path) + ".jpg"), img_data,
                    [int(cv2.IMWRITE_JPEG_QUALITY), 30])
    if tp < gt:  # at least one GT was not matched (false negative)
        save_dir = os.path.join(VIS_ROOT, "tmp/FN/")
        os.makedirs(save_dir, exist_ok=True)
        cv2.imwrite(os.path.join(save_dir, os.path.basename(path) + ".jpg"), img_data,
                    [int(cv2.IMWRITE_JPEG_QUALITY), 30])
```

The drawing function is implemented as follows:

```python
import os

import cv2


def draw_bbox(img_id, cocoGt, det_bboxes, gt_bboxes):
    """Draw predicted boxes in red and GT boxes in green (OpenCV colors are BGR)."""
    path = cocoGt.loadImgs(ids=[img_id])[0]["file_name"]
    img_path = os.path.join(IMG_ROOT, path)  # IMG_ROOT is a module-level global
    img_data = cv2.imread(img_path)
    for box in det_bboxes:
        cv2.rectangle(img_data, (int(box[0]), int(box[1])),
                      (int(box[2]), int(box[3])), (0, 0, 255), 3)
    for box in gt_bboxes:
        cv2.rectangle(img_data, (int(box[0]), int(box[1])),
                      (int(box[2]), int(box[3])), (0, 255, 0), 3)
    return img_data, path
```

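This completes the per-image logic.

Computing all images with multiple processes

A process pool from multiprocessing is used to speed up the computation over all images: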

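```python
from multiprocessing import Pool

import numpy as np


def eval_multiprocessing(img_ids):
    # eval_pr reads module-level globals (det_results, conf_thrs, ...), so this
    # relies on the "fork" start method (the Linux default)
    pool = Pool(processes=16)
    results = pool.map(eval_pr, img_ids)  # one (gt, tp, fp) tuple per image
    # close the pool: no new tasks will be accepted
    pool.close()
    # wait for all tasks to finish
    pool.join()
    # sum the per-image counts -> (gt, tp, fp) over the whole dataset
    return np.sum(np.array(results), axis=0)
```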

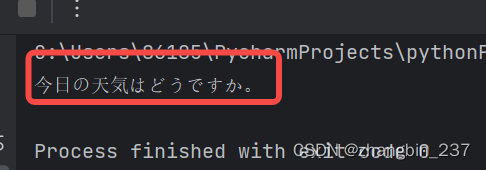

Computing PR

Once TP and FP have been returned for all images, precision and recall can be computed:

```python
gt, tp, fp = eval_multiprocessing(img_ids)
eps = np.finfo(np.float32).eps
recalls = tp / np.maximum(gt, eps)            # R = TP / number of GT
precisions = tp / np.maximum((tp + fp), eps)  # P = TP / (TP + FP)
print("conf_thrs:{:.3f} iou_thrs:{:.3f}, gt:{:d}, TP={:d}, FP={:d}, P={:.3f}, R={:.3f}".format(
    conf_thrs, iou_thrs, gt, tp, fp, precisions, recalls))
```

Finally, here is the complete code for reproduction or reference.

```python
import json
import os
from multiprocessing import Pool

import cv2
import numpy as np
from pycocotools import mask as maskUtils
from pycocotools.coco import COCO


def bbox_overlaps(bboxes1, bboxes2, mode='iou'):
    """Calculate the ious between each bbox of bboxes1 and bboxes2.

    Box-based IoU, kept for reference; the mask-based pipeline does not use it.

    Args:
        bboxes1(ndarray): shape (n, 4)
        bboxes2(ndarray): shape (k, 4)
        mode(str): iou (intersection over union) or iof (intersection
            over foreground)

    Returns:
        ious(ndarray): shape (n, k)
    """
    assert mode in ['iou', 'iof']
    bboxes1 = bboxes1.astype(np.float32)
    bboxes2 = bboxes2.astype(np.float32)
    rows = bboxes1.shape[0]
    cols = bboxes2.shape[0]
    ious = np.zeros((rows, cols), dtype=np.float32)
    if rows * cols == 0:
        return ious
    exchange = False
    if bboxes1.shape[0] > bboxes2.shape[0]:
        bboxes1, bboxes2 = bboxes2, bboxes1
        ious = np.zeros((cols, rows), dtype=np.float32)
        exchange = True
    area1 = (bboxes1[:, 2] - bboxes1[:, 0] + 1) * (bboxes1[:, 3] - bboxes1[:, 1] + 1)
    area2 = (bboxes2[:, 2] - bboxes2[:, 0] + 1) * (bboxes2[:, 3] - bboxes2[:, 1] + 1)
    for i in range(bboxes1.shape[0]):
        x_start = np.maximum(bboxes1[i, 0], bboxes2[:, 0])
        y_start = np.maximum(bboxes1[i, 1], bboxes2[:, 1])
        x_end = np.minimum(bboxes1[i, 2], bboxes2[:, 2])
        y_end = np.minimum(bboxes1[i, 3], bboxes2[:, 3])
        overlap = np.maximum(x_end - x_start + 1, 0) * np.maximum(y_end - y_start + 1, 0)
        if mode == 'iou':
            union = area1[i] + area2 - overlap
        else:
            union = area1[i] if not exchange else area2
        ious[i, :] = overlap / union
    if exchange:
        ious = ious.T
    return ious


def mask_wraper(bboxes1, bboxes2, mode='iou'):
    """IoU matrix between two stacks of masks: (n, H, W) x (k, H, W) -> (n, k)."""
    rows = bboxes1.shape[0]  # detections, as called from tpfp_default
    cols = bboxes2.shape[0]  # GTs, as called from tpfp_default
    ious = np.zeros((rows, cols), dtype=np.float32)
    if rows * cols == 0:
        return ious
    for i in range(rows):
        for j in range(cols):
            ious[i, j] = mask_overlaps(bboxes1[i], bboxes2[j], mode)
    return ious


def mask_overlaps(bboxes1, bboxes2, mode='iou'):
    """IoU (or IoF, normalized by the area of bboxes1) between two binary masks."""
    assert mode in ['iou', 'iof']
    bboxes1 = bboxes1.astype(np.bool_)
    bboxes2 = bboxes2.astype(np.bool_)
    intersection_area = np.sum(np.logical_and(bboxes1, bboxes2))
    if mode == 'iou':
        denom = np.sum(np.logical_or(bboxes1, bboxes2))
    else:
        denom = np.sum(bboxes1)
    # guard against empty masks (e.g. a box lying entirely outside the image)
    return intersection_area / denom if denom > 0 else 0.0


def tpfp_default(det_bboxes,
                 gt_bboxes,
                 gt_bboxes_ignore=None,
                 det_thrs_scores=None,
                 iou_thr=0.5,
                 area_ranges=None):
    """Check if detected masks are true positive or false positive.

    Args:
        det_bboxes (ndarray): Detected masks of this image, of shape (m, H, W).
        gt_bboxes (ndarray): GT masks of this image, of shape (n, H, W).
        gt_bboxes_ignore (ndarray): Ignored gt bboxes of this image,
            of shape (k, 4). Default: None
        det_thrs_scores (ndarray): Scores of the detections, of shape (m,).
        iou_thr (float): IoU threshold to be considered as matched.
            Default: 0.5.
        area_ranges (list[tuple] | None): Range of mask areas (in pixels) to be
            evaluated, in the format [(min1, max1), (min2, max2), ...].
            Default: None.

    Returns:
        tuple[np.ndarray]: (tp, fp) whose elements are 0 and 1. The shape of
            each array is (num_scales, m).
    """
    if gt_bboxes_ignore is None:
        gt_bboxes_ignore = np.empty((0, 4), dtype=np.float32)
    # an indicator of ignored gts; ignored gts are NOT stacked onto gt_bboxes,
    # so gt_bboxes_ignore is expected to be empty here
    gt_ignore_inds = np.concatenate(
        (np.zeros(gt_bboxes.shape[0], dtype=bool),
         np.ones(gt_bboxes_ignore.shape[0], dtype=bool)))
    num_dets = det_bboxes.shape[0]
    num_gts = gt_bboxes.shape[0]
    if area_ranges is None:
        area_ranges = [(None, None)]
    num_scales = len(area_ranges)
    # tp and fp are of shape (num_scales, num_dets), each row is tp or fp of
    # a certain scale
    tp = np.zeros((num_scales, num_dets), dtype=np.float32)
    fp = np.zeros((num_scales, num_dets), dtype=np.float32)
    # the inputs are masks, so areas are pixel counts
    det_areas = det_bboxes.reshape(num_dets, -1).sum(axis=1) if num_dets else np.zeros(0)
    # if there is no gt in this image, then all dets
    # within area range are false positives
    if num_gts == 0:
        if area_ranges == [(None, None)]:
            fp[...] = 1
        else:
            for i, (min_area, max_area) in enumerate(area_ranges):
                fp[i, (det_areas >= min_area) & (det_areas < max_area)] = 1
        return tp, fp
    # IoU matrix of shape (num_dets, num_gts)
    ious = mask_wraper(det_bboxes, gt_bboxes)
    # for each det, the max iou with all gts
    ious_max = ious.max(axis=1)
    # for each det, which gt overlaps most with it
    ious_argmax = ious.argmax(axis=1)
    # sort all dets in descending order by scores
    sort_inds = np.argsort(-det_thrs_scores)
    for k, (min_area, max_area) in enumerate(area_ranges):
        gt_covered = np.zeros(num_gts, dtype=bool)
        # if no area range is specified, gt_area_ignore is all False
        if min_area is None:
            gt_area_ignore = np.zeros_like(gt_ignore_inds, dtype=bool)
        else:
            gt_areas = gt_bboxes.reshape(num_gts, -1).sum(axis=1)
            gt_area_ignore = (gt_areas < min_area) | (gt_areas >= max_area)
        for i in sort_inds:
            if ious_max[i] >= iou_thr:
                matched_gt = ious_argmax[i]  # index of the matched GT
                if not (gt_ignore_inds[matched_gt]
                        or gt_area_ignore[matched_gt]):
                    if not gt_covered[matched_gt]:
                        gt_covered[matched_gt] = True  # mark this GT as taken
                        tp[k, i] = 1
                    else:
                        fp[k, i] = 1
                # otherwise ignore this detection, tp = 0, fp = 0
            elif min_area is None:
                fp[k, i] = 1
            elif min_area <= det_areas[i] < max_area:
                fp[k, i] = 1
    return tp, fp


def _poly2mask(mask_ann, img_h, img_w):
    """Private function to convert masks represented with polygon to
    bitmaps.

    Args:
        mask_ann (list | dict): Polygon mask annotation input.
        img_h (int): The height of output mask.
        img_w (int): The width of output mask.

    Returns:
        numpy.ndarray: The decoded bitmap mask of shape (img_h, img_w).
    """
    if isinstance(mask_ann, list):
        # polygon -- a single object might consist of multiple parts
        # we merge all parts into one mask rle code
        rles = maskUtils.frPyObjects(mask_ann, img_h, img_w)
        rle = maskUtils.merge(rles)
    elif isinstance(mask_ann['counts'], list):
        # uncompressed RLE
        rle = maskUtils.frPyObjects(mask_ann, img_h, img_w)
    else:
        # rle
        rle = mask_ann
    mask = maskUtils.decode(rle)
    return mask


def construct_det_results(det_json_path):
    results = dict()       # img_id -> list of box polygons
    bbox_results = dict()  # img_id -> list of [x1, y1, x2, y2], for visualization
    scores = dict()        # img_id -> list of confidence scores
    with open(det_json_path) as f:
        json_data = json.load(f)
    # note: category_id is ignored, so matching is class-agnostic
    for info in json_data:
        img_id = info["image_id"]
        if img_id not in results:
            results[img_id] = list()
            scores[img_id] = list()
            bbox_results[img_id] = list()
        bbox = info["bbox"]
        x1, y1, x2, y2 = bbox[0], bbox[1], bbox[0] + bbox[2], bbox[1] + bbox[3]
        # use the four corners of the box as a single-part polygon
        segm = [[x1, y1, x2, y1, x2, y2, x1, y2]]
        results[img_id].append(segm)
        bbox_results[img_id].append([x1, y1, x2, y2])
        scores[img_id].append(info["score"])
    return results, scores, bbox_results


def construct_gt_results(gt_json_path):
    results = dict()       # img_id -> list of segmentations (polygons)
    bbox_results = dict()  # img_id -> list of [x1, y1, x2, y2], for visualization
    cocoGt = COCO(annotation_file=gt_json_path)
    img_ids = cocoGt.getImgIds()
    for img_id in img_ids:
        anno_ids = cocoGt.getAnnIds(imgIds=[img_id])
        annotations = cocoGt.loadAnns(ids=anno_ids)
        for info in annotations:
            if img_id not in results:
                results[img_id] = list()
                bbox_results[img_id] = list()
            # COCO bbox is [x, y, w, h]; convert to corner form
            bbox = info["bbox"]
            x1, y1, x2, y2 = bbox[0], bbox[1], bbox[0] + bbox[2], bbox[1] + bbox[3]
            results[img_id].append(info["segmentation"])
            bbox_results[img_id].append([x1, y1, x2, y2])
    return results, img_ids, cocoGt, bbox_results


def draw_bbox(img_id, cocoGt, det_bboxes, gt_bboxes):
    """Draw predicted boxes in red and GT boxes in green (OpenCV colors are BGR)."""
    path = cocoGt.loadImgs(ids=[img_id])[0]["file_name"]
    img_path = os.path.join(IMG_ROOT, path)  # IMG_ROOT is a module-level global
    img_data = cv2.imread(img_path)
    for box in det_bboxes:
        cv2.rectangle(img_data, (int(box[0]), int(box[1])),
                      (int(box[2]), int(box[3])), (0, 0, 255), 3)
    for box in gt_bboxes:
        cv2.rectangle(img_data, (int(box[0]), int(box[1])),
                      (int(box[2]), int(box[3])), (0, 255, 0), 3)
    return img_data, path


def eval_pr(img_id):
    """Return (num_gt, tp, fp) for a single image."""
    tp, fp, gt = 0, 0, 0
    gt_ignore = []            # no ignored GT regions in this dataset
    det_bboxes = list()       # predicted masks above conf_thrs
    gt_bboxes = list()        # GT masks
    det_thrs_scores = list()  # scores of the kept predictions
    plot_det_bboxes = list()  # boxes used only for visualization
    plot_gt_bboxes = list()
    if img_id in det_results:
        for idx, score in enumerate(det_scores[img_id]):
            if score > conf_thrs:
                # the image size is hard-coded for this dataset (height 1544, width 2064)
                mask = _poly2mask(det_results[img_id][idx], img_h=1544, img_w=2064)
                det_bboxes.append(mask)
                det_thrs_scores.append(score)
                plot_det_bboxes.append(det_tmp_bboxes[img_id][idx])
    if img_id in gt_results:
        for segm in gt_results[img_id]:
            mask = _poly2mask(segm, img_h=1544, img_w=2064)
            gt_bboxes.append(mask)
        plot_gt_bboxes = gt_tmp_bboxes[img_id]
    det_bboxes = np.array(det_bboxes)
    gt_bboxes = np.array(gt_bboxes)
    det_thrs_scores = np.array(det_thrs_scores)
    gt_ignore = np.array(gt_ignore).reshape(-1, 4)
    if len(gt_bboxes) > 0:
        if len(det_bboxes) == 0:
            tp, fp = 0, 0
        else:
            tp, fp = tpfp_default(det_bboxes, gt_bboxes, gt_ignore,
                                  det_thrs_scores, iou_thrs)
            tp, fp = np.sum(tp == 1), np.sum(fp == 1)
        gt = len(gt_bboxes)
    else:
        # no GT in this image: every kept prediction is a false positive
        fp = len(det_bboxes)
    # save every image with at least one FP or FN
    if VIS and (fp > 0 or tp < gt):
        img_data, path = draw_bbox(img_id=img_id, cocoGt=cocoGt,
                                   det_bboxes=plot_det_bboxes, gt_bboxes=plot_gt_bboxes)
        if fp > 0:
            save_dir = os.path.join(VIS_ROOT, "tmp/FP/")
            os.makedirs(save_dir, exist_ok=True)
            cv2.imwrite(os.path.join(save_dir, os.path.basename(path) + ".jpg"), img_data,
                        [int(cv2.IMWRITE_JPEG_QUALITY), 30])
        if tp < gt:  # at least one GT was not matched (false negative)
            save_dir = os.path.join(VIS_ROOT, "tmp/FN/")
            os.makedirs(save_dir, exist_ok=True)
            cv2.imwrite(os.path.join(save_dir, os.path.basename(path) + ".jpg"), img_data,
                        [int(cv2.IMWRITE_JPEG_QUALITY), 30])
    return gt, tp, fp


def eval_multiprocessing(img_ids):
    # eval_pr reads module-level globals (det_results, conf_thrs, ...), so this
    # relies on the "fork" start method (the Linux default)
    pool = Pool(processes=16)
    results = pool.map(eval_pr, img_ids)  # one (gt, tp, fp) tuple per image
    # close the pool: no new tasks will be accepted
    pool.close()
    # wait for all tasks to finish
    pool.join()
    # sum the per-image counts -> (gt, tp, fp) over the whole dataset
    return np.sum(np.array(results), axis=0)


if __name__ == '__main__':
    # module-level globals read by eval_pr / draw_bbox in the worker processes
    VIS = 1  # 1: save FP/FN visualizations, 0: skip them
    IMG_ROOT = "gaotie_data"
    VIS_ROOT = 'badcase-vis-test-2/'
    conf_thrs = 0.5
    iou_thrs = 0.001  # very low: almost any overlap counts as a match
    det_json_path = "results.bbox.json"
    gt_json_path = "datasets/gaotie_test_data/annotations/test5_seg_removed.json"
    det_results, det_scores, det_tmp_bboxes = construct_det_results(det_json_path)
    gt_results, img_ids, cocoGt, gt_tmp_bboxes = construct_gt_results(gt_json_path)
    gt, tp, fp = eval_multiprocessing(img_ids)
    eps = np.finfo(np.float32).eps
    recalls = tp / np.maximum(gt, eps)            # R = TP / number of GT
    precisions = tp / np.maximum((tp + fp), eps)  # P = TP / (TP + FP)
    print("conf_thrs:{:.3f} iou_thrs:{:.3f}, gt:{:d}, TP={:d}, FP={:d}, P={:.3f}, R={:.3f}".format(
        conf_thrs, iou_thrs, gt, tp, fp, precisions, recalls))
```

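Summary

For object detection tasks whose GT includes polygons, this article showed how to compute dataset-level precision and recall at fixed thresholds. IoU is computed on masks, following the same idea as IoU in semantic segmentation, and the complete implementation is provided for reference.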