Conv output-size calculation:

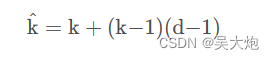

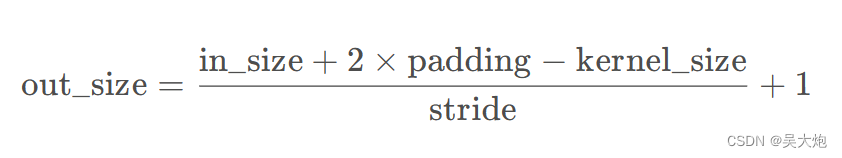

Formula:

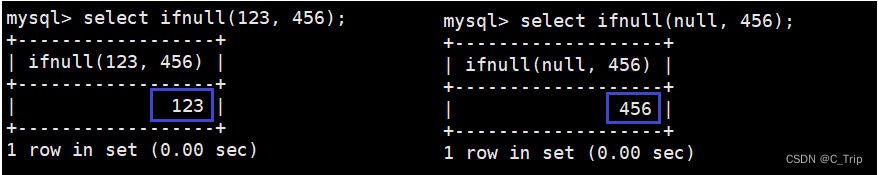
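For reference, the `Conv1d` output-length formula given in the PyTorch documentation is

$$L_{out} = \left\lfloor \frac{L_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor$$

A minimal pure-Python sketch of it follows. The helper name `conv1d_out_len` is hypothetical and not part of PyTorch. Integer floor division `//` performs the rounding down.

```python
def conv1d_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    """Hypothetical helper: Conv1d output length per the PyTorch docs formula."""
    # // rounds down, matching the "round the output size down" rule
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

# (280 + 2 - 2 - 1) / 2 = 139.5, rounded down to 139, plus 1 gives 140
print(conv1d_out_len(280, kernel_size=3, stride=2, padding=1))  # 140
# Default arguments (stride=1, padding=0, dilation=1) shrink the length by kernel_size - 1
print(conv1d_out_len(280, kernel_size=3))  # 278
```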

1. Default arguments in PyTorch, using Conv1d as an example:

```python
torch.nn.Conv1d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)
```

2. The output size of a convolution is rounded down (floor).

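3. Common parameter combinations:

1) Output length equal to the input length:

kernel_size=3, padding=1, stride=1

kernel_size=5, padding=2, stride=1

2) Output half the input length (downsampling; exactly half for even input lengths):

kernel_size=3, padding=1, stride=2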

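```python
import torch

input_data = torch.randn((64, 131, 280))  # (batch, channels, length)
print(input_data.shape)
# out: torch.Size([64, 131, 280])

# Conv1d(in_channels=131, out_channels=10, kernel_size=3, stride=2, padding=1)
model = torch.nn.Conv1d(131, 10, 3, 2, 1)
out_data = model(input_data)
print(out_data.shape)
# out: torch.Size([64, 10, 140])
```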
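The parameter combinations in item 3 can be checked against the output-length formula over a range of input lengths. This is a sketch: `conv1d_out_len` is a hypothetical helper implementing the PyTorch `Conv1d` output-length formula, not a library function. It also shows that for odd input lengths the stride-2 setting yields ceil(L/2) rather than exactly half.

```python
def conv1d_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    # Hypothetical helper: PyTorch's Conv1d output-length formula, floor via //
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

for l_in in range(1, 1001):
    # 1) Same length: kernel_size=3/padding=1 and kernel_size=5/padding=2, stride=1
    assert conv1d_out_len(l_in, 3, stride=1, padding=1) == l_in
    assert conv1d_out_len(l_in, 5, stride=1, padding=2) == l_in
    # 2) Downsampling: kernel_size=3, padding=1, stride=2 gives ceil(l_in / 2)
    assert conv1d_out_len(l_in, 3, stride=2, padding=1) == (l_in + 1) // 2

print(conv1d_out_len(280, 3, stride=2, padding=1))  # 140, as in the torch example
print(conv1d_out_len(281, 3, stride=2, padding=1))  # 141, odd lengths round up
```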

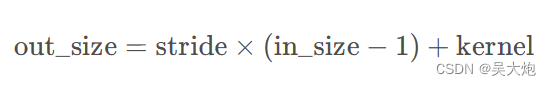

Deconvolution (transposed convolution)

Output-size calculation:

```python
torch.nn.ConvTranspose1d(in_channels, out_channels, kernel_size, stride=1, padding=0, output_padding=0, groups=1, bias=True, dilation=1, padding_mode='zeros', device=None, dtype=None)
```

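padding: in a transposed convolution, padding works the opposite way from an ordinary convolution. Instead of adding a border around the input, it trims `padding` elements from each end of the output, so the output length of a transposed convolution with padding is:

$$L_{out} = (L_{in} - 1) \times \text{stride} - 2 \times \text{padding} + \text{dilation} \times (\text{kernel\_size} - 1) + \text{output\_padding} + 1$$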
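The `ConvTranspose1d` output-length formula from the PyTorch documentation can be sketched in pure Python as follows. `conv_transpose1d_out_len` is a hypothetical helper name; its arguments mirror those of `torch.nn.ConvTranspose1d`.

```python
def conv_transpose1d_out_len(l_in, kernel_size, stride=1, padding=0,
                             output_padding=0, dilation=1):
    # Hypothetical helper following the ConvTranspose1d output-length formula.
    # padding enters with a minus sign: it trims the output instead of growing it.
    return ((l_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)

print(conv_transpose1d_out_len(140, kernel_size=3, stride=2))             # 281
print(conv_transpose1d_out_len(140, kernel_size=3, stride=2, padding=1))  # 279
```

Adding `padding=1` removes one element from each end, so the length drops by 2.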

Parameter combinations that give exactly 2× upsampling:

stride=2, kernel_size=2, padding=0

stride=2, kernel_size=4, padding=1
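Both combinations can be verified with the transposed-convolution formula: (L − 1) × 2 + 2 = 2L for the first, and (L − 1) × 2 − 2 + 3 + 1 = 2L for the second. The check below is a sketch using a hypothetical helper, `conv_transpose1d_out_len`, that implements the PyTorch `ConvTranspose1d` output-length formula.

```python
def conv_transpose1d_out_len(l_in, kernel_size, stride=1, padding=0,
                             output_padding=0, dilation=1):
    # Hypothetical helper: PyTorch's ConvTranspose1d output-length formula
    return ((l_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)

for l_in in range(1, 1001):
    # stride=2, kernel_size=2, padding=0 doubles every length
    assert conv_transpose1d_out_len(l_in, kernel_size=2, stride=2, padding=0) == 2 * l_in
    # stride=2, kernel_size=4, padding=1 also doubles every length
    assert conv_transpose1d_out_len(l_in, kernel_size=4, stride=2, padding=1) == 2 * l_in

print(conv_transpose1d_out_len(140, kernel_size=4, stride=2, padding=1))  # 280
```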

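```python
# Continues from the Conv1d example: out_data has shape (64, 10, 140)
# ConvTranspose1d(in_channels=10, out_channels=131, kernel_size=3, stride=2)
model2 = torch.nn.ConvTranspose1d(10, 131, 3, 2)
out_data2 = model2(out_data)
print(out_data2.shape)
# out: torch.Size([64, 131, 281])

# ConvTranspose1d(in_channels=10, out_channels=131, kernel_size=2, stride=2)
model3 = torch.nn.ConvTranspose1d(10, 131, 2, 2)
out_data3 = model3(out_data)
print(out_data3.shape)
# out: torch.Size([64, 131, 280])
```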
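The two results follow directly from the transposed-convolution formula: with stride 2 and no padding, the length is (140 − 1) × 2 + kernel_size, so kernel_size=3 overshoots by one while kernel_size=2 restores 280 exactly. The sketch below reproduces this with a hypothetical helper, `conv_transpose1d_out_len`. The last call is an assumed alternative that is not in the original note: keeping kernel_size=3 but setting `padding=1` and `output_padding=1` also yields 280.

```python
def conv_transpose1d_out_len(l_in, kernel_size, stride=1, padding=0,
                             output_padding=0, dilation=1):
    # Hypothetical helper: PyTorch's ConvTranspose1d output-length formula
    return ((l_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)

l_in = 140  # length produced by the Conv1d downsampling example
print(conv_transpose1d_out_len(l_in, kernel_size=3, stride=2))  # 281 = 139*2 + 3 (model2)
print(conv_transpose1d_out_len(l_in, kernel_size=2, stride=2))  # 280 = 139*2 + 2 (model3)
# Assumed alternative: kernel_size=3 with padding=1 and output_padding=1
print(conv_transpose1d_out_len(l_in, kernel_size=3, stride=2,
                               padding=1, output_padding=1))    # 280
```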

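Dilated convolution

Dilated convolution (also called atrous convolution) is an ordinary convolution with the dilation parameter changed; its default value is 1.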

```python
torch.nn.Conv1d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)
```

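If dilation is greater than 1, first compute the effective kernel size, dilation × (kernel_size − 1) + 1, and then use it as kernel_size in the output-size formula.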
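A minimal sketch of the effective-kernel-size rule, using two hypothetical helpers (`effective_kernel_size` and `conv1d_out_len`, neither of which is part of PyTorch). Plugging the effective kernel size into the formula with dilation=1 gives the same length as the full `Conv1d` formula with dilation applied.

```python
def effective_kernel_size(kernel_size, dilation):
    # Span covered by a dilated kernel: (dilation - 1) gaps between adjacent taps
    return dilation * (kernel_size - 1) + 1

def conv1d_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    # Hypothetical helper: PyTorch's Conv1d output-length formula
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

k_eff = effective_kernel_size(3, dilation=2)
print(k_eff)  # 5
# Substituting k_eff (with dilation=1) matches the full formula with dilation=2
assert conv1d_out_len(280, k_eff, padding=2) == conv1d_out_len(280, 3, padding=2, dilation=2)
print(conv1d_out_len(280, 3, padding=2, dilation=2))  # 280: padding=2 keeps the length
```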