Table of contents

- 1. Modify the demo source code directly

- 2. How to make the changes

https://github.com/DeployAI/nndeploy

https://nndeploy-zh.readthedocs.io/zh/latest/introduction/index.html

1. Modify the demo source code directly

To run YOLOv5:

onnxruntime: 115 ms

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeOnnxRuntime --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeOnnx --is_path --model_value …/…/yolov5s.onnx --input_type kInputTypeImage --input_path …/…/sample.jpg --output_path …/…/sample_output.jpg

openvino: 57 ms

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeOpenVino --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeOnnx --is_path --model_value …/…/yolov5s.onnx --input_type kInputTypeImage --input_path …/…/sample.jpg --output_path …/…/sample_output.jpg

mnn: 78 ms

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeMnn --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeMnn --is_path --model_value …/…/yolov5s.mnn --input_type kInputTypeImage --input_path …/…/sample.jpg --output_path …/…/sample_output.jpg

tensorrt: 17 ms

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeTensorRt --device_type kDeviceTypeCodeCuda:0 --model_type kModelTypeOnnx --is_path --model_value …/…/yolov5s.onnx --input_type kInputTypeImage --input_path …/…/sample.jpg --output_path …/…/sample_output.jpg

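I then modified the source code directly. With those changes, the commands below run a UNet model. The `--name` value is still NNDEPLOY_YOLOV5 because the YOLOv5 graph is reused.

Modified for UNet

tensorrt: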

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeTensorRt --device_type kDeviceTypeCodeCuda:0 --model_type kModelTypeOnnx --is_path --model_value /home/tony/nndeploy/mymodel/scripts/unet8.opt.onnx --input_type kInputTypeImage --input_path …/…/1007_01_06_40_000101.png --output_path …/…/sample_output.jpg

onnxruntime:

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeOnnxRuntime --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeOnnx --is_path --model_value /home/tony/nndeploy/mymodel/scripts/unet8.opt.onnx --input_type kInputTypeImage --input_path …/…/1007_01_06_40_000101.png --output_path …/…/sample_output.jpg

openvino:

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeOpenVino --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeOnnx --is_path --model_value /home/tony/nndeploy/mymodel/scripts/unet8.opt.onnx --input_type kInputTypeImage --input_path …/…/1007_01_06_40_000101.png --output_path …/…/sample_output.jpg

MNN:

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeMnn --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeMnn --is_path --model_value /home/tony/nndeploy/mymodel/scripts/unet8.opt.mnn --input_type kInputTypeImage --input_path …/…/1007_01_06_40_000101.png --output_path …/…/sample_output.jpg

TNN:

./install/lib/demo_nndeploy_detect --name NNDEPLOY_YOLOV5 --inference_type kInferenceTypeTnn --device_type kDeviceTypeCodeX86:0 --model_type kModelTypeTnn --is_path --model_value /home/tony/nndeploy/mymodel/scripts/unet8.sim.tnnproto,/home/tony/nndeploy/mymodel/scripts/unet8.sim.tnnmodel --input_type kInputTypeImage --input_path …/…/1007_01_06_40_000101.png --output_path …/…/sample_output.jpg

2. How to make the changes

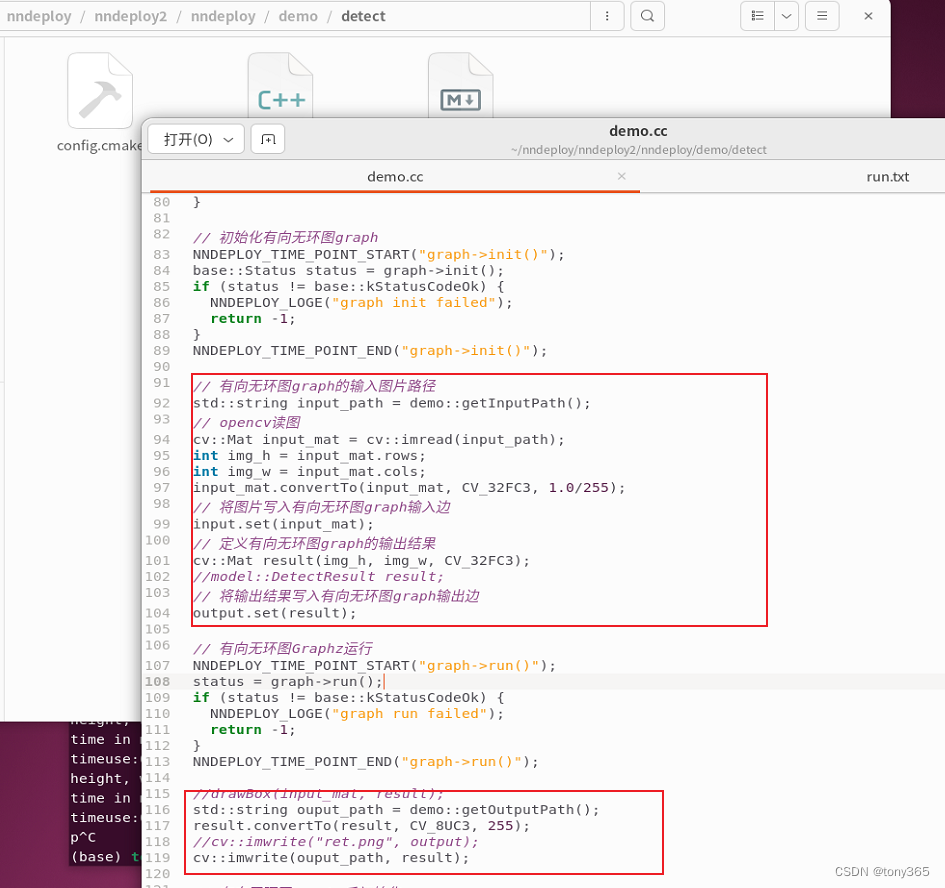

First, modify demo.cc.

The input is h,w,c float32.

The output is also h,w,c float32.

Full code:

#include "flag.h"

#include "nndeploy/base/glic_stl_include.h"

#include "nndeploy/base/time_profiler.h"

#include "nndeploy/dag/node.h"

#include "nndeploy/device/device.h"

#include "nndeploy/model/detect/yolo/yolo.h"

using namespace nndeploy;

cv::Mat drawBox(cv::Mat &cv_mat, model::DetectResult &result) {

// float w_ratio = float(cv_mat.cols) / float(640);

// float h_ratio = float(cv_mat.rows) / float(640);

float w_ratio = float(cv_mat.cols);

float h_ratio = float(cv_mat.rows);

const int CNUM = 80;

cv::RNG rng(0xFFFFFFFF);

cv::Scalar_<int> randColor[CNUM];

for (int i = 0; i < CNUM; i++)

rng.fill(randColor[i], cv::RNG::UNIFORM, 0, 256);

int i = -1;

for (auto bbox : result.bboxs_) {

std::array<float, 4> box;

box[0] = bbox.bbox_[0]; // 640.0;

box[2] = bbox.bbox_[2]; // 640.0;

box[1] = bbox.bbox_[1]; // 640.0;

box[3] = bbox.bbox_[3]; // 640.0;

box[0] *= w_ratio;

box[2] *= w_ratio;

box[1] *= h_ratio;

box[3] *= h_ratio;

int width = box[2] - box[0];

int height = box[3] - box[1];

int id = bbox.label_id_;

NNDEPLOY_LOGE("box[0]:%f, box[1]:%f, width :%d, height :%d\n", box[0],

box[1], width, height);

cv::Point p = cv::Point(box[0], box[1]);

cv::Rect rect = cv::Rect(box[0], box[1], width, height);

cv::rectangle(cv_mat, rect, randColor[id]);

std::string text = " ID:" + std::to_string(id);

cv::putText(cv_mat, text, p, cv::FONT_HERSHEY_PLAIN, 1, randColor[id]);

}

return cv_mat;

}

//

int main(int argc, char *argv[]) {

gflags::ParseCommandLineNonHelpFlags(&argc, &argv, true);

if (demo::FLAGS_usage) {

demo::showUsage();

return -1;

}

// Name of the detection model's DAG graph, for example:

// NNDEPLOY_YOLOV5/NNDEPLOY_YOLOV6/NNDEPLOY_YOLOV8

std::string name = demo::getName();

// Inference backend type, for example:

// kInferenceTypeOpenVino / kInferenceTypeTensorRt / kInferenceTypeOnnxRuntime

base::InferenceType inference_type = demo::getInferenceType();

// Inference device type, for example:

// kDeviceTypeCodeX86:0/kDeviceTypeCodeCuda:0/...

base::DeviceType device_type = demo::getDeviceType();

// Model type, for example:

// kModelTypeOnnx/kModelTypeMnn/...

base::ModelType model_type = demo::getModelType();

// Whether the model value is a path

bool is_path = demo::isPath();

// Model path(s) or model string

std::vector<std::string> model_value = demo::getModelValue();

// Input edge (packet) of the DAG graph

dag::Edge input("detect_in");

// Output edge (packet) of the DAG graph

dag::Edge output("detect_out");

// Create the DAG graph for the detection model

dag::Graph *graph =

dag::createGraph(name, inference_type, device_type, &input, &output,

model_type, is_path, model_value);

if (graph == nullptr) {

NNDEPLOY_LOGE("graph is nullptr");

return -1;

}

// Initialize the DAG graph

NNDEPLOY_TIME_POINT_START("graph->init()");

base::Status status = graph->init();

if (status != base::kStatusCodeOk) {

NNDEPLOY_LOGE("graph init failed");

return -1;

}

NNDEPLOY_TIME_POINT_END("graph->init()");

// Input image path for the DAG graph

std::string input_path = demo::getInputPath();

// Read the image with OpenCV

cv::Mat input_mat = cv::imread(input_path);

int img_h = input_mat.rows;

int img_w = input_mat.cols;

// Convert to float32 and scale to [0, 1]; the graph input is h,w,c float32

input_mat.convertTo(input_mat, CV_32FC3, 1.0/255);

// Write the image to the graph's input edge

input.set(input_mat);

// Define the graph's output result (h,w,c float32)

cv::Mat result(img_h, img_w, CV_32FC3);

//model::DetectResult result;

// Bind the output result to the graph's output edge

output.set(result);

// Run the DAG graph

NNDEPLOY_TIME_POINT_START("graph->run()");

status = graph->run();

if (status != base::kStatusCodeOk) {

NNDEPLOY_LOGE("graph run failed");

return -1;

}

NNDEPLOY_TIME_POINT_END("graph->run()");

//drawBox(input_mat, result);

std::string output_path = demo::getOutputPath();

// Scale back to 8-bit before saving

result.convertTo(result, CV_8UC3, 255);

//cv::imwrite("ret.png", output);

cv::imwrite(output_path, result);

// Deinitialize the DAG graph

NNDEPLOY_TIME_POINT_START("graph->deinit()");

status = graph->deinit();

if (status != base::kStatusCodeOk) {

NNDEPLOY_LOGE("graph deinit failed");

return -1;

}

NNDEPLOY_TIME_POINT_END("graph->deinit()");

NNDEPLOY_TIME_PROFILER_PRINT("detect time profiler");

// Destroy the DAG graph

delete graph;

NNDEPLOY_LOGE("hello world!\n");

return 0;

}
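The demo scales the 8-bit image to float32 before the graph runs and scales the float32 result back to 8-bit before saving. The sketch below shows that round trip in plain C++ without OpenCV. The helper names `toFloat01` and `toUint8` are hypothetical, and the rounding only approximates what `cv::Mat::convertTo` does; it is an illustration, not the library's implementation.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: scale 8-bit values to float32 in [0, 1],
// comparable to input_mat.convertTo(input_mat, CV_32FC3, 1.0 / 255).
std::vector<float> toFloat01(const std::vector<std::uint8_t>& src) {
  std::vector<float> dst(src.size());
  for (std::size_t i = 0; i < src.size(); ++i) {
    dst[i] = static_cast<float>(src[i]) / 255.0f;
  }
  return dst;
}

// Hypothetical helper: scale float32 back to 8-bit, rounding to the
// nearest integer and clamping to [0, 255], comparable to
// result.convertTo(result, CV_8UC3, 255).
std::vector<std::uint8_t> toUint8(const std::vector<float>& src) {
  std::vector<std::uint8_t> dst(src.size());
  for (std::size_t i = 0; i < src.size(); ++i) {
    long v = std::lround(src[i] * 255.0f);
    v = std::max(0L, std::min(255L, v));  // out-of-range values saturate
    dst[i] = static_cast<std::uint8_t>(v);
  }
  return dst;
}
```

If the model's output is not in the [0, 1] range, the clamp saturates it, which usually shows up as an all-black or all-white output image.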

Next, in

dag::Graph* createYoloV5Graph(const std::string& name,

base::InferenceType inference_type,

base::DeviceType device_type, dag::Edge* input,

dag::Edge* output, base::ModelType model_type,

bool is_path,

std::vector<std::string> model_value)

modify the pre-processing and post-processing functions.

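Pre-processing, inference, and post-processing together form one graph, which is the complete graph used in the demo.

The input and output in the demo are the input and output of that complete graph.

Pre-processing, inference, and post-processing each also have their own internal input and output. Don't confuse these with the graph-level ones.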
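To make the distinction concrete, here is a toy sketch of a three-node pipeline. `Edge`, `Node`, and `runGraph` are hypothetical stand-ins written for this illustration; they are not the nndeploy types, and the "inference" step is a placeholder that doubles each value.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-ins; these are NOT nndeploy types.
struct Edge {
  std::string name;
  std::vector<float> data;
};

struct Node {
  std::string name;
  Edge* input;
  Edge* output;
  std::function<std::vector<float>(const std::vector<float>&)> fn;
  void run() { output->data = fn(input->data); }
};

// Runs pre-processing -> inference -> post-processing.
// graph_in and graph_out are the only edges the caller (the demo) sees;
// pre_out and infer_out exist only inside the graph.
void runGraph(Edge& graph_in, Edge& graph_out) {
  Edge pre_out{"pre_out", {}};
  Edge infer_out{"infer_out", {}};

  auto identity = [](const std::vector<float>& v) { return v; };
  auto times_two = [](const std::vector<float>& v) {
    std::vector<float> out(v);
    for (float& x : out) x *= 2.0f;  // placeholder for real inference
    return out;
  };

  std::vector<Node> nodes = {
      {"preprocess", &graph_in, &pre_out, identity},
      {"infer", &pre_out, &infer_out, times_two},
      {"postprocess", &infer_out, &graph_out, identity},
  };
  for (Node& node : nodes) node.run();
}
```

In this sketch the caller only ever touches `graph_in` and `graph_out`, while each node reads and writes its own edges. The layout on an internal edge can therefore differ from the layout at the graph boundary.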

For example, if the model's inference output is c,h,w float32, then the post-processing input is c,h,w float32 data and its output is converted to h,w,c float32 data (matching the cv::Mat result(img_h, img_w, CV_32FC3); above).

base::Status YoloPostProcess::runV5V6() {

YoloPostParam* param = (YoloPostParam*)param_.get();

float score_threshold = param->score_threshold_;

int num_classes = param->num_classes_;

device::Tensor* tensor = inputs_[0]->getTensor();

// Much of this code is redundant. This step only needs the pointer to the output tensor and then converts c,h,w -> h,w,c.

float* data = (float*)tensor->getPtr();

int batch = tensor->getBatch();

int channel = tensor->getChannel();

int height = tensor->getHeight();

int width = tensor->getWidth();

NNDEPLOY_LOGE("batch:%d, channel:%d, height:%d, width:%d. (%f,%f,%f)\n", batch, channel, height, width, data[0], data[1], data[2]);

cv::Mat* dst = outputs_[0]->getCvMat();

NNDEPLOY_LOGE("mat channel:%d, height:%d, width:%d.\n", dst->channels(), dst->rows, dst->cols);

auto* img_data = (float*)dst->data;

for (int h = 0; h < height; h++)

{

for (int w = 0; w < width; w++)

{

for (int c = 0; c < 3; c++)

{

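// Destination index in the h,w,c cv::Mat (3 channels, CV_32FC3)

int dst_index = h * width * 3 + w * 3 + c;

// Source index in the c,h,w tensor

int src_index = c * width * height + h * width + w;

// if (w < 10)

// if(h < 10)

// printf("%.2f,", data[src_index]);

img_data[dst_index] = data[src_index];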

}

}

// if(h < 10)

// printf("\n");

}

return base::kStatusCodeOk;

}

Pre-processing follows the same principle.
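For pre-processing the conversion presumably runs in the opposite direction, from the h,w,c image to the c,h,w layout the model expects. That direction is an assumption based on the post-processing code above. A minimal standalone sketch of both directions, using hypothetical helpers `hwcToChw` and `chwToHwc` on plain float buffers rather than nndeploy tensors:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: interleaved h,w,c -> planar c,h,w.
std::vector<float> hwcToChw(const std::vector<float>& src, int height,
                            int width, int channels) {
  assert(src.size() == static_cast<std::size_t>(height) * width * channels);
  std::vector<float> dst(src.size());
  for (int h = 0; h < height; ++h) {
    for (int w = 0; w < width; ++w) {
      for (int c = 0; c < channels; ++c) {
        std::size_t hwc = (static_cast<std::size_t>(h) * width + w) * channels + c;
        std::size_t chw = (static_cast<std::size_t>(c) * height + h) * width + w;
        dst[chw] = src[hwc];
      }
    }
  }
  return dst;
}

// Hypothetical helper: planar c,h,w -> interleaved h,w,c
// (the same index mapping as the post-processing loop above).
std::vector<float> chwToHwc(const std::vector<float>& src, int height,
                            int width, int channels) {
  assert(src.size() == static_cast<std::size_t>(height) * width * channels);
  std::vector<float> dst(src.size());
  for (int h = 0; h < height; ++h) {
    for (int w = 0; w < width; ++w) {
      for (int c = 0; c < channels; ++c) {
        std::size_t hwc = (static_cast<std::size_t>(h) * width + w) * channels + c;
        std::size_t chw = (static_cast<std::size_t>(c) * height + h) * width + w;
        dst[hwc] = src[chw];
      }
    }
  }
  return dst;
}
```

Applying one helper and then the other returns the original buffer, which is a quick way to check that the index arithmetic is right before wiring it into the real pre-processing node.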