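LoRA fine-tuning template

- 1. Version 1: LoRA fine-tuning with the peft package

- (1) Set hyperparameters

- Option 1: set them in code (convenient for debugging)

- Option 2: specify them in a .sh file

- (2) Load the dataset

- I. Original format of the source .jsonl or .json file

- (3) Load the base model

- (4) Memory optimization and gradient settings

- (5) Load the LoraConfig and set up the peft model

- (6) Train the model

- (7) Save the model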

1. Version 1: LoRA fine-tuning with the peft package

Credit: the LLM-Tuning project on GitHub, which integrates LoRA fine-tuning for InternLM, Baichuan, Chinese-LLaMA-Alpaca-2, and ChatGLM.

from transformers.integrations import TensorBoardCallback
from torch.utils.tensorboard import SummaryWriter
from transformers import TrainingArguments
from transformers import Trainer, HfArgumentParser
from transformers import AutoTokenizer, AutoModel, AutoConfig, DataCollatorForLanguageModeling
from accelerate import init_empty_weights
import torch
import torch.nn as nn
from peft import get_peft_model, LoraConfig, TaskType, PeftModel
from dataclasses import dataclass, field
import datasets
import os
from pprint import pprint as print

(1) Set hyperparameters

Option 1: set them in code (convenient for debugging)

@dataclass
class FinetuneArguments:
    model_version: str = field(default="chat-7b")
    tokenized_dataset: str = field(default=" ")  # TODO: folder of the tokenized dataset. It is model-specific; do not share it across models!
    # tokenized_train_dataset: str = field(default=" ")  # folder of the tokenized training set
    # tokenized_eval_dataset: str = field(default=" ")  # folder of the tokenized evaluation set
    train_size: int = field(default=1000)  # number of training samples
    eval_size: int = field(default=1000)  # number of evaluation samples
    model_path: str = field(default=" ")
    lora_rank: int = field(default=8)
    previous_lora_weights: str = field(default=None)  # path to earlier LoRA weights, if training should continue from them
    no_prompt_loss: int = field(default=0)  # by default the prompt takes part in the loss

training_args = TrainingArguments(
    per_device_train_batch_size=10,
    gradient_accumulation_steps=1,
    warmup_steps=10,  # number of warmup steps for the learning rate scheduler
    max_steps=60000,
    save_steps=200,
    save_total_limit=3,
    learning_rate=1e-4,
    remove_unused_columns=False,
    logging_steps=50,
    weight_decay=0.01,  # strength of weight decay
    # output_dir='weights/chatglm2-lora-tuning'
    output_dir='weights/chatglm2-lora-tuning-test'
)

finetune_args = FinetuneArguments(tokenized_dataset="simple_math_4op_chatglm3", lora_rank=8)
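To get a feel for what these settings imply, the short sketch below does the arithmetic in plain Python. The values are copied from the configuration above; `num_devices = 1` is an assumption (one data-parallel worker), and the helper name `training_budget` is hypothetical.

```python
# Values copied from the TrainingArguments / FinetuneArguments above.
per_device_train_batch_size = 10
gradient_accumulation_steps = 1
max_steps = 60000
save_steps = 200
save_total_limit = 3
train_size = 1000
num_devices = 1  # assumption: a single data-parallel worker


def training_budget(batch, accum, steps, devices, n_train):
    """Return (samples per optimizer step, total samples seen, approximate epochs)."""
    per_step = batch * accum * devices
    total = per_step * steps
    return per_step, total, total / n_train


per_step, total, epochs = training_budget(
    per_device_train_batch_size,
    gradient_accumulation_steps,
    max_steps,
    num_devices,
    train_size,
)
checkpoints_written = max_steps // save_steps  # one checkpoint every save_steps steps
checkpoints_kept = min(save_total_limit, checkpoints_written)

print(per_step, total, epochs, checkpoints_written, checkpoints_kept)
```

Under these assumptions the run would pass over the 1000 training samples several hundred times, so `max_steps` and `train_size` are worth checking against each other before launching a long job.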

Option 2: specify them in a .sh file

If you prefer launching from a .sh file, the parameters above do not have to be configured in code; write them directly in xx.sh:

CUDA_VISIBLE_DEVICES=2,3 python your_main.py \
    --tokenized_dataset simple_math_4op \
    --lora_rank 8 \
    --per_device_train_batch_size 10 \
    --gradient_accumulation_steps 1 \
    --max_steps 100000 \
    --save_steps 200 \
    --save_total_limit 2 \
    --learning_rate 1e-4 \
    --fp16 \
    --remove_unused_columns false \
    --logging_steps 50 \
    --output_dir weights/simple_math_4op

Corresponding code:

finetune_args, training_args = HfArgumentParser((FinetuneArguments, TrainingArguments)).parse_args_into_dataclasses()

(2) Load the dataset

# load the tokenized dataset
dataset = datasets.load_from_disk('data/tokenized_data/' + finetune_args.tokenized_dataset)
# dataset = dataset.select(range(10000))
print(f"\n{len(dataset)=}\n")

# take N samples each for training and evaluation
train_dataset = dataset.select(range(finetune_args.train_size)) if finetune_args.train_size else dataset  # first N samples for training
eval_dataset = dataset.select(list(range(len(dataset)))[-finetune_args.eval_size:]) if finetune_args.eval_size else dataset  # last N samples for evaluation
# print(train_dataset[0])
# print(eval_dataset[0])

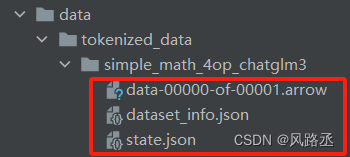

Note: datasets.load_from_disk(tokenized_data_path) loads data that has already been tokenized. Running tokenize_dataset_rows.py converts the raw data into this tokenized form.
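Because the training set is taken from the front of the dataset and the evaluation set from the back, the two can overlap when the dataset is smaller than train_size + eval_size. The sketch below mirrors that slicing on plain index lists; the function names are hypothetical and no `datasets` object is involved.

```python
def split_indices(n_total, train_size, eval_size):
    """Mirror the slicing used above: first N rows for training, last N rows for evaluation."""
    all_idx = list(range(n_total))
    train_idx = list(range(train_size)) if train_size else all_idx
    eval_idx = all_idx[-eval_size:] if eval_size else all_idx
    return train_idx, eval_idx


def overlap(n_total, train_size, eval_size):
    """Return the sorted row indices that land in both splits."""
    train_idx, eval_idx = split_indices(n_total, train_size, eval_size)
    return sorted(set(train_idx) & set(eval_idx))


# A 1500-row dataset with the default sizes of 1000 each shares rows 500..999.
shared_small = overlap(1500, 1000, 1000)
# A 5000-row dataset keeps the two splits disjoint.
shared_large = overlap(5000, 1000, 1000)
print(len(shared_small), len(shared_large))
```

A check of this kind before training helps keep evaluation loss from being measured on rows the model has already seen.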

I. The source .jsonl or .json file has the following original format:

{
    "instruction": "Instruction: What are the three primary colors?\nAnswer: ",
    "output": "The three primary colors are red, blue, and yellow."
}

{
    "instruction": "Instruction: Identify the odd one out from the following array of words.",
    "input": "Fever, shiver, wrinkle\nAnswer: ",
    "output": "Wrinkle is the odd one out since it is the only word that does not describe a physical sensation."
}

II. File format required for tokenization:

{"context": "Instruction: What are the three primary colors?\nAnswer: ", "target": "The three primary colors are red, blue, and yellow."}

{"context": "Instruction: Identify the odd one out from the following array of words.\nInput: Fever, shiver, wrinkle\nAnswer: ", "target": "Wrinkle is the odd one out since it is the only word that does not describe a physical sensation."}

tokenize_dataset_rows.py

import argparse
import json
from tqdm import tqdm
import datasets
import transformers
from transformers import AutoTokenizer, LlamaTokenizer

# path of the base model
# model_path = "D:\\openmodel1\\chatglm3-6b"
model_path = "D:\\openmodel1\\internlm-chat-7b"

parser = argparse.ArgumentParser()
parser.add_argument("--data_path", type=str, help="path of the raw data")
parser.add_argument("--model_checkpoint", type=str, help="checkpoint, like `THUDM/chatglm-6b`", default=model_path)
parser.add_argument("--input_file", type=str, help="data file used for tokenization; every line is a JSON object with one input and one output",
                    default="alpaca_data-cf.jsonl")
parser.add_argument("--prompt_key", type=str, default="context", help="name of the instruction input field in your jsonl file")
parser.add_argument("--target_key", type=str, default="target", help="name of the instruction output field in your jsonl file")
parser.add_argument("--save_name", type=str, default="simple_math_4op_internlm", help="where to store the tokenized dataset")
parser.add_argument("--max_seq_length", type=int, default=2000)
# store_true instead of type=bool: argparse's type=bool turns any non-empty string, including "False", into True
parser.add_argument("--skip_overlength", action="store_true")
args = parser.parse_args()
model_checkpoint = args.model_checkpoint

def preprocess(tokenizer, config, example, max_seq_length, prompt_key, target_key):
    prompt = example[prompt_key]
    target = example[target_key]
    prompt_ids = tokenizer.encode(prompt, max_length=max_seq_length, truncation=True)
    target_ids = tokenizer.encode(target, max_length=max_seq_length, truncation=True, add_special_tokens=False)
    # The instruction's input and output are concatenated and trained with the
    # classic causal-LM next-word-prediction objective
    input_ids = prompt_ids + target_ids + [config.eos_token_id]
    return {"input_ids": input_ids, "seq_len": len(prompt_ids)}

def read_jsonl(path, max_seq_length, prompt_key, target_key, skip_overlength=False):
    if 'llama' in model_checkpoint.lower() or 'alpaca' in model_checkpoint.lower():
        tokenizer = LlamaTokenizer.from_pretrained(
            model_checkpoint, trust_remote_code=True)
    else:
        tokenizer = AutoTokenizer.from_pretrained(
            model_checkpoint, trust_remote_code=True)
    config = transformers.AutoConfig.from_pretrained(
        model_checkpoint, trust_remote_code=True, device_map='auto')
    with open(path, "r") as f:
        for line in tqdm(f.readlines()):
            example = json.loads(line)
            feature = preprocess(tokenizer, config, example, max_seq_length, prompt_key, target_key)
            if skip_overlength and len(feature["input_ids"]) > max_seq_length:
                continue
            feature["input_ids"] = feature["input_ids"][:max_seq_length]
            yield feature

def format_example(example: dict) -> dict:
    context = f"Instruction: {example['instruction']}\n"
    if example.get("input"):
        context += f"Input: {example['input']}\n"
    context += "Answer: "
    target = example["output"]
    return {"context": context, "target": target}

# The intermediate jsonl file lives under data/, the tokenized output under data/tokenized_data
input_file_path = f'data/{args.input_file}'
save_path = f"data/tokenized_data/{args.save_name}"

# Convert the raw records (instruction/input/output) into context/target lines
with open(args.data_path) as f:
    examples = json.load(f)

# Write to the same path that read_jsonl reads from below
with open(input_file_path, 'w') as f:
    for example in tqdm(examples, desc="formatting.."):
        f.write(json.dumps(format_example(example)) + '\n')

dataset = datasets.Dataset.from_generator(
    lambda: read_jsonl(input_file_path, args.max_seq_length, args.prompt_key, args.target_key, args.skip_overlength)
)
dataset.save_to_disk(save_path)
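The shape of one tokenized row may be easier to see on a toy example. The sketch below reproduces the concatenation and the overlength handling of the script with a stand-in tokenizer; `toy_encode`, `EOS_ID`, and the id values are hypothetical and are not produced by any real tokenizer.

```python
EOS_ID = 2  # hypothetical end-of-sequence id


def toy_encode(text):
    """Stand-in tokenizer: one integer id per whitespace-separated word."""
    return [10 + len(word) for word in text.split()]


def toy_preprocess(prompt, target, max_seq_length):
    """Build input_ids = prompt + target + EOS and record the prompt length."""
    prompt_ids = toy_encode(prompt)[:max_seq_length]
    target_ids = toy_encode(target)[:max_seq_length]
    input_ids = prompt_ids + target_ids + [EOS_ID]
    return {"input_ids": input_ids, "seq_len": len(prompt_ids)}


def keep_or_truncate(feature, max_seq_length, skip_overlength):
    """Drop overlong rows when skip_overlength is set, otherwise cut them to max_seq_length."""
    if skip_overlength and len(feature["input_ids"]) > max_seq_length:
        return None
    return {**feature, "input_ids": feature["input_ids"][:max_seq_length]}


feature = toy_preprocess("Instruction: add 1 and 2 Answer:", "3", max_seq_length=2000)
print(feature)

# With a tiny limit, truncation removes the target and the EOS token entirely.
truncated = keep_or_truncate(feature, max_seq_length=5, skip_overlength=False)
skipped = keep_or_truncate(feature, max_seq_length=5, skip_overlength=True)
print(truncated, skipped)
```

The truncated row shows why skipping overlong samples can be the safer choice: once the sequence is cut, `seq_len` can exceed the number of remaining tokens and nothing of the answer is left to learn from.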

Directory generated after a successful run:

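(3) Load the base model

- print(model): print the model in order to (1) inspect its structure and (2) decide which layers LoRA should target later (usually the attention-related modules).

- load_in_8bit=False: whether to use 8-bit quantization. If loading runs out of memory, try setting it to True.

print('loading init model...')
model = AutoModel.from_pretrained(
    model_path, trust_remote_code=True,
    load_in_8bit=False,  # quantization: if loading runs out of memory, try setting this to True
    device_map="auto"  # layers are automatically distributed across the available GPUs
    # device_map={'':torch.cuda.current_device()}
)
print(model.hf_device_map)
print(f'memory_allocated {torch.cuda.memory_allocated()}')
print(model)  # print the model to (1) inspect its structure and (2) pick the LoRA target layers (usually attention-related modules)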

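If multi-GPU training fails while computing the loss:

After setting device_map="auto":

- with ChatGLM 1.0, lm_head is automatically placed on the same device as input_layer;

- with ChatGLM 2.0, there is no lm_head but an output_layer, which may end up on a different device. Computing the loss then fails with RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:2 and cuda:0!

(1) One fix is to set device_map={'': torch.cuda.current_device()} and use data parallelism, but then the batch size has to be very small and GPU memory use is high.

(2) Another fix is to manually place output_layer on the same device as the input.

Note that this loads the model twice: load it once, adjust the device_map, then delete the old model before loading again: https://github.com/pytorch/pytorch/issues/37250#issuecomment-1622972872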

if torch.cuda.device_count() > 1:
    model.hf_device_map['transformer.output_layer'] = model.hf_device_map['transformer.embedding']
    new_hf_device_map = model.hf_device_map
    model.cpu()
    del model
    torch.cuda.empty_cache()
    print(f'memory_allocated {torch.cuda.memory_allocated()}')
    print('loading real model...')
    model = AutoModel.from_pretrained(model_path, trust_remote_code=True, device_map=new_hf_device_map)
    print(model.hf_device_map)
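The device-map adjustment is ordinary dictionary editing, which the sketch below shows on a toy map. The two key names come from the code above; the other entries, the device numbers, and the helper `pin_output_to_embedding` are hypothetical, and a real `hf_device_map` will list whatever modules the loaded model has.

```python
def pin_output_to_embedding(device_map,
                            output_key="transformer.output_layer",
                            embedding_key="transformer.embedding"):
    """Return a copy of the map in which the output layer shares the embedding's device."""
    fixed = dict(device_map)
    fixed[output_key] = fixed[embedding_key]
    return fixed


# Toy map: module name -> GPU index, as an automatic placement might produce it.
toy_map = {
    "transformer.embedding": 0,
    "transformer.encoder.layers.0": 0,
    "transformer.encoder.layers.1": 1,
    "transformer.output_layer": 1,
}

fixed_map = pin_output_to_embedding(toy_map)
print(fixed_map)
```

Working on a copy keeps the original placement available for comparison; the corrected map is what would be passed as device_map when the model is loaded the second time.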

(4) Memory optimization and gradient settings

"""
.gradient_checkpointing_enable()
.enable_input_require_grads()
.is_parallelizable
All three are methods/attributes of transformers models (see transformers/modeling_utils.py)
"""
model.gradient_checkpointing_enable()
# note: use gradient checkpointing to save memory at the expense of a slower backward pass.
model.enable_input_require_grads()
# note: enables the gradients for the input embeddings. This is useful for fine-tuning adapter weights while keeping the model weights fixed.
# See https://github.com/huggingface/transformers/blob/ee88ae59940fd4b2c8fc119373143d7a1175c651/src/transformers/modeling_utils.py#L1190

(5) Load the LoraConfig and set up the peft model

# setup peft
if finetune_args.previous_lora_weights is None:
    peft_config = LoraConfig(
        task_type=TaskType.CAUSAL_LM,
        inference_mode=False,
        r=finetune_args.lora_rank,
        lora_alpha=32,
        lora_dropout=0.1,
        target_modules=["q_proj", "k_proj", "v_proj"]  # print the model and look for the attention-related modules
    )
    model = get_peft_model(model, peft_config)
else:
    # previous_lora_weights is set, so training continues from the earlier LoRA weights
    model = PeftModel.from_pretrained(model, finetune_args.previous_lora_weights)
    # see: https://github.com/huggingface/peft/issues/184
    for name, param in model.named_parameters():
        if 'lora' in name or 'Lora' in name:
            param.requires_grad = True
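To see what r and lora_alpha mean in numbers, recall that LoRA adds two small matrices to a linear layer (A of shape r x d_in and B of shape d_out x r) and scales their product by lora_alpha / r. The sketch below does the arithmetic for a single projection; the hidden size of 4096 is an assumption chosen for illustration, and the function names are hypothetical.

```python
def lora_param_count(d_in, d_out, r):
    """Trainable parameters LoRA adds to one d_out x d_in linear layer (A: r x d_in, B: d_out x r)."""
    return r * d_in + d_out * r


def lora_scaling(lora_alpha, r):
    """Factor applied to the low-rank update B @ A before it is added to the frozen weight."""
    return lora_alpha / r


hidden = 4096        # assumption: illustrative hidden size, not read from any model
r = 8                # lora_rank from the configuration above
lora_alpha = 32      # lora_alpha from the configuration above

full_params = hidden * hidden
added_params = lora_param_count(hidden, hidden, r)
ratio = added_params / full_params
scaling = lora_scaling(lora_alpha, r)

print(full_params, added_params, ratio, scaling)
```

Under these assumptions each targeted projection trains well under one percent of the parameters a full update would touch, which is why only the small adapter needs to be saved after training.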

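(6) Train the model

# Assumed setup, not shown in the original notes: the base model's tokenizer and a TensorBoard writer
tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
writer = SummaryWriter()

# choose the data collator; customize it as needed
if finetune_args.no_prompt_loss:
    print("*** If you see this message, ***")
    print("*** it means: Prompt is not calculated in loss. ***")
    data_collator = my_data_collator
else:
    data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

# start training
trainer = ModifiedTrainer(  # the plain Trainer class can be used here as well
    model=model,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    args=training_args,
    callbacks=[TensorBoardCallback(writer)],
    data_collator=data_collator,
)
trainer.train()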

Note 1: ModifiedTrainer in the code above only simplifies the Trainer implementation and does not change the training logic, so the class is optional.

class ModifiedTrainer(Trainer):
    # **kwargs absorbs extra arguments that newer transformers versions pass to compute_loss
    def compute_loss(self, model, inputs, return_outputs=False, **kwargs):
        outputs = model(
            input_ids=inputs["input_ids"],
            labels=inputs["labels"],
        )
        loss = outputs.loss
        return (loss, outputs) if return_outputs else loss

    def save_model(self, output_dir=None, _internal_call=False):
        # The model handed to the Trainer is actually a PeftModel, so save_pretrained
        # uses PeftModel's own saving method and stores only the LoRA weights
        self.model.save_pretrained(output_dir)

Note 2: my_data_collator, a data collator that can be customized as needed

# This collator largely follows https://github.com/mymusise/ChatGLM-Tuning/blob/master/finetune.py
# It sets the labels of the prompt part to -100 so that they are excluded from the loss during training
# Alternatively, DataCollatorForLanguageModeling can be used directly, which includes the prompt in the loss.
# Which of the two works better is not yet known; discussion is welcome in the issues or discussions.
def my_data_collator(features: list) -> dict:
    """
    Masks out the prompt part so that only the output part contributes to the loss
    """
    len_ids = [len(feature["input_ids"]) for feature in features]
    longest = max(len_ids)
    input_ids = []
    labels_list = []
    for ids_l, feature in sorted(zip(len_ids, features), key=lambda x: -x[0]):
        ids = feature["input_ids"]  # ids = prompt + target, at most max_seq_length tokens
        seq_len = feature["seq_len"]  # prompt length
        labels = (
            [-100] * (seq_len - 1) + ids[(seq_len - 1):] + [-100] * (longest - ids_l)
        )
        ids = ids + [tokenizer.pad_token_id] * (longest - ids_l)
        _ids = torch.LongTensor(ids)
        labels_list.append(torch.LongTensor(labels))
        input_ids.append(_ids)
    input_ids = torch.stack(input_ids)
    labels = torch.stack(labels_list)
    return {
        "input_ids": input_ids,
        "labels": labels,
    }
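The label construction in the collator is easy to misread, so here is the same expression applied to one toy sequence with plain lists. `PAD_ID`, the token ids, and the helper `mask_prompt` are hypothetical; -100 is the index that PyTorch's cross-entropy loss ignores by default.

```python
PAD_ID = 0      # hypothetical padding id
IGNORE = -100   # label value skipped by the loss


def mask_prompt(ids, seq_len, longest, pad_id=PAD_ID):
    """Pad ids to `longest` and build labels that hide the prompt and the padding."""
    pad = longest - len(ids)
    labels = [IGNORE] * (seq_len - 1) + ids[seq_len - 1:] + [IGNORE] * pad
    padded = ids + [pad_id] * pad
    return padded, labels


# 3 prompt tokens (11, 12, 13), 2 target tokens (21, 22), then EOS (2).
ids = [11, 12, 13, 21, 22, 2]
padded, labels = mask_prompt(ids, seq_len=3, longest=8)

print(padded)
print(labels)
```

Note that the expression masks only the first seq_len - 1 positions, so the last prompt token (13 here) keeps its label; whether that is wanted depends on how the model's tokenizer ends the prompt.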

(7) Save the model

# save model
model.save_pretrained(training_args.output_dir)