1.VOC数据集

LabelImg是一款广泛应用于图像标注的开源工具,主要用于构建目标检测模型所需的数据集。Visual Object Classes(VOC)数据集作为一种常见的目标检测数据集,通过labelimg工具在图像中标注边界框和类别标签,为训练模型提供了必要的注解信息。VOC数据集源于对PASCAL挑战赛的贡献,涵盖多个物体类别,成为目标检测领域的重要基准之一,推动着算法性能的不断提升。

使用labelimg标注或者其他VOC标注工具标注后,会得到两个文件夹,如下:

Annotations ------->>> 存放.xml标注信息文件

JPEGImages ------->>> 存放图片文件

2.划分VOC数据集

如下代码是按照训练集:验证集 = 8:2来划分的,会找出没有对应.xml的图片文件,且划分的时候支持JPEGImages文件夹下有如下图片格式:

['.jpg', '.png', '.gif', '.bmp', '.tiff', '.jpeg', '.webp', '.svg', '.psd', '.cr2', '.nef', '.dng']

整体代码为:

import os

import random

image_extensions = ['.jpg', '.png', '.gif', '.bmp', '.tiff', '.jpeg', '.webp', '.svg', '.psd', '.cr2', '.nef', '.dng']

def split_voc_dataset(dataset_dir, train_ratio, val_ratio):

if not (0 < train_ratio + val_ratio <= 1):

print("Invalid ratio values. They should sum up to 1.")

return

annotations_dir = os.path.join(dataset_dir, 'Annotations')

images_dir = os.path.join(dataset_dir, 'JPEGImages')

output_dir = os.path.join(dataset_dir, 'ImageSets/Main')

if not os.path.exists(output_dir):

os.makedirs(output_dir)

dict_info = dict()

# List all the image files in the JPEGImages directory

for file in os.listdir(images_dir):

if any(ext in file for ext in image_extensions):

jpg_files, endwith = os.path.splitext(file)

dict_info[jpg_files] = endwith

# List all the XML files in the Annotations directory

xml_files = [file for file in os.listdir(annotations_dir) if file.endswith('.xml')]

random.shuffle(xml_files)

num_samples = len(xml_files)

num_train = int(num_samples * train_ratio)

num_val = int(num_samples * val_ratio)

train_xml_files = xml_files[:num_train]

val_xml_files = xml_files[num_train:num_train + num_val]

with open(os.path.join(output_dir, 'train_list.txt'), 'w') as train_file:

for xml_file in train_xml_files:

image_name = os.path.splitext(xml_file)[0]

if image_name in dict_info:

image_path = os.path.join('JPEGImages', image_name + dict_info[image_name])

annotation_path = os.path.join('Annotations', xml_file)

train_file.write(f'{image_path} {annotation_path}\n')

else:

print(f"没有找到图片 {os.path.join(images_dir, image_name)}")

with open(os.path.join(output_dir, 'val_list.txt'), 'w') as val_file:

for xml_file in val_xml_files:

image_name = os.path.splitext(xml_file)[0]

if image_name in dict_info:

image_path = os.path.join('JPEGImages', image_name + dict_info[image_name])

annotation_path = os.path.join('Annotations', xml_file)

val_file.write(f'{image_path} {annotation_path}\n')

else:

print(f"没有找到图片 {os.path.join(images_dir, image_name)}")

labels = set()

for xml_file in xml_files:

annotation_path = os.path.join(annotations_dir, xml_file)

with open(annotation_path, 'r') as f:

lines = f.readlines()

for line in lines:

if '<name>' in line:

label = line.strip().replace('<name>', '').replace('</name>', '')

labels.add(label)

with open(os.path.join(output_dir, 'labels.txt'), 'w') as labels_file:

for label in labels:

labels_file.write(f'{label}\n')

if __name__ == "__main__":

dataset_dir = 'BirdNest/'

train_ratio = 0.8 # Adjust the train-validation split ratio as needed

val_ratio = 0.2

split_voc_dataset(dataset_dir, train_ratio, val_ratio)

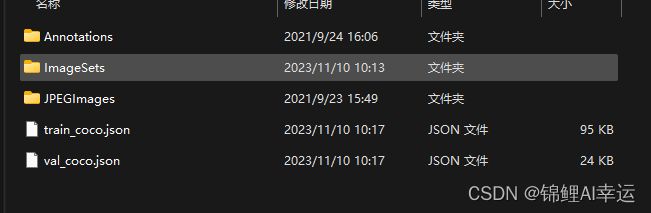

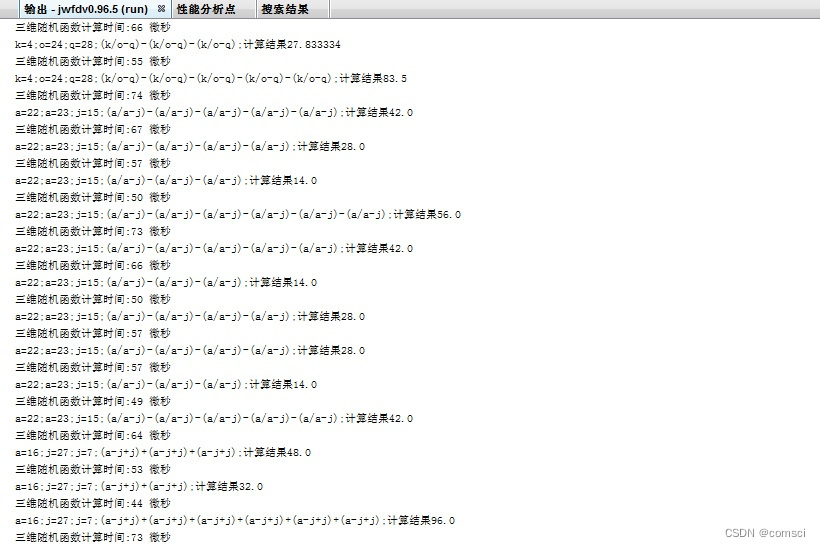

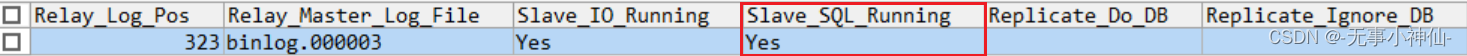

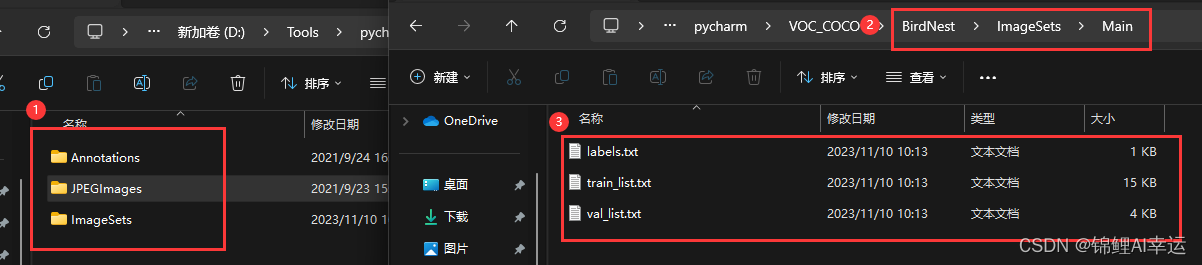

划分好后的截图:

3.VOC转COCO格式

目前很多框架大多支持的是COCO格式,因为存放与使用起来方便,采用了json文件来代替xml文件。

import json

import os

from xml.etree import ElementTree as ET

def parse_xml(dataset_dir, xml_file):

xml_path = os.path.join(dataset_dir, xml_file)

tree = ET.parse(xml_path)

root = tree.getroot()

objects = root.findall('object')

annotations = []

for obj in objects:

bbox = obj.find('bndbox')

xmin = int(bbox.find('xmin').text)

ymin = int(bbox.find('ymin').text)

xmax = int(bbox.find('xmax').text)

ymax = int(bbox.find('ymax').text)

# Extract label from XML annotation

label = obj.find('name').text

if not label:

print(f"Label not found in XML annotation. Skipping annotation.")

continue

annotations.append({

'xmin': xmin,

'ymin': ymin,

'xmax': xmax,

'ymax': ymax,

'label': label

})

return annotations

def convert_to_coco_format(image_list_file, annotations_dir, output_json_file, dataset_dir):

images = []

annotations = []

categories = []

# Load labels

with open(os.path.join(os.path.dirname(image_list_file), 'labels.txt'), 'r') as labels_file:

label_lines = labels_file.readlines()

categories = [{'id': i + 1, 'name': label.strip()} for i, label in enumerate(label_lines)]

# Load image list file

with open(image_list_file, 'r') as image_list:

image_lines = image_list.readlines()

for i, line in enumerate(image_lines):

image_path, annotation_path = line.strip().split(' ')

image_id = i + 1

image_filename = os.path.basename(image_path)

images.append({

'id': image_id,

'file_name': image_filename,

'height': 0, # You need to fill in the actual height of the image

'width': 0, # You need to fill in the actual width of the image

'license': None,

'flickr_url': None,

'coco_url': None,

'date_captured': None

})

# Load annotations from XML files

xml_annotations = parse_xml(dataset_dir, annotation_path)

for xml_annotation in xml_annotations:

label = xml_annotation['label']

category_id = next((cat['id'] for cat in categories if cat['name'] == label), None)

if category_id is None:

print(f"Label '{label}' not found in categories. Skipping annotation.")

continue

bbox = {

'xmin': xml_annotation['xmin'],

'ymin': xml_annotation['ymin'],

'xmax': xml_annotation['xmax'],

'ymax': xml_annotation['ymax']

}

annotations.append({

'id': len(annotations) + 1,

'image_id': image_id,

'category_id': category_id,

'bbox': [bbox['xmin'], bbox['ymin'], bbox['xmax'] - bbox['xmin'], bbox['ymax'] - bbox['ymin']],

'area': (bbox['xmax'] - bbox['xmin']) * (bbox['ymax'] - bbox['ymin']),

'segmentation': [],

'iscrowd': 0

})

coco_data = {

'images': images,

'annotations': annotations,

'categories': categories

}

with open(output_json_file, 'w') as json_file:

json.dump(coco_data, json_file, indent=4)

if __name__ == "__main__":

# 根据需要调整路径

dataset_dir = 'BirdNest/'

image_sets_dir = 'BirdNest/ImageSets/Main/'

train_list_file = os.path.join(image_sets_dir, 'train_list.txt')

val_list_file = os.path.join(image_sets_dir, 'val_list.txt')

output_train_json_file = os.path.join(dataset_dir, 'train_coco.json')

output_val_json_file = os.path.join(dataset_dir, 'val_coco.json')

convert_to_coco_format(train_list_file, image_sets_dir, output_train_json_file, dataset_dir)

convert_to_coco_format(val_list_file, image_sets_dir, output_val_json_file, dataset_dir)

print("The json file has been successfully generated!!!")

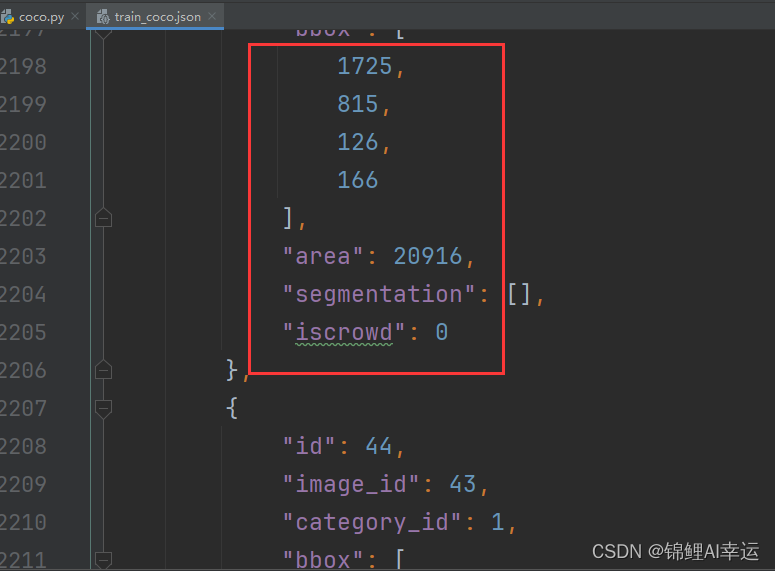

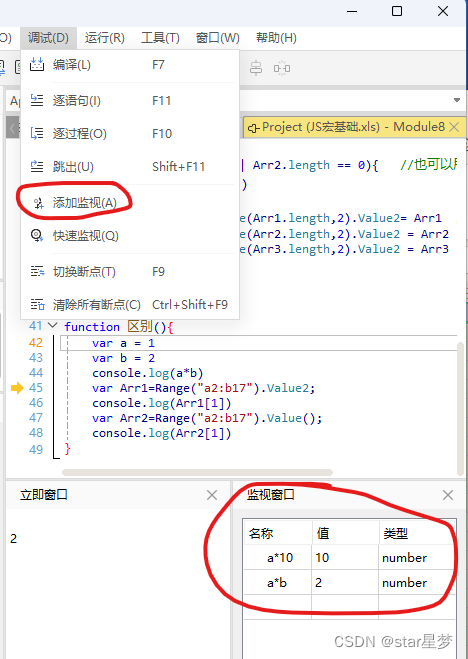

转COCO格式成功截图: