k8s resource monitoring

Resource limits

Upload the stress image to the registry

[root@k8s2 limit]# vim limit.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 100Mi

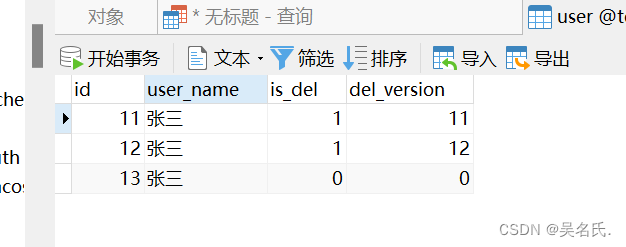
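This pod is meant to fail. stress is asked to allocate 200M (--vm-bytes) while limits.memory is only 100Mi, so the kernel OOM-kills the container (status OOMKilled) and the pod keeps restarting. The 200M is parsed by stress itself, not by Kubernetes, but whether it means 200 × 10^6 or 200 × 2^20 bytes, it is well over 100Mi. The Python sketch below shows the Kubernetes unit convention that often causes confusion (Mi is 2^20 bytes, M is 10^6 bytes). to_bytes and exceeds_limit are hypothetical helpers that handle only a few suffixes; this is not the real Kubernetes quantity parser.

```python
# Hypothetical helpers illustrating Kubernetes memory units.
# Assumption: only a few suffixes are handled; the real parser supports more.

SUFFIXES = {
    "Ki": 1024,
    "Mi": 1024 ** 2,
    "Gi": 1024 ** 3,
    "k": 1000,
    "M": 1000 ** 2,
    "G": 1000 ** 3,
}


def to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '100Mi' or '200M' to bytes."""
    # Check two-character binary suffixes before one-character decimal ones.
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * SUFFIXES[suffix]
    return int(quantity)  # plain number of bytes


def exceeds_limit(allocated: str, limit: str) -> bool:
    """True if the allocation is larger than the container's memory limit."""
    return to_bytes(allocated) > to_bytes(limit)


if __name__ == "__main__":
    # 200M (decimal reading) versus limits.memory: 100Mi from limit.yaml
    print(to_bytes("200M"), to_bytes("100Mi"), exceeds_limit("200M", "100Mi"))
```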

LimitRange

[root@k8s2 limit]# cat range.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container

[root@k8s2 limit]# kubectl apply -f range.yaml

[root@k8s2 limit]# kubectl describe limitrange

Pods created without their own resource settings get the LimitRange defaults added automatically:

[root@k8s2 limit]# kubectl run demo --image nginx

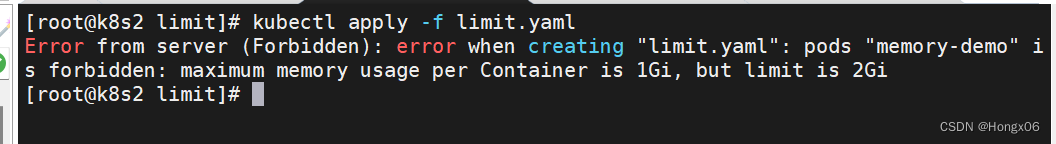

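A pod that sets its own requests and limits must still stay within the min/max range defined by the LimitRange; otherwise it is rejected.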
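The defaulting and range check can be sketched in Python. This is a simplified model of the LimitRange in range.yaml, not the real admission plugin: CPU is in millicores, memory is in MiB, and apply_limit_range is a hypothetical name. It follows the documented behaviour that a container which sets a limit but no request gets a request equal to that limit, not defaultRequest.

```python
# Simplified model of the LimitRange in range.yaml (hypothetical helper,
# not the real LimitRanger admission plugin).
# Units: CPU in millicores (0.5 -> 500), memory in MiB (1Gi -> 1024).
from typing import Dict, Optional, Tuple

Resources = Dict[str, int]

DEFAULT_LIMIT: Resources = {"cpu": 500, "memory": 512}    # default
DEFAULT_REQUEST: Resources = {"cpu": 100, "memory": 256}  # defaultRequest
MIN: Resources = {"cpu": 100, "memory": 100}              # min
MAX: Resources = {"cpu": 1000, "memory": 1024}            # max


def apply_limit_range(
    requests: Optional[Resources] = None, limits: Optional[Resources] = None
) -> Tuple[Resources, Resources]:
    """Fill in missing requests/limits, then reject values outside [min, max]."""
    requests = dict(requests or {})
    limits = dict(limits or {})
    for res in ("cpu", "memory"):
        limit_given = res in limits
        if not limit_given:
            limits[res] = DEFAULT_LIMIT[res]
        if res not in requests:
            # An explicit limit without a request makes the request equal the limit.
            requests[res] = limits[res] if limit_given else DEFAULT_REQUEST[res]
        if requests[res] > limits[res]:
            raise ValueError(f"{res}: request {requests[res]} exceeds limit {limits[res]}")
        if requests[res] < MIN[res]:
            raise ValueError(f"{res}: request {requests[res]} is below min {MIN[res]}")
        if limits[res] > MAX[res]:
            raise ValueError(f"{res}: limit {limits[res]} is above max {MAX[res]}")
    return requests, limits


if __name__ == "__main__":
    # Like "kubectl run demo --image nginx": nothing set, defaults applied.
    print(apply_limit_range())
    try:
        apply_limit_range(limits={"memory": 2048})  # 2Gi is above max 1Gi
    except ValueError as err:
        print("rejected:", err)
```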

ResourceQuota

[root@k8s2 limit]# vim range.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
    pods: "2"

[root@k8s2 limit]# kubectl apply -f range.yaml

[root@k8s2 limit]# kubectl describe resourcequotas

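A quota sets an aggregate limit for a whole namespace: the combined resources of all pods in the namespace cannot exceed it.

Once this quota is in place, every pod created in the namespace must declare CPU and memory requests and limits (here the LimitRange fills them in automatically when a pod omits them).

kubectl edit quota mem-cpu-demo edits the resource quota object with the given name.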
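The quota check amounts to adding the new pod to the namespace's current usage and comparing each total against spec.hard. Below is a minimal Python sketch. It assumes CPU is in millicores, memory is in MiB, and each pod is summarised by its containers' totals. quota_violations is a hypothetical helper.

```python
# Minimal model of the mem-cpu-demo ResourceQuota (hypothetical helper).
# Units: CPU in millicores, memory in MiB; each pod is summarised by the
# totals of its containers' requests and limits.
from typing import Dict, List

Pod = Dict[str, int]

HARD: Dict[str, int] = {
    "requests.cpu": 1000,     # "1"
    "requests.memory": 1024,  # 1Gi
    "limits.cpu": 2000,       # "2"
    "limits.memory": 2048,    # 2Gi
    "pods": 2,
}


def quota_violations(existing: List[Pod], new_pod: Pod) -> List[str]:
    """Return the quota keys that would be exceeded if new_pod were created."""
    pods = existing + [new_pod]
    used = {key: sum(pod[key] for pod in pods) for key in HARD if key != "pods"}
    used["pods"] = len(pods)
    return [key for key in HARD if used[key] > HARD[key]]


# A pod that received the LimitRange defaults from range.yaml.
DEFAULT_POD: Pod = {
    "requests.cpu": 100,
    "requests.memory": 256,
    "limits.cpu": 500,
    "limits.memory": 512,
}

if __name__ == "__main__":
    print(quota_violations([], DEFAULT_POD))                          # first pod
    print(quota_violations([DEFAULT_POD, DEFAULT_POD], DEFAULT_POD))  # third pod
```

With the LimitRange defaults, two such pods fit. A third is rejected by pods: "2" before any CPU or memory total is reached.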

metrics-server

Official repository: GitHub - kubernetes-sigs/metrics-server: Scalable and efficient source of container resource metrics for Kubernetes built-in autoscaling pipelines.

Download the deployment manifest

[root@k8s2 metrics]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

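Modify the manifest: point the image at the Harbor registry. If the kubelet serving certificates are not signed by the cluster CA, as is common in lab clusters, metrics-server also usually needs the --kubelet-insecure-tls argument.

Upload the image to Harbor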

[root@k8s2 metrics]# kubectl apply -f components.yaml

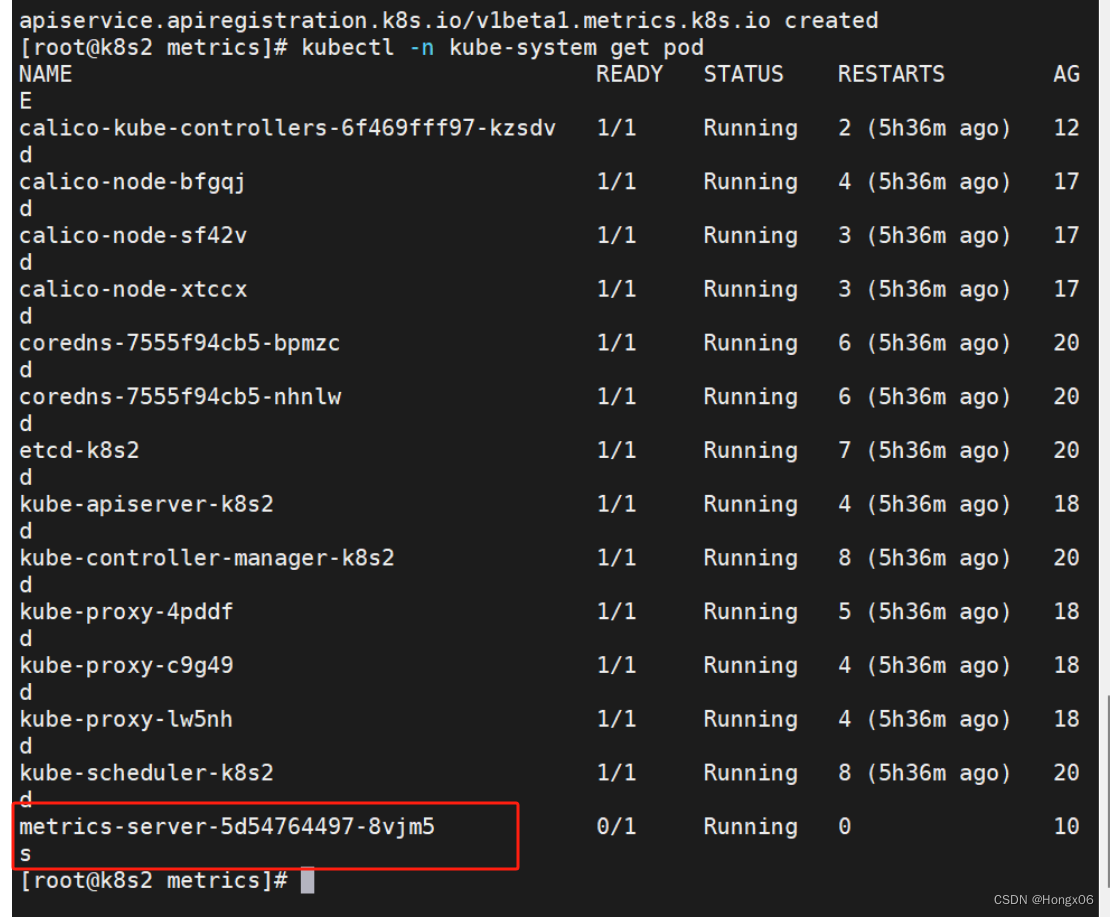

[root@k8s2 metrics]# kubectl -n kube-system get pod

If something goes wrong, check the metrics-server logs:

[root@k8s2 metrics]# kubectl -n kube-system logs metrics-server-5d54764497-8vjm5

[root@k8s2 metrics]# kubectl top node

[root@k8s2 metrics]# kubectl top pod -A --sort-by cpu

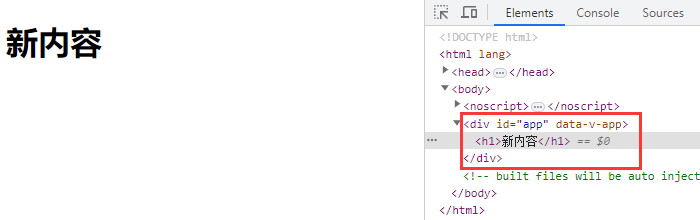

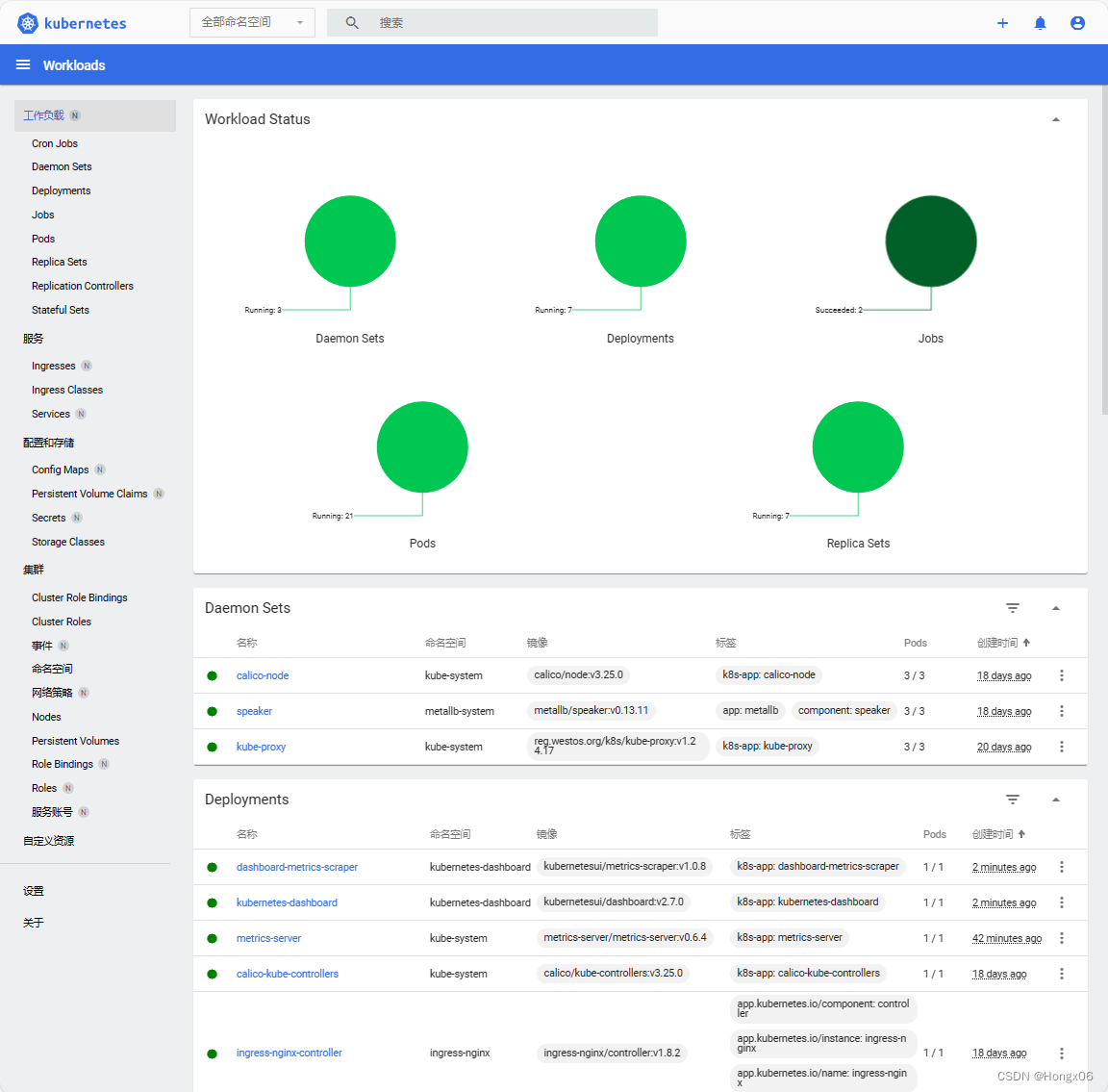

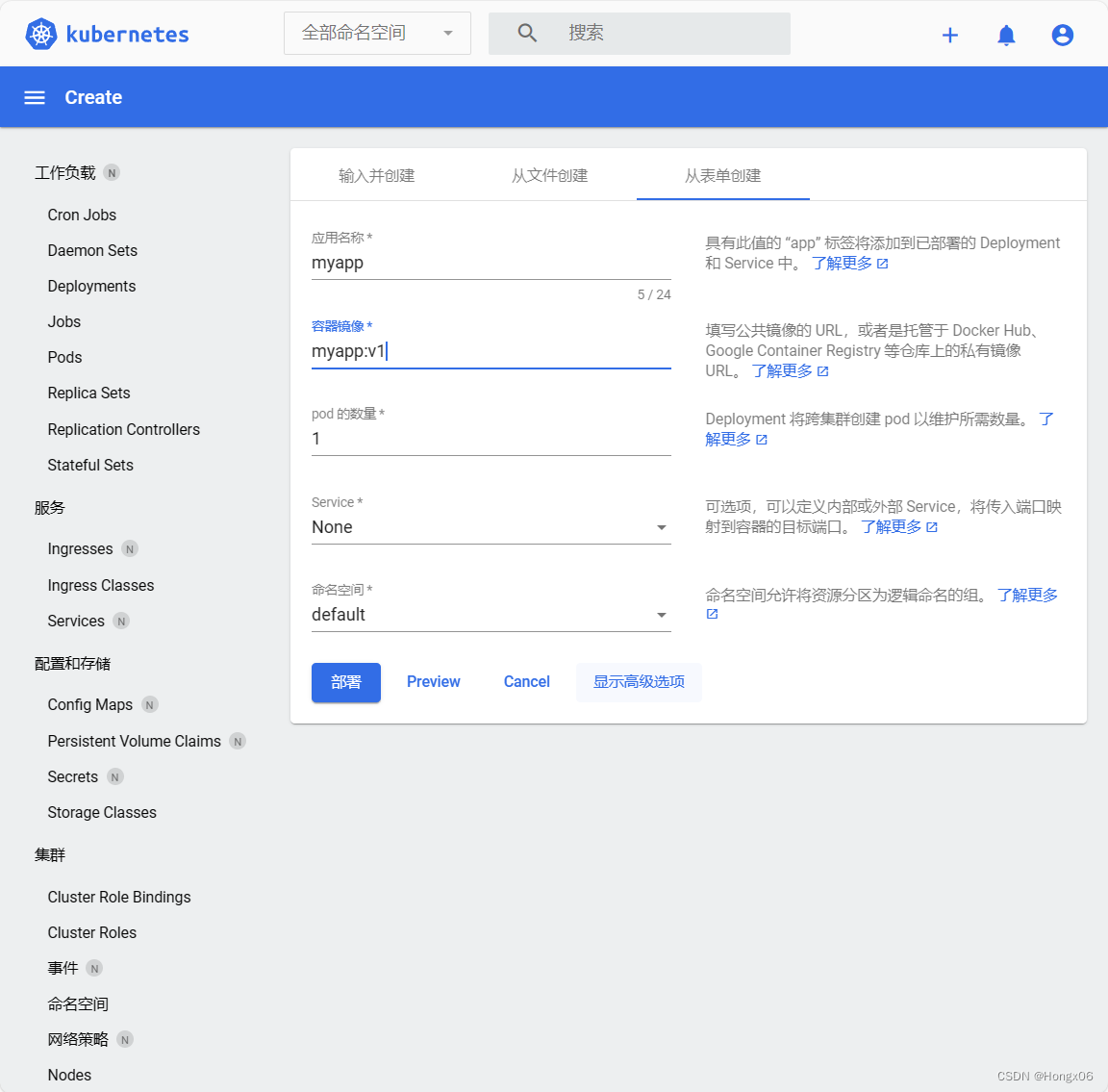
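kubectl top reads from the Metrics API that metrics-server serves, and the raw data can be fetched with kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes. The Python sketch below turns such a NodeMetricsList into millicores and MiB, similar to what kubectl top node prints. The sample JSON and node names are made up, and only the unit suffixes shown are handled.

```python
# Sketch: summarise a Metrics API NodeMetricsList like "kubectl top node".
# Assumptions: only cpu suffixes n/u/m and memory suffixes Ki/Mi/Gi are
# handled; SAMPLE below is made-up data for illustration.
import json
from typing import List, Tuple


def cpu_to_millicores(q: str) -> float:
    if q.endswith("n"):
        return int(q[:-1]) / 1_000_000  # nanocores
    if q.endswith("u"):
        return int(q[:-1]) / 1_000      # microcores
    if q.endswith("m"):
        return float(q[:-1])            # millicores
    return float(q) * 1000              # whole cores


def memory_to_mib(q: str) -> float:
    factors = {"Ki": 1 / 1024, "Mi": 1.0, "Gi": 1024.0}
    for suffix, factor in factors.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q) / 1024 ** 2           # plain bytes


def summarize(raw: str) -> List[Tuple[str, int, int]]:
    """Return (node, cpu millicores, memory MiB), highest CPU first."""
    rows = []
    for item in json.loads(raw)["items"]:
        usage = item["usage"]
        rows.append((
            item["metadata"]["name"],
            round(cpu_to_millicores(usage["cpu"])),
            round(memory_to_mib(usage["memory"])),
        ))
    return sorted(rows, key=lambda row: row[1], reverse=True)


SAMPLE = json.dumps({
    "kind": "NodeMetricsList",
    "items": [
        {"metadata": {"name": "node-a"}, "usage": {"cpu": "150000000n", "memory": "1048576Ki"}},
        {"metadata": {"name": "node-b"}, "usage": {"cpu": "250000000n", "memory": "524288Ki"}},
    ],
})

if __name__ == "__main__":
    for name, cpu, mem in summarize(SAMPLE):
        print(f"{name}\t{cpu}m\t{mem}Mi")
```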

dashboard

Official repository: GitHub - kubernetes/dashboard: General-purpose web UI for Kubernetes clusters

Download the deployment manifest

[root@k8s2 dashboard]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

Upload the required images to Harbor

Change the Service type to LoadBalancer

[root@k8s2 dashboard]# kubectl apply -f recommended.yaml

[root@k8s2 dashboard]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard

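This requires MetalLB (the metallb-system namespace) to be deployed in the cluster; if it is not available, use the NodePort type instead.

Open https://192.168.81.101 in a browser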

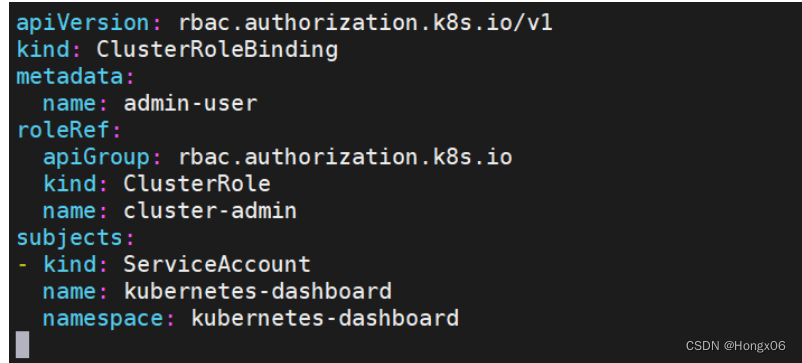

Authorization

[root@k8s2 dashboard]# vim rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

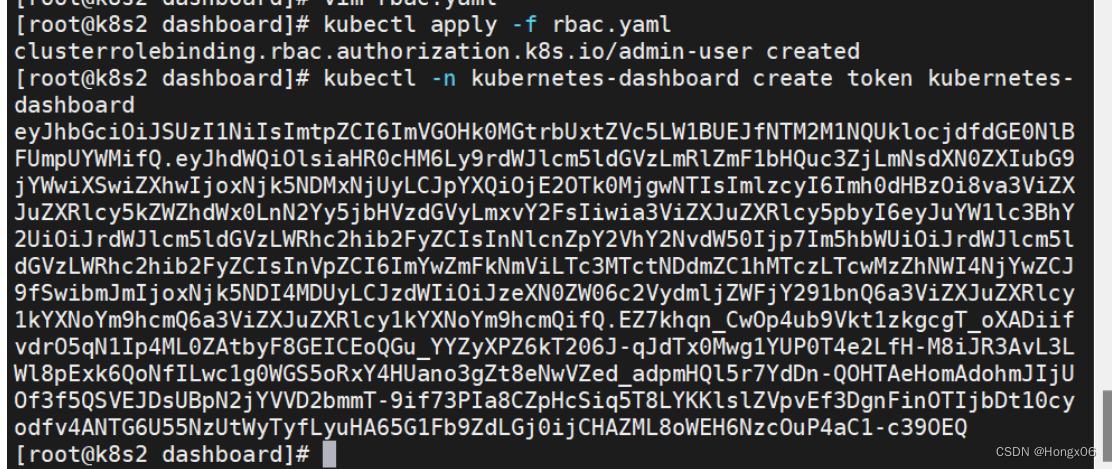

Get a token

[root@k8s2 dashboard]# kubectl apply -f rbac.yaml

[root@k8s2 dashboard]# kubectl -n kubernetes-dashboard create token kubernetes-dashboard

Log in to the web UI with the token

k9s

[root@k8s2 dashboard]# tar zxf k9s.tar

[root@k8s2 dashboard]# ./k9s

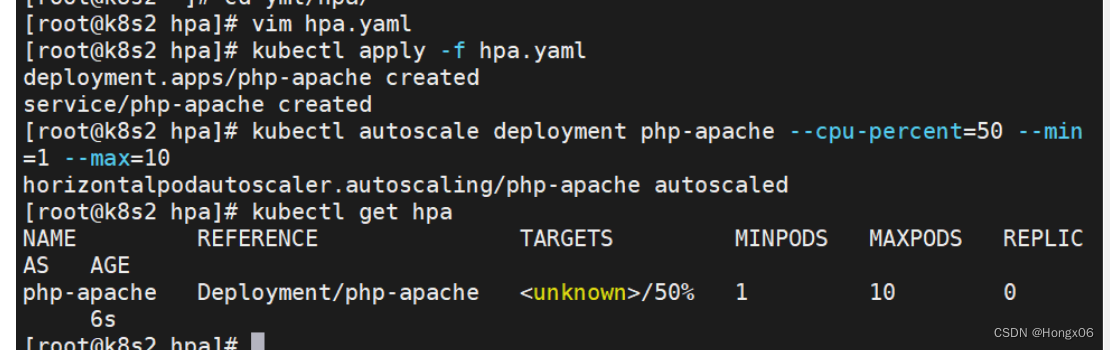

HPA

Official docs: HorizontalPodAutoscaler Walkthrough | Kubernetes

Upload the hpa-example image

[root@k8s2 hpa]# vim hpa.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

[root@k8s2 hpa]# kubectl apply -f hpa.yaml

[root@k8s2 hpa]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

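// The HPA adjusts the Deployment's replica count to keep average CPU utilization (measured against the pods' CPU requests) around 50%. It scales out when utilization rises above the target and always keeps the count between 1 and 10.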

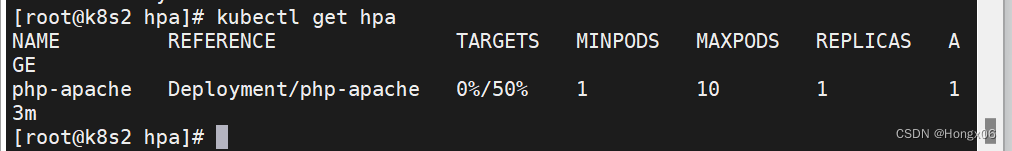

[root@k8s2 hpa]# kubectl get hpa

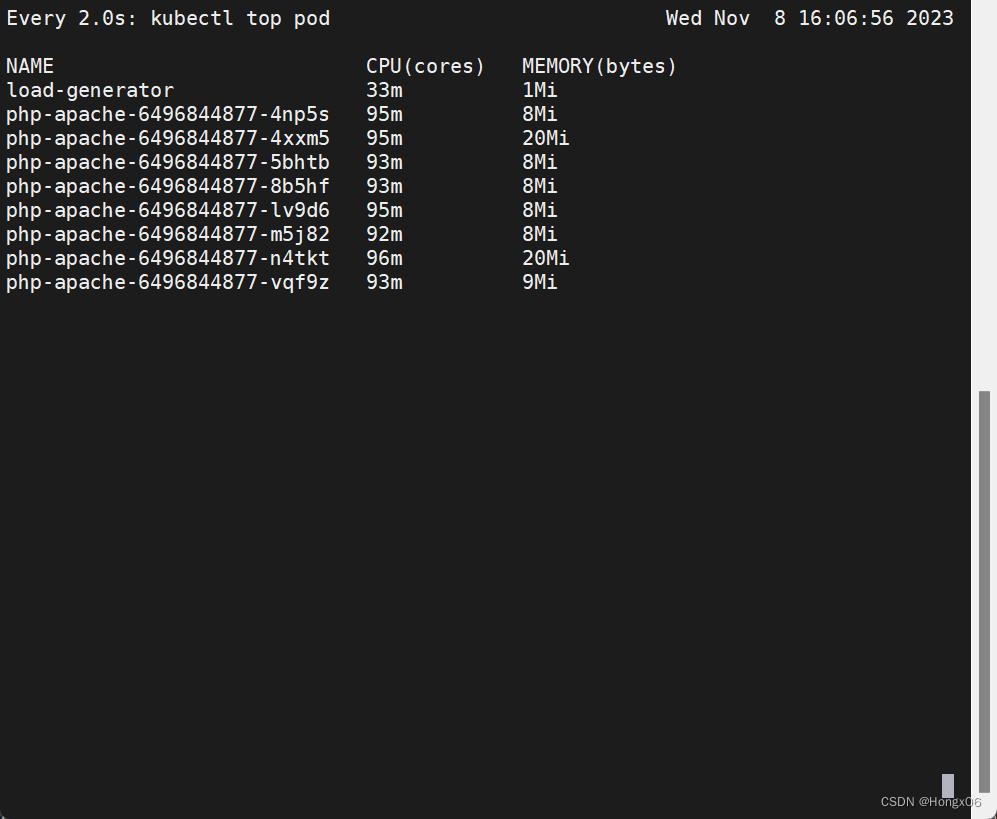
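The HPA documentation gives the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No scaling happens when the ratio is within a tolerance, which is 0.1 by default. Below is a minimal Python sketch using this article's settings (target 50%, min 1, max 10). It ignores pod readiness, missing metrics, and scaling policies. Utilization is relative to the 200m request, so a pod running at its 500m limit reports 250%.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float = 50,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Sketch of the HPA rule: ceil(current * ratio), clamped to [min, max]."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # One pod at its 500m limit = 250% of its 200m request.
    print(desired_replicas(1, 250))
```

For example, one pod at 250% gives ceil(1 × 250 / 50) = 5 replicas.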

Load test

[root@k8s2 hpa]# kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

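The pod's CPU load rises, which triggers the HPA to scale out the Deployment.

After the load test ends, the HPA waits out the default 5-minute scale-down stabilization window and then automatically removes the extra pods.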

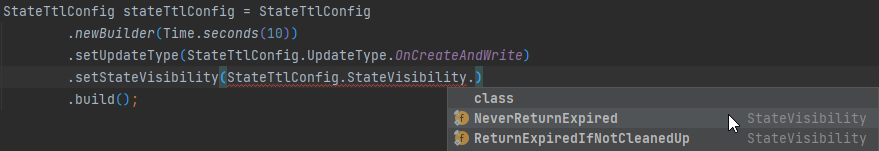
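The 5-minute delay comes from the scale-down stabilization window, which is 300 seconds by default. During that window the controller uses the highest replica recommendation it has seen, so a short dip in load does not remove pods. Below is a minimal sketch that models only the window. ScaleDownStabilizer is a hypothetical name. The current recommendation is part of the window, so scale-up still takes effect immediately.

```python
from collections import deque
from typing import Deque, Tuple


class ScaleDownStabilizer:
    """Hypothetical sketch: use the highest recommendation in the last window."""

    def __init__(self, window_seconds: int = 300) -> None:
        self.window_seconds = window_seconds
        self.history: Deque[Tuple[float, int]] = deque()

    def stabilize(self, now: float, recommendation: int) -> int:
        self.history.append((now, recommendation))
        # Drop recommendations older than the window (the newest one always stays).
        while self.history[0][0] < now - self.window_seconds:
            self.history.popleft()
        return max(rec for _, rec in self.history)


if __name__ == "__main__":
    s = ScaleDownStabilizer()
    for t, rec in [(0, 5), (60, 1), (300, 1), (301, 1)]:
        print(t, s.stabilize(t, rec))
```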

Multiple metrics

[root@k8s2 hpa]# kubectl get hpa php-apache -o yaml > hpa-v2.yaml

Edit the file and add a memory metric to the spec.metrics list:

- resource:
    name: memory
    target:
      averageValue: 50Mi
      type: AverageValue
  type: Resource

[root@k8s2 hpa]# kubectl apply -f hpa-v2.yaml

[root@k8s2 hpa]# kubectl get hpa
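With several metrics, the HPA computes a replica count for each metric and uses the largest. Below is a minimal Python sketch of that rule. It assumes each metric is given as a (current, target) pair in one unit, such as CPU utilization in percent or average memory in Mi. Tolerance and scaling policies are omitted; only the min/max clamp is applied.

```python
import math
from typing import Dict, Tuple


def replicas_for_metric(current_replicas: int, current: float, target: float) -> int:
    """Per-metric proposal: ceil(currentReplicas * current / target)."""
    return math.ceil(current_replicas * current / target)


def desired_replicas(
    current_replicas: int,
    metrics: Dict[str, Tuple[float, float]],
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Take the largest per-metric proposal, clamped to [min, max]."""
    proposals = [
        replicas_for_metric(current_replicas, cur, tgt) for cur, tgt in metrics.values()
    ]
    return max(min_replicas, min(max_replicas, max(proposals)))


if __name__ == "__main__":
    # 2 pods: CPU at 30% (target 50%), memory averaging 80Mi (target 50Mi).
    print(desired_replicas(2, {"cpu": (30, 50), "memory": (80, 50)}))
```

Here CPU alone would keep 2 replicas, but memory proposes ceil(2 × 80 / 50) = 4, so the HPA scales to 4.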