Introduction to keepalived and VRRP

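Cluster technology is a relatively recent approach. At comparatively low cost, a cluster can deliver relatively high gains in performance, reliability, and flexibility. Task scheduling is the core technology of any cluster system.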

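Once a cluster is built, several computers can work together to process a very large number of requests (**load balancing**) with high throughput. They can also back each other up (high availability), so the system keeps running even if any single machine fails.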

How keepalived works

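keepalived is built on VRRP, the Virtual Router Redundancy Protocol, which can be thought of as a protocol for making routers highly available. N routers that provide the same function form a router group with one master and one or more backups. The master holds a VIP (virtual IP) that serves clients; the other hosts on the LAN use this VIP as their default route. The master sends VRRP advertisements by multicast. When the backups stop receiving these VRRP packets, they assume the master is down and elect one of the backups as the new master according to VRRP priority. This keeps the router service highly available.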
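The election rule can be pictured with a small bash sketch. It is a simplified illustration, not keepalived's code: each router is passed as a hypothetical `name:priority:primary_ip` string, the highest priority wins, and on equal priority the router with the higher primary IP address wins, which is the tie-break the VRRP specification (RFC 3768 / RFC 5798) defines.

```bash
#!/usr/bin/env bash
# Simplified VRRP master election (illustration only, not keepalived's implementation).
# Each argument is "name:priority:primary_ipv4"; the winner's name is printed.

# Convert a dotted IPv4 address to an integer so addresses can be compared.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

elect_master() {
  local best_name="" best_prio=-1 best_ip=-1
  local entry name prio ip ip_num
  for entry in "$@"; do
    IFS=: read -r name prio ip <<< "$entry"
    ip_num=$(ip_to_int "$ip")
    # Higher priority wins; on equal priority the higher primary IP wins.
    if (( prio > best_prio || (prio == best_prio && ip_num > best_ip) )); then
      best_name=$name best_prio=$prio best_ip=$ip_num
    fi
  done
  echo "$best_name"
}
```

For example, `elect_master proxy-master:100:172.16.147.155 proxy-slave:50:172.16.147.156` prints `proxy-master`, matching the priorities used later in this article.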

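keepalived consists of three main modules: core, check, and vrrp. The core module is the heart of keepalived: it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks and supports the common check methods. The vrrp module implements the VRRP protocol.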

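Split brain:

When a keepalived BACKUP host stops receiving advertisements from the MASTER, it switches itself to MASTER. If the link between the two nodes fails so that neither receives the other's multicast advertisements, while both nodes are actually still working, both become MASTER and bind the virtual IP at the same time, with unpredictable consequences. This is split brain.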
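The definition comes down to how many nodes hold the VIP at the same moment. Below is a tiny sketch that classifies a two-node cluster from two yes/no answers, which an operator might collect by running `ip addr` on each node; `vip_state` is a hypothetical helper, not part of keepalived.

```bash
#!/usr/bin/env bash
# Classify a two-node keepalived cluster by which nodes currently hold the VIP.
# Arguments: "yes" or "no" for node A, then for node B.
vip_state() {
  case "$1/$2" in
    yes/yes)       echo "split-brain: both nodes hold the VIP" ;;
    yes/no|no/yes) echo "ok: exactly one node holds the VIP" ;;
    no/no)         echo "outage: no node holds the VIP" ;;
    *)             echo "usage: vip_state yes|no yes|no" >&2; return 2 ;;
  esac
}
```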

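Solutions:

1. Add more detection paths, such as a redundant heartbeat link (two NICs used for health monitoring) or pinging the peer, to reduce the chance of split brain. (This treats the symptom rather than the cause; it only raises the probability that the peer is detected.)

2. Set up an arbitration mechanism: when neither side can be trusted, rely on a third party, for example a shared-disk lock or pinging the gateway. (Each method needs its own analysis.)

3. Fence the node ("shoot it in the head"): stop the master, then check the firewall and the network connectivity between the machines.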
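Option 2 (arbitration by pinging the gateway) can be sketched as a keepalived check script. The gateway address 172.16.147.2 and the function names are hypothetical placeholders, since the article does not give the gateway. The idea: a node that cannot reach the shared gateway should not claim the VIP, so the script exits non-zero and keepalived, once it tracks the script through `vrrp_script`/`track_script` as in the Nginx example later in this article, can take the instance out of MASTER.

```bash
#!/usr/bin/env bash
# Hypothetical arbitration check: succeed only if the shared gateway answers.
# GATEWAY is a placeholder; set it to your real default gateway.
GATEWAY="${GATEWAY:-172.16.147.2}"

# One probe: 2 ICMP echo requests, 1-second reply timeout each (iputils ping).
probe_gateway() {
  ping -c 2 -W 1 "$1" &>/dev/null
}

# Exit status 0 = this node may hold the VIP, 1 = it should give it up.
gateway_arbiter() {
  if probe_gateway "$GATEWAY"; then
    return 0
  fi
  echo "gateway $GATEWAY unreachable; refusing to act as MASTER" >&2
  return 1
}

# The script's exit status is the status of this last call.
gateway_arbiter
```

An isolated node fails this check and steps down, while the node that still reaches the gateway keeps the VIP; this only helps if the gateway is reachable independently of the heartbeat link.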

Layer-7 load balancing with Nginx + keepalived

Nginx implements load balancing through its upstream module.

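Load-balancing algorithms supported by upstream in open-source nginx include round robin (the default, optionally adjusted with `weight`), `least_conn`, `ip_hash`, and `hash <key>`.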

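Host list:

| Hostname | IP | OS | Role |
|---|---|---|---|
| Proxy-master | 172.16.147.155 | CentOS 7.5 | Primary load balancer |
| Proxy-slave | 172.16.147.156 | CentOS 7.5 | Backup load balancer |
| Real-server1 | 172.16.147.153 | CentOS 7.5 | web1 |
| Real-server2 | 172.16.147.154 | CentOS 7.5 | web2 |
| VIP for proxy | 172.16.147.100 | | |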

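Install and configure nginx on all machines, and disable the firewall and SELinux:

```
[root@proxy-master ~]# systemctl stop firewalld      # stop the firewall
[root@proxy-master ~]# systemctl disable firewalld   # keep it off after a reboot
[root@proxy-master ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config   # disable SELinux, effective after reboot (edit the real file: /etc/sysconfig/selinux is a symlink that sed -i would replace)
[root@proxy-master ~]# setenforce 0                   # switch SELinux to permissive for the current boot
```

Install nginx on all 4 machines: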

```
[root@proxy-master ~]# cd /etc/yum.repos.d/
[root@proxy-master yum.repos.d]# vim nginx.repo
[nginx-stable]
name=nginx stable repo
baseurl=http://nginx.org/packages/centos/$releasever/$basearch/
gpgcheck=0
enabled=1
[root@proxy-master yum.repos.d]# yum install yum-utils -y
[root@proxy-master yum.repos.d]# yum install nginx -y
```

I. Implementation steps

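1. Choose two nginx servers as proxy servers.

2. Install keepalived on both proxy servers to make them highly available and provide the VIP.

3. Configure nginx load balancing.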

The configuration is identical on both proxies:

```
[root@proxy-master ~]# vim /etc/nginx/nginx.conf
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    # The nginx.org package also ships conf.d/default.conf (listen 80, server_name
    # localhost). It is included before the server block below and would take
    # precedence over it, so rename or remove it on both proxies.
    include /etc/nginx/conf.d/*.conf;

    # Back-end web servers with equal weights (round robin). After 3 failed
    # attempts within 20s a server is considered unavailable for 20s.
    upstream backend {
        server 172.16.147.154:80 weight=1 max_fails=3 fail_timeout=20s;
        server 172.16.147.153:80 weight=1 max_fails=3 fail_timeout=20s;
    }

    server {
        listen 80;
        server_name localhost;
        location / {
            proxy_pass http://backend;
            proxy_set_header Host $host:$proxy_port;
            proxy_set_header X-Forwarded-For $remote_addr;
        }
    }
}
```

Director HA with keepalived

Note: the primary and backup directors must each be able to schedule requests correctly on their own.

1. Install the software on the primary and backup directors

```
[root@proxy-master ~]# yum install -y keepalived
[root@proxy-slave ~]# yum install -y keepalived
[root@proxy-master ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
[root@proxy-master ~]# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id directory1       # on the backup, change to directory2
}
vrrp_instance VI_1 {
    state MASTER               # MASTER or BACKUP
    interface ens33            # interface the VIP is bound to
    virtual_router_id 80       # must be identical on all directors in the cluster
    priority 100               # on the backup, change to 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.16.147.100/24      # VIP
    }
}
```

```
[root@proxy-slave ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
[root@proxy-slave ~]# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id directory2
}
vrrp_instance VI_1 {
    state BACKUP               # this node is the backup
    interface ens33
    nopreempt                  # honored only with state BACKUP; on this lower-priority node
                               # it does not stop the master from reclaiming the VIP
    virtual_router_id 80
    priority 50                # lower than the master's 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.16.147.100/24
    }
}
```

Start keepalived (on both master and backup)

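```
[root@proxy-master ~]# systemctl start keepalived
[root@proxy-master ~]# systemctl enable keepalived
[root@proxy-slave ~]# systemctl start keepalived
[root@proxy-slave ~]# systemctl enable keepalived
```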

```
[root@proxy-master ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet 172.16.147.100/32 scope global lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:ec:8a:fe brd ff:ff:ff:ff:ff:ff
    inet 172.16.147.155/24 brd 172.16.147.255 scope global noprefixroute dynamic ens33
       valid_lft 1115sec preferred_lft 1115sec
    inet 172.16.147.101/24 scope global secondary ens33
       valid_lft forever preferred_lft forever
```

Note: with the configuration above, the VIP 172.16.147.100/24 is expected as a `secondary` address on ens33. The sample output shows 172.16.147.100/32 on `lo` and 172.16.147.101/24 on ens33, so it does not match this configuration exactly.

keepalived alone handles a failed node or heartbeat,

but it cannot handle a failure of the Nginx service itself.
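Instead of reading the full `ip a` output by eye, a script can look for the VIP in `ip -o -4 addr show` output. A minimal sketch, assuming the interface `ens33` and the VIP 172.16.147.100 from the host list; `has_vip` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Report whether this host currently owns the VIP on the given interface.
VIP="${VIP:-172.16.147.100}"
IFACE="${IFACE:-ens33}"

# Reads `ip -o -4 addr show` lines on stdin; succeeds if the VIP is among them.
has_vip() {
  local vip=$1 addr
  while read -r _ _ _ addr _; do
    # The 4th field looks like "172.16.147.100/24"; drop the prefix length.
    [[ "${addr%/*}" == "$vip" ]] && return 0
  done
  return 1
}

if ip -o -4 addr show dev "$IFACE" 2>/dev/null | has_vip "$VIP"; then
  echo "$HOSTNAME holds VIP $VIP on $IFACE"
else
  echo "$HOSTNAME does not hold VIP $VIP on $IFACE"
fi
```

Running it on both directors should report the VIP on exactly one of them; seeing it on both at once is the split-brain case described earlier.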

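Extension: health checks for Nginx on the directors (optional; configure on both)

Approach:

Have keepalived run an external script at a fixed interval. When Nginx fails, the script stops keepalived on the local machine, so the VIP moves to the other director.

(1) Script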

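```
[root@proxy-master ~]# vim /etc/keepalived/check_nginx_status.sh
#!/bin/bash
# Probe the local nginx; curl fails if nothing is listening on port 80.
/usr/bin/curl -I http://localhost &>/dev/null
if [ $? -ne 0 ];then
    # /etc/init.d/keepalived stop   (SysV-init equivalent)
    systemctl stop keepalived
fi
```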

```
[root@proxy-master ~]# chmod a+x /etc/keepalived/check_nginx_status.sh
```

(2) Use the script in keepalived

```
! Configuration File for keepalived
global_defs {
    router_id director1
}
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx_status.sh"
    interval 5                 # run the check every 5 seconds
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 80
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.16.147.100/24
    }
    track_script {
        check_nginx
    }
}
```

Note: start nginx first, then keepalived. Otherwise the first check fails and the script immediately stops keepalived again.
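The script above stops keepalived on the first failed request, and keepalived then has to be restarted by hand. A commonly used alternative, sketched here under assumptions, is a script that only reports status (exit 0 = healthy) and leaves the reaction to keepalived: as documented for the keepalived versions we know of, a tracked `vrrp_script` without a `weight` puts the VRRP instance into FAULT state while it fails, which releases the VIP, and the instance recovers on its own once the script succeeds again. The retry count, delay, and URL below are hypothetical tuning values, chosen so that the worst-case runtime (about 3 s) stays below `interval 5`.

```bash
#!/usr/bin/env bash
# Hypothetical status-only variant of check_nginx_status.sh:
# exits 0 if nginx answers, non-zero otherwise; it never stops keepalived itself.
NGINX_URL="${NGINX_URL:-http://127.0.0.1/}"
RETRIES="${RETRIES:-2}"          # attempts before declaring failure
RETRY_DELAY="${RETRY_DELAY:-1}"  # seconds between attempts

# Single probe: any HTTP response (even 4xx/5xx) proves nginx is listening.
probe_nginx() {
  curl -s -o /dev/null --max-time 1 "$NGINX_URL"
}

check_nginx() {
  local i
  for (( i = 1; i <= RETRIES; i++ )); do
    probe_nginx && return 0
    (( i < RETRIES )) && sleep "$RETRY_DELAY"
  done
  return 1
}

# The script's exit status is the status of this last call.
check_nginx
```

It would be referenced exactly like `check_nginx_status.sh` in the `vrrp_script` block above; the retry simply keeps a single slow request from triggering a failover.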

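Clients connect to the VIP. The VIP leads to whichever load balancer currently holds it, and that load balancer forwards the requests to the back-end nginx web servers. keepalived only provides the VIP, with the servers split into primary and backup: as long as keepalived runs on the primary, the VIP stays on the primary. Only when keepalived on the primary stops does the VIP float to the backup, and clients are then served by the backup load balancer.

Without the health-check script, stopping nginx on the primary load balancer does not affect the VIP: it stays on the primary, but clients now get "connection refused". Traffic for the VIP still goes to the primary host, where nothing listens on port 80 any more.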

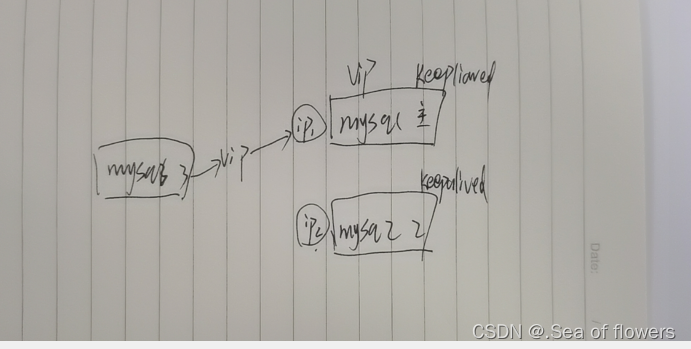
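To watch the behavior described above from a client, a small loop can request the VIP once per second and print either the HTTP status or the curl error. This is a sketch that assumes the VIP 172.16.147.100 and that curl is installed on the client; `probe_vip` and `watch_vip` are hypothetical names. While the VIP sits on a director whose nginx is stopped, the loop prints `curl exit 7` (curl's "failed to connect" code), which is the "connection refused" case.

```bash
#!/usr/bin/env bash
# Poll the VIP and print one line per attempt: HTTP status or curl failure.
VIP_URL="${VIP_URL:-http://172.16.147.100/}"

# Prints the HTTP status code; returns curl's exit code (7 = could not connect).
probe_vip() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 2 "$VIP_URL"
}

# watch_vip COUNT: probe COUNT times, one second apart.
watch_vip() {
  local count=${1:-10} i code rc
  for (( i = 1; i <= count; i++ )); do
    code=$(probe_vip)
    rc=$?
    if (( rc == 0 )); then
      echo "$(date +%T) HTTP $code"
    else
      echo "$(date +%T) curl exit $rc"
    fi
    (( i < count )) && sleep 1
  done
}
```

Source the file and run, for example, `watch_vip 30` on a client while stopping nginx or keepalived on the primary director.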

MySQL+Keepalived

Project environment:

IP1: 192.168.231.185 (keepalived: master)

IP2: 192.168.231.187 (keepalived: slave)

VIP: 192.168.231.66

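Perform the same steps on both machines:

Install MySQL (this assumes the MySQL community yum repository is configured; CentOS 7's base repositories ship MariaDB instead):

```
# yum -y install mysql-server mysql
```

Start mysqld:

```
# systemctl start mysqld
```

Look up the temporary root password, then change it:

```
# grep password /var/log/mysqld.log
# mysqladmin -uroot -p'<old password>' password '<new password>'
```

Log in to MySQL:

```
# mysql -uroot -p'<new password>'
```

Create a user that can log in to these two MySQL servers remotely (MySQL 5.7 syntax; MySQL 8.0 no longer accepts `IDENTIFIED BY` in `GRANT`, so there the user must be created with `CREATE USER` first):

```
mysql> grant all on *.* to 'root'@'%' identified by 'Qianfeng@123!';
```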

To make the result visible, create a database db1 on IP1's MySQL and no database on IP2.

On IP1, create the new database:

```
mysql> create database db1;
mysql> show databases;
+--------------------+
| Database |
+--------------------+
| information_schema |
| db1 |
| mysql |
| performance_schema |
| sys |
+--------------------+
5 rows in set (0.00 sec)
```

Install keepalived

Install keepalived on both machines:

```
[root@mysql-keepalived-master ~]# yum -y install keepalived
[root@mysql-keepalived-slave ~]# yum -y install keepalived
```

Edit the configuration files

Configuration file on 192.168.231.185:

```
# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id master
}
vrrp_instance VI_1 {
    state MASTER               # MASTER or BACKUP
    interface ens33            # interface the VIP is bound to
    virtual_router_id 89       # must be identical on all nodes in the cluster
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.231.66/24      # the virtual IP (VIP)
    }
}
```

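Configuration file on 192.168.231.187:

```
! Configuration File for keepalived
global_defs {
    router_id backup
}
vrrp_instance VI_1 {
    state BACKUP               # this node is the backup
    nopreempt                  # honored only with state BACKUP; on this lower-priority node
                               # it does not stop the master from reclaiming the VIP
    interface ens33
    virtual_router_id 89
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.231.66/24      # the VIP
    }
}
```

Start keepalived on both master and backup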

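```
[root@mysql-keepalived-master ~]# systemctl start keepalived
[root@mysql-keepalived-master ~]# systemctl enable keepalived
[root@mysql-keepalived-slave ~]# systemctl start keepalived
[root@mysql-keepalived-slave ~]# systemctl enable keepalived
```

Check the IP addresses: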

```
[root@localhost ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:b5:2b:5c brd ff:ff:ff:ff:ff:ff
    inet 192.168.231.185/24 brd 192.168.231.255 scope global noprefixroute dynamic ens33
       valid_lft 1348sec preferred_lft 1348sec
    inet 192.168.231.66/24 scope global secondary ens33
       valid_lft forever preferred_lft forever
```

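Remote login to MySQL

From a third server that has the MySQL client installed, log in to MySQL remotely:

```
mysql -uroot -p'Qianfeng@123!' -h192.168.231.66 -P3306
```

`-p` is the password of the user created earlier on both servers.

`-h` is the VIP.

`-P` (uppercase) is the MySQL port, 3306.

When keepalived and MySQL are both running on IP1, the VIP is on IP1 and the query reaches MySQL1: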

```
mysql> show databases;
+--------------------+
| Database |
+--------------------+
| information_schema |
| db1 |
| mysql |
| performance_schema |
| sys |
+--------------------+
5 rows in set (0.00 sec)
```

When keepalived on IP1 is stopped while MySQL on IP1 keeps running, the VIP moves to IP2 and the query reaches MySQL2. The existing connection is dropped first, and the client reconnects:

```
mysql> show databases;
ERROR 2013 (HY000): Lost connection to MySQL server during query
mysql> show databases;
ERROR 2006 (HY000): MySQL server has gone away
No connection. Trying to reconnect...
Connection id: 18
Current database: *** NONE ***

+--------------------+
| Database |
+--------------------+
| information_schema |
| mysql |
| performance_schema |
| sys |
+--------------------+
4 rows in set (0.01 sec)
```

(The session has switched from IP1's database to IP2's database.)

Next, with keepalived on IP1 stopped and MySQL on IP1 running (VIP on IP2), start keepalived on IP1 again. The VIP moves back to IP1 and the query reaches MySQL1:

```
mysql> show databases;
ERROR 2006 (HY000): MySQL server has gone away
No connection. Trying to reconnect...
Connection id: 4
Current database: *** NONE ***

+--------------------+
| Database |
+--------------------+
| information_schema |
| db1 |
| mysql |
| performance_schema |
| sys |
+--------------------+
5 rows in set (0.01 sec)
```

With keepalived and MySQL both running on IP1, stop MySQL on IP1. The VIP stays on IP1, so the query fails because no MySQL server can be reached:

```
mysql> show databases;
ERROR 2013 (HY000): Lost connection to MySQL server during query
mysql> show databases;
ERROR 2006 (HY000): MySQL server has gone away
No connection. Trying to reconnect...
ERROR 2003 (HY000): Can't connect to MySQL server on '192.168.231.66' (111)
ERROR:
Can't connect to the server
```

Now stop keepalived on IP1 as well. The VIP floats to IP2, and the query reaches MySQL2:

```
mysql> show databases;
No connection. Trying to reconnect...
Connection id: 19
Current database: *** NONE ***

+--------------------+
| Database |
+--------------------+
| information_schema |
| mysql |
| performance_schema |
| sys |
+--------------------+
4 rows in set (0.00 sec)
```

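In other words:

Clients connect to the VIP, and the VIP leads to the back-end MySQL server that currently holds it. keepalived only provides the VIP, with the servers split into primary and backup: as long as keepalived runs on the primary, the VIP stays on the primary. Only when keepalived on the primary stops does the VIP float to the backup, and clients are then served by the backup server.

Stopping MySQL on the primary, however, does not affect the VIP: it stays on the primary, but clients get "connection refused", because traffic for the VIP still goes to the primary host, where MySQL is no longer listening.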
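During experiments like these it helps to see which physical server answers through the VIP. A minimal sketch, assuming the `root`/`Qianfeng@123!` account created earlier and the VIP 192.168.231.66; `who_serves` and `watch_mysql_vip` are hypothetical names. `SELECT @@hostname` returns the host name of the MySQL server that executed the query, so the output changes when the VIP moves.

```bash
#!/usr/bin/env bash
# Print which MySQL server answers on the VIP, once per second.
VIP="${VIP:-192.168.231.66}"
MYSQL_USER="${MYSQL_USER:-root}"
MYSQL_PASS="${MYSQL_PASS:-Qianfeng@123!}"

# Prints the serving host's name, or fails if nothing answers on the VIP.
who_serves() {
  mysql -u"$MYSQL_USER" -p"$MYSQL_PASS" -h "$VIP" -P 3306 \
    --connect-timeout=2 -N -s -e 'SELECT @@hostname' 2>/dev/null
}

# watch_mysql_vip COUNT: probe COUNT times and report each answer.
watch_mysql_vip() {
  local count=${1:-10} i host
  for (( i = 1; i <= count; i++ )); do
    if host=$(who_serves); then
      echo "$(date +%T) served by $host"
    else
      echo "$(date +%T) no MySQL answer on $VIP"
    fi
    (( i < count )) && sleep 1
  done
}
```

Source the file on the third (client) server and run, for example, `watch_mysql_vip 60` while stopping and starting MySQL or keepalived on IP1.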

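In production we cannot watch the state of MySQL and keepalived all the time, so a script is needed, and scheduling how often to run it by hand would take considerable effort. Instead, the script can be referenced in the keepalived configuration file so that keepalived runs it periodically. Whenever it detects that MySQL on the server is down, it stops keepalived on that server and lets the other server take over, which preserves high availability.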

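Script:

```
# vim /etc/keepalived/keepalived_check_mysql.sh
#!/bin/bash
# Run a trivial query as the local root user (use your local root password);
# it fails if mysqld is down.
/usr/bin/mysql -uroot -p'QianFeng@2019!' -e "show status" &>/dev/null
if [ $? -ne 0 ] ;then
    # service keepalived stop   (SysV-init equivalent)
    systemctl stop keepalived
fi
```

Make it executable: `chmod a+x /etc/keepalived/keepalived_check_mysql.sh`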
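The check above needs the root password and treats any query error, including a wrong password, as "MySQL is down". A possible alternative, assuming the MySQL client tools are installed on the node, is `mysqladmin ping`: according to the MySQL manual its exit status is 0 whenever the server is running, even if the login is rejected with "Access denied", so no password has to be stored in the script. This sketch only reports status and does not stop keepalived; with a tracked `vrrp_script` that has no `weight`, keepalived (in the versions we know of) puts the instance into FAULT state while the script fails, which releases the VIP. `check_mysql` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical status-only alternative to keepalived_check_mysql.sh.
# It does not stop keepalived; it only sets the exit status that keepalived's
# track_script evaluates (0 = healthy, non-zero = failed).

# mysqladmin ping exits 0 whenever mysqld is running, even if the login is
# rejected ("Access denied"), so no password has to be stored here.
probe_mysql() {
  mysqladmin --connect-timeout=2 ping &>/dev/null
}

check_mysql() {
  if probe_mysql; then
    return 0
  fi
  # Leave a trace in the system log so the failover can be explained later.
  logger -t keepalived_check_mysql "mysqld not answering on $HOSTNAME"
  return 1
}

# The script's exit status is the status of this last call.
check_mysql
```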

Reference it in the keepalived configuration file:

```
! Configuration File for keepalived
global_defs {
    router_id master
}
vrrp_script check_run {        # define the script
    script "/etc/keepalived/keepalived_check_mysql.sh"
    interval 5
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 89
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.231.66/24
    }
    track_script {             # reference the script
        check_run
    }
}
```

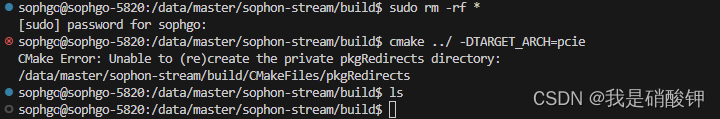

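Problems encountered during the experiment:

You choose the virtual IP yourself, so make sure it is not already in use by another server.

The script path given to `script` can be written without quotes or in double quotes.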
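The first point can be checked before keepalived is started on either node, because once keepalived runs the VIP answers by design. A minimal sketch using ping; the address is the MySQL VIP from this article and `vip_is_free` is a hypothetical name. A host that drops ICMP would not be detected this way; on the same subnet, `arping -D` (duplicate address detection in iputils) is a stricter check.

```bash
#!/usr/bin/env bash
# Pre-flight check: is the candidate VIP unused on the network?
CANDIDATE_VIP="${CANDIDATE_VIP:-192.168.231.66}"

# Succeeds if the address answers ICMP echo (i.e. it is already taken).
address_answers() {
  ping -c 2 -W 1 "$1" &>/dev/null
}

# Prints a verdict; returns 0 only if nothing answered on the address.
vip_is_free() {
  if address_answers "$1"; then
    echo "$1 is already in use; choose another VIP" >&2
    return 1
  fi
  echo "$1 did not answer ping; it looks free"
  return 0
}
```

Usage: source the file and run `vip_is_free "$CANDIDATE_VIP"` before the first `systemctl start keepalived`.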