文章目录

1 pytorch的安装 2 PyTorch基础知识 2.1 张量简介 2.2 初始化 2.3 张量的属性 2.4 ndarray与tensor互转 2.5 索引、切片、变形、聚合、矩阵拼接、切割、转置 3 pytorch自动微分 4 线性回归 5 分类

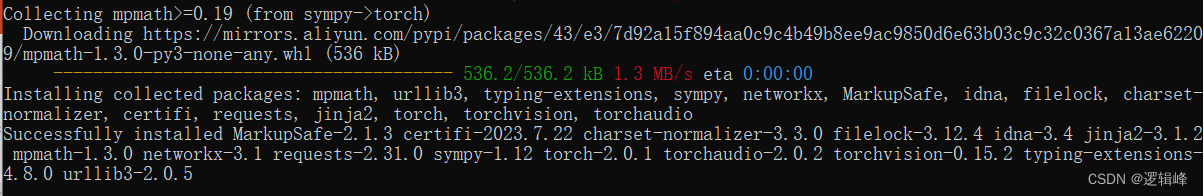

pytorch官网 个人学习因此,选择CPU安装。 使用国内阿里云镜像安装 库名 作用 torchvision 图像视频处理 torchaudio 音频处理 torchtext 自然语言处理

pip install -i https://mirrors.aliyun.com/pypi/simple/ torch torchvision torchaudio torchtext

出现以下界面说明安装成功 验证是否安装成功 分类:0维张量(标量)、1维张量(向量)、2维张量(矩阵)、3维张量(时间序列)、4维张量(图像)、5维张量(视频) 概念:一个数据容器,可以包含数据、字符串等 常见的构造Tensor的函数 函数 功能 Tensor(*sizes) 基础构造函数 tensor(data) 类似于np.array ones(*sizes) 全1 zeros(*sizes) 全0 eye(*sizes) 对角为1,其余为0 arange(s,e,step) 从s到e,步长为step linspace(s,e,steps) 从s到e,均匀分成step份 rand/randn(*sizes) rand是[0,1)均匀分布;randn是服从N(0,1)的正态分布 normal(mean,std) 正态分布(均值为mean,标准差是std) randperm(m) 随机排列

函数 作用 torch.abs(A) 绝对值 torch.add(A,B) 相加,A和B既可以是Tensor也可以是标量 torch.clamp(A,max,min) 裁剪,A中的数据若小于min或大于max,则变成min或max,即保证范围在[min,max] torch.div(A,B) 相除,A%B,A和B既可以是Tensor也可以是标量 torch.mul(A,B) 点乘,A*B,A和B既可以是Tensor也可以是标量 torch.pow(A,n) 求幂,A的n次方 torch.mm(A,B.T) 矩阵叉乘,注意与torch.mul之间的区别 torch.mv(A,B) 矩阵与向量相乘,A是矩阵,B是向量,这里的B需不需要转置都是可以的 A.item() 将Tensor转化为基本数据类型,注意Tensor中只有一个元素的时候才可以使用,一般用于在Tensor中取出数值 A.numpy() 将Tensor转化为Numpy类型 A.size() 查看尺寸 A.shape 查看尺寸 A.dtype 查看数据类型 A.view() 重构张量尺寸,类似于Numpy中的reshape A.transpose(0,1) 行列交换 A[1:]A[-1,-1]=100 切面,类似Numpy中的切面 A.zero_() 归零化 torch.stack((A,B),sim=-1) 拼接,升维 torch.diag(A) 取A对角线元素形成一个一维向量 torch.diag_embed(A) 将一维向量放到对角线中,其余数值为0的Tensor

import torch

data = [ [ 1 , 2 ] , [ 3 , 4 ] ]

x_data = torch. tensor( data)

print ( f"Tensor from Data:\n { x_data} \n" )

import numpy as np

data = [ [ 1 , 2 ] , [ 3 , 4 ] ]

np_array = np. array( data)

x_np = torch. from_numpy( np_array)

print ( f"Tensor from Numpy:\n { x_np} \n" )

import torch

data = [ [ 1 , 2 ] , [ 3 , 4 ] ]

x_data = torch. tensor( data)

x_ones = torch. ones_like( x_data)

print ( f"Ones Tensor: \n { x_ones} \n" )

x_rand = torch. rand_like( x_data, dtype= torch. float )

import torch

shape = ( 2 , 3 , )

rand_tensor = torch. rand( shape)

ones_tensor = torch. ones( shape)

zeros_tensor = torch. zeros( shape)

print ( f"Random Tensor: \n { rand_tensor} \n" )

print ( f"Ones Tensor: \n { ones_tensor} \n" )

print ( f"Zeros Tensor: \n { zeros_tensor} " )

import torch

tensor = torch. rand( 3 , 4 )

print ( f"Shape of tensor: { tensor. shape} " )

print ( f"Datatype of tensor: { tensor. dtype} " )

print ( f"Device tensor is stored on: { tensor. device} " )

import numpy as np

import torch

print ( np. __version__)

print ( torch. __version__)

print ( "tensor转ndarray" )

a = torch. ones( 5 )

print ( type ( a) )

b = a. numpy( )

print ( type ( b) )

print ( "ndarray转tensor" )

a1 = np. ones( 5 )

print ( type ( a1) )

b2 = torch. from_numpy( a1)

print ( type ( b2) )

import torch

import numpy as np

t = torch. randint( 0 , 10 , ( 4 , 5 ) )

print ( t)

print ( t. shape)

print ( t[ 0 , 0 ] )

print ( t[ 0 ] )

print ( t[ 1 : 3 ] )

print ( t[ : , 1 : 3 ] )

print ( t[ 1 : 3 , 1 : 3 ] )

print ( t. reshape( 4 , 5 , 1 ) )

print ( t[ : , : , None ] )

print ( t[ . . . , None ] )

print ( t[ : , None , : ] )

print ( t. reshape( 4 , 5 , 1 ) . squeeze( ) )

print ( t[ : , None , : ] . squeeze( ) )

print ( t. unsqueeze( dim= 0 ) . shape)

tensor = torch. tensor( [ [ 1 ] , [ 2 ] , [ 3 ] ] )

print ( tensor. expand( 3 , 4 ) )

n = np. random. random( ( 32 , 224 , 224 , 3 ) )

print ( n[ 0 , : , : , 0 ] . shape)

t = torch. tensor( n)

print ( t. shape)

print ( t[ 0 , : , : , 0 ] . shape)

t = torch. randint( 0 , 10 , ( 4 , 5 ) )

print ( t)

print ( t. sum ( ) )

print ( t. sum ( dim= 0 ) )

print ( t. sum ( dim= 0 , keepdim= True ) )

print ( t. sum ( dim= 1 ) )

print ( t. sum ( dim= 1 , keepdim= True ) )

print ( t. argmax( dim= 0 ) )

print ( t. argmax( dim= 1 ) )

t1 = torch. randint( 0 , 10 , size= ( 4 , 5 ) )

t2 = torch. randint( 0 , 10 , size= ( 4 , 5 ) )

print ( t1)

print ( t2)

print ( t1[ 0 ] . dot( t2[ 0 ] ) )

print ( torch. concat( ( t1, t2) ) )

print ( torch. vstack( ( t1, t2) ) )

print ( torch. concat( ( t1, t2) , dim= 1 ) )

print ( torch. hstack( ( t1, t2) ) )

print ( "--------------切割----------------" )

t = torch. randint( 0 , 10 , size= ( 4 , 5 ) )

print ( t)

print ( t. split( [ 1 , 2 , 1 ] ) )

print ( torch. permute( t, [ 1 , 0 ] ) . shape)

print ( t. transpose( 1 , 0 ) . shape)

import torch

x = torch. ones( 1 , 1 , requires_grad= True )

y = 2 * x + 2

y. backward( )

print ( x. grad)

from torch import nn, optim, tensor

X = tensor( [ [ 1.0 ] , [ 2.0 ] , [ 3.0 ] , [ 4.0 ] ] )

Y = tensor( [ [ 3.0 ] , [ 5.0 ] , [ 7.0 ] , [ 9.0 ] ] )

model = nn. Linear( 1 , 1 )

loss_fn = nn. MSELoss( )

optimizer = optim. SGD( model. parameters( ) , 0.001 )

for epoch in range ( 1000 ) :

for x, y in zip ( X, Y) :

y_pred = model( x)

loss = loss_fn( y, y_pred)

optimizer. zero_grad( )

loss. backward( )

optimizer. step( )

weight = model. weight

print ( weight)

bias = model. bias

print ( bias)

import torch

from torch import nn, float

data = [ [ 1 , 3 , 5 , 7 , 9 , 1 ] ,

[ 2 , 4 , 6 , 8 , 10 , 0 ] ,

[ 11 , 13 , 15 , 17 , 19 , 1 ] ,

[ 12 , 14 , 16 , 18 , 20 , 0 ] ,

[ 21 , 23 , 25 , 27 , 29 , 1 ] ,

[ 22 , 24 , 26 , 28 , 30 , 0 ] ,

[ 31 , 33 , 35 , 37 , 39 , 1 ] ,

[ 32 , 34 , 36 , 38 , 40 , 0 ] ,

[ 41 , 43 , 45 , 47 , 49 , 1 ] ,

[ 42 , 44 , 46 , 48 , 50 , 0 ] , ]

t_data = torch. tensor( data, dtype= float )

X = t_data[ : , : - 1 ]

print ( type ( X) )

print ( X)

Y = t_data[ : , - 1 ]

Y = Y. reshape( - 1 , 1 )

print ( type ( X) )

print ( Y)

print ( X. shape)

model = nn. Sequential(

nn. Linear( 5 , 50 ) ,

nn. Linear( 50 , 1 ) ,

nn. Sigmoid( )

)

state_dict = model. state_dict( )

print ( state_dict)

loss_fn = nn. BCELoss( )

optim_sgd = torch. optim. SGD( model. parameters( ) , 0.001 )

batch_size = 2

steps = 10 // 2

for epoch in range ( 100 ) :

for batch in range ( steps) :

start = batch * batch_size

end = start + batch_size

x = X[ start: end]

y = Y[ start: end]

y_pred = model( x)

loss = loss_fn( y_pred, y)

optim_sgd. zero_grad( )

loss. backward( )

optim_sgd. step( )

print ( model. state_dict( ) )

acc_rate = ( ( model( X) . data. numpy( ) >= 0.5 ) == Y. numpy( ) ) . mean( )

import torch

from torch import nn, float

import numpy as np

data = [ [ 1 , 1 ] ,

[ 2 , 0 ] ,

[ 11 , 1 ] ,

[ 12 , 0 ] ,

[ 21 , 1 ] ,

[ 22 , 0 ] ,

[ 31 , 1 ] ,

[ 32 , 0 ] ,

[ 41 , 1 ] ,

[ 42 , 0 ] , ]

t_data = torch. tensor( data, dtype= float )

X = t_data[ : , : - 1 ]

Y = t_data[ : , - 1 ]

Y = Y. reshape( - 1 , 1 )

print ( X. shape)

class DemoModl ( nn. Module) :

def __init__ ( self) :

super ( ) . __init__( )

self. lin_1 = nn. Linear( 1 , 50 )

self. lin_2 = nn. Linear( 50 , 1 )

self. sigmod = nn. Sigmoid( )

self. activate = nn. ReLU( )

def forward ( self, input ) :

x = self. lin_1( input )

x = self. activate( x)

x = self. lin_2( x)

x = self. sigmod( x)

return x

lr = 0.001

def get_model ( ) :

model = DemoModl( )

return model, torch. optim. Adam( model. parameters( ) , lr= lr)

loss_fn = nn. BCELoss( )

model, opt = get_model( )

batch_size = 2

steps = 10 // 2

for epoch in range ( 1000 ) :

for batch in range ( steps) :

start = batch * batch_size

end = start + batch_size

x = X[ start: end]

y = Y[ start: end]

y_pred = model( x)

loss = loss_fn( y_pred, y)

opt. zero_grad( )

loss. backward( )

opt. step( )

print ( 'loss=========' , loss_fn( model( X) , Y) )

acc_rate = ( ( model( X) . data. numpy( ) >= 0.5 ) == Y. numpy( ) ) . mean( )

print ( acc_rate)

print ( np. unique( model( X) . data. numpy( ) ) )

参考