-

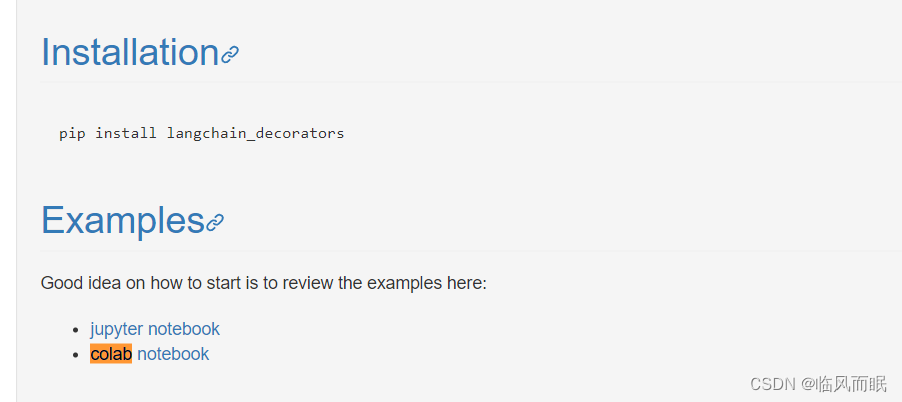

libraryIO的链接:https://libraries.io/pypi/langchain-decorators

-

来colab玩玩它的demo

-

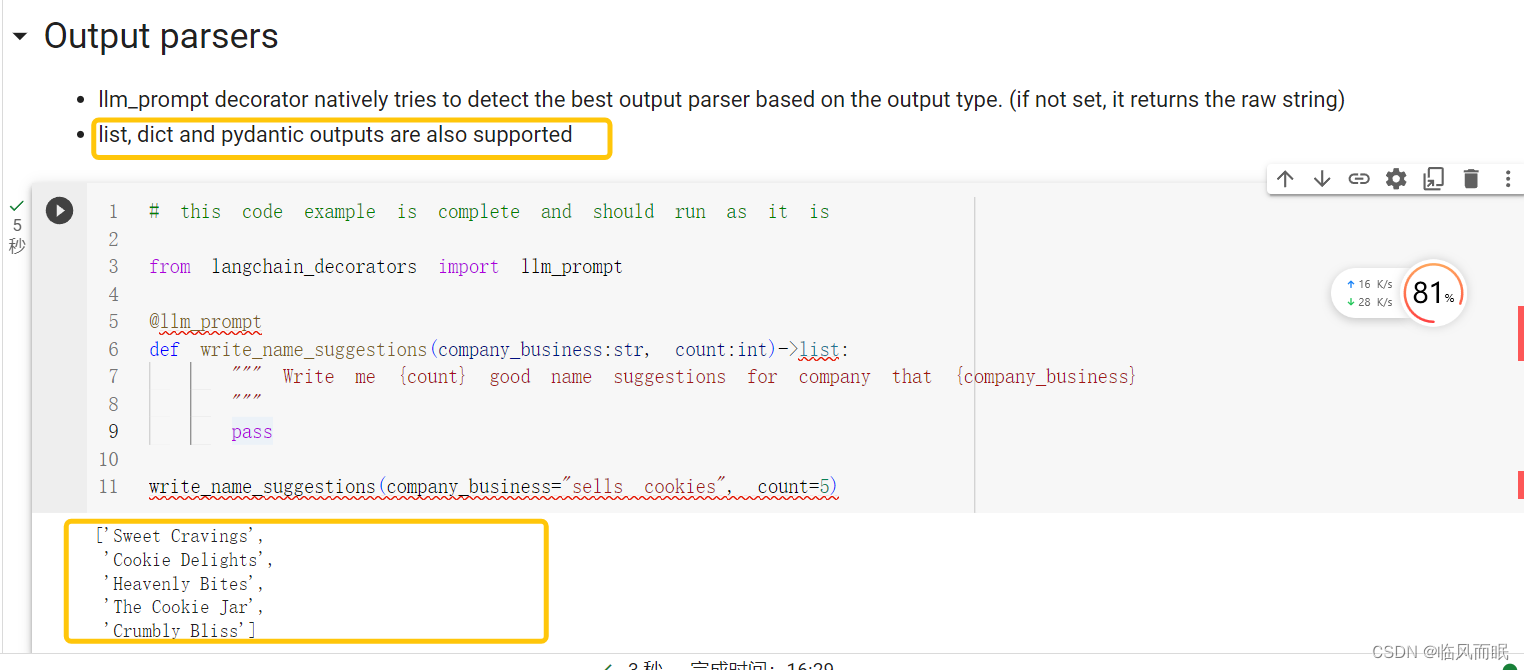

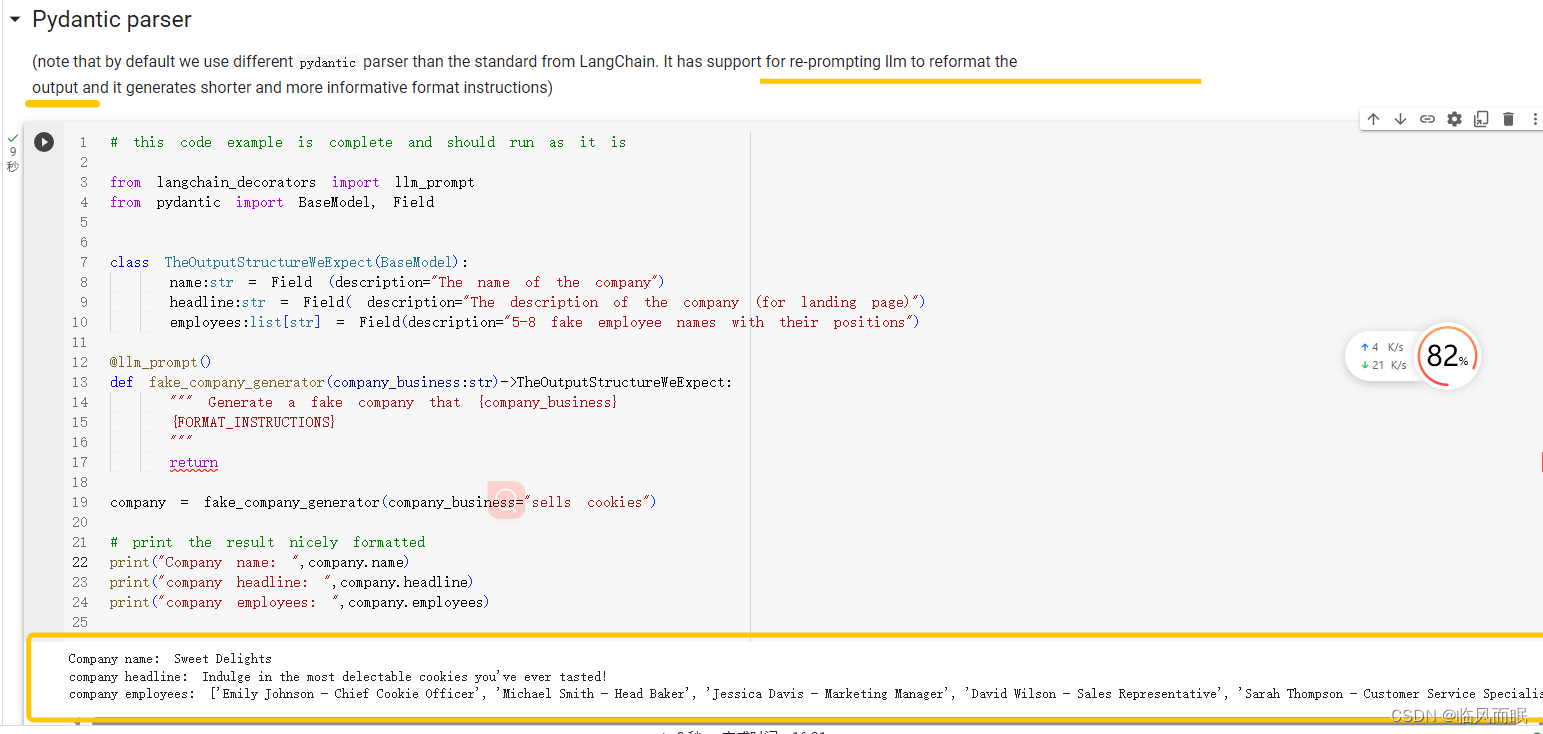

感觉这确实是个挺好用的库

- 想到之前纯调prompt来控制输出格式的痛苦,这个可太有效了


-

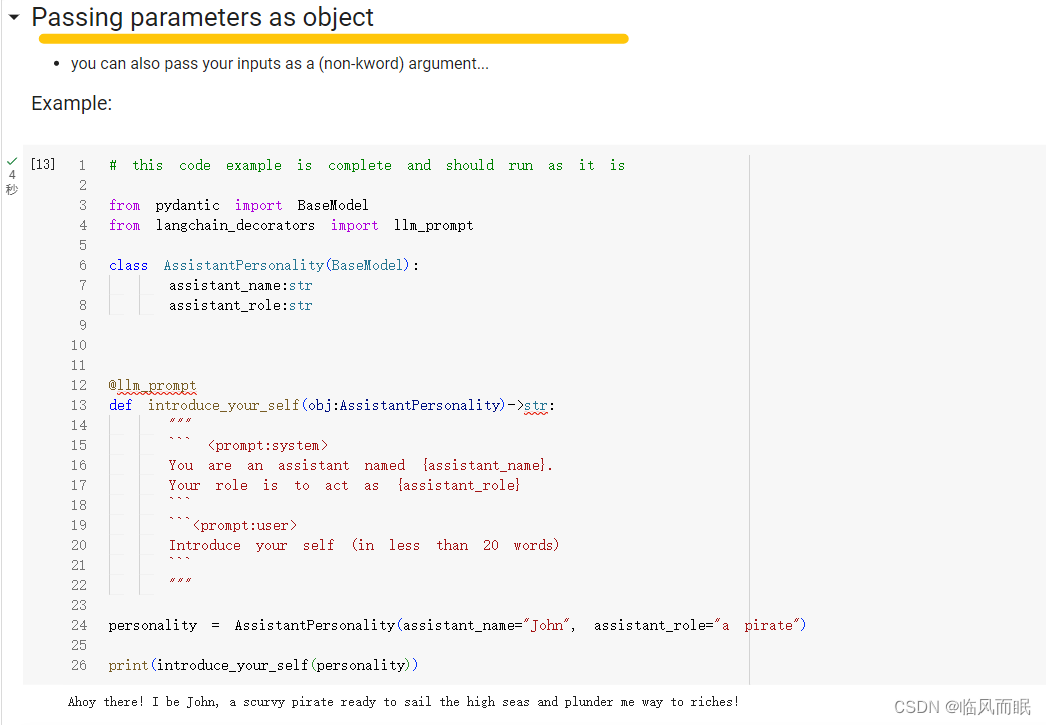

cool~

-

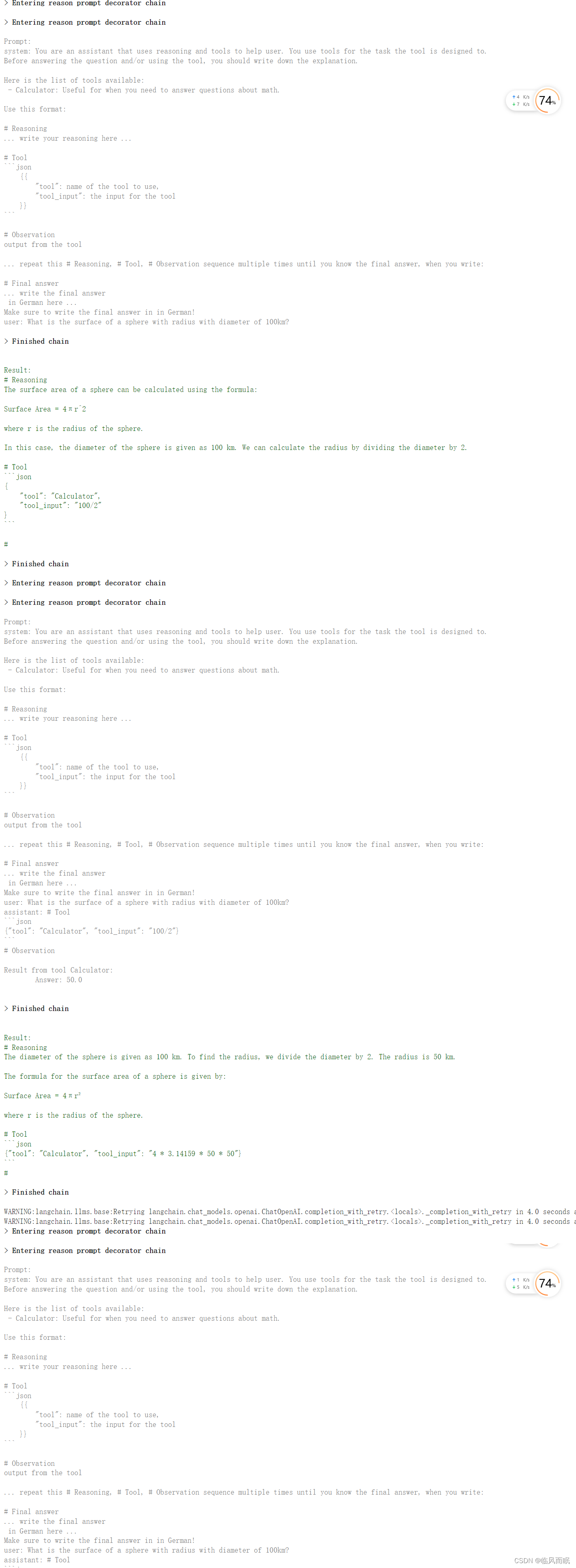

最下面这个react的多智能体例子很好玩,来看看!

````python
import json
from textwrap import dedent
from typing import List, Optional

from langchain.agents import load_tools
from langchain.tools.base import BaseTool
from langchain_decorators import GlobalSettings, PromptTypes, llm_prompt
from langchain_decorators.output_parsers import JsonOutputParser

tools = load_tools(["llm-math"], llm=GlobalSettings.get_current_settings().default_llm)


# you may, or may not use pydantic as your base class... totally up to you
class MultilingualAgent:
    def __init__(self, tools: List[BaseTool], result_language: Optional[str] = None) -> None:
        self.tools = tools
        # we can refer to our fields in all our prompts
        self.result_language = result_language
        self.agent_scratchpad = ""  # we initialize our scratchpad
        self.feedback = ""  # feedback for the model if we get an error
        # other settings
        self.iterations = 10
        self.agent_format_instructions = dedent("""\
            # Reasoning
            ... write your reasoning here ...
            # Tool
            ```json
            {{
                "tool": name of the tool to use,
                "tool_input": the input for the tool
            }}
            ```
            # Observation
            output from the tool
            ... repeat this # Reasoning, # Tool, # Observation sequence multiple times until you know the final answer, when you write:
            # Final answer
            ... write the final answer
            """)

    @property
    def tools_description(self) -> str:
        # we can refer to properties in our prompts too
        return "\n".join([f" - {tool.name}: {tool.description}" for tool in self.tools])

    # AGENT_REASONING prompt type; the markdown parser returns a dict keyed by the
    # "# ..." headings, and stopping at "Observation" keeps the model from inventing tool output
    @llm_prompt(prompt_type=PromptTypes.AGENT_REASONING, output_parser="markdown", stop_tokens=["Observation"], verbose=True)
    def reason(self, question: str) -> dict:
        """
        The system prompt:
        ```<prompt:system>
        You are an assistant that uses reasoning and tools to help user. You use tools for the task the tool is designed to.

        Before answering the question and/or using the tool, you should write down the explanation.

        Here is the list of tools available:
        {tools_description}

        Use this format:
        {agent_format_instructions}{? in {result_language}?} here ...

        {? Make sure to write the final answer in {result_language}!?}
        ```

        User question:
        ```<prompt:user>
        {question}
        ```

        Scratchpad:
        ```<prompt:assistant>
        {agent_scratchpad}
        ```

        ```<prompt:user>
        {feedback}
        ```
        """
        return

    def act(self, tool_name: str, tool_input: str) -> None:
        # case-insensitive lookup; next(..., None) returns None instead of raising StopIteration
        tool = next((t for t in self.tools if t.name.lower() == tool_name.lower()), None)
        if tool is None:
            self.feedback = f"Tool {tool_name} is not available. Available tools are: {self.tools_description}"
            return
        try:
            result = tool.run(tool_input)
        except Exception as e:
            if self.feedback:
                # we've already experienced an error, so we are not going to try forever... let's raise this one
                raise
            self.feedback = f"Tool {tool_name} failed with error: {e}.\nLet's fix it and try again."
            return  # no result to record, let the model retry
        self.feedback = ""  # the tool succeeded, so clear any earlier error feedback
        tool_instructions = json.dumps({"tool": tool.name, "tool_input": tool_input})
        self.agent_scratchpad += f"# Tool\n```json\n{tool_instructions}\n```\n# Observation\n\nResult from tool {tool_name}:\n\t{result}\n"

    def run(self, question: str) -> str:
        reasoning = None
        for _ in range(self.iterations):
            reasoning = self.reason(question=question)
            if reasoning.get("Final answer") is not None:
                return reasoning.get("Final answer")
            tool_info = reasoning.get("Tool")
            tool_name, tool_input = None, None
            if tool_info:
                tool_info_parsed = JsonOutputParser().parse(tool_info)
                tool_name = tool_info_parsed.get("tool")
                tool_input = tool_info_parsed.get("tool_input")
            if tool_name is None or tool_input is None:
                self.feedback = "Your response was not in the expected format. Please make sure to respond in the correct format:\n" + self.agent_format_instructions
                continue
            self.act(tool_name, tool_input)
        raise Exception(f"Failed to answer the question after {self.iterations} iterations. Last result: {reasoning}")


agent = MultilingualAgent(tools=tools, result_language="German")
result = agent.run("What is the surface of a sphere with a diameter of 100km?")
print("\n\nHere is the agent's answer:", result)
````
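To see the control flow of `run`, `reason` and `act` without an API key, here is a hypothetical, self-contained sketch using only the standard library. Everything in it is an assumption for illustration, not part of langchain-decorators: `ScriptedLLM` replays canned replies instead of calling a model, the toy `calculator` stands in for `llm-math`, and `split_sections` / `parse_tool_call` are simplified stand-ins for the markdown and JSON output parsers. The loop is the same ReAct pattern: reason, call a tool, append the observation to the scratchpad, repeat until a `# Final answer` section appears.

```python
import json
import math
import re
from typing import Callable, Dict, List


class ScriptedLLM:
    """Hypothetical stand-in for an LLM: replays canned replies in order."""

    def __init__(self, replies: List[str]) -> None:
        self._replies = iter(replies)

    def __call__(self, prompt: str) -> str:
        return next(self._replies)  # the prompt is ignored in this toy


def calculator(expression: str) -> str:
    """Toy tool standing in for llm-math; eval is NOT safe for untrusted input."""
    allowed = {"pi": math.pi, "sqrt": math.sqrt}
    return str(eval(expression, {"__builtins__": {}}, allowed))


def split_sections(text: str) -> Dict[str, str]:
    """Simplified markdown parsing: map each '# Heading' line to the text below it."""
    parts = re.split(r"^#[ \t]+(.+)$", text, flags=re.MULTILINE)
    return {parts[i].strip(): parts[i + 1].strip() for i in range(1, len(parts), 2)}


def parse_tool_call(block: str) -> Dict[str, str]:
    """Extract the first {...} JSON object (fenced or bare); {} if none parses."""
    match = re.search(r"\{.*\}", block, flags=re.DOTALL)
    if not match:
        return {}
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError:
        return {}


def run_agent(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
              question: str, iterations: int = 5) -> str:
    scratchpad, feedback = "", ""
    for _ in range(iterations):
        # Reason: give the model the question, the scratchpad so far and any feedback
        reply = split_sections(llm(f"Question: {question}\n{scratchpad}\n{feedback}"))
        if "Final answer" in reply:
            return reply["Final answer"]
        call = parse_tool_call(reply.get("Tool", ""))
        tool = tools.get(str(call.get("tool", "")).lower())
        if tool is None or "tool_input" not in call:
            feedback = "Reply was not in the expected format or named an unknown tool."
            continue
        # Act: run the tool and record the call plus its observation
        observation = tool(str(call["tool_input"]))
        feedback = ""
        scratchpad += f"# Tool\n{json.dumps(call)}\n# Observation\n{observation}\n"
    raise RuntimeError(f"No final answer after {iterations} iterations")


llm = ScriptedLLM([
    '# Reasoning\nArea of a sphere is pi * d**2.\n# Tool\n{"tool": "calculator", "tool_input": "pi * 100**2"}',
    "# Reasoning\nThe calculator returned the area.\n# Final answer\nAbout 31415.93 km^2",
])
print(run_agent(llm, {"calculator": calculator}, "Surface of a sphere with a diameter of 100 km?"))
```

Because the replies are hard-coded, the printed answer is simply the scripted text. For the question used in the original example, the true value is A = 4πr² = πd² = π · (100 km)² ≈ 31,415.93 km², which is what the `calculator` call evaluates.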

Below is the output:

This package really does make things a lot more convenient~
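One detail of the prompt template in the example above is the `{? ... ?}` syntax. As far as I can tell from the project's README, langchain-decorators treats it as an optional section: the section is rendered only when every variable referenced inside it has a non-empty value, which is how `{? in {result_language}?}` disappears when no `result_language` is given. The function below is a hypothetical, simplified re-implementation with the standard library (`render_optional` is an illustrative name, not the library's code), assuming no nested optional sections.

```python
import re
from typing import Dict

# Hypothetical sketch of optional-section rendering, not langchain-decorators' implementation.
OPTIONAL_SECTION = re.compile(r"\{\?(.*?)\?\}", re.DOTALL)  # matches {? ... ?}
PLACEHOLDER = re.compile(r"\{(\w+)\}")  # matches {name}


def render_optional(template: str, values: Dict[str, object]) -> str:
    def keep_or_drop(match) -> str:
        body = match.group(1)
        # Drop the whole section if any variable it references is missing or empty.
        if any(not values.get(name) for name in PLACEHOLDER.findall(body)):
            return ""
        return body

    resolved = OPTIONAL_SECTION.sub(keep_or_drop, template)
    # Fill the remaining placeholders (missing values become empty strings in this sketch).
    return PLACEHOLDER.sub(lambda m: str(values.get(m.group(1), "")), resolved)


print(render_optional("Write the answer{? in {lang}?}.", {"lang": "German"}))
print(render_optional("Write the answer{? in {lang}?}.", {}))
```

Resolving optional sections before filling ordinary placeholders keeps the two concerns separate: the first pass only decides which text survives, the second only substitutes values.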

Learning the LangChain-Decorators package

2026/2/12 19:44:27

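This article was contributed by an internet user. Its views are solely those of the author and do not represent this site's position. This site only provides information storage space, does not claim ownership, and assumes no related legal liability. If you republish it, please credit the source: http://www.coloradmin.cn/o/1096302.html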

如若内容造成侵权/违法违规/事实不符,请联系多彩编程网进行投诉反馈,一经查实,立即删除!相关文章

MySQL 函数 索引 事务 管理

目录 一. 字符串相关的函数

二.数学相关函数

编辑

三.时间日期相关函数 date.sql

四.流程控制函数 centrol.sql

分页查询

使用分组函数和分组字句 group by

数据分组的总结

多表查询

自连接

子查询 subquery.sql

五.表的复制

六.合并查询 七.表的外连接

…

【微服务 SpringCloud】实用篇 · 服务拆分和远程调用

微服务(2) 文章目录 微服务(2)1. 服务拆分原则2. 服务拆分示例1.2.1 导入demo工程1.2.2 导入Sql语句 3. 实现远程调用案例1.3.1 案例需求:1.3.2 注册RestTemplate1.3.3 实现远程调用1.3.4 查看效果 4. 提供者与消费者 …

windows安装npm教程

在安装和使用NPM之前,我们需要先了解一下,NPM 是什么,能干啥?

一、NPM介绍 NPM(Node Package Manager)是一个用于管理和共享JavaScript代码包的工具。它是Node.js生态系统的一部分,广泛用于构…

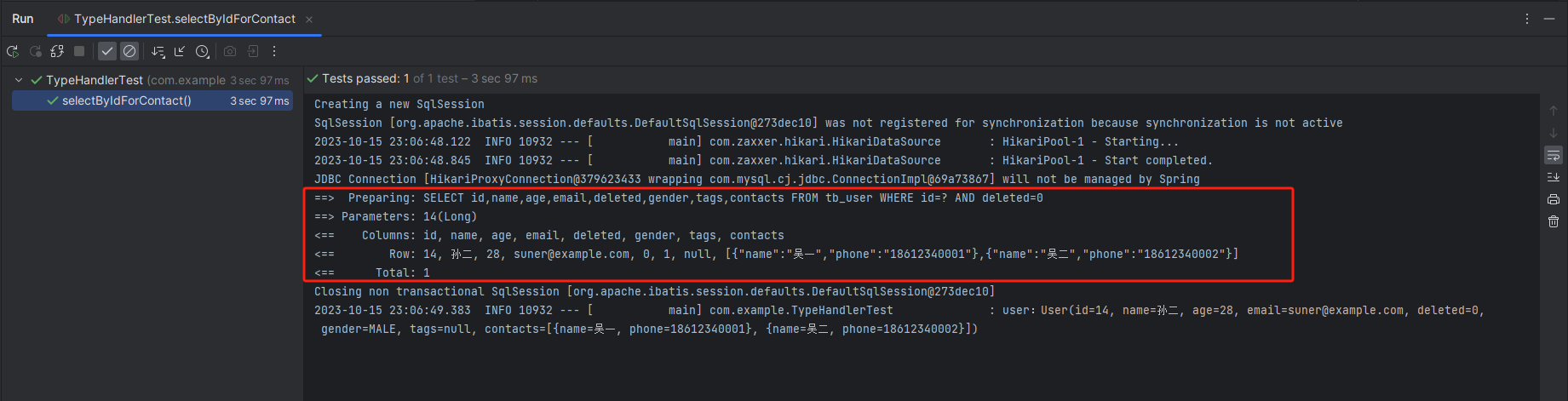

MyBatisPlus(十八)字段类型处理器:对象存为JSON字符串

说明

将一个复杂对象(集合或者普通对象),作为 JSON字符串 存储到数据库表中的某个字段中。

MyBatisPlus 提供优雅的方式,映射复杂对象类型字段和数据库表中的字符串类型字段。

核心注解

TableName(autoResultMap true)TableF…

【Spring Boot 源码学习】@Conditional 条件注解

Spring Boot 源码学习系列 Conditional 条件注解 引言往期内容主要内容1. 初识 Conditional2. Conditional 的衍生注解 总结 引言

前面的博文,Huazie 带大家从 Spring Boot 源码深入了解了自动配置类的读取和筛选的过程,然后又详解了OnClassCondition、…

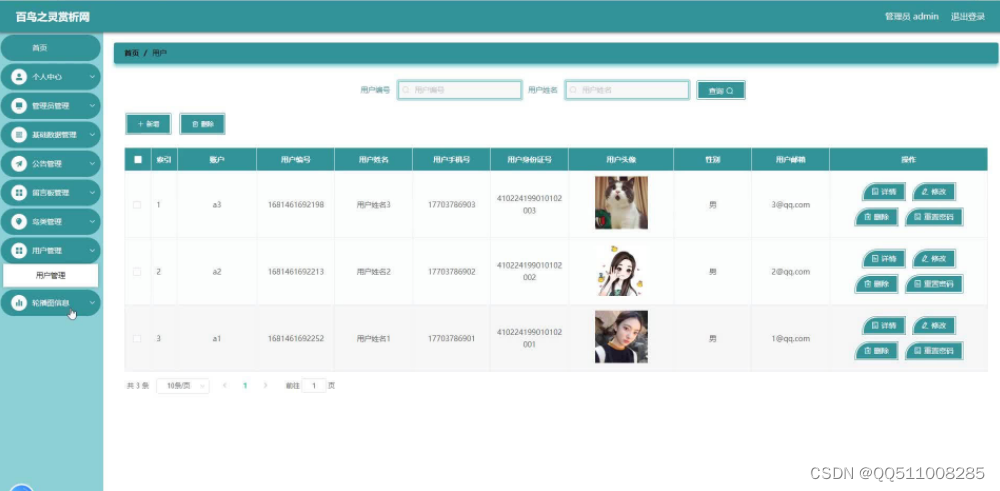

nodejs+vue百鸟全科赏析网站

目 录 摘 要 I ABSTRACT II 目 录 II 第1章 绪论 1 1.1背景及意义 1 1.2 国内外研究概况 1 1.3 研究的内容 1 第2章 相关技术 3 2.1 nodejs简介 4 2.2 express框架介绍 6 2.4 MySQL数据库 4 第3章 系统分析 5 3.1 需求分析 5 3.2 系统可行性分析 5 3.2.1技术可行性:…

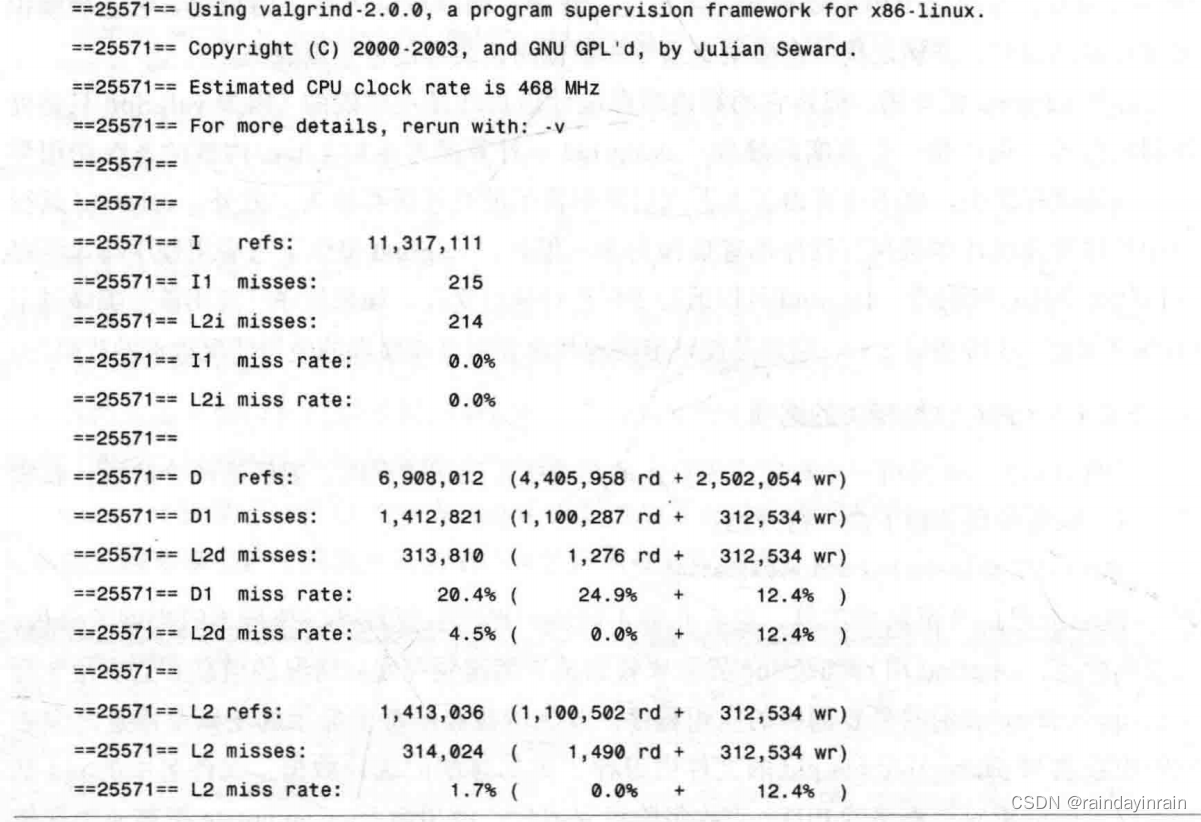

Linux性能优化--性能工具:特定进程内存

5.0 概述

本章介绍的工具使你能诊断应用程序与内存子系统之间的交互,该子系统由Linux内核和CPU管理。由于内存子系统的不同层次在性能上有数量级的差异,因此,修复应用程序使其有效地使用内存子系统会对程序性能产生巨大的影响。 阅读本章后&…

ubuntu 怎样查看隐藏文件

1.通过界面可视化方式

鼠标点击进入该待显示的文件路径,按下ctrl h 刷新,则显示隐藏文件 2. 通过控制台命令行

若使用命令行,则使用命令:ls -a 显示所有文件,也包括隐藏文件

MPNN 模型:GNN 传递规则的实现

首先,假如我们定义一个极简的传递规则 A是邻接矩阵,X是特征矩阵, 其物理意义就是 通过矩阵乘法操作,批量把图中的相邻节点汇聚到当前节点。

但是由于A的对角线都是 0.因此自身的节点特征会被过滤掉。

图神经网络的核心是 吸周围…

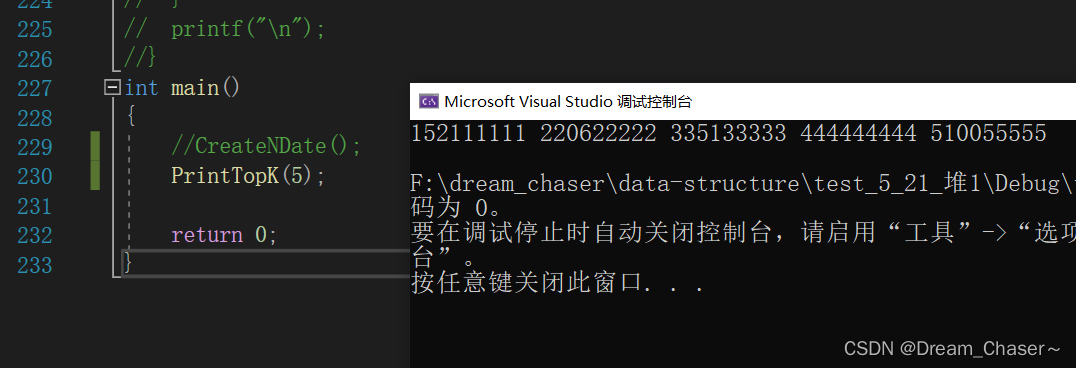

【数据结构与算法】堆排序(向下和向上调整)、TOP-K问题(超详细解读)

前言: 💥🎈个人主页:Dream_Chaser~ 🎈💥 ✨✨专栏:http://t.csdn.cn/oXkBa ⛳⛳本篇内容:c语言数据结构--堆排序,TOP-K问题 目录

堆排序

1.二叉树的顺序结构

1.1父节点和子节点…

Ubuntu:ESP-IDF 开发环境配置【保姆级】

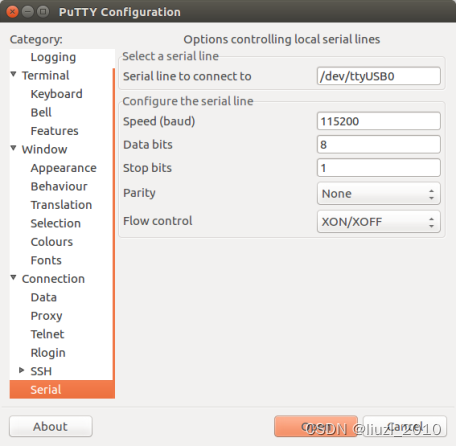

物联网开发学习笔记——目录索引 参考官网:ESP-IDF 物联网开发框架 | 乐鑫科技

ESP-IDF 是乐鑫官方推出的物联网开发框架,支持 Windows、Linux 和 macOS 操作系统。适用于 ESP32、ESP32-S、ESP32-C 和 ESP32-H 系列 SoC。它基于 C/C 语言提供了一个自给…

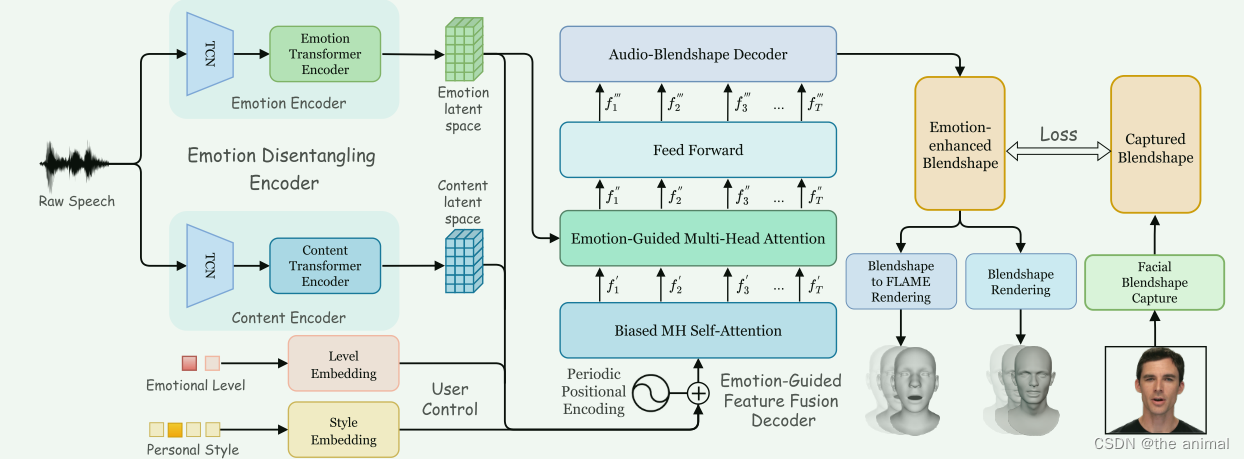

EmoTalk: Speech-Driven Emotional Disentanglement for 3D Face Animation

问题:现存的方法经常忽略面部的情感或者不能将它们从语音内容中分离出来。 方法:本文提出了一种端到端神经网络来分解语音中的不同情绪,从而生成丰富的 3D 面部表情。 1.我们引入了情感分离编码器(EDE),通过交叉重构具有不同情感标签的语音信号来分离语音中的情感和内容。…

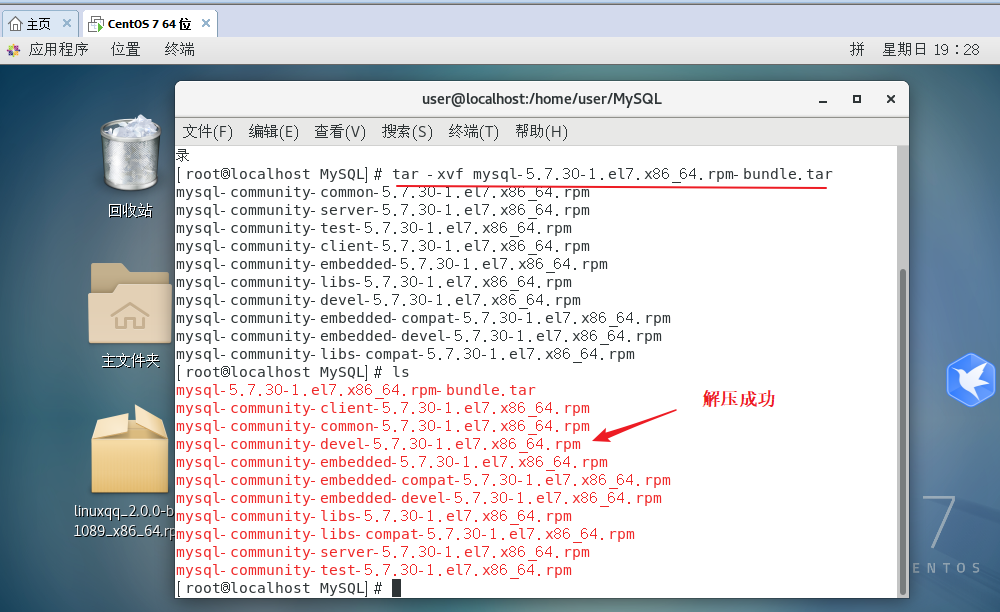

MySQL 的下载与安装

MySQL 的下载

https://cdn.mysql.com/archives/mysql-5.7/mysql-5.7.30-1.el7.x86_64.rpm-bundle.tar

将下载的数据包拉到虚拟机的linux系统的主文件夹下,创建一个MySQL文件存放 安装MySQL

1、解压数据包

tar -xvf mysql-5.7.30-1.el7.x86_64.rpm-bundle.tar -x: 表示解压…

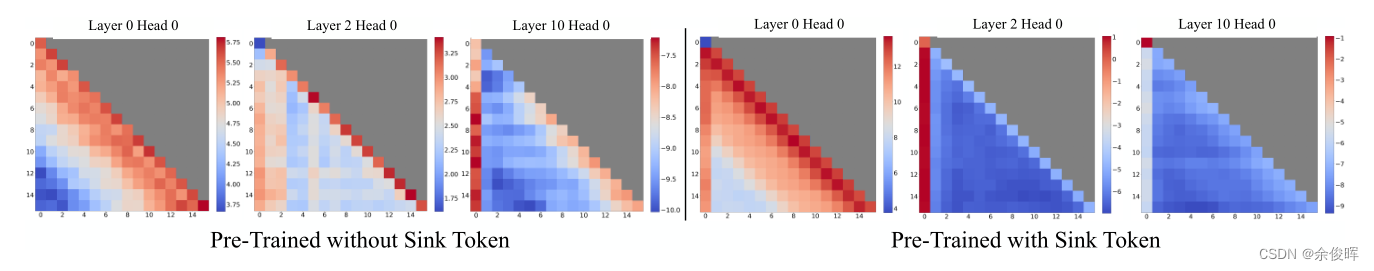

【LLM】浅谈 StreamingLLM中的attention sink和sink token

前言

Softmax函数 SoftMax ( x ) i e x i e x 1 ∑ j 2 N e x j , x 1 ≫ x j , j ∈ 2 , … , N \text{SoftMax}(x)_i \frac{e^{x_i}}{e^{x_1} \sum_{j2}^{N} e^{x_j}}, \quad x_1 \gg x_j, j \in 2, \dots, N SoftMax(x)iex1∑j2Nexjexi,x1≫xj,j∈2,……

智慧公厕:提升城市形象的必备利器

智慧公厕是什么?智慧公厕基于物联网的技术基础,整合了互联网、人工智能、大数据、云计算、区块链、5G/4G等最新技术,针对公共厕所日常建设、使用、运营和管理的全方位整体解决方案。智慧公厕广泛应用于旅游景区、城市公厕、购物中心、商业楼宇…

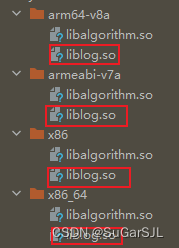

2 files found with path ‘lib/armeabi-v7a/liblog.so‘ from inputs:

下图两个子模块都用CMakeLists.txt引用了android的log库,编译后,在它们的build目录下都有liblog.so的文件。 四个CPU架构的文件夹下都有。 上层模块app不能决定使用哪一个,因此似乎做了合并,路径就是报错里的哪个路径,…

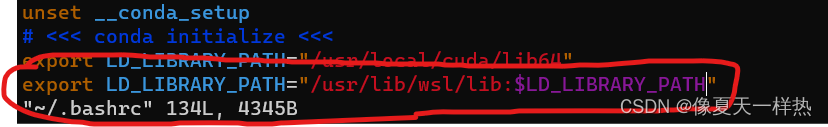

WSL Ubuntu 22.04.2 LTS 安装paddle踩坑日记

使用conda安装paddlepaddle-gpu: conda install paddlepaddle-gpu2.5.1 cudatoolkit11.7 -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/Paddle/ -c conda-forge 等待安装...

报错处理:

(1)(1)PreconditionNotMetError: Cannot load cudnn shared libr…

[BigData:Hadoop]:安装部署篇

文章目录 一:机器103设置密钥对免密登录二:机器102设置密钥对免密登录三:机器103安装Hadoop安装包3.1:wget拉取安装Hadoop包3.2:解压移到指定目录3.2.1:解压移动路径异常信息3.2.2:切换指定目录…

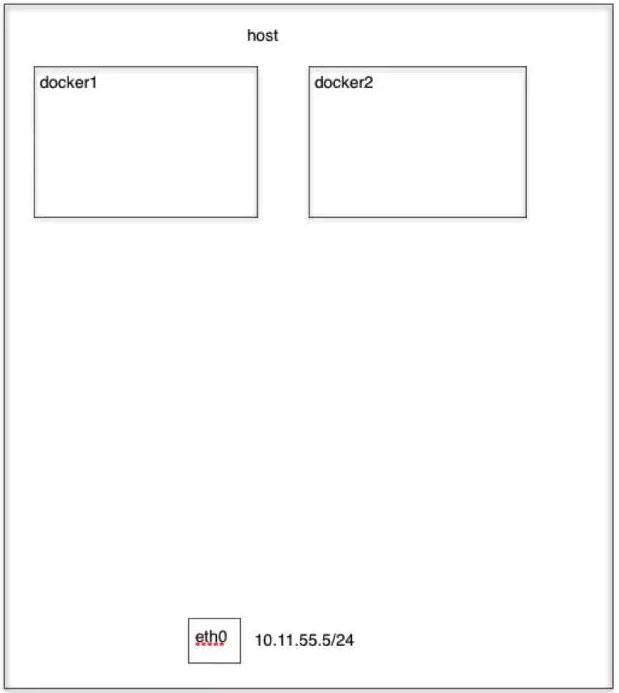

Docker容器端口暴露方式

【Bridge 模式】

当 Docker 进程启动时,会在主机上创建一个名为docker0的虚拟网桥,此主机上启动的 Docker 容器会连接到这个虚拟网桥上。虚拟网桥的工作方式和物理交换机类似,这样主机上的所有容器就通过交换机连在了一个二层网络中。从 doc…

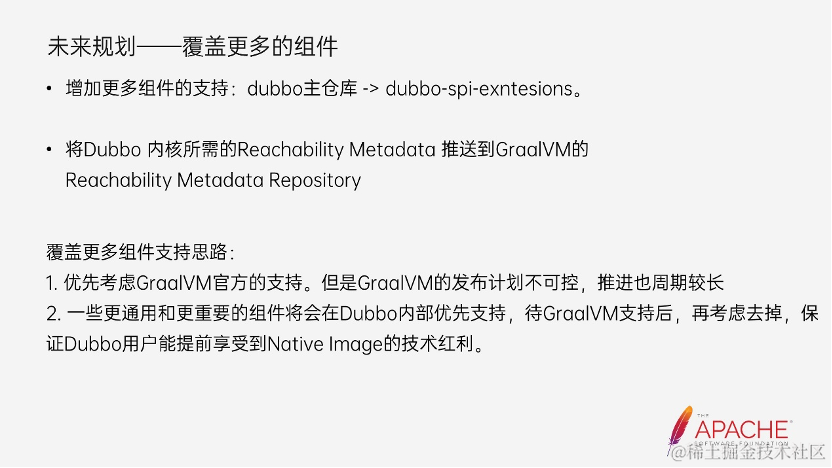

启动速度提升 10 倍:Apache Dubbo 静态化方案深入解析

作者:华钟明

文章摘要:

本文整理自有赞中间件技术专家、Apache Dubbo PMC 华钟明的分享。本篇内容主要分为五个部分:

-GraalVM 直面 Java 应用在云时代的挑战

-Dubbo 享受 AOT 带来的技术红利

-Dubbo Native Image 的实践和示例

-Dubbo…

![[BigData:Hadoop]:安装部署篇](https://img-blog.csdnimg.cn/4434177eac0d47449b9d78065899728b.png)