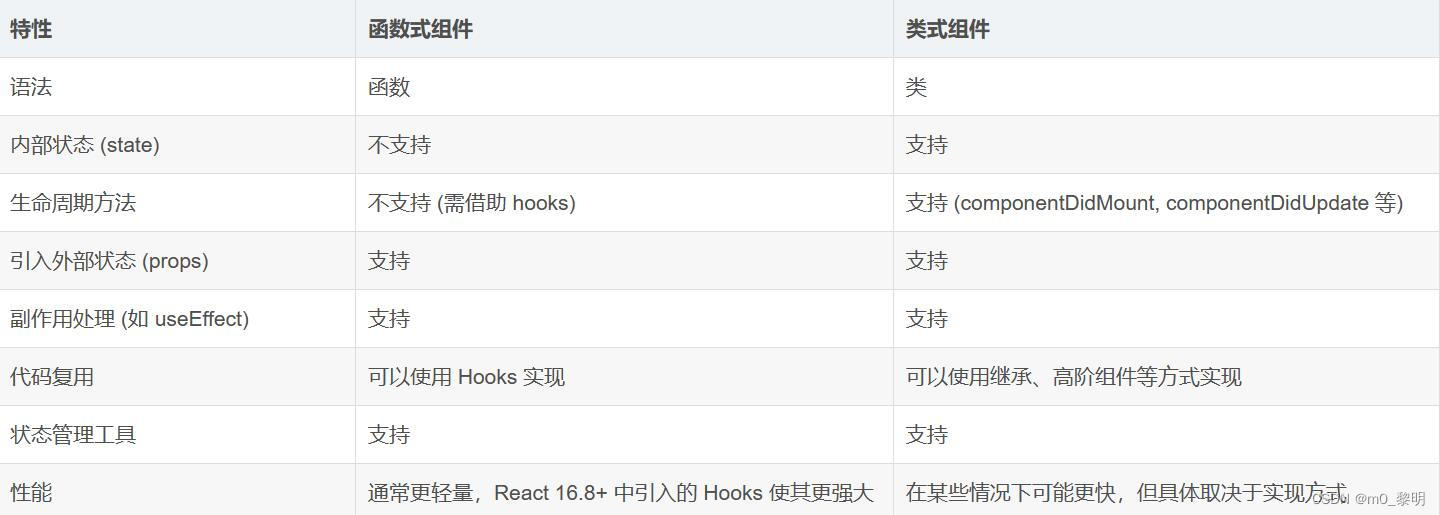

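Contents

- Preface
- Server Overview
- Preparation
  - Set a static IP on each server
  - Edit the hosts file
  - Disable the firewall and swap
  - Set the required kernel parameters
- Deployment Steps
  - Install containerd
  - Install the CRI tools (the docker CLI equivalent)
  - Install the Kubernetes cluster
  - Install the flannel network plugin
  - Install the Kuboard dashboard (optional)
- Coming Next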

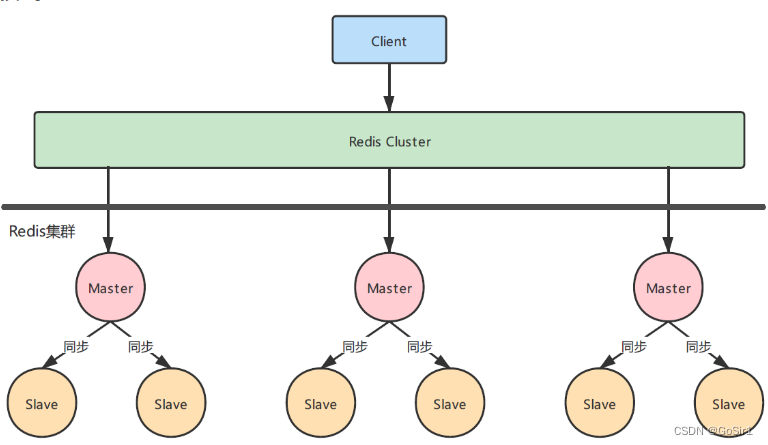

前言

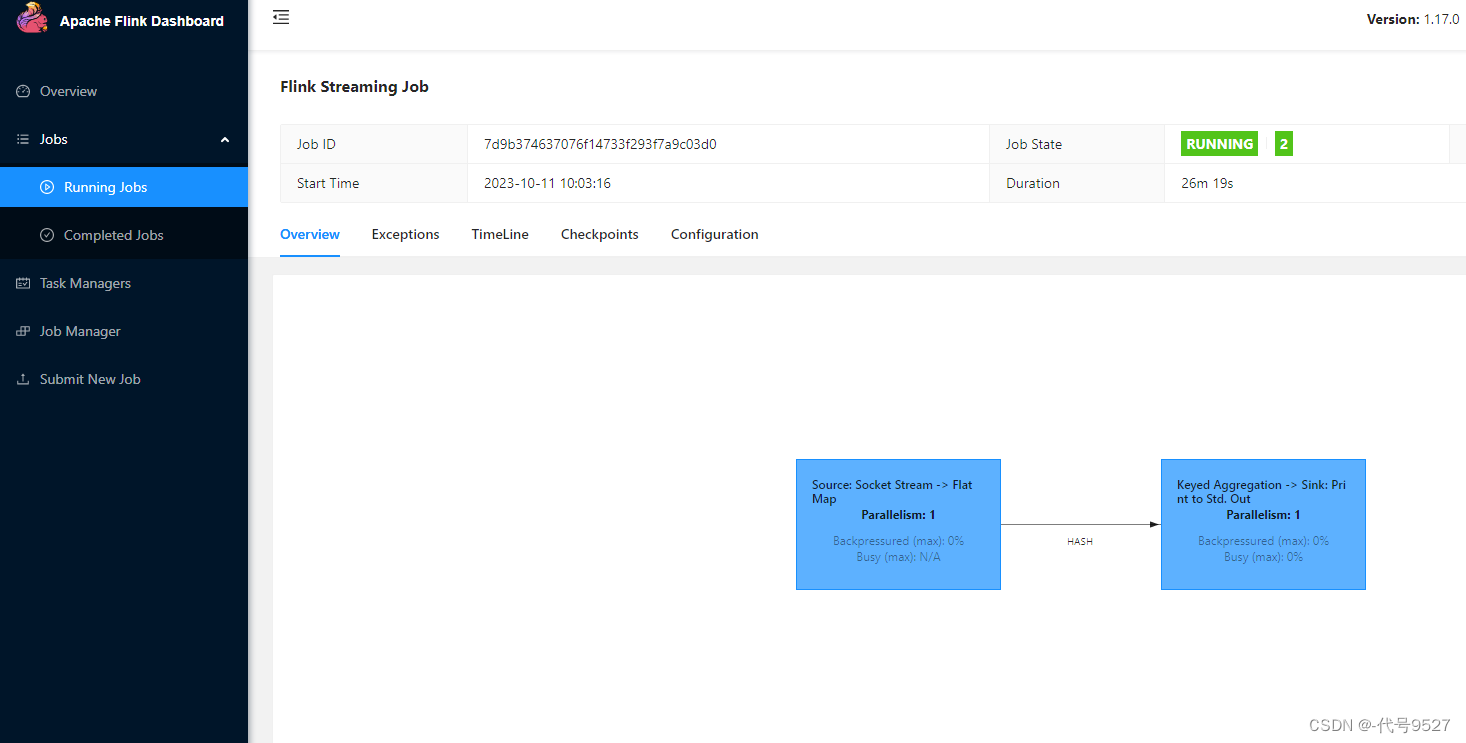

前面的博文我们讲解了Kubernetes概述架构与工作流程简述,应该都大致了解到了k8s的基本概念、用途、架构以及基础的执行流程。今天我们就直接实战用kubeadmin部署一个可以用于生产环境的k8s集群环境。

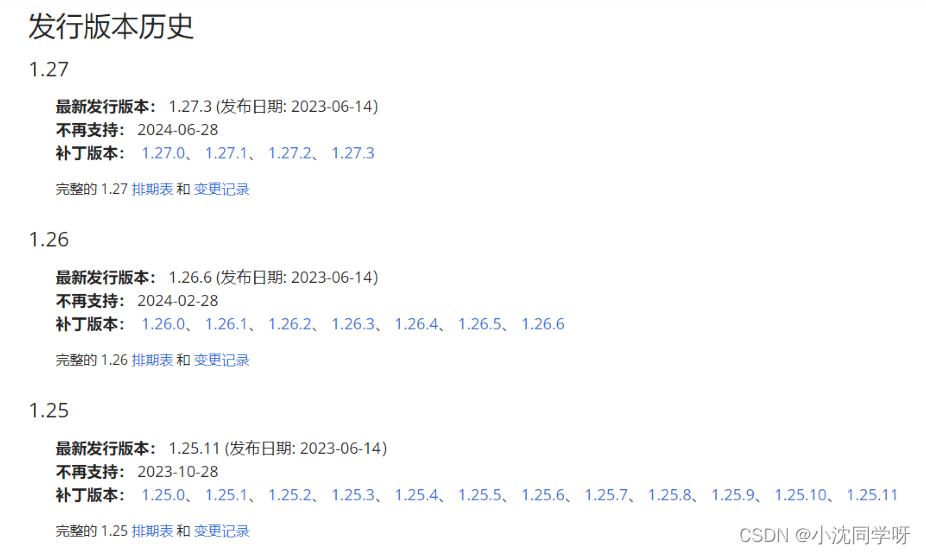

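We deploy the cluster with kubeadm, using Kubernetes v1.27.2. The Kubernetes release timeline as of the first half of 2023 is shown in the figure below.

We therefore deploy the relatively recent v1.27.2.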

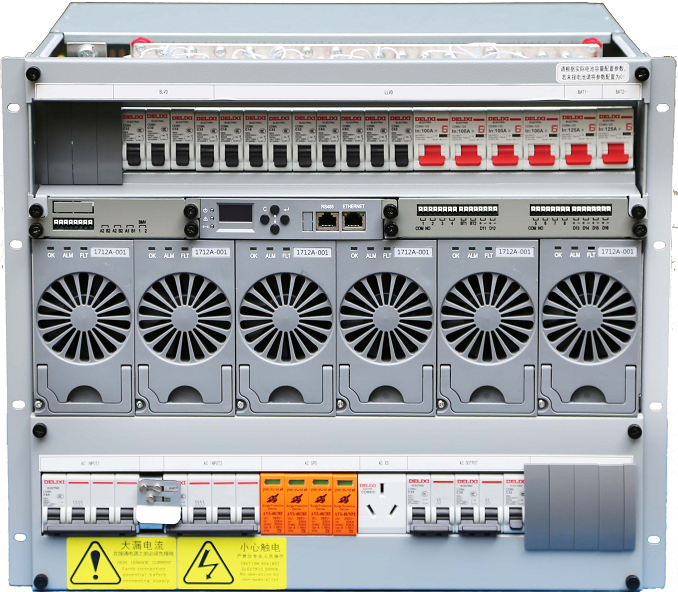

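Server Overview

Since the project is still in local development and testing, we use the following minimal deployment with a single master node. The OS and specs of each server are:

| OS | IP | Specs | Role |
|---|---|---|---|
| CentOS 7 | 10.10.22.91 | 30 GB RAM, 16 cores / 32 threads, 100 GB HDD | master |
| CentOS 7 | 10.10.22.82 | 30 GB RAM, 16 cores / 32 threads, 100 GB HDD | node1 |
| CentOS 7 | 10.10.22.199 | 30 GB RAM, 16 cores / 32 threads, 100 GB HDD | node2 |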

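Preparation

Set a static IP on each server

Use the `ip addr` command to view the NIC information. PS: I have already changed mine, which is why `inet 10.10.22.82/24` appears below.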

```
[root@master nexus3]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:66:6e:e1 brd ff:ff:ff:ff:ff:ff
    inet 10.10.22.82/24 brd 10.10.22.255 scope global noprefixroute ens192
       valid_lft forever preferred_lft forever
    inet6 fe80::220d:190:842d:aade/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
```

Edit `/etc/sysconfig/network-scripts/ifcfg-ens192` as follows:

```
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
# static IP
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens192"
UUID="20943376-ce4f-4410-ad6d-6c00565af006"
DEVICE="ens192"
ONBOOT="yes"
# IP address
IPADDR="10.10.22.82"
# gateway
GATEWAY="10.10.22.1"
# subnet mask
NETMASK="255.255.255.0"
# DNS server
DNS1="61.139.2.69"
```

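PS: Make the same change on the other servers, editing only UUID and IPADDR and leaving everything else unchanged.

Restart the network service:

```shell
systemctl restart network
```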
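Because the nodes' files differ only in `IPADDR` and `UUID`, the file can also be generated per node. The sketch below uses a hypothetical `render_ifcfg` shell function (not part of the original setup); all shared values are copied from the master's file, and the per-node UUID is assumed to come from a tool such as `uuidgen`.

```shell
# Hypothetical helper (not from the original article): print an ifcfg-ens192
# file for one node. Only IPADDR and UUID vary between nodes; every other
# value is copied from the master's file.
render_ifcfg() {
    ip_addr=$1   # this node's static IP, e.g. 10.10.22.199
    uuid=$2      # a unique connection UUID, e.g. from uuidgen
    cat <<EOF
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens192"
UUID="$uuid"
DEVICE="ens192"
ONBOOT="yes"
IPADDR="$ip_addr"
GATEWAY="10.10.22.1"
NETMASK="255.255.255.0"
DNS1="61.139.2.69"
EOF
}
```

On node2, for example: `render_ifcfg 10.10.22.199 "$(uuidgen)" > /etc/sysconfig/network-scripts/ifcfg-ens192`, then restart the network service.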

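Edit the hosts file

On every server in the Kubernetes cluster, append the following to `/etc/hosts`:

```shell
echo '
10.10.22.91 master
10.10.22.82 node1
10.10.22.199 node2
' >> /etc/hosts
```

Give each server its own hostname (run the matching command on the corresponding server):

```shell
# on 10.10.22.91
hostnamectl set-hostname master
# on 10.10.22.82
hostnamectl set-hostname node1
# on 10.10.22.199
hostnamectl set-hostname node2
```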
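Running the `echo ... >> /etc/hosts` command a second time appends duplicate lines. A sketch of an idempotent variant, using a hypothetical `add_hosts_entries` function that takes the hosts file path as an argument (so it can be tried on a scratch copy first); it assumes each entry is written exactly as `IP hostname` with a single space:

```shell
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any entry that is already present, so re-running adds nothing.
add_hosts_entries() {
    hosts_file=$1
    for entry in "10.10.22.91 master" "10.10.22.82 node1" "10.10.22.199 node2"; do
        # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
        if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
            printf '%s\n' "$entry" >> "$hosts_file"
        fi
    done
}
```

On each server: `add_hosts_entries /etc/hosts`.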

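Disable the firewall and swap

On every server in the Kubernetes cluster, run the following commands to permanently disable the firewall, swap, and SELinux:

```shell
systemctl stop firewalld && systemctl disable firewalld
# comment out the swap entry in /etc/fstab so swap stays off after a reboot
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
# disable SELinux permanently (after reboot) and switch it to permissive right now
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0
```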
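If the fstab `sed` is run twice, it prefixes an already commented swap line with a second `#`. A sketch of a variant that skips lines that are already comments; `comment_out_swap` is a hypothetical name, it relies on GNU `sed -i` as CentOS provides, and, like the original, it recognizes a swap entry by the word `swap` surrounded by whitespace:

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# Lines that are already comments are skipped ("b" jumps to the end of the
# script), so a second run does not produce "##".
comment_out_swap() {
    sed -i \
        -e '/^[[:space:]]*#/b' \
        -e '/[[:space:]]swap[[:space:]]/s/^/#/' \
        "$1"
}
```

On each server: `comment_out_swap /etc/fstab`. This only affects future boots; `swapoff -a` turns swap off for the running system.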

Set the required kernel parameters

On every server in the Kubernetes cluster, run:

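```shell
yum install net-tools -y
yum install chrony -y
yum install ipset ipvsadm -y
# keep the clocks of all nodes in sync
systemctl start chronyd
systemctl enable chronyd

# load the overlay and br_netfilter kernel modules at boot
cat <<EOF >> /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

# let bridged traffic pass through iptables and enable IP forwarding
cat <<EOF >> /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# IPVS modules (nf_conntrack_ipv4 is the module name on CentOS 7's 3.10 kernel;
# kernels 4.19 and later call it nf_conntrack)
cat <<EOF >> /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

# the same parameters again in /etc/sysctl.conf
cat <<EOF >> /etc/sysctl.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
```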

Then run:

```shell
modprobe overlay
modprobe br_netfilter
sysctl --system
chmod +x /etc/sysconfig/modules/ipvs.modules
sh +x /etc/sysconfig/modules/ipvs.modules
```
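After `sysctl --system`, it is worth confirming that the values actually took effect. For these keys, each dotted sysctl name maps to a file under `/proc/sys` with the dots turned into slashes, so a check can read those files directly. A sketch, assuming a hypothetical `check_k8s_sysctls` function and a `PROC_SYS_ROOT` override that exists only so the check can be exercised against a fake directory; note that the `net.bridge.*` files only exist once `br_netfilter` is loaded.

```shell
# Sketch: confirm the kernel parameters from /etc/sysctl.d/k8s.conf are live.
# PROC_SYS_ROOT is a hypothetical override for testing; leave it unset on a node.
PROC_SYS_ROOT=${PROC_SYS_ROOT:-/proc/sys}

check_k8s_sysctls() {
    status=0
    for key in net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables \
               net.ipv4.ip_forward; do
        path="$PROC_SYS_ROOT/$(printf '%s' "$key" | tr . /)"
        # a missing file usually means br_netfilter is not loaded yet
        value=$(cat "$path" 2>/dev/null) || value=""
        if [ "$value" = "1" ]; then
            echo "ok   $key = 1"
        else
            echo "FAIL $key = ${value:-missing}"
            status=1
        fi
    done
    return "$status"
}
```

On a node, `check_k8s_sysctls` prints one line per key and returns non-zero if any of them is not `1`.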

Deployment Steps

Install containerd

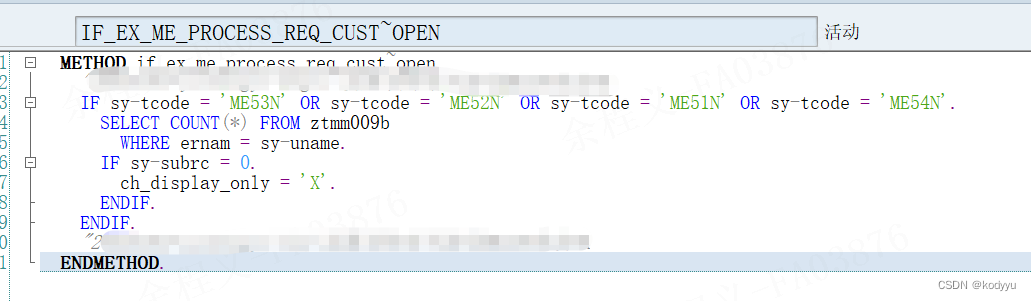

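Kubernetes removed dockershim in v1.24, so v1.27 can no longer use Docker Engine directly as its container runtime (the component that pulls images and runs containers). We use containerd instead.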

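```shell
# Install on every server
curl -L -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install -y containerd.io
# replace the packaged config.toml (which disables the CRI plugin) with the full default config
containerd config default > /etc/containerd/config.toml
```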

Then edit config.toml. The settings to change are shown below in their sections (everything else keeps its default value):

```toml
[plugins."io.containerd.grpc.v1.cri"]
  # pull the pause (sandbox) image from the Aliyun mirror
  sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      # https://97ck5svl.mirror.aliyuncs.com is the pull accelerator address Aliyun assigned to my account; use your own
      endpoint = ["https://97ck5svl.mirror.aliyuncs.com", "https://registry-1.docker.io"]

  [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
    tls_cert_file = ""
    tls_key_file = ""
```
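Some of these edits can be scripted with `sed` before containerd is restarted. The sketch below uses a hypothetical `tune_containerd_config` function that rewrites `sandbox_image` and also sets `SystemdCgroup = true`. That second change is not part of the original setup: it is an assumption based on the fact that kubeadm has defaulted the kubelet's cgroup driver to `systemd` since v1.22, whereas `containerd config default` writes `SystemdCgroup = false`, and the Kubernetes container-runtime documentation recommends that the kubelet and containerd use the same driver. The registry mirror still has to be edited by hand.

```shell
# Sketch: edit a config.toml generated by `containerd config default` (GNU sed -i).
#  - sandbox_image: point it at the Aliyun mirror (same value as in the snippet)
#  - SystemdCgroup: switch runc to the systemd cgroup driver; NOT in the original
#    article, an assumption based on kubeadm's default kubelet cgroup driver
tune_containerd_config() {
    cfg=$1
    sed -i \
        -e 's#^\([[:space:]]*\)sandbox_image = .*#\1sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"#' \
        -e 's#^\([[:space:]]*\)SystemdCgroup = false#\1SystemdCgroup = true#' \
        "$cfg"
}
```

On each server: `tune_containerd_config /etc/containerd/config.toml`, then restart containerd. The captured leading whitespace keeps each key's original indentation.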

Apply the containerd configuration by running the following on each server in turn:

```shell
systemctl daemon-reload && systemctl enable --now containerd && systemctl restart containerd
```

Install the CRI tools (the docker CLI equivalent)

```shell
# Run on every server
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

yum update -y
yum install -y epel-release
yum install -y cri-tools

# Write crictl's client config (">" overwrites, so re-running does not duplicate keys)
cat <<EOF > /etc/crictl.yaml
runtime-endpoint: "unix:///run/containerd/containerd.sock"
image-endpoint: "unix:///run/containerd/containerd.sock"
timeout: 10
debug: false
pull-image-on-create: false
disable-pull-on-run: false
EOF
```

You can now pull images with the `crictl` command; Kubernetes pulls images through the CRI by default. Its help output, for reference:

```
[root@node1 ~]# crictl -h
NAME:
   crictl - client for CRI

USAGE:
   crictl [global options] command [command options] [arguments...]

VERSION:
   v1.26.0

COMMANDS:
   attach              Attach to a running container
   create              Create a new container
   exec                Run a command in a running container
   version             Display runtime version information
   images, image, img  List images
   inspect             Display the status of one or more containers
   inspecti            Return the status of one or more images
   imagefsinfo         Return image filesystem info
   inspectp            Display the status of one or more pods
   logs                Fetch the logs of a container
   port-forward        Forward local port to a pod
   ps                  List containers
   pull                Pull an image from a registry
   run                 Run a new container inside a sandbox
   runp                Run a new pod
   rm                  Remove one or more containers
   rmi                 Remove one or more images
   rmp                 Remove one or more pods
   pods                List pods
   start               Start one or more created containers
   info                Display information of the container runtime
   stop                Stop one or more running containers
   stopp               Stop one or more running pods
   update              Update one or more running containers
   config              Get and set crictl client configuration options
   stats               List container(s) resource usage statistics
   statsp              List pod resource usage statistics
   completion          Output shell completion code
   checkpoint          Checkpoint one or more running containers
   help, h             Shows a list of commands or help for one command

GLOBAL OPTIONS:
   --config value, -c value            Location of the client config file. If not specified and the default does not exist, the program's directory is searched as well (default: "/etc/crictl.yaml") [$CRI_CONFIG_FILE]
   --debug, -D                         Enable debug mode (default: false)
   --image-endpoint value, -i value    Endpoint of CRI image manager service (default: uses 'runtime-endpoint' setting) [$IMAGE_SERVICE_ENDPOINT]
   --runtime-endpoint value, -r value  Endpoint of CRI container runtime service (default: uses in order the first successful one of [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]). Default is now deprecated and the endpoint should be set instead. [$CONTAINER_RUNTIME_ENDPOINT]
   --timeout value, -t value           Timeout of connecting to the server in seconds (e.g. 2s, 20s.). 0 or less is set to default (default: 2s)
   --help, -h                          show help (default: false)
   --version, -v                       print the version (default: false)
```

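PS: The preparation is now complete. If you are working in virtual machines, cloning a prepared VM is faster, and taking a snapshot now saves you from redoing the preparation step by step if something goes wrong later.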

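Install the Kubernetes cluster

```shell
# Install the cluster tools with kubeadm's packages; run the following on all three servers:
swapoff -a
yum install --setopt=obsoletes=0 kubelet-1.27.2-0 kubeadm-1.27.2-0 kubectl-1.27.2-0 -y
# enable kubelet at boot and start it
# (kubelet keeps restarting until kubeadm init/join gives it a configuration; this is expected)
systemctl enable kubelet && systemctl start kubelet
```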

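PS: In my testing, the preparation above works for Kubernetes v1.27. With other versions I ran into problems, either during installation or, if installation succeeded, later during cluster initialization.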

Initialize the cluster:

```shell
# Run on the master node:
kubeadm init \
  --apiserver-advertise-address=10.10.22.91 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.27.2 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --ignore-preflight-errors=all \
  --cri-socket=unix:///var/run/containerd/containerd.sock
```

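What each part of the command does:

`kubeadm init`: initializes a Kubernetes control-plane node.

`--image-repository registry.aliyuncs.com/google_containers`: sets the registry for the Kubernetes component images to Aliyun's mirror of the Google container images, which usually speeds up image pulls from within China.

`--apiserver-advertise-address=10.10.22.91`: the IP address the Kubernetes API server advertises, i.e. the address at which other Kubernetes components and clients such as kubectl reach the API server. Here it is 10.10.22.91.

`--kubernetes-version v1.27.2`: the exact control-plane version to deploy, matching the installed packages.

`--service-cidr=10.96.0.0/12`: the range from which Service cluster IPs are allocated (10.96.0.0/12 is also kubeadm's default).

`--pod-network-cidr=10.244.0.0/16`: the CIDR of the Pod network, which usually depends on the network plugin you choose (10.244.0.0/16 is flannel's default). Adjust it to your needs.

`--ignore-preflight-errors=all`: reports every failed preflight check as a warning instead of an error; convenient, but it can also hide real problems.

`--cri-socket=unix:///var/run/containerd/containerd.sock`: the container runtime socket kubeadm talks to, here containerd's.

CIDR stands for Classless Inter-Domain Routing.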
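The node LAN (10.10.22.0/24), the Service CIDR (10.96.0.0/12), and the Pod CIDR (10.244.0.0/16) must not overlap. Two CIDR blocks overlap exactly when their addresses agree on the shorter of the two prefixes, which is easy to check with shell arithmetic. A sketch, IPv4 only, with hypothetical helper names `ip_to_int` and `cidr_overlap`:

```shell
# Hypothetical helpers (not from the original article), IPv4 only.
# ip_to_int: dotted quad -> 32-bit integer, e.g. 10.10.22.91 -> 168433243
ip_to_int() {
    o1=${1%%.*}; rest=${1#*.}
    o2=${rest%%.*}; rest=${rest#*.}
    o3=${rest%%.*}; o4=${rest#*.}
    echo $(( (o1 << 24) + (o2 << 16) + (o3 << 8) + o4 ))
}

# cidr_overlap A/len B/len: exit status 0 if the two blocks share any address.
cidr_overlap() {
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    # compare only the bits of the shorter prefix
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    # netmask for that prefix; the & keeps it within 32 bits
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    a=$(ip_to_int "$a_ip")
    b=$(ip_to_int "$b_ip")
    [ $(( a & mask )) -eq $(( b & mask )) ]
}
```

With this article's values, `cidr_overlap 10.244.0.0/16 10.96.0.0/12`, `cidr_overlap 10.10.22.0/24 10.96.0.0/12`, and `cidr_overlap 10.10.22.0/24 10.244.0.0/16` all return a non-zero status, i.e. no overlap.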

```shell
# Run on the master node.
# After a successful init, kubeadm prints follow-up instructions; run these commands as prompted:
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Copy `/etc/kubernetes/admin.conf` from the master node to the worker nodes. On a LAN, `scp` transfers files between nodes quickly and efficiently.

```shell
# Format: scp ${current_server_path}/file_name ${target_server_ip}:${target_server_path}
sudo scp /etc/kubernetes/admin.conf 10.10.22.82:/etc/kubernetes/
sudo scp /etc/kubernetes/admin.conf 10.10.22.199:/etc/kubernetes/
```
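With more workers, the per-node `scp` lines become a loop. A sketch with a hypothetical `copy_admin_conf` function that takes the worker IPs as arguments; the `SCP` variable is an assumption added only so the loop can be dry-run with `SCP=echo`, and on the real master it would be run as root (the commands above use `sudo scp`).

```shell
# Hypothetical helper: copy admin.conf from the master to each worker IP given.
# SCP defaults to the real scp; overriding it (e.g. SCP=echo) gives a dry run.
SCP=${SCP:-scp}

copy_admin_conf() {
    for node_ip in "$@"; do
        # stop at the first failed copy instead of silently continuing
        $SCP /etc/kubernetes/admin.conf "$node_ip:/etc/kubernetes/" || return 1
    done
}
```

For this cluster: `copy_admin_conf 10.10.22.82 10.10.22.199`.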

```shell
# Run on the worker nodes (node1, node2).
# After a successful init, the output also prints the join command for the other nodes.
# First check on each worker that admin.conf has arrived:
cd /etc/kubernetes
ls
# admin.conf  manifests

# Don't forget to point kubectl at admin.conf; copying it to ~/.kube/config makes this permanent.
# Alternative (not used here):
#   echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
#   source ~/.bash_profile
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

```shell
# Join node1 and node2 to the master (use the token and hash printed by your own kubeadm init)
kubeadm join 10.10.22.91:6443 --token lfd5qo.1icuhx1c2x3r5ik0 \
  --discovery-token-ca-cert-hash sha256:f785887fe5df52096e360db38bcd0f66cfab3550bf00a77eafa946122f424609 \
  --cri-socket unix:///var/run/containerd/containerd.sock
```
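If the `kubeadm init` output was saved (for example with `kubeadm init ... | tee kubeadm-init.log`, an assumption, not a step of the original setup), the join command can be pulled out of the log instead of copied by hand. A sketch with a hypothetical `extract_join_command` function that prints the first `kubeadm join` line plus its backslash-continued lines; the `--cri-socket` option still has to be appended. Join tokens expire after 24 hours by default; `kubeadm token create --print-join-command` on the master prints a fresh command.

```shell
# Hypothetical helper: print the worker join command from saved `kubeadm init`
# output, including its backslash-continued lines.
extract_join_command() {
    awk '
        /^[ \t]*kubeadm join/ { grab = 1 }
        grab {
            print
            # stop at the first line that does not end in a continuation backslash
            if ($0 !~ /\\[ \t]*$/) exit
        }
    ' "$1"
}
```

On the master: `extract_join_command kubeadm-init.log`.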

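If joining a node fails, clear the old cluster state before joining again:

1. Run `kubeadm reset`.
2. Delete `$HOME/.kube` (`rm -rf $HOME/.kube`).

Then rebuild the cluster. `$HOME` is the user's home directory; print it with `echo $HOME`.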

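Once the cluster is initialized, `kubectl get nodes` shows the cluster's nodes. At this point their status should be NotReady, because no network plugin is installed yet, so the nodes cannot communicate properly.

PS: From here on, all Kubernetes cluster operations are run on the master node.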

```
[root@master ~]# kubectl get nodes
NAME     STATUS     ROLES           AGE     VERSION
master   NotReady   control-plane   8m44s   v1.27.2
node1    NotReady   <none>          69s     v1.27.2
node2    NotReady   <none>          73s     v1.27.2
```

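Install the flannel network plugin

```shell
vi kube-flannel.yml
```

Use the content below. Note that the `Network` value in `net-conf.json` must be the same as the `--pod-network-cidr` set when the cluster was initialized.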

```yaml
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
```
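A mismatch between flannel's `Network` and the `--pod-network-cidr` is easy to miss. A sketch that reads `Network` from the manifest and compares it with the CIDR given to `kubeadm init`; `flannel_network` and `check_flannel_cidr` are hypothetical names, and the `sed` pattern assumes the `"Network": "<cidr>"` pair sits on one line, as it does in this manifest.

```shell
# Hypothetical helpers: extract net-conf.json's "Network" value from a
# flannel manifest and compare it with the expected pod CIDR.
flannel_network() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

check_flannel_cidr() {
    manifest=$1
    expected=$2
    actual=$(flannel_network "$manifest")
    if [ "$actual" = "$expected" ]; then
        echo "ok: flannel Network $actual matches --pod-network-cidr"
    else
        echo "mismatch: flannel Network is '${actual:-none}', expected '$expected'" >&2
        return 1
    fi
}
```

Before applying: `check_flannel_cidr kube-flannel.yml 10.244.0.0/16`. After the cluster is up, `kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}'` shows the per-node subnets carved from the pod CIDR.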

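Install the network plugin:

```shell
kubectl apply -f kube-flannel.yml
```

This step usually has to wait for the images to download, which takes a few minutes (about one cigarette break). Then verify it with: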

```
[root@master k8s]# kubectl get all -n kube-flannel
NAME                        READY   STATUS    RESTARTS   AGE
pod/kube-flannel-ds-c4mss   1/1     Running   0          5m13s
pod/kube-flannel-ds-m9zbp   1/1     Running   0          5m13s
pod/kube-flannel-ds-mh5cw   1/1     Running   0          5m13s

NAME                             DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/kube-flannel-ds   3         3         3       3            3           <none>          5m13s
```

```
# Get the cluster nodes
[root@master k8s]# kubectl get nodes
NAME     STATUS   ROLES           AGE   VERSION
master   Ready    control-plane   24m   v1.27.2
node1    Ready    <none>          16m   v1.27.2
node2    Ready    <none>          17m   v1.27.2

# If the master role is missing (kubeadm v1.24 and later only applies the
# control-plane role label), add it by hand:
[root@master k8s]# kubectl label nodes master node-role.kubernetes.io/master=10.10.22.91
[root@master k8s]# kubectl get nodes
NAME     STATUS   ROLES                  AGE   VERSION
master   Ready    control-plane,master   78m   v1.27.2
node1    Ready    <none>                 70m   v1.27.2
node2    Ready    <none>                 70m   v1.27.2
```

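At this point every node in the Kubernetes cluster should be Ready. Congratulations, your Kubernetes cluster is installed! This is a good moment to take another snapshot of the servers.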
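The Ready check can also be scripted rather than read by eye. A sketch with a hypothetical `all_nodes_ready` filter that reads `kubectl get nodes --no-headers` output on stdin and succeeds only if every node's STATUS column is exactly `Ready` (an empty list counts as failure). Kubernetes' own `kubectl wait --for=condition=Ready nodes --all --timeout=300s` does the same job without parsing.

```shell
# Hypothetical filter: exit 0 only if every line of `kubectl get nodes
# --no-headers` output has STATUS (column 2) equal to "Ready".
all_nodes_ready() {
    awk '{ n++; if ($2 != "Ready") bad = 1 } END { exit (n == 0 || bad) }'
}
```

For example, to block until the cluster is up: `until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done`.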

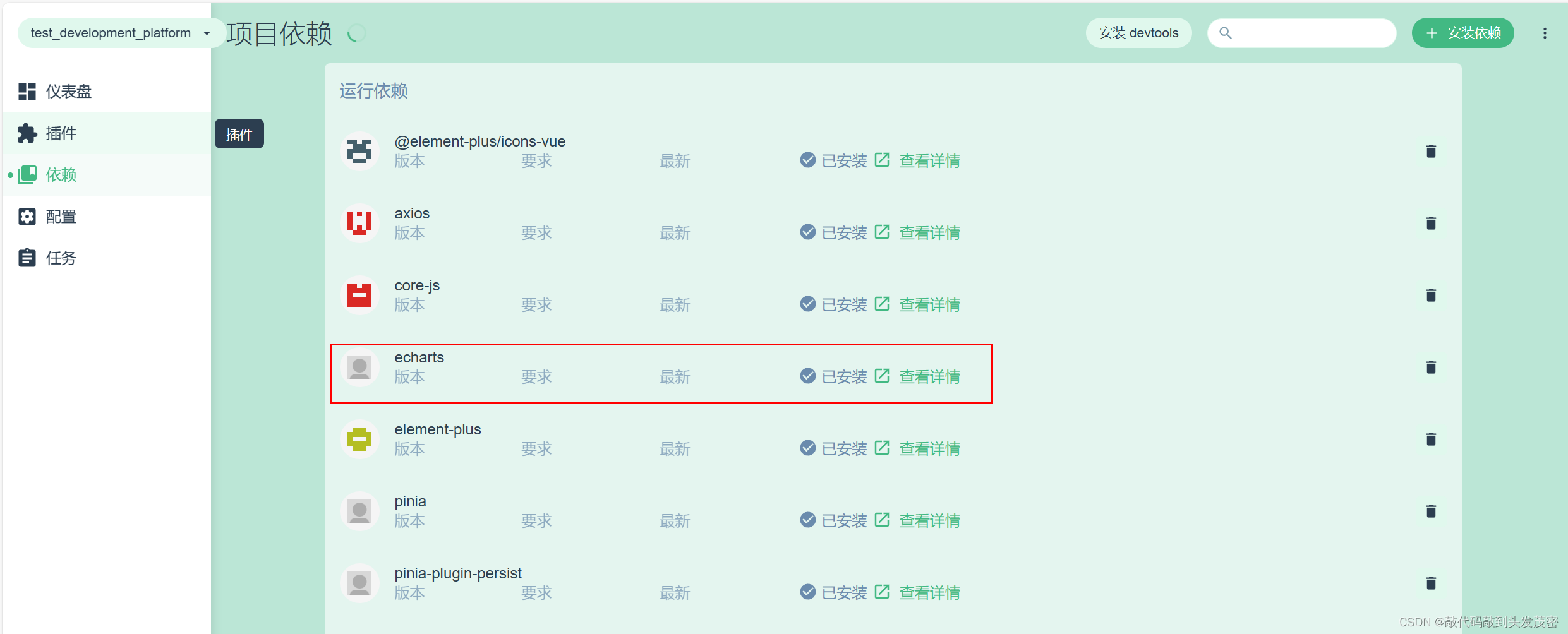

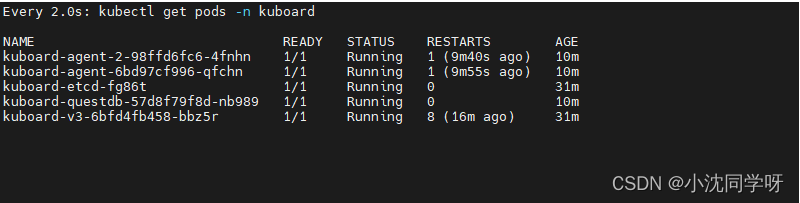

Install the Kuboard dashboard (optional)

```shell
# Install it directly into the cluster
kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml
watch kubectl get pods -n kuboard
```

Wait until all pods in the kuboard namespace are ready.
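`watch` needs someone looking at the screen. Kubernetes' `kubectl wait --for=condition=Ready pods --all -n kuboard --timeout=300s` blocks until the pods are ready instead. If you prefer parsing the table, here is a sketch with a hypothetical `pods_all_ready` filter that checks the READY column (`n/n`) of `kubectl get pods --no-headers` output; it assumes the namespace contains no completed Job pods, whose READY column reads 0/1.

```shell
# Hypothetical filter: exit 0 only if every pod line shows all containers
# ready (READY column "n/n", e.g. 1/1). Empty input counts as failure.
pods_all_ready() {
    awk '{ n++; split($2, r, "/"); if (r[1] != r[2]) bad = 1 } END { exit (n == 0 || bad) }'
}
```

For example: `until kubectl get pods -n kuboard --no-headers | pods_all_ready; do sleep 5; done`.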

Note: make sure the master role label exists on the master node.

Open http://10.10.22.91:30080 in a browser to reach the Kuboard v3.x interface. Log in with:

- Username: admin
- Password: Kuboard123

That completes installing a Kubernetes cluster with kubeadm. Have fun with it!

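Coming Next

[Hands-on] Kubernetes Persistence with NFS, and Installing the Helm Package Manager

⭐️ The road ahead is long and far; I will search high and low. 🔍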