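Linear regression is a linear model, that is, a model that assumes a linear relationship between the input variables (x) and a single output variable (y). More specifically, the output (y) can be computed as a linear combination of the inputs (x). Univariate linear regression is the special case with exactly one input parameter and one output label. Here we build a model that predicts each country's "happiness score" from its "GDP per capita".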
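For the univariate case the linear combination reduces to a straight line. Writing $\theta_0$ for the intercept and $\theta_1$ for the slope (the symbols are chosen here to match the `theta` vector used in the code of this article), the model can be sketched as:

$$h_\theta(x) = \theta_0 + \theta_1 x$$

For example, with the illustrative values $\theta_0 = 3$ and $\theta_1 = 2$, the input $x = 1.5$ gives the prediction $3 + 2 \cdot 1.5 = 6$.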

Import the dependencies:

```python
import sys

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Make the repository root importable so that the `homemade` package resolves.
sys.path.append('../..')

# Import custom linear regression implementation.
from homemade.linear_regression import LinearRegression
```

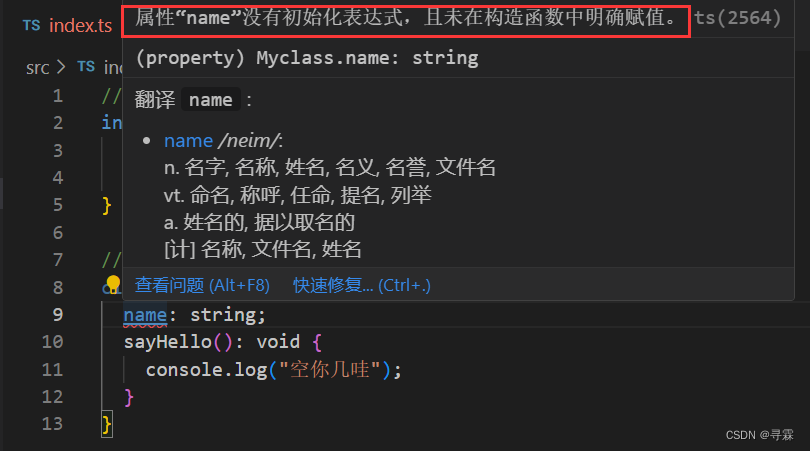

The custom linear regression module is as follows:

```python
# Import dependencies.
import numpy as np
from ..utils.features import prepare_for_training


class LinearRegression:
    # pylint: disable=too-many-instance-attributes
    """Linear Regression Class"""

    def __init__(self, data, labels, polynomial_degree=0, sinusoid_degree=0, normalize_data=True):
        # pylint: disable=too-many-arguments
        """Linear regression constructor.

        :param data: training set.
        :param labels: training set outputs (correct values).
        :param polynomial_degree: degree of additional polynomial features.
        :param sinusoid_degree: multipliers for sinusoidal features.
        :param normalize_data: flag that indicates that features should be normalized.
        """

        # Normalization rescales the data so that it falls into a small, fixed range.
        # It removes the units and turns the values into dimensionless numbers, which is
        # useful when features are compared or combined. Common choices are min-max
        # scaling and the z-score.
        # Centering subtracts the mean from a variable, i.e. it shifts the data.

        # Normalize features and add ones column.
        (
            data_processed,
            features_mean,
            features_deviation
        ) = prepare_for_training(data, polynomial_degree, sinusoid_degree, normalize_data)

        self.data = data_processed
        self.labels = labels
        self.features_mean = features_mean
        self.features_deviation = features_deviation
        self.polynomial_degree = polynomial_degree
        self.sinusoid_degree = sinusoid_degree
        self.normalize_data = normalize_data

        # Initialize model parameters.
        num_features = self.data.shape[1]
        self.theta = np.zeros((num_features, 1))

    def train(self, alpha, lambda_param=0, num_iterations=500):
        """Trains linear regression.

        :param alpha: learning rate (the size of the step for gradient descent)
        :param lambda_param: regularization parameter
        :param num_iterations: number of gradient descent iterations.
        """

        # Run gradient descent.
        cost_history = self.gradient_descent(alpha, lambda_param, num_iterations)

        return self.theta, cost_history

    def gradient_descent(self, alpha, lambda_param, num_iterations):
        """Gradient descent.

        It calculates what steps (deltas) should be taken for each theta parameter in
        order to minimize the cost function.

        :param alpha: learning rate (the size of the step for gradient descent)
        :param lambda_param: regularization parameter
        :param num_iterations: number of gradient descent iterations.
        """

        # Initialize the cost history (J_history) as an empty list.
        cost_history = []

        for _ in range(num_iterations):
            # Perform a single gradient step on the parameter vector theta.
            self.gradient_step(alpha, lambda_param)

            # Save the cost J in every iteration.
            cost_history.append(self.cost_function(self.data, self.labels, lambda_param))

        return cost_history

    def gradient_step(self, alpha, lambda_param):
        """Gradient step.

        Function performs one step of gradient descent for theta parameters.

        :param alpha: learning rate (the size of the step for gradient descent)
        :param lambda_param: regularization parameter
        """

        # Calculate the number of training examples.
        num_examples = self.data.shape[0]

        # Predictions of hypothesis on all m examples.
        predictions = LinearRegression.hypothesis(self.data, self.theta)

        # The difference between predictions and actual values for all m examples.
        delta = predictions - self.labels

        # Calculate regularization parameter.
        reg_param = 1 - alpha * lambda_param / num_examples

        # Vectorized version of gradient descent.
        # The right-hand side builds a new array, so self.theta still holds the old values.
        theta = self.theta * reg_param - alpha * (1 / num_examples) * (delta.T @ self.data).T

        # We should NOT regularize the parameter theta_zero, so recompute it from the OLD
        # value. (Starting from the already updated theta[0] would apply the step twice.)
        theta[0] = self.theta[0] - alpha * (1 / num_examples) * (self.data[:, 0].T @ delta).T

        self.theta = theta

    def get_cost(self, data, labels, lambda_param):
        """Get the cost value for specific data set.

        :param data: the set of training or test data.
        :param labels: training set outputs (correct values).
        :param lambda_param: regularization parameter
        """

        data_processed = prepare_for_training(
            data,
            self.polynomial_degree,
            self.sinusoid_degree,
            self.normalize_data,
        )[0]

        return self.cost_function(data_processed, labels, lambda_param)

    def cost_function(self, data, labels, lambda_param):
        """Cost function.

        It shows how accurate our model is based on current model params.

        :param data: the set of training or test data.
        :param labels: training set outputs (correct values).
        :param lambda_param: regularization parameter
        """

        # Calculate the number of training examples.
        num_examples = data.shape[0]

        # Get the difference between predictions and correct output values.
        delta = LinearRegression.hypothesis(data, self.theta) - labels

        # Calculate regularization parameter.
        # Remember that we should not regularize the parameter theta_zero.
        theta_cut = self.theta[1:, 0]
        reg_param = lambda_param * (theta_cut.T @ theta_cut)

        # Calculate current predictions cost.
        # Note the parentheses: the factor is 1 / (2m), not (1 / 2) * m.
        cost = (1 / (2 * num_examples)) * (delta.T @ delta + reg_param)

        # Let's extract cost value from the one and only cost numpy matrix cell.
        return cost[0][0]

    def predict(self, data):
        """Predict the output for data_set input based on trained theta values

        :param data: training set of features.
        """

        # Normalize features and add ones column.
        data_processed = prepare_for_training(
            data,
            self.polynomial_degree,
            self.sinusoid_degree,
            self.normalize_data,
        )[0]

        # Do predictions using model hypothesis.
        predictions = LinearRegression.hypothesis(data_processed, self.theta)

        return predictions

    @staticmethod
    def hypothesis(data, theta):
        """Hypothesis function.

        It predicts the output values y based on the input values X and model parameters.

        :param data: data set for what the predictions will be calculated.
        :param theta: model params.
        :return: predictions made by model based on provided theta.
        """

        # Matrix product: every row of `data` (one example, starting with the constant 1)
        # is multiplied by the column vector theta, giving one prediction per row.
        predictions = data @ theta

        return predictions
```
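The `hypothesis` method is the part of the class that is easiest to misread, so here is a minimal sketch of what `data @ theta` computes. The values of `x` and `theta` are illustrative assumptions, not taken from the dataset. Because `prepare_for_training` prepends a column of ones, the matrix product evaluates `theta_0 + theta_1 * x` for every row at once:

```python
import numpy as np

# Three examples with one feature each (illustrative values).
x = np.array([[0.5], [1.0], [1.5]])

# Prepend the column of ones, as prepare_for_training does.
X = np.hstack((np.ones((x.shape[0], 1)), x))

# Illustrative parameters: intercept theta_0 = 3, slope theta_1 = 2.
theta = np.array([[3.0], [2.0]])

# Vectorized hypothesis: one prediction per row.
predictions = X @ theta

# The same computation written out row by row.
manual = np.array([[theta[0, 0] + theta[1, 0] * value] for value in x[:, 0]])

print(predictions.ravel())  # [4. 5. 6.]
```

The vectorized form and the row-by-row form give the same numbers. The matrix product simply avoids the Python loop.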

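Standardization matters most in the following situations:

- Clustering. Clustering relies on measuring distances between clusters and within clusters. If one variable is measured on a much larger scale than the others, any distance measure we use will be dominated by that variable.
- Before PCA dimensionality reduction. Standardizing the variables before principal component analysis is essential, because PCA gives more weight to variables with high variance than to variables with very low variance. Standardizing the raw data gives every variable the same variance, so no variable receives a high weight merely because of its scale.
- KNN, for reasons similar to k-means clustering. KNN measures similarity with the Euclidean distance, and standardization lets every variable contribute equally.
- SVM. Any kernel that is based on a distance computation requires standardized data.
- Ridge regression and lasso. Regularization is a biased estimator that penalizes the size of the weights. The size of a weight depends on the unit of its feature, so when the features are on different scales the penalty falls unevenly and introduces additional bias.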
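The distance argument behind the clustering and KNN items can be checked on a tiny example. The sketch below uses three made-up points with an income column (in dollars) and an age column (in years). Both the data and the helper `distances_from_first` are assumptions for illustration only:

```python
import numpy as np

# Two hypothetical features on very different scales:
# column 0 is income in dollars, column 1 is age in years.
points = np.array([
    [50_000.0, 25.0],
    [50_500.0, 60.0],
    [58_000.0, 26.0],
])


def distances_from_first(data):
    """Euclidean distance from the first row to every other row."""
    return np.linalg.norm(data[1:] - data[0], axis=1)


raw = distances_from_first(points)

# Z-score standardization: zero mean and unit variance per column.
standardized = (points - points.mean(axis=0)) / points.std(axis=0)
scaled = distances_from_first(standardized)

print(raw)     # the income difference dominates the raw distances
print(scaled)  # after scaling, the 35-year age gap counts as well
```

On the raw data the second point looks far closer to the first than the third does, purely because income is measured in larger numbers. After standardization the two distances are of similar size.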

The `prepare_for_training` function:

```python
import numpy as np
from .normalize import normalize
from .generate_sinusoids import generate_sinusoids
from .generate_polynomials import generate_polynomials


def prepare_for_training(data, polynomial_degree=0, sinusoid_degree=0, normalize_data=True):
    """Prepares data set for training or prediction"""

    # Calculate the number of examples.
    num_examples = data.shape[0]

    # Prevent original data from being modified.
    # Plain assignment only binds another name to the same array, and a view (such as a
    # NumPy slice) creates a new array object that still shares the underlying data
    # buffer, so a write through either one shows up in the original.
    # np.copy allocates a new buffer, so the copy is independent of the original
    # (for arrays of dtype=object only the references are copied).
    data_processed = np.copy(data)

    # Normalize data set.
    features_mean = 0
    features_deviation = 0
    data_normalized = data_processed
    if normalize_data:
        (
            data_normalized,
            features_mean,
            features_deviation
        ) = normalize(data_processed)

        # Replace processed data with normalized processed data.
        # The normalized data is needed below when polynomials and sinusoids are added.
        data_processed = data_normalized

    # Add sinusoidal features to the dataset.
    if sinusoid_degree > 0:
        sinusoids = generate_sinusoids(data_normalized, sinusoid_degree)
        data_processed = np.concatenate((data_processed, sinusoids), axis=1)

    # Add polynomial features to the dataset.
    if polynomial_degree > 0:
        polynomials = generate_polynomials(data_normalized, polynomial_degree, normalize_data)
        data_processed = np.concatenate((data_processed, polynomials), axis=1)

    # Add a column of ones to X.
    # np.hstack stacks arrays horizontally (column-wise) into a new array;
    # np.vstack stacks them vertically (row-wise).
    data_processed = np.hstack((np.ones((num_examples, 1)), data_processed))

    return data_processed, features_mean, features_deviation
```

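A reference copy assigns an object's reference directly to another variable, so that both variables point to the same object. Modifying the object through one variable is therefore visible through the other. Reference copies typically arise when passing arguments and returning values: when an object is passed to a function, the function receives a reference to the object, not a copy of it. A reference copy is not a copy in the real sense, because both names share the same data. Before modifying such an object in place, check whether other code that uses the same object will be affected. (Source: the CSDN blog post "浅拷贝与深拷贝的区别(详解)".)

In languages such as Java and JavaScript, values of primitive types are stored directly on the stack, whereas for a reference type the stack holds only a pointer to the start address of the object on the heap, where the actual data lives. To read a reference value, the runtime first looks up the address on the stack and then fetches the object from the heap. Python does not make this distinction: every name is a reference to an object, which is why the choice between sharing and copying matters for NumPy arrays.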
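The three cases discussed above (reference copy, view, and real copy) can be told apart in NumPy with a few lines. This is a minimal sketch and all variable names are illustrative:

```python
import numpy as np

original = np.array([[1.0, 2.0], [3.0, 4.0]])

alias = original                 # reference copy: same object, no new array
view = original[:, 0]            # view: new array object, shared data buffer
independent = np.copy(original)  # copy: new array object, new data buffer

# Writing through the view changes the original (and therefore the alias).
view[0] = 100.0

print(alias is original)                        # True
print(np.shares_memory(view, original))         # True
print(np.shares_memory(independent, original))  # False
print(original[0, 0], independent[0, 0])        # 100.0 1.0
```

This is why `prepare_for_training` starts with `np.copy(data)`: the later in-place operations cannot leak back into the caller's array.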

normalize.py

```python
import numpy as np


def normalize(features):
    """Normalize features.

    Normalizes input features X. Returns a normalized version of X where the mean value of
    each feature is 0 and deviation is close to 1.

    :param features: set of features.
    :return: normalized set of features.
    """

    # Copy original array to prevent it from changes.
    features_normalized = np.copy(features).astype(float)

    # Get average values for each feature (column) in X.
    # Averaging along axis 0 gives one value per column, i.e. an array of length n.
    features_mean = np.mean(features, 0)

    # Calculate the standard deviation for each feature.
    features_deviation = np.std(features, 0)

    # Subtract the mean from each feature (column) of every example (row)
    # so that all features are spread around zero.
    if features.shape[0] > 1:
        # Broadcasting: (m, n) - (n,).
        features_normalized -= features_mean

    # Scale each feature so that its values end up close to the [-1, 1] range.
    # Clamp the deviation to machine epsilon to prevent division by zero.
    # An alternative is: features_deviation[features_deviation == 0] = 1
    min_eps = np.finfo(features_deviation.dtype).eps
    features_deviation = np.maximum(features_deviation, min_eps)
    features_normalized /= features_deviation

    return features_normalized, features_mean, features_deviation
```
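To see what this z-score normalization does to concrete numbers, here is a small sketch that repeats the same arithmetic outside the function. The feature matrix and `new_example` are made-up values:

```python
import numpy as np

# Illustrative feature matrix: 4 examples (rows), 2 features (columns).
features = np.array([
    [1.0, 100.0],
    [2.0, 200.0],
    [3.0, 300.0],
    [4.0, 400.0],
])

# Per-column statistics (axis 0 runs down the rows).
mean = features.mean(axis=0)
deviation = features.std(axis=0)

# Broadcasting applies the (2,) statistics to every row of the (4, 2) matrix.
normalized = (features - mean) / deviation

# A new example scaled with the statistics of the data above.
new_example = np.array([[2.5, 250.0]])
new_normalized = (new_example - mean) / deviation

print(normalized.mean(axis=0))  # approximately [0. 0.]
print(normalized.std(axis=0))   # approximately [1. 1.]
print(new_normalized)           # [[0. 0.]]
```

One design point is worth noting. `normalize` returns the mean and deviation so that they can be reused, but `predict` and `get_cost` in the class above call `prepare_for_training` on the new data, which recomputes both statistics from that data. Reusing the training statistics, as in the last lines of this sketch, is the more common convention.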

generate_sinusoids.py

```python
import numpy as np


def generate_sinusoids(dataset, sinusoid_degree):
    """Extends data set with sinusoid features.

    Returns a new feature array with more features, comprising of sin(x).

    :param dataset: data set.
    :param sinusoid_degree: multiplier for sinusoid parameter multiplications
    """

    # Create sinusoids matrix.
    num_examples = dataset.shape[0]
    # An empty (num_examples, 0) float64 array to which columns are appended.
    sinusoids = np.empty((num_examples, 0))

    # Generate sinusoid features of specified degree.
    for degree in range(1, sinusoid_degree + 1):
        sinusoid_features = np.sin(degree * dataset)
        # np.concatenate joins arrays along an existing axis (here: the columns).
        sinusoids = np.concatenate((sinusoids, sinusoid_features), axis=1)

    # Return generated sinusoidal features.
    return sinusoids
```
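As a quick illustration of the shape of the result, the sketch below repeats the same construction inline for one feature and `sinusoid_degree = 2`. The input values are chosen only because their sines are easy to check:

```python
import numpy as np

# One feature, three examples (illustrative values).
dataset = np.array([[0.0], [np.pi / 2], [np.pi]])
sinusoid_degree = 2

# Same construction as generate_sinusoids: sin(1 * x), sin(2 * x), ...
columns = [np.sin(degree * dataset) for degree in range(1, sinusoid_degree + 1)]
sinusoids = np.concatenate(columns, axis=1)

print(sinusoids.shape)  # (3, 2)
```

Every input column therefore contributes `sinusoid_degree` new columns.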

generate_polynomials.py

```python
import numpy as np
from .normalize import normalize


def generate_polynomials(dataset, polynomial_degree, normalize_data=False):
    """Extends data set with polynomial features of certain degree.

    Returns a new feature array with more features, comprising of
    x1, x2, x1^2, x2^2, x1*x2, x1*x2^2, etc.

    :param dataset: dataset that we want to generate polynomials for.
    :param polynomial_degree: the max power of new features.
    :param normalize_data: flag that indicates whether polynomials need to be normalized or not.
    """

    # Split features on two halves.
    # np.array_split(ary, indices_or_sections, axis=0) accepts a section count that does
    # not divide the axis evenly: an axis of length l split into n sections yields
    # l % n sub-arrays of size l // n + 1 and the rest of size l // n.
    features_split = np.array_split(dataset, 2, axis=1)
    dataset_1 = features_split[0]
    dataset_2 = features_split[1]

    # Extract sets parameters.
    (num_examples_1, num_features_1) = dataset_1.shape
    (num_examples_2, num_features_2) = dataset_2.shape

    # Check if two sets have equal amount of rows.
    if num_examples_1 != num_examples_2:
        raise ValueError('Can not generate polynomials for two sets with different number of rows')

    # Check if at least one set has features.
    if num_features_1 == 0 and num_features_2 == 0:
        raise ValueError('Can not generate polynomials for two sets with no columns')

    # Replace empty set with non-empty one.
    if num_features_1 == 0:
        dataset_1 = dataset_2
    elif num_features_2 == 0:
        dataset_2 = dataset_1

    # Make sure that both sets have the same number of features so that they can be
    # multiplied element-wise. The widths are read after the replacement above.
    # (The original compared num_features_1 with num_examples_2, mixing columns and rows.)
    num_features = min(dataset_1.shape[1], dataset_2.shape[1])
    dataset_1 = dataset_1[:, :num_features]
    dataset_2 = dataset_2[:, :num_features]

    # Create polynomials matrix.
    polynomials = np.empty((num_examples_1, 0))

    # Generate polynomial features of specified degree.
    for i in range(1, polynomial_degree + 1):
        for j in range(i + 1):
            polynomial_feature = (dataset_1 ** (i - j)) * (dataset_2 ** j)
            polynomials = np.concatenate((polynomials, polynomial_feature), axis=1)

    # Normalize polynomials if needed.
    if normalize_data:
        polynomials = normalize(polynomials)[0]

    # Return generated polynomial features.
    return polynomials
```
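The double loop is easier to follow with concrete numbers. The sketch below runs the same loop inline on a made-up two-column dataset and records a label for each generated column. The `names` list is an addition for illustration and is not part of the module:

```python
import numpy as np

# Two features, two examples (illustrative values).
dataset = np.array([
    [2.0, 3.0],
    [1.0, 5.0],
])
polynomial_degree = 2

# Split the columns into two halves, as generate_polynomials does.
dataset_1, dataset_2 = np.array_split(dataset, 2, axis=1)

features = []
names = []
for i in range(1, polynomial_degree + 1):
    for j in range(i + 1):
        features.append((dataset_1 ** (i - j)) * (dataset_2 ** j))
        names.append(f"x1^{i - j} * x2^{j}")

polynomials = np.concatenate(features, axis=1)

print(names)
print(polynomials)
```

For degree 2 this yields five columns: `x1`, `x2`, `x1^2`, `x1 * x2` and `x2^2`. When the dataset has a single column, the empty half is replaced by the non-empty one, so both halves are the same column and several of the generated columns are duplicates of each other.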

This demo (https://github.com/trekhleb/homemade-machine-learning) uses the 2017 [World Happiness Dataset](https://www.kaggle.com/unsdsn/world-happiness#2017.csv).

```python
data = pd.read_csv('../../data/world-happiness-report-2017.csv')

data.shape  # (155, 12)
```

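```python
import seaborn as sns

# Pairwise correlations between the numeric columns.
# numeric_only requires pandas >= 1.5; it skips non-numeric columns.
GDP_Happy_Corr = data.corr(numeric_only=True)
GDP_Happy_Corr

# choose_diverging_palette() opens an interactive widget for picking a palette and can
# be used in place of diverging_palette(). Note: it only works inside Jupyter.
cmap = sns.choose_diverging_palette()
```

```python
# Draw the heatmap and tune its parameters.
sns.heatmap(
    GDP_Happy_Corr,
    # mask=mask,  # cells where the mask is True are hidden
    cmap=cmap,
    vmax=.3,
    center=0,
    # square=True,
    linewidths=.5,
    cbar_kws={"shrink": .5},
    annot=True,  # write the numeric value into each cell
)
```

```python
# Plot a histogram of every feature to see how its values are distributed.
histograms = data.hist(grid=False, figsize=(10, 10))
```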

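Split the data into training and test subsets. In this step we split the dataset into _training_ and _test_ subsets in an 80/20 ratio. The training set is used to fit the linear model, and the test set is used to validate it. Every example in the test set is new to the model, so it lets us check how accurately the model predicts.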
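`DataFrame.sample` draws a different random subset on every run, so the numbers reported later will vary between runs. If a reproducible split is wanted, one option is to pass `random_state`. The sketch below shows this on a small stand-in table. The column names and the seed value 42 are arbitrary assumptions:

```python
import numpy as np
import pandas as pd

# Small stand-in for the real table (hypothetical values).
data = pd.DataFrame({
    'x': np.arange(10, dtype=float),
    'y': np.arange(10, dtype=float) * 2.0,
})

# random_state fixes the shuffle so that the split is the same on every run.
train_data = data.sample(frac=0.8, random_state=42)

# Everything that was not sampled becomes the test set.
test_data = data.drop(train_data.index)

print(len(train_data), len(test_data))  # 8 2
```

Dropping by index guarantees that the two subsets are disjoint and together cover the whole table.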

```python
train_data = data.sample(frac=0.8)
test_data = data.drop(train_data.index)

# Decide what fields we want to process.
input_param_name = 'Economy..GDP.per.Capita.'
output_param_name = 'Happiness.Score'

# Split training set input and output.
x_train = train_data[[input_param_name]].values
y_train = train_data[[output_param_name]].values

# Split test set input and output.
x_test = test_data[[input_param_name]].values
y_test = test_data[[output_param_name]].values

# Plot training data.
plt.scatter(x_train, y_train, label='Training Dataset')
plt.scatter(x_test, y_test, label='Test Dataset')
plt.xlabel(input_param_name)
plt.ylabel(output_param_name)
plt.title('Countries Happiness')
plt.legend()
plt.show()
```

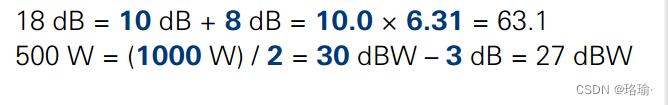

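Training is controlled by the following parameters:

- `polynomial_degree`: the degree of the additional polynomial features (`x1^2 * x2, x1^2 * x2^2, ...`). Adding them lets the prediction line curve, and the more features there are, the more it can curve.
- `num_iterations`: the number of iterations that gradient descent uses to search for the minimum of the cost function. Too low a number may stop gradient descent before it reaches the minimum. Too high a number prolongs the run without improving accuracy.
- `learning_rate`: the size of the gradient descent step. A small step slows the algorithm down, and more iterations may be needed to reach the minimum of the cost function. A large step may cause the algorithm to miss the minimum, so that the cost grows with every new iteration.
- `regularization_param`: the parameter that guards against overfitting. The higher the value, the simpler the model.
- `sinusoid_degree`: the degree of the sinusoid multipliers of the additional features (`sin(x), sin(2*x), ...`). Adding them puts sinusoidal components into the prediction curve.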
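The effect of `learning_rate` can be reproduced on a toy problem. The sketch below is a simplified, unregularized gradient descent written for this illustration. The function `run_gradient_descent`, the synthetic data and the three step sizes are assumptions and are not the article's `LinearRegression` class:

```python
import numpy as np


def run_gradient_descent(X, y, alpha, num_iterations):
    """Unregularized batch gradient descent; returns theta and the cost after each step."""
    num_examples = X.shape[0]
    theta = np.zeros((X.shape[1], 1))
    cost_history = []
    for _ in range(num_iterations):
        # Gradient step on all parameters at once.
        delta = X @ theta - y
        theta = theta - alpha * (1 / num_examples) * (X.T @ delta)
        # Cost J = 1 / (2m) * sum of squared errors, measured after the step.
        delta = X @ theta - y
        cost_history.append(float((delta.T @ delta)[0, 0]) / (2 * num_examples))
    return theta, cost_history


# Synthetic data (an assumption for the demo): y = 1 + 2 * x, no noise.
x = np.linspace(-1.0, 1.0, 20).reshape(-1, 1)
X = np.hstack((np.ones_like(x), x))
y = 1.0 + 2.0 * x

_, small_step = run_gradient_descent(X, y, alpha=0.01, num_iterations=50)
_, good_step = run_gradient_descent(X, y, alpha=0.5, num_iterations=50)
_, large_step = run_gradient_descent(X, y, alpha=2.5, num_iterations=50)

print(small_step[-1])  # still far from zero: the steps are too small
print(good_step[-1])   # close to zero
print(large_step[-1])  # larger than after the first step: the descent diverges
```

With the same 50 iterations, the smallest step has not yet converged, the middle one has, and the largest one overshoots the minimum more on every iteration.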

```python
num_iterations = 500  # Number of gradient descent iterations.
regularization_param = 0  # Helps to fight model overfitting.
learning_rate = 0.01  # The size of the gradient descent step.
polynomial_degree = 0  # The degree of additional polynomial features.
sinusoid_degree = 0  # The degree of sinusoid parameter multipliers of additional features.

# Init linear regression instance.
linear_regression = LinearRegression(x_train, y_train, polynomial_degree, sinusoid_degree)

# Train linear regression.
(theta, cost_history) = linear_regression.train(
    learning_rate,
    regularization_param,
    num_iterations
)

# Print training results.
print('Initial cost: {:.2f}'.format(cost_history[0]))
print('Optimized cost: {:.2f}'.format(cost_history[-1]))

# Print model parameters
theta_table = pd.DataFrame({'Model Parameters': theta.flatten()})
theta_table.head()
```

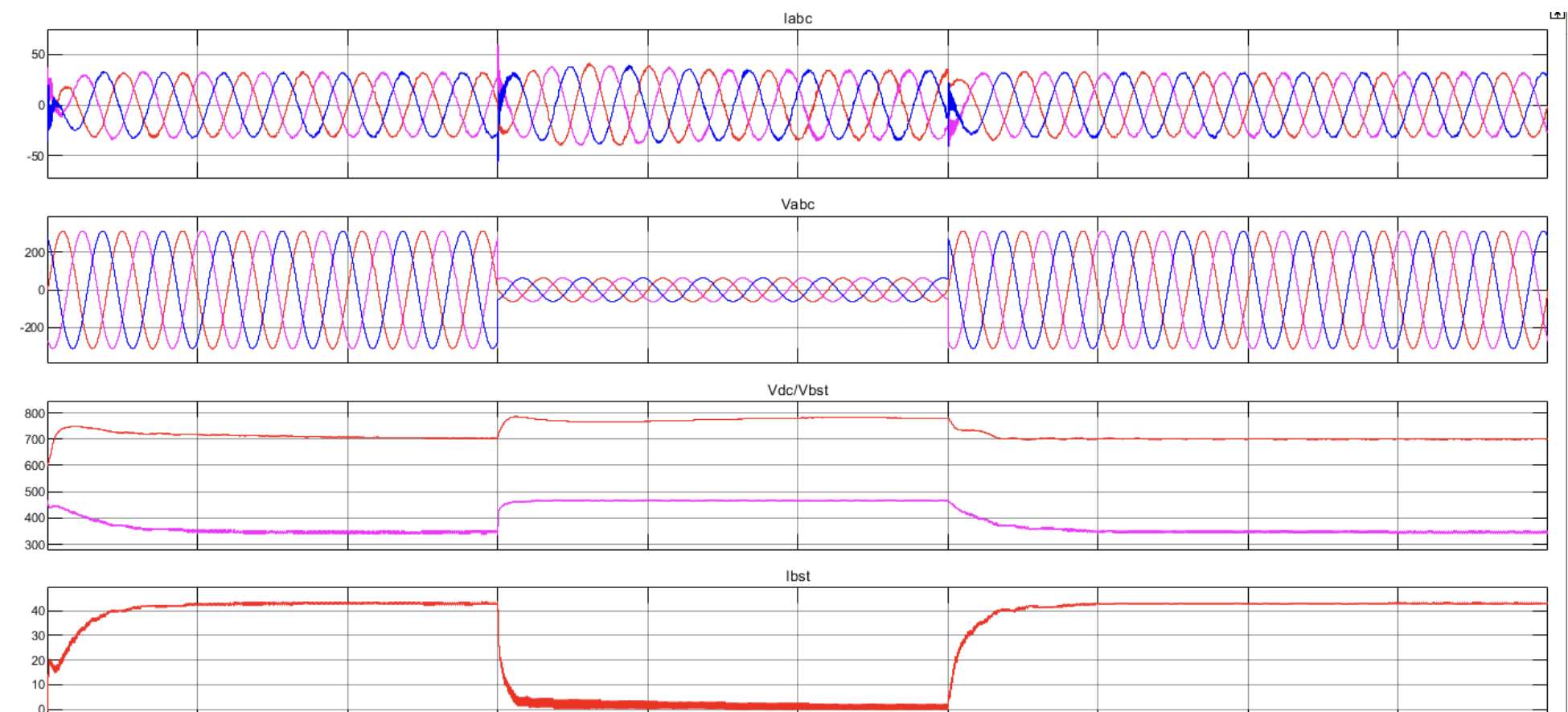

Now that the model is trained, we can plot its predictions over the training and test datasets to see how well it fits the data.

```python
# Get model predictions for the training set.
predictions_num = 100
x_predictions = np.linspace(x_train.min(), x_train.max(), predictions_num).reshape(predictions_num, 1)
y_predictions = linear_regression.predict(x_predictions)

# Plot training data with predictions.
plt.scatter(x_train, y_train, label='Training Dataset')
plt.scatter(x_test, y_test, label='Test Dataset')
plt.plot(x_predictions, y_predictions, 'r', label='Prediction')
plt.xlabel('Economy..GDP.per.Capita.')
plt.ylabel('Happiness.Score')
plt.title('Countries Happiness')
plt.legend()
plt.show()
```

Multivariate linear regression is linear regression with _multiple_ input parameters and one output label. In this demo we build a model that predicts each country's "happiness score" from its "GDP per capita" and "freedom" parameters.

```python
train_data = data.sample(frac=0.8)
test_data = data.drop(train_data.index)

# Decide what fields we want to process.
input_param_name_1 = 'Economy..GDP.per.Capita.'
input_param_name_2 = 'Freedom'
output_param_name = 'Happiness.Score'

# Split training set input and output.
x_train = train_data[[input_param_name_1, input_param_name_2]].values
y_train = train_data[[output_param_name]].values

# Split test set input and output.
x_test = test_data[[input_param_name_1, input_param_name_2]].values
y_test = test_data[[output_param_name]].values
```

Configure the plot with the training dataset:

```python
import plotly
import plotly.graph_objs as go

# Configure Plotly to be rendered inline in the notebook.
plotly.offline.init_notebook_mode()

# Configure the plot with training dataset.
plot_training_trace = go.Scatter3d(
    x=x_train[:, 0].flatten(),
    y=x_train[:, 1].flatten(),
    z=y_train.flatten(),
    name='Training Set',
    mode='markers',
    marker={
        'size': 10,
        'opacity': 1,
        'line': {
            'color': 'rgb(255, 255, 255)',
            'width': 1
        },
    }
)

# Configure the plot with test dataset.
plot_test_trace = go.Scatter3d(
    x=x_test[:, 0].flatten(),
    y=x_test[:, 1].flatten(),
    z=y_test.flatten(),
    name='Test Set',
    mode='markers',
    marker={
        'size': 10,
        'opacity': 1,
        'line': {
            'color': 'rgb(255, 255, 255)',
            'width': 1
        },
    }
)

# Configure the layout.
plot_layout = go.Layout(
    title='Data Sets',
    scene={
        'xaxis': {'title': input_param_name_1},
        'yaxis': {'title': input_param_name_2},
        'zaxis': {'title': output_param_name}
    },
    margin={'l': 0, 'r': 0, 'b': 0, 't': 0}
)

plot_data = [plot_training_trace, plot_test_trace]

plot_figure = go.Figure(data=plot_data, layout=plot_layout)

# Render 3D scatter plot.
plotly.offline.iplot(plot_figure)
```

```python
# Train a model on the two-feature training set. This reuses the hyperparameters defined
# for the univariate model earlier; without this step `linear_regression` would still
# be the one-feature model and could not predict from two inputs.
linear_regression = LinearRegression(x_train, y_train, polynomial_degree, sinusoid_degree)
linear_regression.train(learning_rate, regularization_param, num_iterations)

# Generate different combinations of X and Y sets to build a predictions plane.
predictions_num = 10

# Find min and max values along X and Y axes.
x_min = x_train[:, 0].min()
x_max = x_train[:, 0].max()

y_min = x_train[:, 1].min()
y_max = x_train[:, 1].max()

# Generate a predefined number of values for each axis between its min and max values.
x_axis = np.linspace(x_min, x_max, predictions_num)
y_axis = np.linspace(y_min, y_max, predictions_num)

# Create empty vectors for X and Y axes predictions.
# We're going to find the cartesian product of all possible X and Y values.
x_predictions = np.zeros((predictions_num * predictions_num, 1))
y_predictions = np.zeros((predictions_num * predictions_num, 1))

# Find cartesian product of all X and Y values.
x_y_index = 0
for x_value in x_axis:
    for y_value in y_axis:
        x_predictions[x_y_index] = x_value
        y_predictions[x_y_index] = y_value
        x_y_index += 1

# Predict Z value for all X and Y pairs.
z_predictions = linear_regression.predict(np.hstack((x_predictions, y_predictions)))

# Plot training data with predictions.

# Configure the plot with the predictions plane.
plot_predictions_trace = go.Scatter3d(
    x=x_predictions.flatten(),
    y=y_predictions.flatten(),
    z=z_predictions.flatten(),
    name='Prediction Plane',
    mode='markers',
    marker={
        'size': 1,
    },
    opacity=0.8,
    surfaceaxis=2,
)

plot_data = [plot_training_trace, plot_test_trace, plot_predictions_trace]
plot_figure = go.Figure(data=plot_data, layout=plot_layout)
plotly.offline.iplot(plot_figure)
```

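Polynomial regression is a form of regression analysis in which the relationship between the independent variable "x" and the dependent variable "y" is modeled as an $n^{th}$-degree polynomial in "x". Although polynomial regression fits a nonlinear model to the data, as a statistical estimation problem it is linear, in the sense that the regression function $E(y|x)$ is linear in the unknown parameters that are estimated from the data. For this reason polynomial regression is considered a special case of multiple linear regression.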
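The phrase "linear in the parameters" can be made concrete. Once the powers of x are placed in the columns of a design matrix, fitting the polynomial is an ordinary linear least-squares problem. The sketch below solves it with `np.linalg.lstsq` rather than with the gradient descent used in the rest of this article, and the quadratic data is synthetic:

```python
import numpy as np

# Synthetic data (an assumption for the demo): y = 1 - 2x + 0.5x^2, no noise.
x = np.linspace(-3.0, 3.0, 30)
y = 1.0 - 2.0 * x + 0.5 * x ** 2

# Design matrix with columns 1, x, x^2: nonlinear in x, but linear in theta.
X = np.column_stack((np.ones_like(x), x, x ** 2))

# Ordinary least squares, exactly as for multiple linear regression.
theta, *_ = np.linalg.lstsq(X, y, rcond=None)

print(np.round(theta, 6))  # approximately the coefficients 1, -2 and 0.5
```

The solver never sees that the columns are powers of the same variable. To it they are simply three input features, which is the sense in which polynomial regression is a special case of multiple linear regression.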

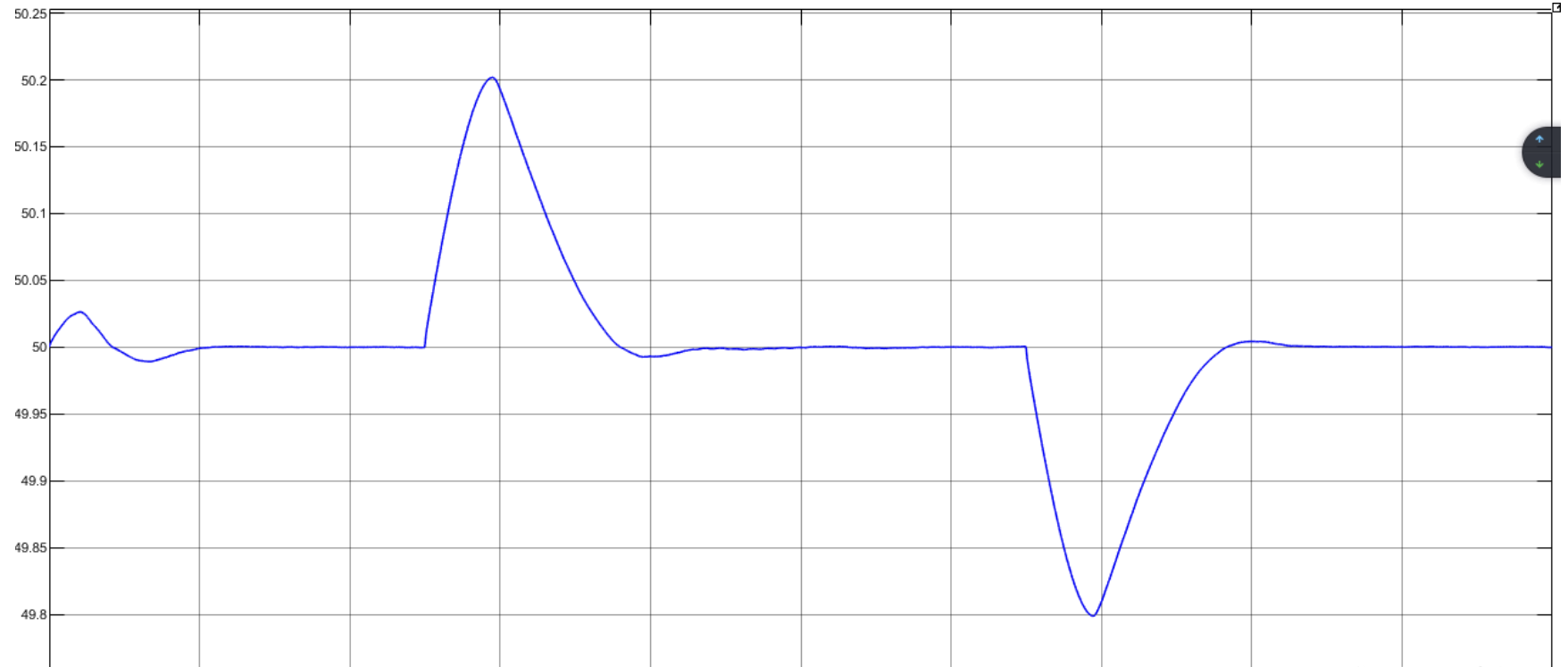

```python
data = pd.read_csv('../../data/non-linear-regression-x-y.csv')

# Fetch training set and labels.
x = data['x'].values.reshape((data.shape[0], 1))
y = data['y'].values.reshape((data.shape[0], 1))

# Print the data table.
data.head(10)

plt.plot(x, y)
plt.show()
```

```python
# Set up linear regression parameters.
num_iterations = 50000  # Number of gradient descent iterations.
regularization_param = 0  # Helps to fight model overfitting.
learning_rate = 0.02  # The size of the gradient descent step.
polynomial_degree = 15  # The degree of additional polynomial features.
sinusoid_degree = 15  # The degree of sinusoid parameter multipliers of additional features.
normalize_data = True  # Flag that indicates that data needs to be normalized before training.

# Init linear regression instance.
linear_regression = LinearRegression(x, y, polynomial_degree, sinusoid_degree, normalize_data)

# Train linear regression.
(theta, cost_history) = linear_regression.train(
    learning_rate,
    regularization_param,
    num_iterations
)

# Print training results.
print('Initial cost: {:.2f}'.format(cost_history[0]))
print('Optimized cost: {:.2f}'.format(cost_history[-1]))

# Print model parameters
theta_table = pd.DataFrame({'Model Parameters': theta.flatten()})
theta_table
```

Now that the model is trained, we can plot its predictions over the training dataset to see how well it fits the data.

```python
# Get model predictions for the training set.
predictions_num = 1000
x_predictions = np.linspace(x.min(), x.max(), predictions_num).reshape(predictions_num, 1)
y_predictions = linear_regression.predict(x_predictions)

# Plot training data with predictions.
plt.scatter(x, y, label='Training Dataset')
plt.plot(x_predictions, y_predictions, 'r', label='Prediction')
plt.show()
```
