Fine-tuning source code for the Qwen (Tongyi Qianwen) large model (fine-tuning ChatGLM2 with this repository failed; training Qwen succeeded): hiyouga/LLaMA-Efficient-Tuning, an easy-to-use LLM fine-tuning framework (LLaMA-2, BLOOM, Falcon, Baichuan, Qwen, ChatGLM2): https://github.com/hiyouga/LLaMA-Efficient-Tuning


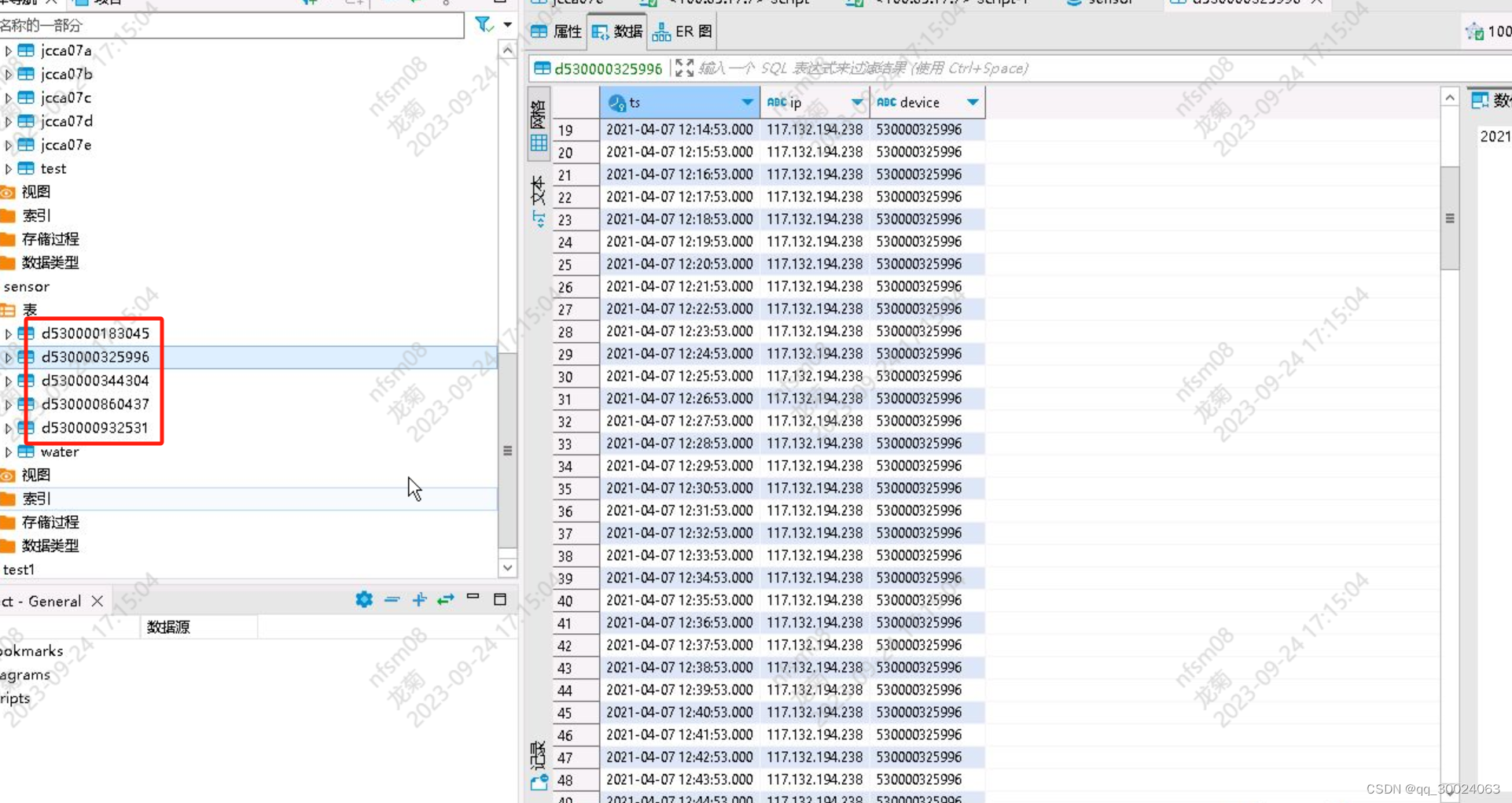

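Preparing the training data: the LLaMA-Efficient-Tuning root directory contains a data directory.

Create your own training data file there, with content in the following format:

    [{"instruction": "阅读下列短文,从每题所给的四个选项(A、B、C和D)中,选出最佳选项。",
      "input": "sdfdsg",
      "output": "A"}]

The instruction string reads "Read the passage below and choose the best answer from the four options (A, B, C and D) given for each question." The input field holds the passage and question, and the output field holds the expected answer.

Then register the training data file in dataset_info.json.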

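The two steps above (write the data file, then register it) can be sketched in Python. This is a minimal sketch, not the repository's own tooling: the helper name register_dataset is hypothetical, and it assumes that a dataset_info.json entry containing only a file_name key is enough for data that already uses the instruction / input / output field names. Check the data README in the repository if your columns are named differently.

```python
import json
from pathlib import Path


def register_dataset(data_dir, name, records):
    """Write `records` to <data_dir>/<name>.json and add an entry to dataset_info.json.

    Hypothetical helper for illustration; it assumes a bare {"file_name": ...}
    entry is sufficient for instruction/input/output style records.
    """
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)

    # ensure_ascii=False keeps Chinese text readable in the saved file.
    data_file = data_dir / f"{name}.json"
    data_file.write_text(
        json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8"
    )

    # Load the existing registry if there is one, so other datasets are preserved.
    info_file = data_dir / "dataset_info.json"
    info = json.loads(info_file.read_text(encoding="utf-8")) if info_file.exists() else {}
    info[name] = {"file_name": data_file.name}
    info_file.write_text(
        json.dumps(info, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    return info[name]
```

Calling register_dataset("data", "qwentest", records) from the repository root would create data/qwentest.json and register it under the name qwentest, which is the dataset name used in the training command.
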
Training command:

Create the script with vim run.sh and enter the following. Model_path is the path to the base model, save_Model_dir is the output directory for the LoRA weights, and dataset is the name registered in dataset_info.json:

    Model_path="model_path"
    save_Model_dir="save_model"
    dataset="qwentest"

    CUDA_VISIBLE_DEVICES=0 torchrun --nproc_per_node 1 src/train_bash.py \
        --model_name_or_path ${Model_path} \
        --stage sft \
        --do_train \
        --dataset ${dataset} \
        --dataset_dir data \
        --finetuning_type lora \
        --output_dir ${save_Model_dir} \
        --overwrite_cache \
        --per_device_train_batch_size 4 \
        --gradient_accumulation_steps 1 \
        --lr_scheduler_type cosine \
        --logging_steps 10 \
        --save_steps 1000 \
        --learning_rate 1e-3 \
        --num_train_epochs 10.0 \
        --plot_loss \
        --lora_target c_attn \
        --template chatml \
        --fp16
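To see how the batch size, accumulation, epoch and save_steps settings interact, here is a rough estimate of the number of optimizer steps. It is a sketch under assumptions: the function name training_schedule is hypothetical, the dataset sizes are made up for illustration, and the arithmetic follows the usual Hugging Face Trainer convention (steps per epoch = batches per epoch divided by the accumulation steps), so treat the result as approximate.

```python
import math


def training_schedule(num_examples, per_device_batch=4, grad_accum=1,
                      num_processes=1, epochs=10.0, save_steps=1000):
    """Estimate optimizer steps and intermediate checkpoints (approximate)."""
    # Examples consumed per optimizer step across all processes.
    effective_batch = per_device_batch * grad_accum * num_processes
    # Batches each process sees per epoch; the last partial batch is kept.
    batches_per_epoch = math.ceil(num_examples / (per_device_batch * num_processes))
    steps_per_epoch = max(batches_per_epoch // grad_accum, 1)
    total_steps = math.ceil(steps_per_epoch * epochs)
    return {
        "effective_batch": effective_batch,
        "total_steps": total_steps,
        # A checkpoint is written every `save_steps` optimizer steps.
        "intermediate_checkpoints": total_steps // save_steps,
    }
```

With the defaults taken from the command, a hypothetical dataset of 2000 examples gives 5000 steps and 5 intermediate checkpoints, while 300 examples gives only 750 steps, so --save_steps 1000 would never trigger during training. For small datasets it may be worth lowering save_steps.
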

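Running the training:

Run the script in the background with nohup, using the name you saved it under (it was created as run.sh above):

    nohup ./run.sh &

Calling the trained model: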

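    import os

    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer
    from transformers.generation import GenerationConfig

    model_path = "original_model"       # path to the original (base) model
    ckpt_path = "lora_finetuned_model"  # path to the LoRA fine-tuned weights (the training output_dir)
    save_pre_name = os.path.basename(model_path)

    # Load the tokenizer and the base model; device_map={"": 0} places the whole model on GPU 0.
    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    base_model = AutoModelForCausalLM.from_pretrained(
        model_path, device_map={"": 0}, trust_remote_code=True
    )
    base_model.generation_config = GenerationConfig.from_pretrained(
        model_path, trust_remote_code=True
    )

    # Attach the LoRA weights to the base model. The model is already on GPU 0,
    # so no extra .to(...) call is needed.
    lora_model = PeftModel.from_pretrained(base_model, ckpt_path)
    lora_model.eval()

    raw_input = ""  # passage text; "######" marks line breaks
    line_format = raw_input.replace("######", "\n")
    # The passage is substituted for {}. The options mean: A. very satisfied, B. satisfied,
    # C. dissatisfied, D. very dissatisfied; "答案:" means "Answer:".
    llm_question = "{}\nA. 非常满意\nB. 满意\nC. 不满意\nD. 非常不满意\n答案:".format(line_format)

    inputs = tokenizer(llm_question, return_tensors="pt")
    inputs = inputs.to(lora_model.device)
    pred = lora_model.generate(**inputs, max_new_tokens=500)

    # The decoded text contains the prompt followed by the generated answer.
    predict_txt = tokenizer.decode(pred.cpu()[0], skip_special_tokens=True).replace("\n", " ")
    print(predict_txt)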
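Because the decoded text includes the prompt as well as the model's answer, a little pre- and post-processing helps when scoring multiple-choice questions. The following is a minimal sketch in plain Python; build_prompt and extract_answer are hypothetical helper names, and the approach (splitting on the "答案:" cue and taking the first option letter) is an assumption about how one might parse the output, not something the fine-tuning framework provides.

```python
import re

ANSWER_CUE = "答案:"  # "Answer:" cue that ends the prompt


def build_prompt(passage, options):
    """Join a passage and up to four options into one multiple-choice prompt."""
    lines = [passage.replace("######", "\n")]  # "######" marks line breaks in the raw text
    for letter, text in zip("ABCD", options):
        lines.append(f"{letter}. {text}")
    lines.append(ANSWER_CUE)
    return "\n".join(lines)


def extract_answer(decoded):
    """Return the first option letter after the last answer cue, or None."""
    # Splitting on the cue works even if newlines in the decoded text were replaced.
    completion = decoded.rsplit(ANSWER_CUE, 1)[-1]
    match = re.search(r"[ABCD]", completion)
    return match.group(0) if match else None
```

In the script above, build_prompt(raw_input, [...]) could replace the hand-written format string, and extract_answer(predict_txt) would return just the predicted letter for comparison with the output field of the data.
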

Fine-tuning the ChatGLM2 model (this repository worked for ChatGLM2): https://github.com/hiyouga/ChatGLM-Efficient-Tuning

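Preparing the training data works the same way: the repository root directory contains a data directory.

Create your own training data file there, with content in the following format:

    [{"instruction": "阅读下列短文,从每题所给的四个选项(A、B、C和D)中,选出最佳选项。",
      "input": "sdfdsg",
      "output": "A"}]

Then register the training data file in dataset_info.json.

Training command: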