1. Docker + Flask approach

# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license
"""
Run a Flask REST API exposing one or more YOLOv5s models
"""

import argparse
import io
import json
import os
from collections import OrderedDict

import cv2
import numpy as np
import requests
import torch
from flask import Flask, Response, jsonify, request
from PIL import Image

app = Flask(__name__)
models = {}

DETECTION_URL = '/v1/object-detection/<model>'


@app.route(DETECTION_URL, methods=['POST'])
# @app.route(DETECTION_URL, methods=['GET'])
def predict(model):
    if request.method != 'POST':
    # if request.method != 'GET':
        return jsonify({'error': 'Invalid request method'})

    # Case 1: the image is sent as the raw request body.
    if request.data:
        # Log only the payload size; printing the raw bytes floods the console.
        print(f"received {len(request.data)} bytes")
        try:
            # Decode the raw bytes into a NumPy array (OpenCV returns BGR).
            img = cv2.imdecode(np.frombuffer(request.data, dtype=np.uint8), cv2.IMREAD_COLOR)
            if img is None:
                raise ValueError("Invalid image data")
        except Exception:
            return jsonify({'error': 'Invalid image data'})

        if model in models:
            # results = models[model](img, size=640)  # reduce size=320 for faster inference
            # YOLOv5 expects RGB for NumPy input, so reverse OpenCV's BGR channel order.
            results = models[model](img[:, :, ::-1])
            # return results.pandas().xyxy[0].to_json(orient='records')
            detection_results = []
            for detection in results.pandas().xyxy[0].to_dict(orient='records'):
                detection_result = {
                    "name": detection["name"],
                    "score": float(detection["confidence"]),
                    # bbox is [x, y, width, height]
                    "bbox": [
                        float(detection["xmin"]),
                        float(detection["ymin"]),
                        float(detection["xmax"] - detection["xmin"]),
                        float(detection["ymax"] - detection["ymin"]),
                    ],
                }
                detection_results.append(detection_result)

            response_data = OrderedDict()
            # A raw-body request carries no URL. The original stored the NumPy
            # array here, which json.dumps cannot serialize.
            response_data["imgUrl"] = ""
            response_data["imgSize"] = [img.shape[1], img.shape[0]]
            response_data["code"] = 0
            response_data["msg"] = ""
            response_data["objects"] = detection_results
            return Response(json.dumps(response_data), mimetype='application/json')

    # Case 2: the image is uploaded as multipart form data in the "image" field.
    if request.files.get('image'):
        # Method 1
        # with request.files["image"] as f:
        #     im = Image.open(io.BytesIO(f.read()))
        # Method 2
        im_file = request.files['image']
        im_filename = os.path.join(os.path.dirname(__file__), im_file.filename)
        im_bytes = im_file.read()
        try:
            im = Image.open(io.BytesIO(im_bytes))
        except Exception:
            response_data = OrderedDict()
            response_data["imgUrl"] = ""
            response_data["imgSize"] = [0, 0]
            response_data["code"] = 1
            response_data["msg"] = ""
            response_data["objects"] = []
            response_data["error"] = 'Invalid image file'
            return Response(json.dumps(response_data), mimetype='application/json')

        # NOTE: inference for uploaded files is commented out below, so a valid
        # upload falls through to the image_url branch and then to the final
        # error response.
        # if model in models:
        #     results = models[model](im, size=640)  # reduce size=320 for faster inference
        #     # return results.pandas().xyxy[0].to_json(orient='records')
        #     detection_results = []
        #     for detection in results.pandas().xyxy[0].to_dict(orient='records'):
        #         detection_result = {
        #             "name": detection["name"],
        #             "score": float(detection["confidence"]),
        #             "bbox": [
        #                 float(detection["xmin"]),
        #                 float(detection["ymin"]),
        #                 detection["xmax"] - detection["xmin"],
        #                 detection["ymax"] - detection["ymin"]
        #             ]
        #         }
        #         detection_results.append(detection_result)
        #     response_data = OrderedDict()
        #     response_data["imagePath"] = im_filename
        #     response_data["imageSize"] = [im.width, im.height]
        #     response_data["code"] = 0
        #     response_data["msg"] = ""
        #     response_data["objects"] = detection_results
        #     return Response(json.dumps(response_data), mimetype='application/json')

    # Case 3: the form carries an image_url that the server downloads itself.
    if request.form.get('image_url'):
        image_url = request.form.get('image_url')
        try:
            # A timeout keeps a slow or dead host from blocking the worker.
            response = requests.get(image_url, timeout=10)
            im = Image.open(io.BytesIO(response.content))
        except Exception:
            return jsonify({'error': 'Failed to download or open image from URL'})

        if model in models:
            results = models[model](im, size=640)  # reduce size=320 for faster inference
            # return results.pandas().xyxy[0].to_json(orient='records')
            detection_results = []
            for detection in results.pandas().xyxy[0].to_dict(orient='records'):
                detection_result = {
                    "name": detection["name"],
                    "score": float(detection["confidence"]),
                    "bbox": [
                        float(detection["xmin"]),
                        float(detection["ymin"]),
                        float(detection["xmax"] - detection["xmin"]),
                        float(detection["ymax"] - detection["ymin"]),
                    ],
                }
                detection_results.append(detection_result)

            response_data = OrderedDict()
            response_data["imgUrl"] = image_url
            response_data["imgSize"] = [im.width, im.height]
            response_data["code"] = 0
            response_data["msg"] = ""
            response_data["objects"] = detection_results
            return Response(json.dumps(response_data), mimetype='application/json')

    # Fallback: no usable image, or the requested model is not loaded.
    response_data = OrderedDict()
    response_data["imgUrl"] = ""
    response_data["imgSize"] = [0, 0]
    response_data["code"] = 1
    response_data["msg"] = ""
    response_data["objects"] = []
    response_data["error"] = 'No image file or URL or model found'
    return Response(json.dumps(response_data), mimetype='application/json')


if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='Flask API exposing YOLOv5 model')
    parser.add_argument('--port', default=8008, type=int, help='port number')
    parser.add_argument('--model', nargs='+', default=['yolov5s'], help='model(s) to run, i.e. --model yolov5n yolov5s')
    opt = parser.parse_args()

    for m in opt.model:
        # models[m] = torch.hub.load('ultralytics/yolov5', m, force_reload=True, skip_validation=True)
        # models[m] = torch.hub.load('./', m, source="local")
        # models[m] = torch.hub.load('./', 'custom', path='/data1/hzb/yolov5/animals-80.pt', source="local")
        # Every name passed via --model is mapped to the same custom weights file.
        models[m] = torch.hub.load('./', 'custom', path='/data1/hzb/yolov5/zs_animals.pt', source="local")

    app.run(host='0.0.0.0', port=opt.port)  # debug=True causes Restarting with stat
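
The request branches in the script repeat the same conversion from YOLOv5's xmin/ymin/xmax/ymax records to [x, y, width, height] boxes, and the same response envelope. Below is a minimal sketch of how that could be factored out. The helper names `to_xywh_objects` and `build_response` are hypothetical and not part of the original script; the input is assumed to be the list of dicts that `results.pandas().xyxy[0].to_dict(orient='records')` produces.

```python
import json
from collections import OrderedDict


def to_xywh_objects(records):
    """Convert xyxy detection records into name/score/[x, y, w, h] dicts.

    `records` is assumed to be a list of dicts with the keys
    name, confidence, xmin, ymin, xmax, ymax.
    """
    objects = []
    for det in records:
        xmin, ymin = float(det["xmin"]), float(det["ymin"])
        objects.append({
            "name": det["name"],
            "score": float(det["confidence"]),
            "bbox": [
                xmin,
                ymin,
                float(det["xmax"]) - xmin,  # width
                float(det["ymax"]) - ymin,  # height
            ],
        })
    return objects


def build_response(img_url, img_size, objects, code=0, error=None):
    """Build the JSON envelope used by the script (hypothetical helper)."""
    data = OrderedDict()
    data["imgUrl"] = img_url
    data["imgSize"] = list(img_size)
    data["code"] = code
    data["msg"] = ""
    data["objects"] = objects
    if error is not None:
        data["error"] = error
    return json.dumps(data)


if __name__ == "__main__":
    # Made-up detection record, for illustration only.
    sample = [{"name": "cat", "confidence": 0.5,
               "xmin": 10.0, "ymin": 20.0, "xmax": 110.0, "ymax": 220.0}]
    print(build_response("", [640, 480], to_xywh_objects(sample)))
```

With helpers like these, each branch would only need to obtain the image, run the model, and pass the records on, which also keeps the response keys consistent across branches.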

2. LCM approach

For a detailed tutorial, see the official site, which is clearly written and covers many languages:

http://lcm-proj.github.io/lcm/

LCM's UDP transport (udpm) only accepts a multicast address.

The default communication mode:

LCM (std::string lcm_url="")

The parameter is a URL, and the default is normally used. The URL holds the IP address and port number. LCM's default address is:

"udpm://239.255.76.67:7667?ttl=1"

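To make the structure of that URL concrete, here is a small sketch that splits a udpm URL into its address, port, and TTL, and checks that the address is a multicast address. It uses only the Python standard library, not the LCM API; the function name `parse_lcm_url` is hypothetical.

```python
import ipaddress
from urllib.parse import parse_qs, urlsplit


def parse_lcm_url(lcm_url):
    """Split a udpm:// URL into address, port, and TTL (hypothetical helper).

    Raises ValueError if the scheme is not udpm, the port is missing,
    or the address is not a multicast address.
    """
    parts = urlsplit(lcm_url)
    if parts.scheme != "udpm":
        raise ValueError(f"expected a udpm:// URL, got {lcm_url!r}")
    if parts.hostname is None or parts.port is None:
        raise ValueError("URL must contain both an address and a port")

    address = ipaddress.ip_address(parts.hostname)
    if not address.is_multicast:
        raise ValueError(f"{address} is not a multicast address")

    # ttl is optional in the URL; None means it was not given.
    ttl_values = parse_qs(parts.query).get("ttl")
    ttl = int(ttl_values[0]) if ttl_values else None

    return {"address": str(address), "port": parts.port, "ttl": ttl}


if __name__ == "__main__":
    print(parse_lcm_url("udpm://239.255.76.67:7667?ttl=1"))
```

For multi-machine use, the TTL has to be at least 1, since multicast packets sent with a TTL of 0 do not leave the host.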
For communication across multiple machines, see the link below:

https://blog.csdn.net/weixin_45467056/article/details/123569027

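On Windows, the firewall also has to be turned off. Once the machines can ping each other, first run `ifconfig | grep -B 1 <ip> | grep "flags" | cut -d ':' -f1` on Ubuntu to find the network device that owns the IP, replacing `<ip>` with the actual IP address.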

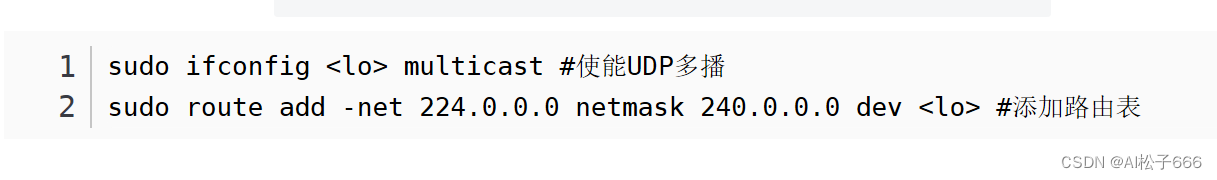
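
The lookup done by that ifconfig/grep/cut pipeline can also be sketched in Python. The function below scans text in the `ifconfig` output format and returns the name of the interface that carries a given IPv4 address. The name `find_interface` and the sample addresses are hypothetical, and the parser assumes the newer net-tools layout in which each interface block starts with a line like "eth0: flags=...".

```python
import re

# An interface block starts with "<name>: flags=..." at the start of a line.
IFACE_HEADER = re.compile(r"^(\S+?):\s+flags=")


def find_interface(ifconfig_output, ip):
    """Return the interface whose "inet" line matches `ip`, or None."""
    # Match "inet <ip>" exactly, so 192.168.1.1 does not match 192.168.1.10.
    inet_line = re.compile(rf"\binet\s+{re.escape(ip)}(?!\S)")
    current = None
    for line in ifconfig_output.splitlines():
        header = IFACE_HEADER.match(line)
        if header:
            current = header.group(1)
        elif current and inet_line.search(line):
            return current
    return None


if __name__ == "__main__":
    # Made-up sample output, for illustration only.
    sample = (
        "eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500\n"
        "        inet 192.168.1.10  netmask 255.255.255.0  broadcast 192.168.1.255\n"
        "lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536\n"
        "        inet 127.0.0.1  netmask 255.0.0.0\n"
    )
    print(find_interface(sample, "192.168.1.10"))
```

On a real machine, the text could come from `subprocess.run(["ifconfig"], capture_output=True, text=True).stdout`, assuming `ifconfig` is installed.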

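Suppose the corresponding network device is lo. It is written as `<dev>` below and must be replaced with the real device name. Run the following two commands, as given in the LCM multicast setup documentation, to explicitly enable UDP multicast and add a routing table entry:

`sudo ifconfig <dev> multicast`

`sudo route add -net 224.0.0.0 netmask 240.0.0.0 dev <dev>`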

The Ubuntu configuration is now complete, and messages can be sent and received normally.