卷积神经网络(基础篇):

下采样(Subsampling):通道数不变,减少数据量,降低运算需求。

做这个卷积:

网络:

最大池化层(MaxPooling):通道数不变,图像大小缩成原来的一半,没有权重。

代码:

import torchfrom torchvision import transformsfrom torchvision import datasetsfrom torch.utils.data import DataLoaderimport torch.nn.functional as Fimport torch.optim as optim# prepare datasetbatch_size = 64transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)# design model using classclass Net(torch.nn.Module):def __init__(self):super(Net, self).__init__()self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)self.pooling = torch.nn.MaxPool2d(2)self.fc = torch.nn.Linear(320, 10)def forward(self, x):# flatten data from (n,1,28,28) to (n, 784)batch_size = x.size(0) #求batchsizex = F.relu(self.pooling(self.conv1(x))) #卷积、池化、激活x = F.relu(self.pooling(self.conv2(x)))x = x.view(batch_size, -1) # -1 此处自动算出的是320;view的目的就是变成全连接网络需要的格式。flattenx = self.fc(x)return xmodel = Net()device = torch.device("cuda" if torch.cuda.is_available() else "cpu") #如果你有GPU,这两行的意思就是用GPU跑model.to(device) #没有GPU的话这两行可以不写(写上也无妨)# construct loss and optimizercriterion = torch.nn.CrossEntropyLoss()optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)# training cycle forward, backward, updatedef train(epoch):running_loss = 0.0for batch_idx, data in enumerate(train_loader, 0):inputs, target = datainputs, target = inputs.to(device), target.to(device)#把数据迁移到GPU上,如果没有GPU,这行可以不写optimizer.zero_grad()outputs = model(inputs)loss = criterion(outputs, target)loss.backward()optimizer.step()running_loss += loss.item()if batch_idx % 300 == 299:print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))running_loss = 0.0def test():correct = 0total = 0with torch.no_grad():for data in test_loader:images, labels = dataimages, labels = images.to(device), labels.to(device) #如果没有GPU,这行可以不写outputs = model(images)_, predicted = torch.max(outputs.data, dim=1)total += labels.size(0)correct += (predicted == labels).sum().item()print('accuracy on test set: %d %% ' % (100 * correct / total))if __name__ == '__main__':for epoch in range(10):train(epoch)test()

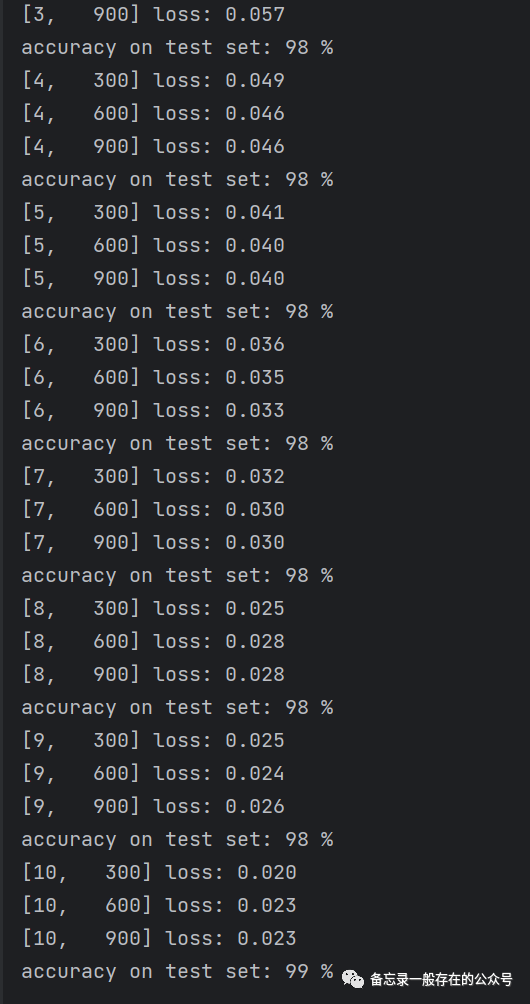

经过10轮的训练后,模型的准确度达到了98%,用线性模型是97%。

卷积神经网络(提高篇):

Inception Module:

Concatenate:把张量拼接到一块;

Average Pooling:均值池化;

1×1卷积:将来的卷积核是1×1,个数取决于输出张量的通道。

1×1卷积作用:(主要就是降维)

部分模块代码:

代码:

import torchimport torch.nn as nnfrom torchvision import transformsfrom torchvision import datasetsfrom torch.utils.data import DataLoaderimport torch.nn.functional as Fimport torch.optim as optim# prepare datasetbatch_size = 64transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))]) # 归一化,均值和方差train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)# design model using classclass InceptionA(nn.Module):def __init__(self, in_channels):super(InceptionA, self).__init__()self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)def forward(self, x):branch1x1 = self.branch1x1(x)branch5x5 = self.branch5x5_1(x)branch5x5 = self.branch5x5_2(branch5x5)branch3x3 = self.branch3x3_1(x)branch3x3 = self.branch3x3_2(branch3x3)branch3x3 = self.branch3x3_3(branch3x3)branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)branch_pool = self.branch_pool(branch_pool)outputs = [branch1x1, branch5x5, branch3x3, branch_pool]return torch.cat(outputs, dim=1) # b,c,w,h c对应的是dim=1class Net(nn.Module):def __init__(self):super(Net, self).__init__()self.conv1 = nn.Conv2d(1, 10, kernel_size=5)self.conv2 = nn.Conv2d(88, 20, kernel_size=5) # 88 = 24x3 + 16self.incep1 = InceptionA(in_channels=10) # 与conv1 中的10对应self.incep2 = InceptionA(in_channels=20) # 与conv2 中的20对应self.mp = nn.MaxPool2d(2)self.fc = nn.Linear(1408, 10)def forward(self, x):in_size = x.size(0)x = F.relu(self.mp(self.conv1(x))) #通道变为10x = self.incep1(x) #88x = F.relu(self.mp(self.conv2(x))) #20x = self.incep2(x) #88x = x.view(in_size, -1)x = self.fc(x)return xmodel = Net()# construct loss and optimizercriterion = torch.nn.CrossEntropyLoss()optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)# training cycle forward, backward, updatedef train(epoch):running_loss = 0.0for batch_idx, data in enumerate(train_loader, 0):inputs, target = dataoptimizer.zero_grad()outputs = model(inputs)loss = criterion(outputs, target)loss.backward()optimizer.step()running_loss += loss.item()if batch_idx % 300 == 299:print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))running_loss = 0.0def test():correct = 0total = 0with torch.no_grad():for data in test_loader:images, labels = dataoutputs = model(images)_, predicted = torch.max(outputs.data, dim=1)total += labels.size(0)correct += (predicted == labels).sum().item()print('accuracy on test set: %d %% ' % (100 * correct / total))if __name__ == '__main__':for epoch in range(10):train(epoch)test()

Residual Network:

?:保持输入和输出大小相同。

代码:

import torchimport torch.nn as nnfrom torchvision import transformsfrom torchvision import datasetsfrom torch.utils.data import DataLoaderimport torch.nn.functional as Fimport torch.optim as optim# prepare datasetbatch_size = 64transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))]) # 归一化,均值和方差train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)# design model using classclass ResidualBlock(nn.Module):def __init__(self, channels):super(ResidualBlock, self).__init__()self.channels = channelsself.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)def forward(self, x):y = F.relu(self.conv1(x))y = self.conv2(y)return F.relu(x + y)class Net(nn.Module):def __init__(self):super(Net, self).__init__()self.conv1 = nn.Conv2d(1, 16, kernel_size=5)self.conv2 = nn.Conv2d(16, 32, kernel_size=5) # 88 = 24x3 + 16self.rblock1 = ResidualBlock(16)self.rblock2 = ResidualBlock(32)self.mp = nn.MaxPool2d(2)self.fc = nn.Linear(512, 10) # 暂时不知道1408咋能自动出来的def forward(self, x):in_size = x.size(0)x = self.mp(F.relu(self.conv1(x)))x = self.rblock1(x)x = self.mp(F.relu(self.conv2(x)))x = self.rblock2(x)x = x.view(in_size, -1)x = self.fc(x)return xmodel = Net()# construct loss and optimizercriterion = torch.nn.CrossEntropyLoss()optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)# training cycle forward, backward, updatedef train(epoch):running_loss = 0.0for batch_idx, data in enumerate(train_loader, 0):inputs, target = dataoptimizer.zero_grad()outputs = model(inputs)loss = criterion(outputs, target)loss.backward()optimizer.step()running_loss += loss.item()if batch_idx % 300 == 299:print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))running_loss = 0.0def test():correct = 0total = 0with torch.no_grad():for data in test_loader:images, labels = dataoutputs = model(images)_, predicted = torch.max(outputs.data, dim=1)total += labels.size(0)correct += (predicted == labels).sum().item()print('accuracy on test set: %d %% ' % (100 * correct / total))if __name__ == '__main__':for epoch in range(10):train(epoch)test()

【番外:1.理论学习 2.阅读文献 3.复现经典 4.扩充视野】

![【pytest】 pytest拓展功能 pycharm PermissionError: [Errno 13] Permission denied:](https://img-blog.csdnimg.cn/0042fe0bbb6541c9a016359e01c26b2b.png)