Hudi-CLI in Practice: A Client-Side Operations Tool for the Data Lake

help

```
hudi:student_mysql_cdc_hudi_fl->help
AVAILABLE COMMANDS

Archived Commits Command
       trigger archival: trigger archival
       show archived commits: Read commits from archived files and show details
       show archived commit stats: Read commits from archived files and show details

Bootstrap Command
       bootstrap run: Run a bootstrap action for current Hudi table
       bootstrap index showmapping: Show bootstrap index mapping
       bootstrap index showpartitions: Show bootstrap indexed partitions

Built-In Commands
       help: Display help about available commands
       stacktrace: Display the full stacktrace of the last error.
       clear: Clear the shell screen.
       quit, exit: Exit the shell.
       history: Display or save the history of previously run commands
       version: Show version info
       script: Read and execute commands from a file.

Cleans Command
       cleans show: Show the cleans
       clean showpartitions: Show partition level details of a clean
       cleans run: run clean

Clustering Command
       clustering run: Run Clustering
       clustering scheduleAndExecute: Run Clustering. Make a cluster plan first and execute that plan immediately
       clustering schedule: Schedule Clustering

Commits Command
       commits compare: Compare commits with another Hoodie table
       commits sync: Sync commits with another Hoodie table
       commit showpartitions: Show partition level details of a commit
       commits show: Show the commits
       commits showarchived: Show the archived commits
       commit showfiles: Show file level details of a commit
       commit show_write_stats: Show write stats of a commit

Compaction Command
       compaction run: Run Compaction for given instant time
       compaction scheduleAndExecute: Schedule compaction plan and execute this plan
       compaction showarchived: Shows compaction details for a specific compaction instant
       compaction repair: Renames the files to make them consistent with the timeline as dictated by Hoodie metadata. Use when compaction unschedule fails partially.
       compaction schedule: Schedule Compaction
       compaction show: Shows compaction details for a specific compaction instant
       compaction unscheduleFileId: UnSchedule Compaction for a fileId
       compaction validate: Validate Compaction
       compaction unschedule: Unschedule Compaction
       compactions show all: Shows all compactions that are in active timeline
       compactions showarchived: Shows compaction details for specified time window

Diff Command
       diff partition: Check how file differs across range of commits. It is meant to be used only for partitioned tables.
       diff file: Check how file differs across range of commits

Export Command
       export instants: Export Instants and their metadata from the Timeline

File System View Command
       show fsview all: Show entire file-system view
       show fsview latest: Show latest file-system view

HDFS Parquet Import Command
       hdfsparquetimport: Imports Parquet table to a hoodie table

Hoodie Log File Command
       show logfile records: Read records from log files
       show logfile metadata: Read commit metadata from log files

Hoodie Sync Validate Command
       sync validate: Validate the sync by counting the number of records

Kerberos Authentication Command
       kerberos kdestroy: Destroy Kerberos authentication
       kerberos kinit: Perform Kerberos authentication

Markers Command
       marker delete: Delete the marker

Metadata Command
       metadata stats: Print stats about the metadata
       metadata list-files: Print a list of all files in a partition from the metadata
       metadata list-partitions: List all partitions from metadata
       metadata validate-files: Validate all files in all partitions from the metadata
       metadata delete: Remove the Metadata Table
       metadata create: Create the Metadata Table if it does not exist
       metadata init: Update the metadata table from commits since the creation
       metadata set: Set options for Metadata Table

Repairs Command
       repair deduplicate: De-duplicate a partition path contains duplicates & produce repaired files to replace with
       rename partition: Rename partition. Usage: rename partition --oldPartition <oldPartition> --newPartition <newPartition>
       repair overwrite-hoodie-props: Overwrite hoodie.properties with provided file. Risky operation. Proceed with caution!
       repair migrate-partition-meta: Migrate all partition meta file currently stored in text format to be stored in base file format. See HoodieTableConfig#PARTITION_METAFILE_USE_DATA_FORMAT.
       repair addpartitionmeta: Add partition metadata to a table, if not present
       repair deprecated partition: Repair deprecated partition ("default"). Re-writes data from the deprecated partition into __HIVE_DEFAULT_PARTITION__
       repair show empty commit metadata: show failed commits
       repair corrupted clean files: repair corrupted clean files

Rollbacks Command
       show rollback: Show details of a rollback instant
       commit rollback: Rollback a commit
       show rollbacks: List all rollback instants

Savepoints Command
       savepoint rollback: Savepoint a commit
       savepoints show: Show the savepoints
       savepoint create: Savepoint a commit
       savepoint delete: Delete the savepoint

Spark Env Command
       set: Set spark launcher env to cli
       show env: Show spark launcher env by key
       show envs all: Show spark launcher envs

Stats Command
       stats filesizes: File Sizes. Display summary stats on sizes of files
       stats wa: Write Amplification. Ratio of how many records were upserted to how many records were actually written

Table Command
       table update-configs: Update the table configs with configs with provided file.
       table recover-configs: Recover table configs, from update/delete that failed midway.
       refresh, metadata refresh, commits refresh, cleans refresh, savepoints refresh: Refresh table metadata
       create: Create a hoodie table if not present
       table delete-configs: Delete the supplied table configs from the table.
       fetch table schema: Fetches latest table schema
       connect: Connect to a hoodie table
       desc: Describe Hoodie Table properties

Temp View Command
       temp_query, temp query: query against created temp view
       temps_show, temps show: Show all views name
       temp_delete, temp delete: Delete view name

Timeline Command
       metadata timeline show incomplete: List all incomplete instants in active timeline of metadata table
       metadata timeline show active: List all instants in active timeline of metadata table
       timeline show incomplete: List all incomplete instants in active timeline
       timeline show active: List all instants in active timeline

Upgrade Or Downgrade Command
       downgrade table: Downgrades a table
       upgrade table: Upgrades a table

Utils Command
       utils loadClass: Load a class
```

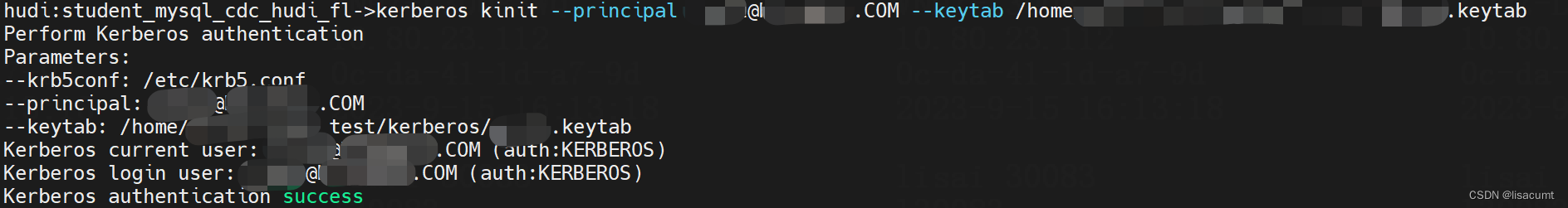

kerberos

```
kerberos kinit --principal xxx@XXXXX.COM --keytab /xxx/kerberos/xxx.keytab
```

First, let's look at the structure of the sample table.

Note that it is a partitioned table!

```sql
-- Flink SQL DDL
create table student_mysql_cdc_hudi_fl(
  `_hoodie_commit_time` string comment 'hoodie commit time',
  `_hoodie_commit_seqno` string comment 'hoodie commit seqno',
  `_hoodie_record_key` string comment 'hoodie record key',
  `_hoodie_partition_path` string comment 'hoodie partition path',
  `_hoodie_file_name` string comment 'hoodie file name',
  `s_id` bigint not null comment 'primary key',
  `s_name` string not null comment 'name',
  `s_age` int comment 'age',
  `s_sex` string comment 'gender',
  `s_part` string not null comment 'partition field',
  `create_time` timestamp(6) not null comment 'creation time',
  `dl_ts` timestamp(6) not null,
  `dl_s_sex` string not null,
  PRIMARY KEY(s_id) NOT ENFORCED
) PARTITIONED BY (`dl_s_sex`) with (
  'connector' = 'hudi'
  ,'hive_sync.table' = 'student_mysql_cdc_hudi'
  ,'hoodie.datasource.write.drop.partition.columns' = 'true'
  ,'hoodie.datasource.write.hive_style_partitioning' = 'true'
  ,'hoodie.datasource.write.partitionpath.field' = 'dl_s_sex'
  ,'hoodie.datasource.write.precombine.field' = 'dl_ts'
  ,'path' = 'hdfs://xxx/hudi_db.db/student_mysql_cdc_hudi'
  ,'precombine.field' = 'dl_ts'
  ,'primaryKey' = 's_id'
)
```
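Because the table sets `hoodie.datasource.write.hive_style_partitioning` to `true`, each partition directory is named `field=value`, which is why partition paths in the rest of this article look like `dl_s_sex=female`. The Python sketch below only mimics that directory naming; `partition_path` is a hypothetical helper rather than a Hudi API, and it ignores corner cases such as null or empty partition values and optional URL encoding.

```python
def partition_path(record: dict, partition_fields: list[str], hive_style: bool) -> str:
    """Build a relative partition path for one record (illustration only).

    Hypothetical helper: it imitates the directory naming seen in this
    article, not Hudi's actual key generator classes.
    """
    parts = []
    for field in partition_fields:
        value = str(record[field])
        # Hive-style partitioning writes each level as "{field}={value}"
        parts.append(f"{field}={value}" if hive_style else value)
    return "/".join(parts)


if __name__ == "__main__":
    row = {"s_id": 88, "s_sex": "female", "dl_s_sex": "female"}
    print(partition_path(row, ["dl_s_sex"], hive_style=True))   # dl_s_sex=female
    print(partition_path(row, ["dl_s_sex"], hive_style=False))  # female
```

With several partition fields the levels are joined with `/`, giving nested directories like the `dl_s_sex=female/dl_create_time_yyyy=1971/dl_create_time_mm=03` path used with `show logfile metadata`.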

table

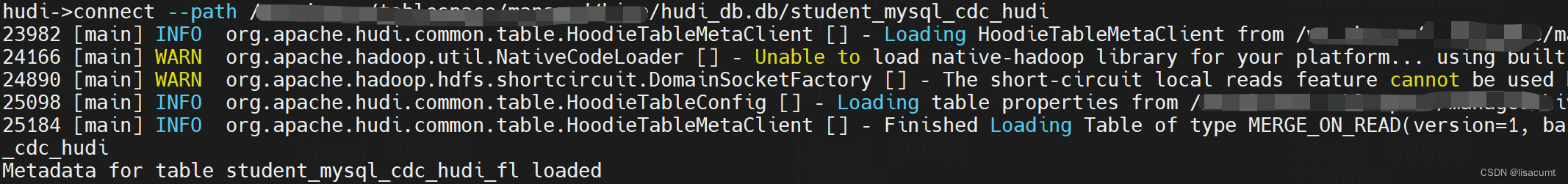

connect

```
connect --path /xxx/hudi_db.db/student_mysql_cdc_hudi
```

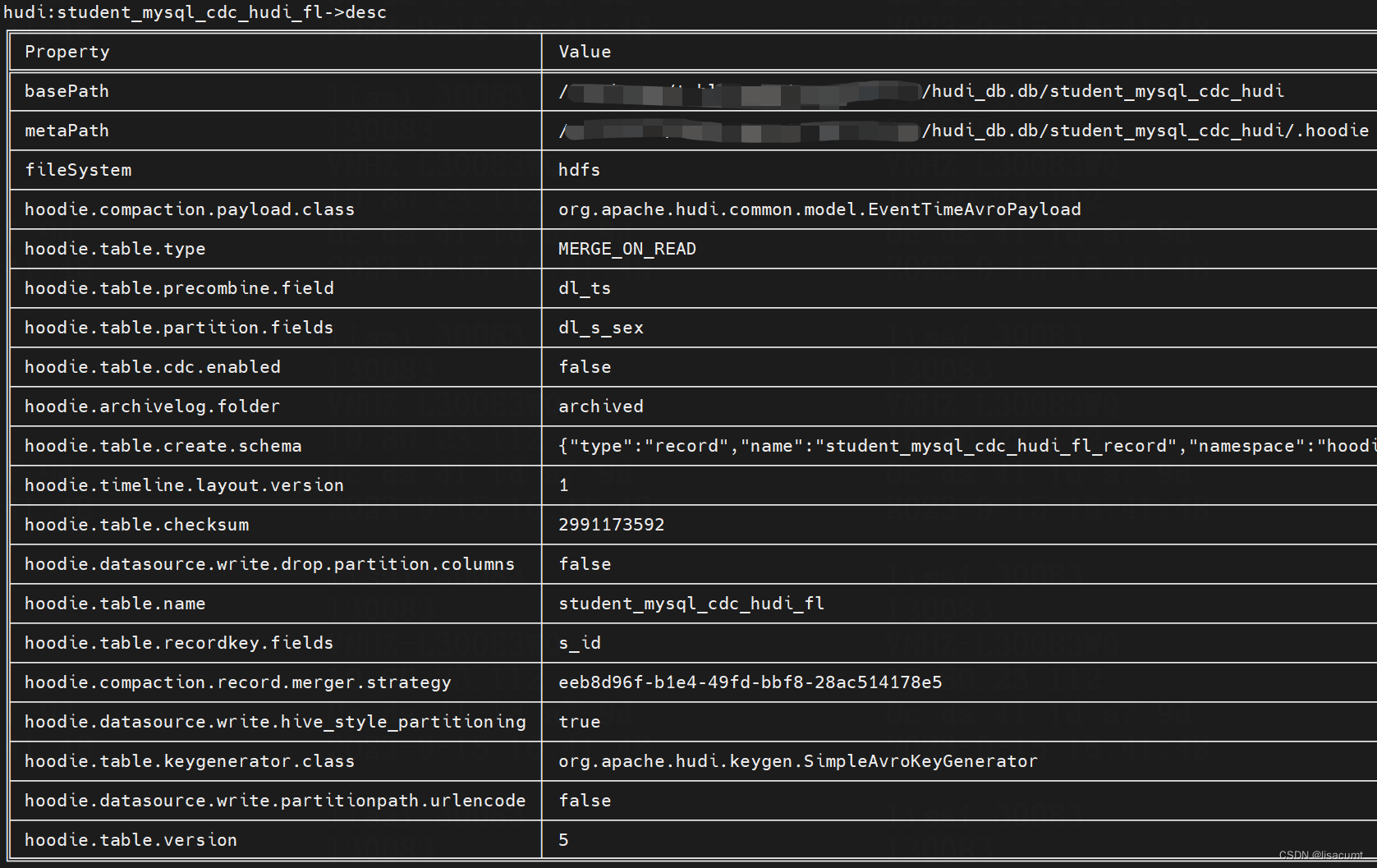

desc

```
desc
```

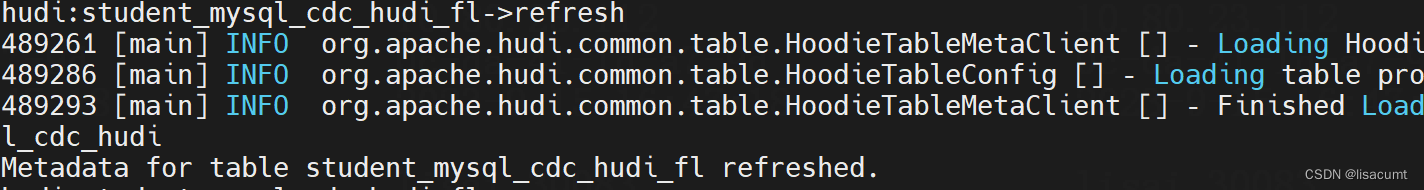

refresh

```
refresh
```

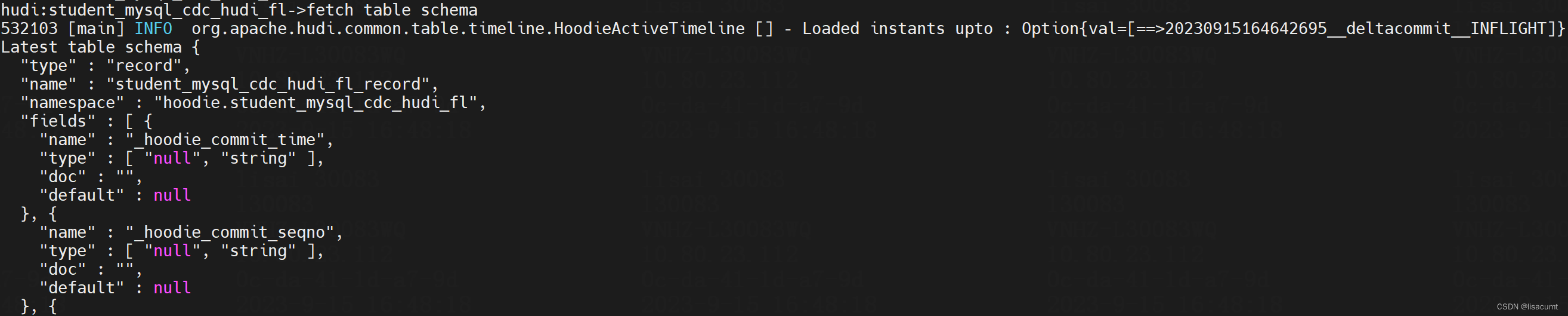

fetch table schema

```
fetch table schema
```

```json
{
  "type" : "record",
  "name" : "student_mysql_cdc_hudi_fl_record",
  "namespace" : "hoodie.student_mysql_cdc_hudi_fl",
  "fields" : [ {
    "name" : "_hoodie_commit_time",
    "type" : [ "null", "string" ],
    "doc" : "",
    "default" : null
  }, {
    "name" : "_hoodie_commit_seqno",
    "type" : [ "null", "string" ],
    "doc" : "",
    "default" : null
  }, {
    "name" : "_hoodie_record_key",
    "type" : [ "null", "string" ],
    "doc" : "",
    "default" : null
  }, {
    "name" : "_hoodie_partition_path",
    "type" : [ "null", "string" ],
    "doc" : "",
    "default" : null
  }, {
    "name" : "_hoodie_file_name",
    "type" : [ "null", "string" ],
    "doc" : "",
    "default" : null
  }, {
    "name" : "_hoodie_operation",
    "type" : [ "null", "string" ],
    "doc" : "",
    "default" : null
  }, {
    "name" : "s_id",
    "type" : "long"
  }, {
    "name" : "s_name",
    "type" : "string"
  }, {
    "name" : "s_age",
    "type" : [ "null", "int" ],
    "default" : null
  }, {
    "name" : "s_sex",
    "type" : [ "null", "string" ],
    "default" : null
  }, {
    "name" : "s_part",
    "type" : "string"
  }, {
    "name" : "create_time",
    "type" : {
      "type" : "long",
      "logicalType" : "timestamp-micros"
    }
  }, {
    "name" : "dl_ts",
    "type" : {
      "type" : "long",
      "logicalType" : "timestamp-micros"
    }
  }, {
    "name" : "dl_s_sex",
    "type" : "string"
  } ]
}
```
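Comparing this schema with the DDL shows how nullability carries over: columns declared `not null` (`s_id`, `s_name`, `s_part`, `create_time`, `dl_ts`, `dl_s_sex`) become plain Avro types, while nullable columns become unions with `"null"`. The sketch below separates the two groups using Python's standard `json` module on a trimmed copy of the schema (only a few fields kept); it is an illustration, not part of hudi-cli.

```python
import json

# Trimmed copy of the schema printed by `fetch table schema`
SCHEMA_JSON = """
{
  "type": "record",
  "name": "student_mysql_cdc_hudi_fl_record",
  "fields": [
    {"name": "_hoodie_commit_time", "type": ["null", "string"], "default": null},
    {"name": "s_id", "type": "long"},
    {"name": "s_age", "type": ["null", "int"], "default": null},
    {"name": "create_time", "type": {"type": "long", "logicalType": "timestamp-micros"}},
    {"name": "dl_s_sex", "type": "string"}
  ]
}
"""


def split_fields_by_nullability(schema_text: str) -> tuple[list[str], list[str]]:
    """Return (required, nullable) field names of an Avro record schema.

    A field counts as nullable when its type is a union containing "null".
    """
    schema = json.loads(schema_text)
    required, nullable = [], []
    for field in schema["fields"]:
        field_type = field["type"]
        if isinstance(field_type, list) and "null" in field_type:
            nullable.append(field["name"])
        else:
            required.append(field["name"])
    return required, nullable


if __name__ == "__main__":
    required, nullable = split_fields_by_nullability(SCHEMA_JSON)
    print("required:", required)  # ['s_id', 'create_time', 'dl_s_sex']
    print("nullable:", nullable)  # ['_hoodie_commit_time', 's_age']
```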

commit

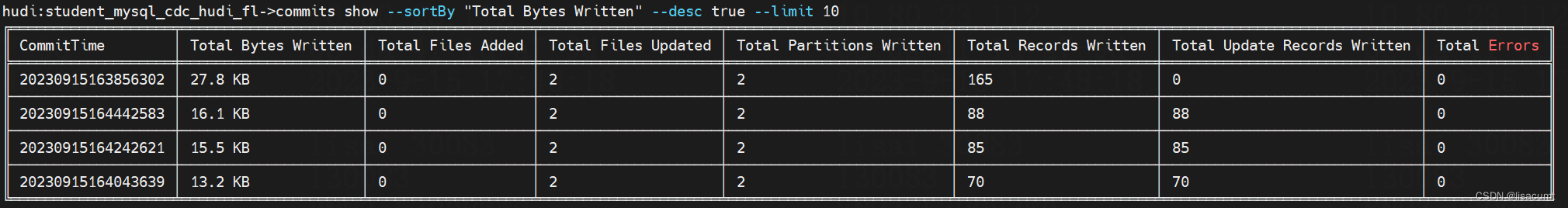

commits show

```
commits show --sortBy "Total Bytes Written" --desc true --limit 10
```
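Commands such as `commit showfiles --commit`, `compaction show` and `compaction showarchived` take an instant time like `20230915164442583`. As far as we know, recent Hudi releases format instant times as `yyyyMMddHHmmssSSS` (millisecond precision), while older tables may use the 14-digit `yyyyMMddHHmmss` form. The sketch below decodes both layouts into a naive `datetime`; which time zone that value refers to depends on how the table was configured, so treat the interpretation as an assumption.

```python
from datetime import datetime


def parse_instant_time(instant: str) -> datetime:
    """Convert a Hudi instant time string into a naive datetime.

    Assumes the yyyyMMddHHmmss[SSS] layout: 17 digits (millisecond
    precision) or 14 digits (second precision, older tables).
    """
    if not instant.isdigit() or len(instant) not in (14, 17):
        raise ValueError(f"unexpected instant time format: {instant!r}")
    millis = int(instant[14:17]) if len(instant) == 17 else 0
    return datetime(
        int(instant[0:4]), int(instant[4:6]), int(instant[6:8]),
        int(instant[8:10]), int(instant[10:12]), int(instant[12:14]),
        millis * 1000,  # datetime expects microseconds
    )


if __name__ == "__main__":
    print(parse_instant_time("20230915164442583"))  # 2023-09-15 16:44:42.583000
```

Because instant times are fixed-width digit strings, sorting them as strings also sorts them chronologically, which is why the CLI can order commits by instant.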

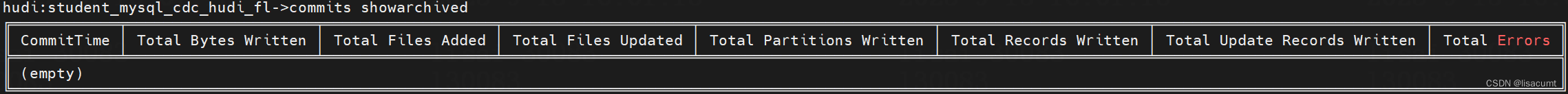

commits showarchived

```
commits showarchived
```

commit showfiles

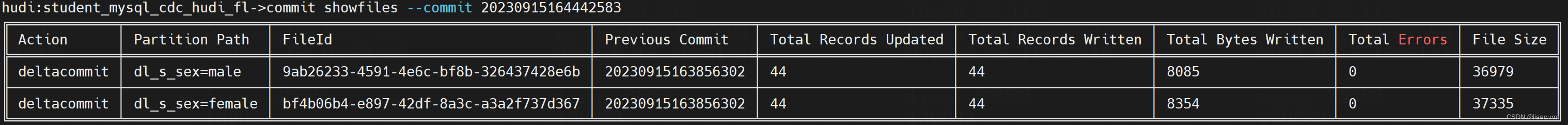

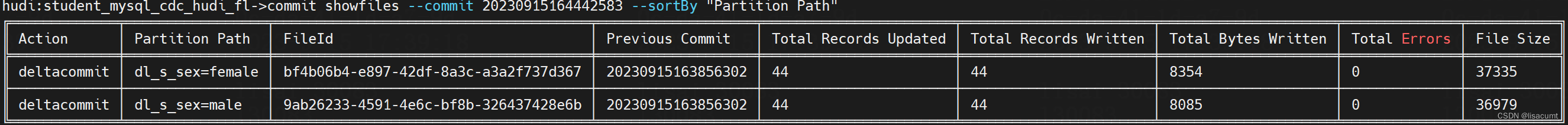

```
commit showfiles --commit 20230915164442583
commit showfiles --commit 20230915164442583 --sortBy "Partition Path"
```

commit showpartitions

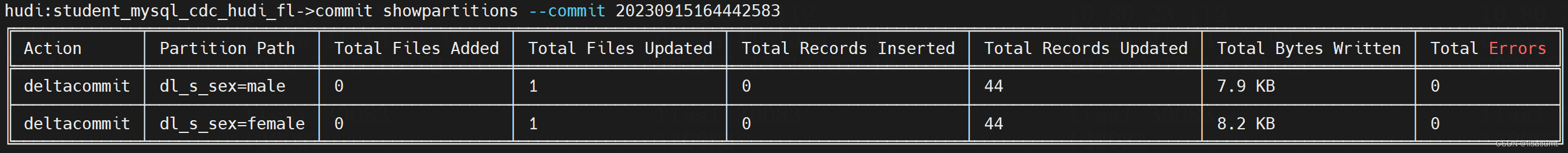

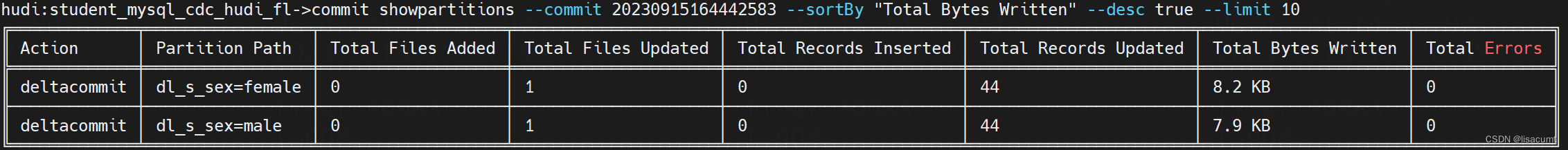

```
commit showpartitions --commit 20230915164442583
commit showpartitions --commit 20230915164442583 --sortBy "Total Bytes Written" --desc true --limit 10
```

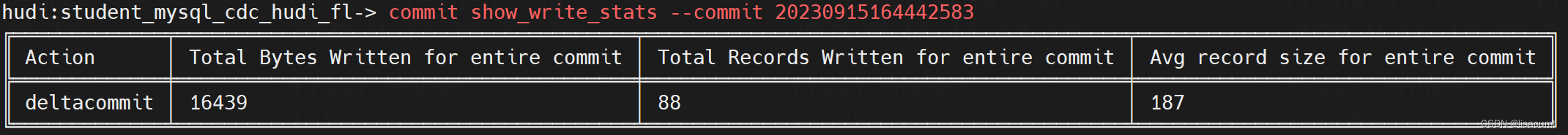

commit show_write_stats

```
commit show_write_stats --commit 20230915164442583
```

File System View

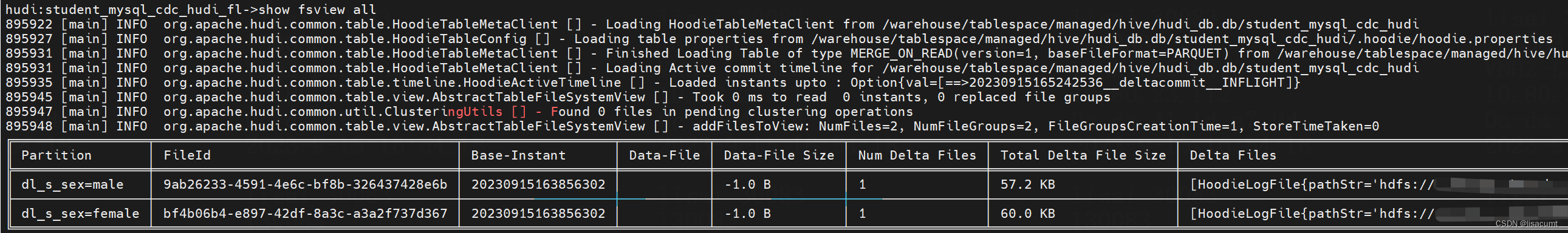

show fsview all

```
show fsview all
```

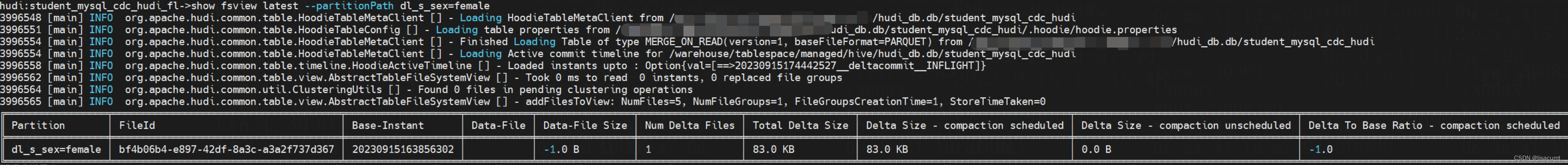

show fsview latest

```
show fsview latest --partitionPath dl_s_sex=female
```
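`show fsview latest` reports, for each file group in the partition, only its latest file slice. Below is a rough sketch of that selection restricted to base files. It assumes base files are named `{fileId}_{writeToken}_{instantTime}.parquet`, skips log files entirely, and uses made-up file names, so it illustrates the idea rather than reproducing Hudi's file-system view.

```python
from pathlib import PurePosixPath


def latest_base_files(file_names: list[str]) -> dict[str, str]:
    """Map each file group (fileId) to its newest base file name.

    Assumes base files are named {fileId}_{writeToken}_{instantTime}.parquet.
    Log files are skipped, so this is only a simplified view of a file slice.
    """
    latest: dict[str, tuple[str, str]] = {}
    for name in file_names:
        path = PurePosixPath(name)
        if path.suffix != ".parquet":
            continue  # skip log files and anything that is not a base file
        file_id, _write_token, instant = path.stem.rsplit("_", 2)
        # Instant times are fixed-width digit strings, so comparing them
        # as strings orders them chronologically.
        if file_id not in latest or instant > latest[file_id][0]:
            latest[file_id] = (instant, name)
    return {file_id: name for file_id, (_, name) in latest.items()}


if __name__ == "__main__":
    # Hypothetical file names, for illustration only
    files = [
        "f1_0-1-0_20230915163856302.parquet",
        "f1_0-1-0_20230915174042680.parquet",
        "f2_1-1-0_20230915163856302.parquet",
        ".f1_20230915174042680.log.1_0-1-0",
    ]
    for file_id, name in sorted(latest_base_files(files).items()):
        print(file_id, name)
```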

Log File

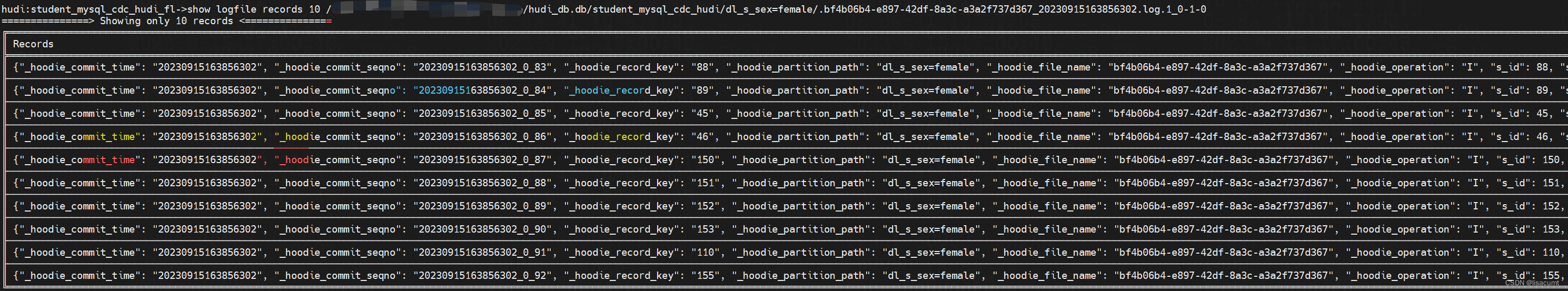

show logfile records

```
# Note: 10 is the number of records to fetch
show logfile records 10 /xxx/hudi_db.db/student_mysql_cdc_hudi/dl_s_sex=female/.bf4b06b4-e897-42df-8a3c-a3a2f737d367_20230915163856302.log.1_0-1-0
```

The records are printed as JSON:

```json
{
  "_hoodie_commit_time": "20230915163856302",
  "_hoodie_commit_seqno": "20230915163856302_0_83",
  "_hoodie_record_key": "88",
  "_hoodie_partition_path": "dl_s_sex=female",
  "_hoodie_file_name": "bf4b06b4-e897-42df-8a3c-a3a2f737d367",
  "_hoodie_operation": "I",
  "s_id": 88,
  "s_name": "傅亮",
  "s_age": 4,
  "s_sex": "female",
  "s_part": "2017/11/20",
  "create_time": 790128367000000,
  "dl_ts": -28800000000,
  "dl_s_sex": "female"
}
```
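According to the schema, `create_time` and `dl_ts` are Avro `timestamp-micros` values, so the log reader prints them as raw microseconds since the Unix epoch. Below is a minimal decoder using only the standard library. Read as UTC, `dl_ts = -28800000000` is exactly eight hours before the epoch, i.e. `1970-01-01 00:00:00` at UTC+8; this suggests (an assumption we have not verified) that an epoch-zero local time was written from a UTC+8 environment.

```python
from datetime import datetime, timedelta, timezone

UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def micros_to_datetime(micros: int, tz: timezone = timezone.utc) -> datetime:
    """Decode an Avro timestamp-micros value (microseconds since the Unix epoch)."""
    # Integer timedelta arithmetic avoids float rounding and handles negative values.
    return (UNIX_EPOCH + timedelta(microseconds=micros)).astimezone(tz)


if __name__ == "__main__":
    utc_plus_8 = timezone(timedelta(hours=8))
    print(micros_to_datetime(790128367000000))           # 1995-01-15 00:06:07+00:00
    print(micros_to_datetime(-28800000000))              # 1969-12-31 16:00:00+00:00
    print(micros_to_datetime(-28800000000, utc_plus_8))  # 1970-01-01 00:00:00+08:00
```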

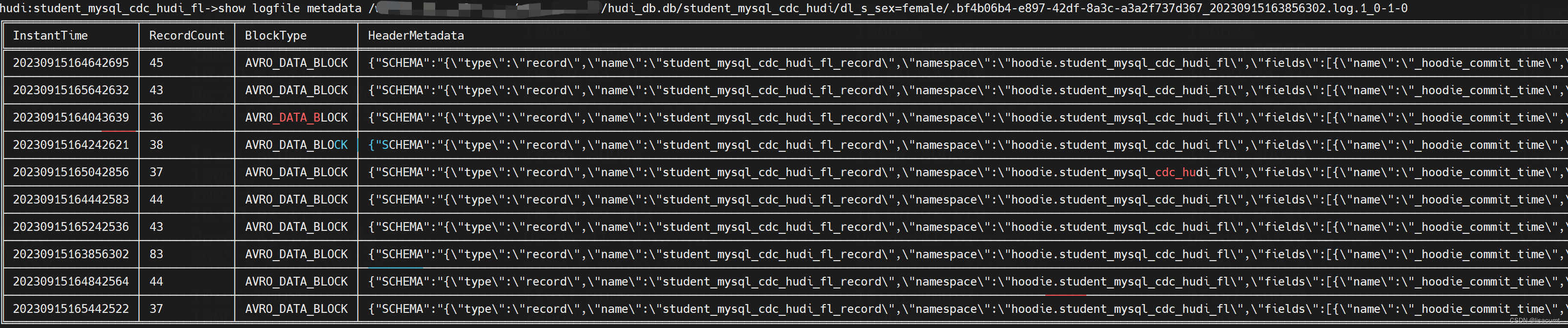

show logfile metadata

```
show logfile metadata /xxx/xxx/hive/hudi_db.db/student_mysql_cdc_hudi/dl_s_sex=female/dl_create_time_yyyy=1971/dl_create_time_mm=03/.dadac2dd-7e5e-46c3-9b27-f1f03e04a90c_20230915151426134.log.1_0
```

The FooterMetadata column is also cut off in the screenshot. The full metadata content is:

```json
{
  "SCHEMA": "{\"type\":\"record\",\"name\":\"student_mysql_cdc_hudi_fl_record\",\"namespace\":\"hoodie.student_mysql_cdc_hudi_fl\",\"fields\":[{\"name\":\"_hoodie_commit_time\",\"type\":[\"null\",\"string\"],\"doc\":\"\",\"default\":null},{\"name\":\"_hoodie_commit_seqno\",\"type\":[\"null\",\"string\"],\"doc\":\"\",\"default\":null},{\"name\":\"_hoodie_record_key\",\"type\":[\"null\",\"string\"],\"doc\":\"\",\"default\":null},{\"name\":\"_hoodie_partition_path\",\"type\":[\"null\",\"string\"],\"doc\":\"\",\"default\":null},{\"name\":\"_hoodie_file_name\",\"type\":[\"null\",\"string\"],\"doc\":\"\",\"default\":null},{\"name\":\"_hoodie_operation\",\"type\":[\"null\",\"string\"],\"doc\":\"\",\"default\":null},{\"name\":\"s_id\",\"type\":\"long\"},{\"name\":\"s_name\",\"type\":\"string\"},{\"name\":\"s_age\",\"type\":[\"null\",\"int\"],\"default\":null},{\"name\":\"s_sex\",\"type\":[\"null\",\"string\"],\"default\":null},{\"name\":\"s_part\",\"type\":\"string\"},{\"name\":\"create_time\",\"type\":{\"type\":\"long\",\"logicalType\":\"timestamp-micros\"}},{\"name\":\"dl_ts\",\"type\":{\"type\":\"long\",\"logicalType\":\"timestamp-micros\"}},{\"name\":\"dl_s_sex\",\"type\":\"string\"}]}",
  "INSTANT_TIME": "20230915164442583"
}
```
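The `SCHEMA` entry is itself an Avro schema serialized as a JSON string inside the JSON object, so it has to be decoded twice. The sketch below works on a trimmed copy of that output (only two fields kept). Note also that `INSTANT_TIME` (`20230915164442583`) differs from the instant in the file name (`20230915151426134`); our understanding, not verified here, is that the file name carries the base instant of the file slice while each block's metadata records the instant that appended the block.

```python
import json

# Trimmed copy of the `show logfile metadata` output: SCHEMA is a JSON string
HEADER_TEXT = (
    r'{"SCHEMA": "{\"type\":\"record\",\"name\":\"student_mysql_cdc_hudi_fl_record\",'
    r'\"fields\":[{\"name\":\"s_id\",\"type\":\"long\"},'
    r'{\"name\":\"dl_s_sex\",\"type\":\"string\"}]}",'
    r' "INSTANT_TIME": "20230915164442583"}'
)


def decode_log_header(text: str) -> tuple[str, list[str]]:
    """Return (instant_time, field_names) from log block metadata JSON."""
    header = json.loads(text)
    # Second decode: SCHEMA holds an Avro schema serialized as a JSON string
    schema = json.loads(header["SCHEMA"])
    return header["INSTANT_TIME"], [field["name"] for field in schema["fields"]]


if __name__ == "__main__":
    instant, fields = decode_log_header(HEADER_TEXT)
    print(instant, fields)  # 20230915164442583 ['s_id', 'dl_s_sex']
```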

diff

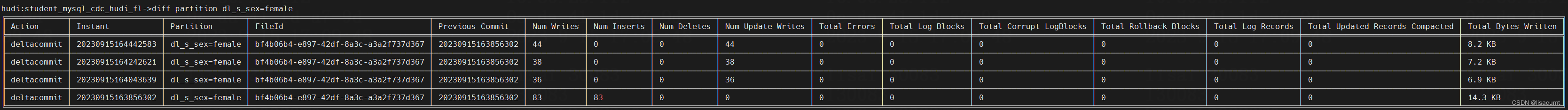

diff partition

```
diff partition dl_s_sex=female
```

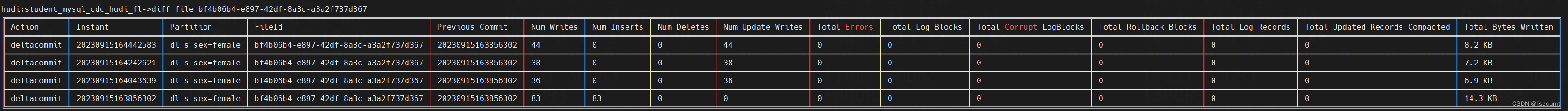

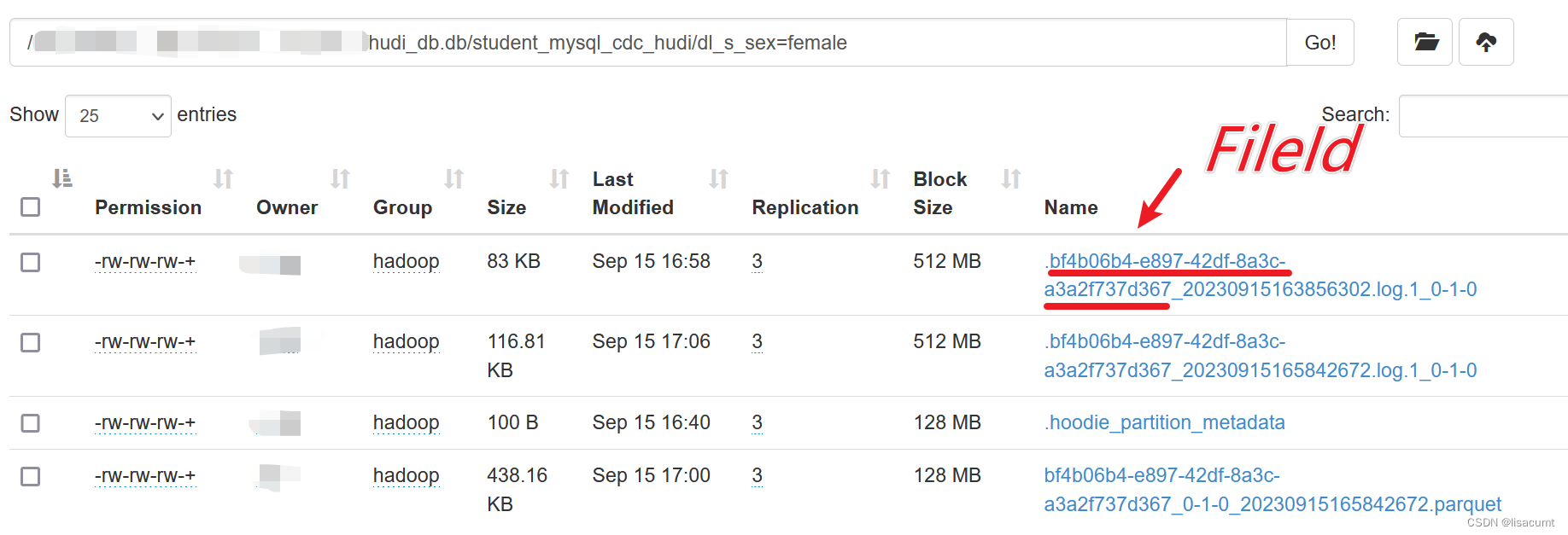

diff file

```
# A FileID is required; it is part of the log file name
# e.g. for the log file: .bf4b06b4-e897-42df-8a3c-a3a2f737d367_20230915163856302.log.1_0-1-0
diff file bf4b06b4-e897-42df-8a3c-a3a2f737d367
```
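Since `diff file` needs the FileID, it can help to split a log file name into its parts programmatically. The regular expression below is modelled only on the names seen in this article, `.{fileId}_{baseInstantTime}.log.{version}_{writeToken}`; the field labels are our own, and Hudi's internal parser may accept variants this sketch rejects.

```python
import re
from typing import NamedTuple, Optional

# Modelled on the log file names seen in this article:
#   .{fileId}_{baseInstantTime}.log.{version}_{writeToken}
LOG_FILE_RE = re.compile(
    r"^\.(?P<file_id>.+?)_(?P<base_instant>\d+)\.log\.(?P<version>\d+)"
    r"(?:_(?P<write_token>[\d-]+))?$"
)


class LogFileName(NamedTuple):
    file_id: str
    base_instant: str
    version: int
    write_token: Optional[str]


def parse_log_file_name(name: str) -> LogFileName:
    """Split a log file name into FileID, base instant, version and write token."""
    match = LOG_FILE_RE.match(name)
    if match is None:
        raise ValueError(f"not a log file name: {name!r}")
    return LogFileName(
        file_id=match["file_id"],
        base_instant=match["base_instant"],
        version=int(match["version"]),
        write_token=match["write_token"],
    )


if __name__ == "__main__":
    parsed = parse_log_file_name(
        ".bf4b06b4-e897-42df-8a3c-a3a2f737d367_20230915163856302.log.1_0-1-0"
    )
    print(parsed.file_id)  # the value passed to `diff file`
```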

rollbacks

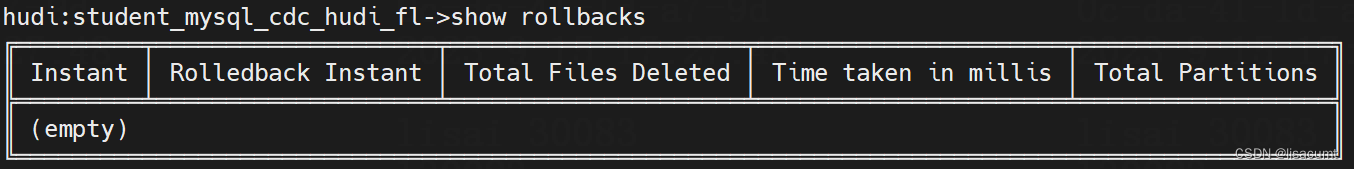

show rollbacks

```
show rollbacks
```

stats

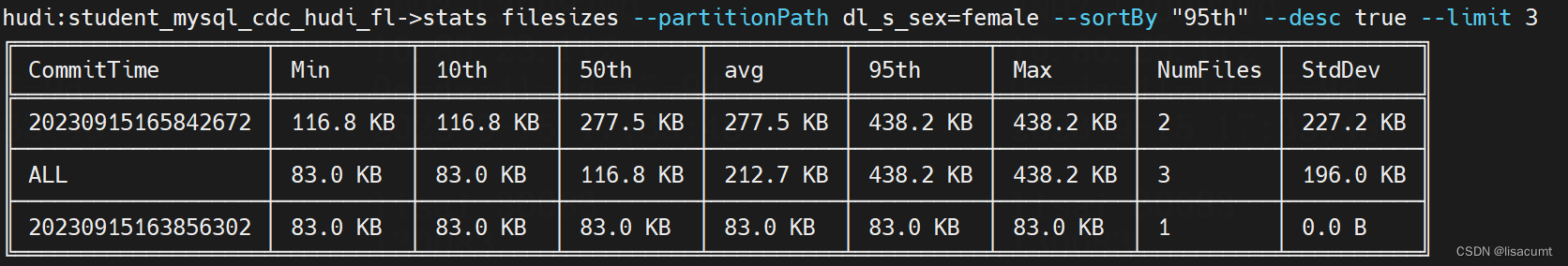

stats filesizes

```
stats filesizes --partitionPath dl_s_sex=female --sortBy "95th" --desc true --limit 3
```
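`stats filesizes` summarizes the distribution of file sizes, including percentile columns such as `95th` (the `--sortBy` key used above). To show what such columns mean, the sketch below computes similar statistics for a list of sizes using linear interpolation between ranks. Hudi computes them with its own histogram implementation, whose percentile estimator may differ slightly, and the sizes in the usage example are made up.

```python
import math
import statistics


def percentile(sorted_values: list[int], q: float) -> float:
    """Percentile with linear interpolation between ranks (q in [0, 1])."""
    pos = q * (len(sorted_values) - 1)
    lower, upper = math.floor(pos), math.ceil(pos)
    return sorted_values[lower] + (sorted_values[upper] - sorted_values[lower]) * (pos - lower)


def summarize_file_sizes(sizes: list[int]) -> dict[str, float]:
    """Summary in the spirit of `stats filesizes`; the estimator is an assumption."""
    if not sizes:
        raise ValueError("no file sizes given")
    values = sorted(sizes)
    return {
        "Min": values[0],
        "10th": percentile(values, 0.10),
        "50th": percentile(values, 0.50),
        "avg": statistics.fmean(values),
        "95th": percentile(values, 0.95),
        "Max": values[-1],
        "NumFiles": len(values),
    }


if __name__ == "__main__":
    # Hypothetical base-file sizes in bytes for one partition
    sizes = [434_000, 435_100, 436_900, 440_200, 1_250_000]
    for column, value in summarize_file_sizes(sizes).items():
        print(f"{column:>8}: {value:,.0f}")
```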

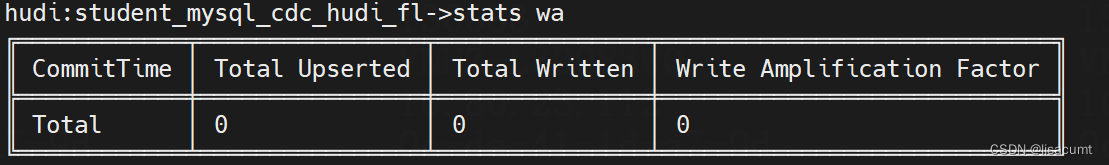

stats wa

```
stats wa
```
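The help text describes `stats wa` as the ratio between records upserted and records actually written. The sketch below assumes the factor is computed as records written divided by records upserted (so values above 1 indicate amplification) and reports 0 for commits with no upserts; check this against the Hudi version you run. `CommitWriteStats`, its fields, and the numbers in the example are hypothetical.

```python
from typing import NamedTuple


class CommitWriteStats(NamedTuple):
    instant: str
    records_upserted: int  # updated records in this commit (hypothetical field)
    records_written: int   # all records written by this commit (hypothetical field)


def write_amplification(written: int, upserted: int) -> float:
    """Records written per upserted record; 0.0 when nothing was upserted."""
    return written / upserted if upserted > 0 else 0.0


def summarize_wa(commits: list[CommitWriteStats]) -> list[tuple[str, float]]:
    """Per-commit factors followed by an overall factor over all commits."""
    rows = [(c.instant, write_amplification(c.records_written, c.records_upserted)) for c in commits]
    total_written = sum(c.records_written for c in commits)
    total_upserted = sum(c.records_upserted for c in commits)
    rows.append(("Total", write_amplification(total_written, total_upserted)))
    return rows


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only
    commits = [
        CommitWriteStats("20230915163856302", records_upserted=100, records_written=1000),
        CommitWriteStats("20230915164442583", records_upserted=0, records_written=50),
    ]
    for instant, factor in summarize_wa(commits):
        print(instant, factor)
```

A factor of 10 in this sketch would mean ten records were rewritten for every record actually updated, for example when small updates force whole files to be rewritten.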

compaction

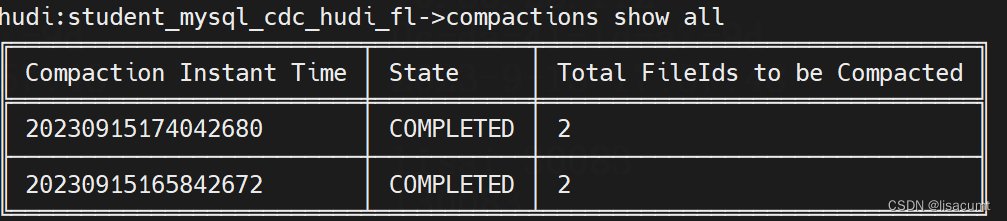

compactions show all

```
compactions show all
```

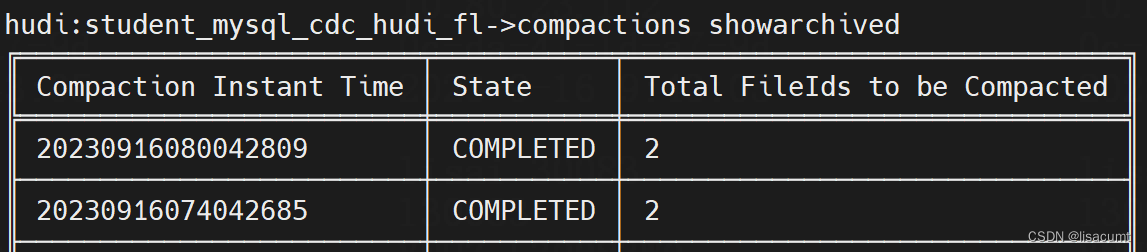

compactions showarchived

```
compactions showarchived
```

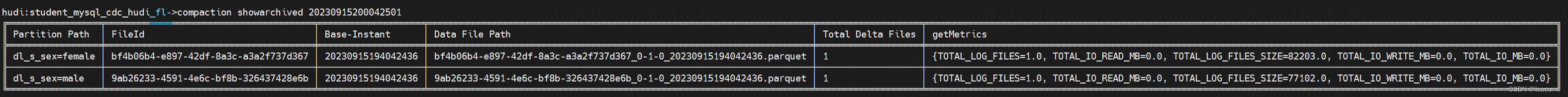

compaction showarchived

```
compaction showarchived 20230915200042501
```

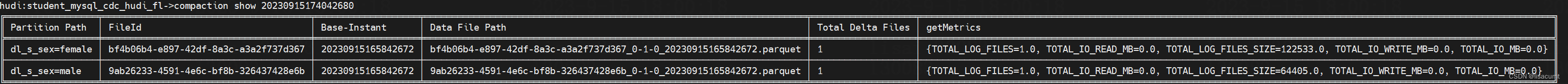

compaction show

```
compaction show 20230915174042680
```
