Preparation:

1. Download OpenCV

Link: Releases - OpenCV

I downloaded opencv-4.5.5 and stored it at:

2. Download OpenVINO

Link: https://storage.openvinotoolkit.org/repositories/openvino/packages/2023.0.1/

I stored it at:

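- Header files required by the C++ preprocessor: the include folder

- .lib files required by the C++ linker: the lib folder

- Dynamic link libraries (DLLs) required by the executable (*.exe): the bin folder

- Third-party dependency libraries of the OpenVINO runtime: the 3rdparty folder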

Create a new project

The code for main.cpp is as follows:

#include <iostream>

#include <string>

#include <vector>

#include <openvino/openvino.hpp> //openvino header file

#include <opencv2/opencv.hpp> //opencv header file

#include <direct.h>

#include <stdio.h>

#include <time.h>

std::vector<cv::Scalar> colors = { cv::Scalar(0, 0, 255) , cv::Scalar(0, 255, 0) , cv::Scalar(255, 0, 0) ,

cv::Scalar(255, 100, 50) , cv::Scalar(50, 100, 255) , cv::Scalar(255, 50, 100) };

const std::vector<std::string> class_names = {

"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

"hair drier", "toothbrush" };

using namespace cv;

using namespace dnn;

// Keep the ratio before resize

Mat letterbox(const cv::Mat& source)

{

int col = source.cols;

int row = source.rows;

int _max = MAX(col, row);

Mat result = Mat::zeros(_max, _max, CV_8UC3);

source.copyTo(result(Rect(0, 0, col, row)));

return result;

}

int main()

{

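clock_t start, end; // timing variables

std::cout << "8 steps in total" << std::endl;

char buffer[260]; // 260 is MAX_PATH on Windows

// _getcwd returns nullptr when the path does not fit into the buffer

if (_getcwd(buffer, sizeof(buffer)) == nullptr) {

std::cout << "Failed to get the current path" << std::endl;

return 1;

}

std::cout << "Current path: " << buffer << std::endl;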

// -------- Step 1. Initialize OpenVINO Runtime Core --------

std::cout << "1. Initialize OpenVINO Runtime Core" << std::endl;

ov::Core core;

// -------- Step 2. Compile the Model --------

std::cout << "2. Compile the Model" << std::endl;

String model_path = String(buffer) + "\\yolov8s.xml";

std::cout << "model_path:\t" << model_path << std::endl;

ov::CompiledModel compiled_model;

try {

compiled_model = core.compile_model(model_path, "CPU");

}

catch (std::exception& e) {

std::cout << "Compile the Model exception: " << e.what() << std::endl;

return 1; // non-zero exit code signals the failure

}

//auto compiled_model = core.compile_model("C:\\MyPro\\yolov8\\yolov8s.xml", "CPU");

// -------- Step 3. Create an Inference Request --------

std::cout << "3. Create an Inference Request" << std::endl;

ov::InferRequest infer_request = compiled_model.create_infer_request();

// -------- Step 4.Read a picture file and do the preprocess --------

std::cout << "4.Read a picture file and do the preprocess" << std::endl;

String img_path = String(buffer) + "\\test.jpg";

std::cout << "img_path:\t" << img_path << std::endl;

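Mat img = cv::imread(img_path);

// imread returns an empty Mat when the file is missing or unreadable

if (img.empty()) {

std::cout << "Failed to read image: " << img_path << std::endl;

return 1;

}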

// Preprocess the image

Mat letterbox_img = letterbox(img);

float scale = letterbox_img.size[0] / 640.0;

Mat blob = blobFromImage(letterbox_img, 1.0 / 255.0, Size(640, 640), Scalar(), true);

// -------- Step 5. Feed the blob into the input node of the Model -------

std::cout << "5. Feed the blob into the input node of the Model" << std::endl;

// Get input port for model with one input

auto input_port = compiled_model.input();

// Create tensor from external memory

ov::Tensor input_tensor(input_port.get_element_type(), input_port.get_shape(), blob.ptr(0));

// Set input tensor for model with one input

infer_request.set_input_tensor(input_tensor);

start = clock(); // start time

// -------- Step 6. Start inference --------

std::cout << "6. Start inference" << std::endl;

infer_request.infer();

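end = clock(); // end time

// clock() counts ticks, so convert to milliseconds via CLOCKS_PER_SEC

std::cout << "inference time = " << double(end - start) * 1000.0 / CLOCKS_PER_SEC << "ms" << std::endl;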

// -------- Step 7. Get the inference result --------

std::cout << "7. Get the inference result" << std::endl;

auto output = infer_request.get_output_tensor(0);

auto output_shape = output.get_shape();

std::cout << "The shape of output tensor:\t" << output_shape << std::endl;

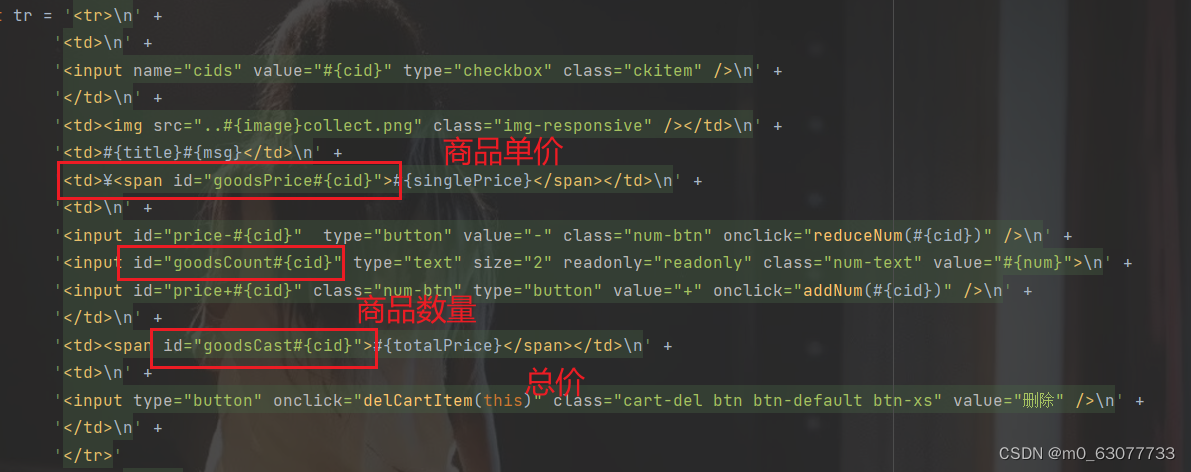

int rows = output_shape[2]; //8400

int dimensions = output_shape[1]; //84: box[cx, cy, w, h]+80 classes scores

std::cout << "8. Postprocess the result " << std::endl;

// -------- Step 8. Postprocess the result --------

float* data = output.data<float>();

Mat output_buffer(output_shape[1], output_shape[2], CV_32F, data);

transpose(output_buffer, output_buffer); //[8400,84]

float score_threshold = 0.25;

float nms_threshold = 0.5;

std::vector<int> class_ids;

std::vector<float> class_scores;

std::vector<Rect> boxes;

// Figure out the bbox, class_id and class_score

for (int i = 0; i < output_buffer.rows; i++) {

Mat classes_scores = output_buffer.row(i).colRange(4, 84);

Point class_id;

double maxClassScore;

minMaxLoc(classes_scores, 0, &maxClassScore, 0, &class_id);

if (maxClassScore > score_threshold) {

class_scores.push_back(maxClassScore);

class_ids.push_back(class_id.x);

float cx = output_buffer.at<float>(i, 0);

float cy = output_buffer.at<float>(i, 1);

float w = output_buffer.at<float>(i, 2);

float h = output_buffer.at<float>(i, 3);

int left = int((cx - 0.5 * w) * scale);

int top = int((cy - 0.5 * h) * scale);

int width = int(w * scale);

int height = int(h * scale);

boxes.push_back(Rect(left, top, width, height));

}

}

//NMS

std::vector<int> indices;

NMSBoxes(boxes, class_scores, score_threshold, nms_threshold, indices);

// -------- Visualize the detection results -----------

for (size_t i = 0; i < indices.size(); i++) {

int index = indices[i];

int class_id = class_ids[index];

rectangle(img, boxes[index], colors[class_id % 6], 2, 8);

std::string label = class_names[class_id] + ":" + std::to_string(class_scores[index]).substr(0, 4);

Size textSize = cv::getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, 0);

Rect textBox(boxes[index].tl().x, boxes[index].tl().y - 15, textSize.width, textSize.height + 5);

cv::rectangle(img, textBox, colors[class_id % 6], FILLED);

putText(img, label, Point(boxes[index].tl().x, boxes[index].tl().y - 5), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(255, 255, 255));

}

//namedWindow("YOLOv8 OpenVINO Inference C++ Demo", WINDOW_AUTOSIZE);

//imshow("YOLOv8 OpenVINO Inference C++ Demo", img);

//waitKey(0);

//destroyAllWindows();

cv::imwrite("detection.png", img);

std::cout << "detect success" << std::endl;

system("pause");

return 0;

}
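Step 8 of main.cpp turns each row of the transposed [8400, 84] output (center x, center y, width, height, then 80 class scores) into a box in the original image. Because letterbox pads the image to a square anchored at the top-left corner, a single scale factor is enough to map back from the 640x640 network input, and no offset is needed. The sketch below shows that arithmetic with the standard library only. The names `Box`, `letterbox_scale`, `decode_box`, and `best_class` are hypothetical helpers made up for illustration; they are not part of OpenCV or OpenVINO.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical plain-struct stand-in for cv::Rect.
struct Box {
    int left, top, width, height;
};

// Scale from the square network input back to the padded square image.
// The padded side is max(cols, rows), as in letterbox() in main.cpp.
float letterbox_scale(int cols, int rows, int input_size = 640) {
    return static_cast<float>(std::max(cols, rows)) / static_cast<float>(input_size);
}

// Convert a center-format box (cx, cy, w, h) in network coordinates
// to a top-left-format box in original image pixels.
Box decode_box(float cx, float cy, float w, float h, float scale) {
    Box b;
    b.left = static_cast<int>((cx - 0.5f * w) * scale);
    b.top = static_cast<int>((cy - 0.5f * h) * scale);
    b.width = static_cast<int>(w * scale);
    b.height = static_cast<int>(h * scale);
    return b;
}

// Index of the highest class score in one output row.
// The class scores start after the 4 box values.
std::size_t best_class(const std::vector<float>& row, std::size_t first_score = 4) {
    auto first = row.begin() + static_cast<std::ptrdiff_t>(first_score);
    auto it = std::max_element(first, row.end());
    return static_cast<std::size_t>(it - first);
}
```

For a 1280x720 image the scale is 1280 / 640 = 2, so a network-space box centered at (320, 320) with size 100x50 maps to left 540, top 590, width 200, height 100 in the original image.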

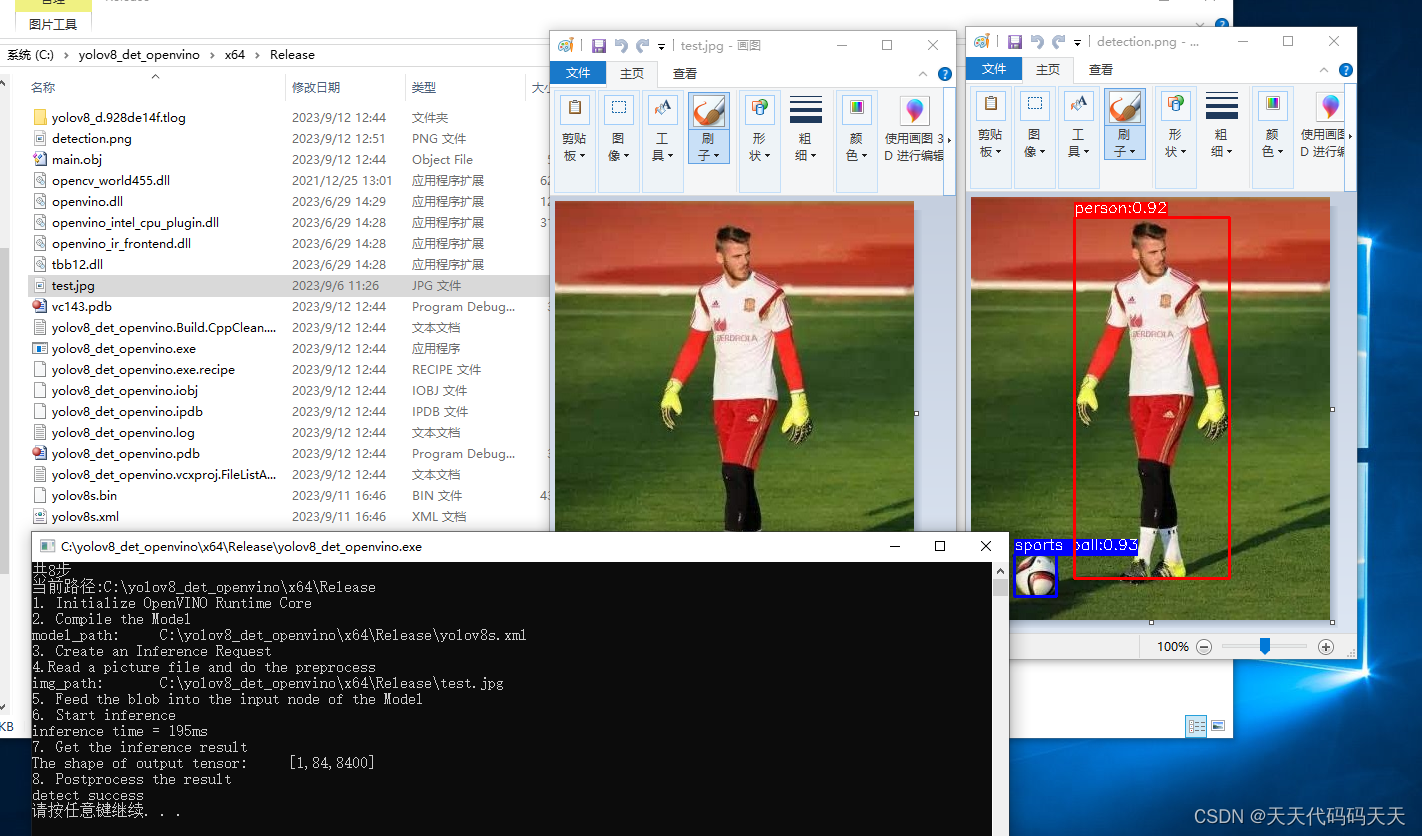
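The NMSBoxes call in main.cpp removes overlapping detections of the same object. To make clear what it does with score_threshold and nms_threshold, here is a minimal sketch of greedy non-maximum suppression written with the standard library only. It illustrates the general technique and is not OpenCV's actual implementation; `BoxF`, `iou`, and `greedy_nms` are hypothetical names chosen for this example.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical float box: top-left corner plus size.
struct BoxF {
    float left, top, width, height;
};

// Intersection over union of two boxes, in the range [0, 1].
float iou(const BoxF& a, const BoxF& b) {
    float x1 = std::max(a.left, b.left);
    float y1 = std::max(a.top, b.top);
    float x2 = std::min(a.left + a.width, b.left + b.width);
    float y2 = std::min(a.top + a.height, b.top + b.height);
    float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    float uni = a.width * a.height + b.width * b.height - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: drop low-score boxes, visit the rest from highest to lowest
// score, and keep a box only if it does not overlap an already kept box
// by more than iou_threshold. Returns indices into the input vectors.
std::vector<int> greedy_nms(const std::vector<BoxF>& boxes,
                            const std::vector<float>& scores,
                            float score_threshold,
                            float iou_threshold) {
    std::vector<int> order;
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        if (scores[i] > score_threshold) {
            order.push_back(static_cast<int>(i));
        }
    }
    std::sort(order.begin(), order.end(),
              [&scores](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> keep;
    for (int idx : order) {
        bool suppressed = false;
        for (int kept : keep) {
            if (iou(boxes[idx], boxes[kept]) > iou_threshold) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) {
            keep.push_back(idx);
        }
    }
    return keep;
}
```

With the thresholds used in main.cpp (0.25 and 0.5), two boxes whose IoU exceeds 0.5 collapse to the higher-scoring one, and any box scoring 0.25 or less is dropped before the overlap test.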

We build with the Release configuration directly.

Set the include directories and library directories

Include directories:

C:\Program Files\opencv-4.5.5\build\include;

C:\Program Files\opencv-4.5.5\build\include\opencv2;

C:\Program Files\openvino_2023.0.1.11005\runtime\include;

C:\Program Files\openvino_2023.0.1.11005\runtime\include\ie;

Library directories:

C:\Program Files\opencv-4.5.5\build\x64\vc15\lib;

C:\Program Files\openvino_2023.0.1.11005\runtime\lib\intel64\Release;

Add additional dependencies:

openvino.lib

opencv_world455.lib

Build

Configure the environment variables

Alternatively, copy the OpenCV and OpenVINO DLLs to C:\yolov8_det_openvino\x64\Release

Copy the test image and the model

Run the exe

Result

Code reference: GitHub - openvino-book/yolov8_openvino_cpp: YOLOv8 Inference C++ sample code based on OpenVINO C++ API

Download the exe program

Download the demo