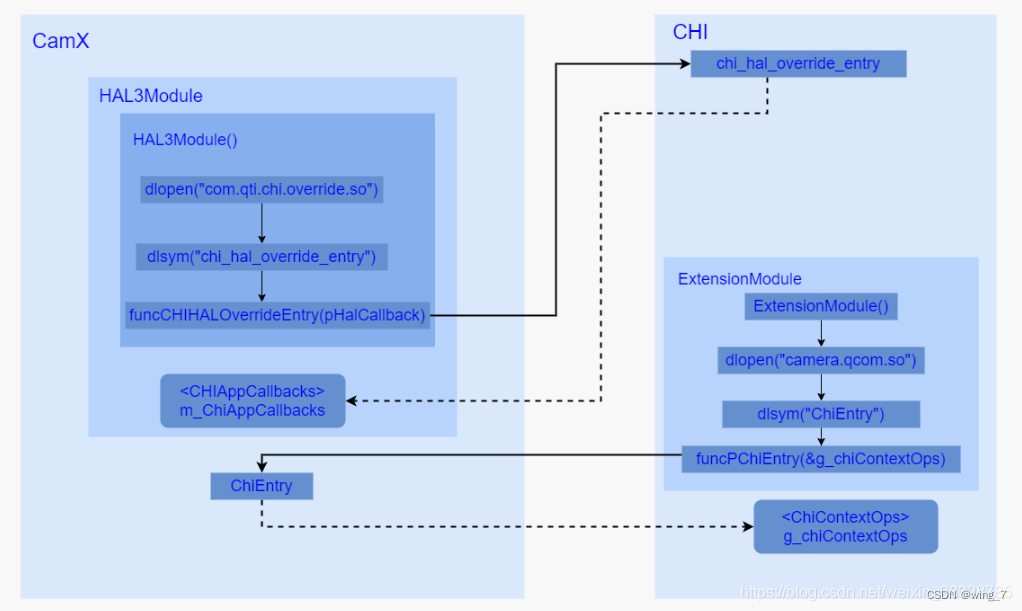

Camera HAL interfaces on the QNX platform

HAL3Module:chi_hal_override_entry

On Android, CamX opens com.qti.chi.override.so to register the HAL ops interface.

In the HAL3Module constructor in camxhal3module.cpp:

CHIHALOverrideEntry funcCHIHALOverrideEntry = reinterpret_cast<CHIHALOverrideEntry>( CamX::OsUtils::LibGetAddr(m_hChiOverrideModuleHandle, "chi_hal_override_entry"));

Path of the .so on QNX: ./camera_qcx/build/qnx/cdk_qcx/core/lib/aarch64/dll-le/com.qti.chi.override.so

/// @brief HAL Override entry

void chi_hal_override_entry(

chi_hal_callback_ops_t* callbacks)

{

ExtensionModule* pExtensionModule = ExtensionModule::GetInstance();

CHX_ASSERT(NULL != callbacks);

if (NULL != pExtensionModule)

{

callbacks->chi_get_num_cameras = chi_get_num_cameras;

callbacks->chi_get_camera_info = chi_get_camera_info;

callbacks->chi_get_info = chi_get_info;

callbacks->chi_initialize_override_session = chi_initialize_override_session;

callbacks->chi_finalize_override_session = chi_finalize_override_session;

callbacks->chi_override_process_request = chi_override_process_request;

callbacks->chi_teardown_override_session = chi_teardown_override_session;

callbacks->chi_extend_open = chi_extend_open;

callbacks->chi_extend_close = chi_extend_close;

callbacks->chi_remap_camera_id = chi_remap_camera_id;

callbacks->chi_modify_settings = chi_modify_settings;

callbacks->chi_get_default_request_settings = chi_get_default_request_settings;

callbacks->chi_override_flush = chi_override_flush;

callbacks->chi_override_signal_stream_flush = chi_override_signal_stream_flush;

callbacks->chi_override_dump = chi_override_dump;

callbacks->chi_get_physical_camera_info = chi_get_physical_camera_info;

callbacks->chi_is_stream_combination_supported = chi_is_stream_combination_supported;

callbacks->chi_is_concurrent_stream_combination_supported = chi_is_concurrent_stream_combination_supported;

#if CAMERA3_API_HIGH_PERF

callbacks->chi_override_start_recurrent_capture_requests = chi_override_start_recurrent_capture_requests;

callbacks->chi_override_stop_recurrent_capture_requests = chi_override_stop_recurrent_capture_requests;

callbacks->chi_override_set_settings = chi_override_set_settings;

callbacks->chi_override_activate_channel = chi_override_activate_channel;

#endif // CAMERA3_API_HIGH_PERF

}

}
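The registration pattern above (one exported entry symbol that fills a caller-owned table of function pointers) can be sketched in isolation. This is a minimal illustration, not the CamX code: `DemoHalCallbackOps`, `demo_override_entry`, and `LoadOverrideOps` are hypothetical names, the table is cut down to three slots, and the entry function is passed in directly instead of being resolved from the shared library with `LibGetAddr`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, trimmed-down stand-in for chi_hal_callback_ops_t.
struct DemoHalCallbackOps {
    int  (*get_num_cameras)();
    int  (*extend_open)(std::uint32_t cameraId);
    void (*extend_close)(std::uint32_t cameraId);
};

namespace demo_override {
// Illustrative implementations that would live inside the override library.
int  GetNumCameras() { return 2; }
int  ExtendOpen(std::uint32_t cameraId) { return (cameraId < 2U) ? 0 : -1; }
void ExtendClose(std::uint32_t /*cameraId*/) {}
}  // namespace demo_override

// Plays the role of chi_hal_override_entry: the only function the loader has
// to know about. It fills the caller's table with function pointers.
void demo_override_entry(DemoHalCallbackOps* callbacks) {
    assert(callbacks != nullptr);
    callbacks->get_num_cameras = demo_override::GetNumCameras;
    callbacks->extend_open     = demo_override::ExtendOpen;
    callbacks->extend_close    = demo_override::ExtendClose;
}

using DemoOverrideEntry = void (*)(DemoHalCallbackOps*);

// Plays the role of the HAL3Module constructor. In the real code the entry
// pointer is looked up by name in com.qti.chi.override.so; here it is passed
// in so the sketch runs without a shared library.
DemoHalCallbackOps LoadOverrideOps(DemoOverrideEntry entry) {
    DemoHalCallbackOps ops{};  // every slot starts out null
    if (entry != nullptr) {
        entry(&ops);
    }
    return ops;
}
```

Calling `LoadOverrideOps(demo_override_entry)` yields a table whose slots can be invoked directly, while a null entry pointer leaves every slot null, so a caller should check a slot before using it.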

CAMXHAL::Init

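Similar to CameraProvider::initialize in Android's hardware\interfaces\camera\provider\2.4\default\CameraProvider.cpp, this function initializes the camera and obtains the registered camera_common operation interface.

What it ultimately obtains are the interfaces registered by chi_hal_override_entry.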

/**

* @brief

* Initialize CAMX HAL and CSL.

*

* @return

* Status as defined in CamStatus_e.

*/

CamStatus_e CAMXHAL::Init()

{

CamStatus_e rc = CAMERA_SUCCESS;

const hw_module_t* hw_module = NULL;

CAM_TRACE_LOG(STREAM_MGR, "CAMXHAL::Init");

int ret = hw_get_module(CAMERA_HARDWARE_MODULE_ID, &hw_module);

if (0 != ret)

{

QCX_LOG(STREAM_MGR, ERROR, "Camera module not found");

rc = CAMERA_EFAILED;

}

else

{

m_CameraModule = reinterpret_cast<const camera_module_t*>(hw_module);

m_CameraModule->get_vendor_tag_ops(&m_Vtags);

ret = m_CameraModule->init();

if (0 != ret)

{

QCX_LOG(STREAM_MGR, ERROR, "Camera module init failed");

rc = CAMERA_EFAILED;

}

else

{

ret = set_camera_metadata_vendor_ops(const_cast<vendor_tag_ops_t*>(&m_Vtags));

if (0 != ret)

{

QCX_LOG(STREAM_MGR, ERROR, "set vendor tag ops failed (ret = %d)", ret);

rc = CAMERA_EFAILED;

}

else

{

MetaData metaData(&m_Vtags);

const char sectionName[] = "org.quic.camera.qtimer";

const char tagName[] = "timestamp";

uint32_t tagId = 0;

rc = metaData.GetTagId(tagName, sectionName, &tagId);

if (CAMERA_SUCCESS != rc)

{

QCX_LOG(STREAM_MGR, ERROR, "GetTagId failed, tagName = %s (result = 0x%x)",

tagName, rc);

}

else

{

QCX_LOG(STREAM_MGR, DEBUG, "GetTagId successful, time stamp tagId = %u", tagId);

m_TimeStampTagId = tagId;

}

}

}

if (CAMERA_SUCCESS == rc)

{

m_NumOfCameras = m_CameraModule->get_number_of_cameras();

if (m_NumOfCameras < 0)

{

QCX_LOG(STREAM_MGR, ERROR, "No camera found");

rc = CAMERA_EFAILED;

}

else

{

QCX_LOG(STREAM_MGR, HIGH, "Camera module init success, no. of camera: %u",

m_NumOfCameras);

}

}

if (CAMERA_SUCCESS == rc)

{

rc = BuildCameraIdMap();

if (CAMERA_SUCCESS != rc)

{

QCX_LOG(STREAM_MGR, ERROR, "Populating logical camera Ids failed(result = 0x%x)",

rc);

}

else

{

QCX_LOG(STREAM_MGR, DEBUG, "Logical camera Ids obtained");

}

}

}

CAM_TRACE_LOG(STREAM_MGR, "CAMXHAL::Init : End");

return rc;

}
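One step in CAMXHAL::Init that is easy to miss is the vendor tag lookup: the tag "timestamp" in section "org.quic.camera.qtimer" is resolved once and the id is cached in m_TimeStampTagId. The sketch below shows that resolve-once-and-cache idea under stated assumptions: `DemoVendorTagTable`, `DemoHalState`, and `CacheTimestampTag` are hypothetical names, the table is a plain `std::map` instead of the real vendor_tag_ops_t, and C++17 is assumed for `std::optional`.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Hypothetical stand-in for the vendor tag query interface.
class DemoVendorTagTable {
public:
    void Register(const std::string& sectionName, const std::string& tagName,
                  std::uint32_t tagId) {
        assert(!sectionName.empty() && !tagName.empty());
        m_tags[std::make_pair(sectionName, tagName)] = tagId;
    }

    // Same argument order as metaData.GetTagId(tagName, sectionName, ...).
    std::optional<std::uint32_t> GetTagId(const std::string& tagName,
                                          const std::string& sectionName) const {
        const auto it = m_tags.find(std::make_pair(sectionName, tagName));
        if (it == m_tags.end()) {
            return std::nullopt;  // unknown section/tag pair
        }
        return it->second;
    }

private:
    std::map<std::pair<std::string, std::string>, std::uint32_t> m_tags;
};

// Hypothetical holder for the cached id (m_TimeStampTagId in the original).
struct DemoHalState {
    std::uint32_t timeStampTagId = 0;
};

// Resolves the timestamp tag once; leaves the cached id untouched on failure.
bool CacheTimestampTag(const DemoVendorTagTable& tags, DemoHalState& state) {
    const auto id = tags.GetTagId("timestamp", "org.quic.camera.qtimer");
    if (!id) {
        return false;
    }
    state.timeStampTagId = *id;
    return true;
}
```

Caching the id at init time means later per-frame code can read the timestamp metadata by numeric tag instead of repeating a string lookup.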

CAMXHAL::OpenCamera

This calls chi_extend_open to open the camera.

The Android counterpart is QCamera2Factory::cameraDeviceOpen in hardware\qcom\camera\qcamera2\QCamera2Factory.cpp.

/**

* @brief

* Open camera corresponding Stream Session.

*

* @param pSession [in, out]

* pointer to Stream Session.

*

* @return

* Status as defined in CamStatus_e.

*/

CamStatus_e CAMXHAL::OpenCamera(StreamSession* pSession)

{

CamStatus_e rc = CAMERA_SUCCESS;

CamStatus_e rcMutex = CAMERA_SUCCESS;

rcMutex = OSAL_CriticalSectionEnter(m_CamxMutex);

CAM_TRACE_LOG(STREAM_MGR, "CAMXHAL::OpenCamera");

if (CAMERA_SUCCESS != rcMutex)

{

QCX_LOG(STREAM_MGR, ERROR, "Failed in OSAL_CriticalSectionEnter(rc=%u)", rcMutex);

rc = rcMutex;

}

else

{

hw_device_t* localDevice = nullptr;

uint32_t logicalId = 0xFFFFFFFFU;

for (uint32_t i = 0; i < pSession->m_NumInputs; i++)

{

if (m_CamIdMap.find(pSession->m_InputId[i]) == m_CamIdMap.end())

{

QCX_LOG(STREAM_MGR, ERROR, "Logical camera Id not found for physical CamId=%u",

pSession->m_InputId[i]);

rc = CAMERA_EBADPARAM;

break;

}

else

{

if (0xFFFFFFFFU == logicalId)

{

logicalId = m_CamIdMap[pSession->m_InputId[i]];

}

else

{

if (m_CamIdMap[pSession->m_InputId[i]] != logicalId)

{

QCX_LOG(STREAM_MGR, ERROR, "Logical camera Id %u for input Id %u is invalid",

logicalId, pSession->m_InputId[i]);

rc = CAMERA_EBADPARAM;

break;

}

}

}

}

if (CAMERA_SUCCESS == rc)

{

pSession->m_logicalCameraId = logicalId;

int ret = m_CameraModule->common.methods->open((const hw_module_t*)m_CameraModule,

std::to_string(logicalId).c_str(), &localDevice);

if ((0 != ret) || (nullptr == localDevice))

{

QCX_LOG(STREAM_MGR, ERROR, "Camera open failed for camera id: %u", logicalId);

rc = CAMERA_EFAILED;

}

else

{

QCX_LOG(STREAM_MGR, HIGH, "Camera opened for camera id: %u", logicalId);

camera3_device_t* camDevice = reinterpret_cast<camera3_device_t*>(localDevice);

m_CamDevices.insert(std::pair<uint32_t, camera3_device_t*>(logicalId, camDevice));

ret = camDevice->ops->initialize(camDevice, this);

if (0 != ret)

{

QCX_LOG(STREAM_MGR, ERROR, "Camera device initialize failed for"

"camera id: %u", logicalId);

rc = CAMERA_EFAILED;

}

else

{

QCX_LOG(STREAM_MGR, HIGH, "Camera device initialized for Camera Id: %u",

logicalId);

}

}

}

rcMutex = OSAL_CriticalSectionLeave(m_CamxMutex);

if (CAMERA_SUCCESS != rcMutex)

{

QCX_LOG(STREAM_MGR, ERROR, "Failed in OSAL_CriticalSectionLeave(rc=%u)",rcMutex);

if (CAMERA_SUCCESS == rc)

{

rc = rcMutex;

}

}

}

CAM_TRACE_LOG(STREAM_MGR, "CAMXHAL::OpenCamera : End");

return rc;

}
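The loop at the start of OpenCamera enforces one rule: every physical input id in the session must be present in m_CamIdMap, and all of them must map to the same logical camera id. A minimal sketch of just that rule follows; `ResolveLogicalId` and `kInvalidLogicalId` are hypothetical names, and the function returns the 0xFFFFFFFF sentinel on any failure rather than setting a separate status code.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

constexpr std::uint32_t kInvalidLogicalId = 0xFFFFFFFFU;

// Returns the single logical camera id shared by all inputs, or
// kInvalidLogicalId if an input is unknown, the inputs disagree,
// or the input list is empty.
std::uint32_t ResolveLogicalId(
    const std::map<std::uint32_t, std::uint32_t>& camIdMap,
    const std::vector<std::uint32_t>& inputIds) {
    std::uint32_t logicalId = kInvalidLogicalId;
    for (const std::uint32_t inputId : inputIds) {
        const auto it = camIdMap.find(inputId);
        if (it == camIdMap.end()) {
            return kInvalidLogicalId;  // no logical camera for this input
        }
        // The sentinel must never be stored as a real logical id.
        assert(it->second != kInvalidLogicalId);
        if (logicalId == kInvalidLogicalId) {
            logicalId = it->second;    // first input decides the logical id
        } else if (it->second != logicalId) {
            return kInvalidLogicalId;  // inputs belong to different cameras
        }
    }
    return logicalId;
}
```

With a map of `{0 -> 0, 1 -> 0, 2 -> 1}`, inputs `{0, 1}` resolve to logical id 0, while `{0, 2}` is rejected because the two inputs belong to different logical cameras.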

Initialization: camDevice->ops->initialize = CamX::initialize

m_HALCallbacks.ProcessCaptureResult = ProcessCaptureResult

CamX supplies the mapping for the function pointers used at the camera HAL layer.

The mapping is in vendor/qcom/proprietary/camx/src/core/hal/camxhal3entry.cpp:

static Dispatch g_dispatchHAL3(&g_jumpTableHAL3);

The same file defines the g_camera3DeviceOps variable:

static camera3_device_ops_t g_camera3DeviceOps =

{

CamX::initialize,

CamX::configure_streams,

NULL,

CamX::construct_default_request_settings,

CamX::process_capture_request,

NULL,

CamX::dump,

CamX::flush,

{0},

};

How does camera.provider jump into the camera HAL layer? Camera service calls the interface methods in camera provider. The call reaches processCaptureRequest(…) in hardware/interfaces/camera/device/3.2/default/CameraDeviceSession.cpp in camera provider, which ultimately calls

mDevice->ops->process_capture_request
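The jump from the provider into the HAL is nothing more than a call through a table of function pointers attached to the device. The sketch below shows that shape under stated assumptions: all `Demo*` names are hypothetical, the table has four slots instead of the full camera3_device_ops_t, and one slot is left null in the same way g_camera3DeviceOps leaves unused entries as NULL.

```cpp
#include <cassert>
#include <cstdint>

struct DemoCameraDevice;  // forward declaration

struct DemoCaptureRequest {
    std::uint32_t frameNumber;
};

// Hypothetical stand-in for camera3_device_ops_t.
struct DemoCameraDeviceOps {
    int (*initialize)(DemoCameraDevice*);
    int (*configure_streams)(DemoCameraDevice*, int numStreams);
    int (*register_stream_buffers)(DemoCameraDevice*);  // unused slot
    int (*process_capture_request)(DemoCameraDevice*, const DemoCaptureRequest*);
};

struct DemoCameraDevice {
    const DemoCameraDeviceOps* ops;
    bool                       configured;
    std::uint32_t              lastFrame;
};

namespace demo_hal {
int Initialize(DemoCameraDevice* dev) {
    assert(dev != nullptr);
    dev->configured = false;
    dev->lastFrame  = 0;
    return 0;
}
int ConfigureStreams(DemoCameraDevice* dev, int numStreams) {
    assert(dev != nullptr);
    if (numStreams <= 0) {
        return -1;
    }
    dev->configured = true;
    return 0;
}
int ProcessCaptureRequest(DemoCameraDevice* dev, const DemoCaptureRequest* req) {
    assert(dev != nullptr);
    if (!dev->configured || (req == nullptr)) {
        return -1;  // requests are rejected until streams are configured
    }
    dev->lastFrame = req->frameNumber;
    return 0;
}
}  // namespace demo_hal

// The HAL side publishes one static table, like g_camera3DeviceOps.
static const DemoCameraDeviceOps g_demoDeviceOps = {
    demo_hal::Initialize,
    demo_hal::ConfigureStreams,
    nullptr,
    demo_hal::ProcessCaptureRequest,
};

// What "open" hands back: a device whose ops pointer refers to the table.
DemoCameraDevice OpenDemoDevice() {
    DemoCameraDevice dev{&g_demoDeviceOps, false, 0};
    dev.ops->initialize(&dev);
    return dev;
}

// Provider side: the equivalent of mDevice->ops->process_capture_request.
int SubmitRequest(DemoCameraDevice* dev, std::uint32_t frameNumber) {
    const DemoCaptureRequest req{frameNumber};
    return dev->ops->process_capture_request(dev, &req);
}
```

The provider never links against the HAL functions by name; it only follows the `ops` pointer it received from open, which is why swapping the table is enough to redirect every call.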

initialize

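This method is called immediately after open. Its main job is to pass the upper layer's callback interface into the HAL; whenever data or an event is produced, CamX delivers it to the caller through these callbacks. The implementation is fairly simple.

initialize takes two parameters: the camera3_device_t structure obtained earlier from open, and the CameraDevice that implements camera3_callback_ops_t. The camera3_device_t structure is not the focus here, so the method's main work is to associate camera3_callback_ops_t with CamX. Once data is ready, it is passed back to the CameraDevice in Camera Provider through the callback methods in camera3_callback_ops_t. The flow can be summarized as follows:

1. A Camera3CbOpsRedirect object is instantiated and added to the g_HAL3Entry.m_cbOpsList queue so that it can be retrieved later when needed.

2. The addresses of the local process_capture_result and notify methods are assigned to the process_capture_result and notify function pointers in Camera3CbOpsRedirect.cbOps.

3. The callback structure pointer passed in from the upper layer, pCamera3CbOpsAPI, is saved in Camera3CbOpsRedirect.pCbOpsAPI, and pCamera3CbOpsAPI is then pointed at Camera3CbOpsRedirect.cbOps. JumpTableHAL3's initialize method passes pCamera3CbOpsAPI to HALDevice's m_pCamera3CbOps member, so m_pCamera3CbOps now points to CamX's local process_capture_result and notify methods.

After this setup, whenever CHI delivers data it first enters the local method ProcessCaptureResult, which reads HALDevice's m_pCamera3CbOps member and calls its process_capture_result method, that is, the process_capture_result defined in camxhal3entry.cpp. That method calls JumpTableHAL3.process_capture_result, which finally calls process_capture_result in Camera3CbOpsRedirect.pCbOpsAPI. This reaches the callback passed in from the Provider, and the data is delivered to CameraCaptureSession.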
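The redirection described in this section can be reduced to a small sketch. It is an illustration under stated assumptions, not the CamX implementation: `DemoCbOps` stands in for camera3_callback_ops_t with a single callback, `DemoCbOpsRedirect` is modeled on Camera3CbOpsRedirect, a `std::list` stands in for g_HAL3Entry.m_cbOpsList, and recovering the owning struct by casting the pointer to its first member is an assumption about how the trampoline finds its redirect entry.

```cpp
#include <cassert>
#include <list>

// Hypothetical stand-in for camera3_callback_ops_t.
struct DemoCbOps {
    void (*process_capture_result)(const DemoCbOps* self, int frameNumber);
};

// Framework (provider) side: the callbacks plus the state they update.
struct DemoFramework {
    DemoCbOps ops;  // must stay the first member (see the cast below)
    int       resultCount;
    int       lastFrame;
};

void FrameworkProcessCaptureResult(const DemoCbOps* self, int frameNumber) {
    // self points at DemoFramework::ops, the first member of a
    // standard-layout struct, so it converts back to the owning object.
    auto* fw = const_cast<DemoFramework*>(
        reinterpret_cast<const DemoFramework*>(self));
    fw->resultCount += 1;
    fw->lastFrame = frameNumber;
}

// Modeled on Camera3CbOpsRedirect.
struct DemoCbOpsRedirect {
    DemoCbOps        cbOps;      // local trampolines handed to the HAL device
    const DemoCbOps* pCbOpsAPI;  // original callbacks from the framework
};

// Local trampoline: looks up the redirect entry and forwards upward.
void LocalProcessCaptureResult(const DemoCbOps* self, int frameNumber) {
    const auto* redirect = reinterpret_cast<const DemoCbOpsRedirect*>(self);
    redirect->pCbOpsAPI->process_capture_result(redirect->pCbOpsAPI, frameNumber);
}

// Steps 1 to 3 of the list above: create the redirect entry, install the
// local trampoline, remember the framework callbacks, and return the pointer
// that the HAL device would store in m_pCamera3CbOps.
const DemoCbOps* DemoInitialize(std::list<DemoCbOpsRedirect>& registry,
                                const DemoCbOps* frameworkOps) {
    assert(frameworkOps != nullptr);
    registry.push_back(DemoCbOpsRedirect{{LocalProcessCaptureResult}, frameworkOps});
    return &registry.back().cbOps;  // std::list keeps element addresses stable
}
```

When the HAL side later calls `halOps->process_capture_result(halOps, 7)`, the call lands in the local trampoline first and only then reaches the framework callback, which gives the HAL entry layer one place to trace or intercept every result.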

Configuring the camera device streams

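While the camera app starts up, after obtaining and opening the camera device, the app calls CameraDevice.createCaptureSession to obtain a CameraCaptureSession. Through the standard Camera API v2 interface this notifies Camera Service, which calls CameraDeviceClient.endConfigure. That method uses the HIDL interface ICameraDeviceSession::configureStreams_3_4 to tell the Provider to handle the configuration request. Inside the Provider, the configure_streams method of the camera3_device_t structure obtained during open passes the stream configuration into CamX-CHI, which then completes the stream configuration. The rest of this section walks through CamX-CHI's implementation of the standard HAL3 configure_streams interface: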

Reference: Qualcomm Camera driver (3) -- configure_streams (Cam_韦's blog, CSDN)

vendor/qcom/proprietary/camx/src/core/hal/camxhaldevice.cpp

CamxResult HALDevice::ConfigureStreams(

Camera3StreamConfig* pStreamConfigs)

{

CamxResult result = CamxResultSuccess;

// Validate the incoming stream configurations

result = CheckValidStreamConfig(pStreamConfigs);

if ((StreamConfigModeConstrainedHighSpeed == pStreamConfigs->operationMode) ||

(StreamConfigModeSuperSlowMotionFRC == pStreamConfigs->operationMode))

{

SearchNumBatchedFrames (pStreamConfigs, &m_usecaseNumBatchedFrames, &m_FPSValue);

CAMX_ASSERT(m_usecaseNumBatchedFrames > 1);

}

else

{

// Not a HFR usecase batch frames value need to set to 1.

m_usecaseNumBatchedFrames = 1;

}

if (CamxResultSuccess == result)

{

ClearFrameworkRequestBuffer();

m_numPipelines = 0;

if (TRUE == m_bCHIModuleInitialized)

{

GetCHIAppCallbacks()->chi_teardown_override_session(reinterpret_cast<camera3_device*>(&m_camera3Device), 0, NULL);

}

m_bCHIModuleInitialized = CHIModuleInitialize(pStreamConfigs);

if (FALSE == m_bCHIModuleInitialized)

{

CAMX_LOG_ERROR(CamxLogGroupHAL, "CHI Module failed to configure streams");

result = CamxResultEFailed;

}

else

{

CAMX_LOG_VERBOSE(CamxLogGroupHAL, "CHI Module configured streams ... CHI is in control!");

}

}

return result;

}

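1) If streams were configured before, m_bCHIModuleInitialized is already set, so the existing session is torn down first.

2) CHIModuleInitialize, defined in the same file, is then called and its return value is stored in m_bCHIModuleInitialized.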
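The two notes above describe a teardown-then-reinitialize rule for repeated stream configuration. A minimal sketch of that rule follows; `DemoOverrideModule` and `DemoHalDevice` are hypothetical stand-ins for the CHI override callbacks and HALDevice, and stream validation is reduced to checking that the stream count is positive.

```cpp
#include <cassert>

// Hypothetical stand-in for the CHI override callbacks.
struct DemoOverrideModule {
    int  initializeCalls = 0;
    int  teardownCalls   = 0;
    bool acceptConfig    = true;

    bool InitializeSession(int numStreams) {
        ++initializeCalls;
        return acceptConfig && (numStreams > 0);
    }
    void TeardownSession() { ++teardownCalls; }
};

class DemoHalDevice {
public:
    explicit DemoHalDevice(DemoOverrideModule* pOverride) : m_pOverride(pOverride) {
        assert(pOverride != nullptr);
    }

    bool ConfigureStreams(int numStreams) {
        if (numStreams <= 0) {
            return false;  // validation failed: the current session is left alone
        }
        if (m_sessionActive) {
            // A previous configuration exists, so its session goes first.
            m_pOverride->TeardownSession();
        }
        // The result of the new initialization replaces the old flag.
        m_sessionActive = m_pOverride->InitializeSession(numStreams);
        return m_sessionActive;
    }

    bool IsSessionActive() const { return m_sessionActive; }

private:
    DemoOverrideModule* m_pOverride;
    bool                m_sessionActive = false;
};
```

Configuring twice in a row therefore produces two initialize calls and exactly one teardown, and a rejected second configuration leaves the device without an active session.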

BOOL HALDevice::CHIModuleInitialize(

Camera3StreamConfig* pStreamConfigs)

{

BOOL isOverrideEnabled = FALSE;

if (TRUE == HAL3Module::GetInstance()->IsCHIOverrideModulePresent())

{

/// @todo (CAMX-1518) Handle private data from Override module

VOID* pPrivateData;

chi_hal_callback_ops_t* pCHIAppCallbacks = GetCHIAppCallbacks();

pCHIAppCallbacks->chi_initialize_override_session(GetCameraId(),

reinterpret_cast<const camera3_device_t*>(&m_camera3Device),

&m_HALCallbacks,

reinterpret_cast<camera3_stream_configuration_t*>(pStreamConfigs),

&isOverrideEnabled,

&pPrivateData);

}

return isOverrideEnabled;

}

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxextensioninterface.cpp

static CDKResult chi_initialize_override_session(

uint32_t cameraId,

const camera3_device_t* camera3_device,

const chi_hal_ops_t* chiHalOps,

camera3_stream_configuration_t* stream_config,

int* override_config,

void** priv)

{

ExtensionModule* pExtensionModule = ExtensionModule::GetInstance();

pExtensionModule->InitializeOverrideSession(cameraId, camera3_device, chiHalOps, stream_config, override_config, priv);

return CDKResultSuccess;

}

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxextensionmodule.cpp

CDKResult ExtensionModule::InitializeOverrideSession(

uint32_t logicalCameraId,

const camera3_device_t* pCamera3Device,

const chi_hal_ops_t* chiHalOps,

camera3_stream_configuration_t* pStreamConfig,

int* pIsOverrideEnabled,

VOID** pPrivate)

{

CDKResult result = CDKResultSuccess;

UINT32 modeCount = 0;

ChiSensorModeInfo* pAllModes = NULL;

UINT32 fps = *m_pDefaultMaxFPS;

BOOL isVideoMode = FALSE;

uint32_t operation_mode;

static BOOL fovcModeCheck = EnableFOVCUseCase();

UsecaseId selectedUsecaseId = UsecaseId::NoMatch;

UINT minSessionFps = 0;

UINT maxSessionFps = 0;

*pPrivate = NULL;

*pIsOverrideEnabled = FALSE;

m_aFlushInProgress = FALSE;

m_firstResult = FALSE;

m_hasFlushOccurred = FALSE;

if (NULL == m_hCHIContext)

{

m_hCHIContext = g_chiContextOps.pOpenContext();

}

ChiVendorTagsOps vendorTagOps = { 0 };

g_chiContextOps.pTagOps(&vendorTagOps);

operation_mode = pStreamConfig->operation_mode >> 16;

operation_mode = operation_mode & 0x000F;

pStreamConfig->operation_mode = pStreamConfig->operation_mode & 0xFFFF;

for (UINT32 stream = 0; stream < pStreamConfig->num_streams; stream++)

{

if (0 != (pStreamConfig->streams[stream]->usage & GrallocUsageHwVideoEncoder))

{

isVideoMode = TRUE;

break;

}

}

if ((isVideoMode == TRUE) && (operation_mode != 0))

{

UINT32 numSensorModes = m_logicalCameraInfo[logicalCameraId].m_cameraCaps.numSensorModes;

CHISENSORMODEINFO* pAllSensorModes = m_logicalCameraInfo[logicalCameraId].pSensorModeInfo;

if ((operation_mode - 1) >= numSensorModes)

{

result = CDKResultEOverflow;

CHX_LOG_ERROR("operation_mode: %d, numSensorModes: %d", operation_mode, numSensorModes);

}

else

{

fps = pAllSensorModes[operation_mode - 1].frameRate;

}

}

m_pResourcesUsedLock->Lock();

if (m_totalResourceBudget > CostOfAnyCurrentlyOpenLogicalCameras())

{

UINT32 myLogicalCamCost = CostOfLogicalCamera(logicalCameraId, pStreamConfig);

if (myLogicalCamCost > (m_totalResourceBudget - CostOfAnyCurrentlyOpenLogicalCameras()))

{

CHX_LOG_ERROR("Insufficient HW resources! myLogicalCamCost = %d, remaining cost = %d",

myLogicalCamCost, (m_totalResourceBudget - CostOfAnyCurrentlyOpenLogicalCameras()));

result = CamxResultEResource;

}

else

{

m_IFEResourceCost[logicalCameraId] = myLogicalCamCost;

m_resourcesUsed += myLogicalCamCost;

}

}

else

{

CHX_LOG_ERROR("Insufficient HW resources! TotalResourceCost = %d, CostOfAnyCurrentlyOpencamera = %d",

m_totalResourceBudget, CostOfAnyCurrentlyOpenLogicalCameras());

result = CamxResultEResource;

}

m_pResourcesUsedLock->Unlock();

if (CDKResultSuccess == result)

{

#if defined(CAMX_ANDROID_API) && (CAMX_ANDROID_API >= 28) //Android-P or better

camera_metadata_t *metadata = const_cast<camera_metadata_t*>(pStreamConfig->session_parameters);

camera_metadata_entry_t entry = { 0 };

entry.tag = ANDROID_CONTROL_AE_TARGET_FPS_RANGE;

// The client may choose to send NULL sesssion parameter, which is fine. For example, torch mode

// will have NULL session param.

if (metadata != NULL)

{

int ret = find_camera_metadata_entry(metadata, entry.tag, &entry);

if(ret == 0) {

minSessionFps = entry.data.i32[0];

maxSessionFps = entry.data.i32[1];

m_usecaseMaxFPS = maxSessionFps;

}

}

#endif

CHIHANDLE staticMetaDataHandle = const_cast<camera_metadata_t*>(

m_logicalCameraInfo[logicalCameraId].m_cameraInfo.static_camera_characteristics);

UINT32 metaTagPreviewFPS = 0;

UINT32 metaTagVideoFPS = 0;

CHITAGSOPS vendorTagOps;

m_previewFPS = 0;

m_videoFPS = 0;

GetInstance()->GetVendorTagOps(&vendorTagOps);

result = vendorTagOps.pQueryVendorTagLocation("org.quic.camera2.streamBasedFPS.info", "PreviewFPS",

&metaTagPreviewFPS);

if (CDKResultSuccess == result)

{

vendorTagOps.pGetMetaData(staticMetaDataHandle, metaTagPreviewFPS, &m_previewFPS,

sizeof(m_previewFPS));

}

result = vendorTagOps.pQueryVendorTagLocation("org.quic.camera2.streamBasedFPS.info", "VideoFPS", &metaTagVideoFPS);

if (CDKResultSuccess == result)

{

vendorTagOps.pGetMetaData(staticMetaDataHandle, metaTagVideoFPS, &m_videoFPS,

sizeof(m_videoFPS));

}

if ((StreamConfigModeConstrainedHighSpeed == pStreamConfig->operation_mode) ||

(StreamConfigModeSuperSlowMotionFRC == pStreamConfig->operation_mode))

{

SearchNumBatchedFrames(logicalCameraId, pStreamConfig,

&m_usecaseNumBatchedFrames, &m_usecaseMaxFPS, maxSessionFps);

if (480 > m_usecaseMaxFPS)

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_HFR;

}

else

{

// For 480FPS or higher, require more aggresive power hint

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_HFR_480FPS;

}

}

else

{

// Not a HFR usecase, batch frames value need to be set to 1.

m_usecaseNumBatchedFrames = 1;

if (maxSessionFps == 0)

{

m_usecaseMaxFPS = fps;

}

if (TRUE == isVideoMode)

{

if (30 >= m_usecaseMaxFPS)

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE;

}

else

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_60FPS;

}

}

else

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_PREVIEW;

}

}

if ((NULL != m_pPerfLockManager[logicalCameraId]) && (m_CurrentpowerHint != m_previousPowerHint))

{

m_pPerfLockManager[logicalCameraId]->ReleasePerfLock(m_previousPowerHint);

}

// Example [B == batch]: (240 FPS / 4 FPB = 60 BPS) / 30 FPS (Stats frequency goal) = 2 BPF i.e. skip every other stats

*m_pStatsSkipPattern = m_usecaseMaxFPS / m_usecaseNumBatchedFrames / 30;

if (*m_pStatsSkipPattern < 1)

{

*m_pStatsSkipPattern = 1;

}

m_VideoHDRMode = (StreamConfigModeVideoHdr == pStreamConfig->operation_mode);

m_torchWidgetUsecase = (StreamConfigModeQTITorchWidget == pStreamConfig->operation_mode);

// this check is introduced to avoid set *m_pEnableFOVC == 1 if fovcEnable is disabled in

// overridesettings & fovc bit is set in operation mode.

// as well as to avoid set,when we switch Usecases.

if (TRUE == fovcModeCheck)

{

*m_pEnableFOVC = ((pStreamConfig->operation_mode & StreamConfigModeQTIFOVC) == StreamConfigModeQTIFOVC) ? 1 : 0;

}

SetHALOps(chiHalOps, logicalCameraId);

m_logicalCameraInfo[logicalCameraId].m_pCamera3Device = pCamera3Device;

selectedUsecaseId = m_pUsecaseSelector->GetMatchingUsecase(&m_logicalCameraInfo[logicalCameraId],

pStreamConfig);

CHX_LOG_CONFIG("Session_parameters FPS range %d:%d, previewFPS %d, videoFPS %d,"

"BatchSize: %u FPS: %u SkipPattern: %u,"

"cameraId = %d selected use case = %d",

minSessionFps,

maxSessionFps,

m_previewFPS,

m_videoFPS,

m_usecaseNumBatchedFrames,

m_usecaseMaxFPS,

*m_pStatsSkipPattern,

logicalCameraId,

selectedUsecaseId);

// FastShutter mode supported only in ZSL usecase.

if ((pStreamConfig->operation_mode == StreamConfigModeFastShutter) &&

(UsecaseId::PreviewZSL != selectedUsecaseId))

{

pStreamConfig->operation_mode = StreamConfigModeNormal;

}

m_operationMode[logicalCameraId] = pStreamConfig->operation_mode;

}

if (UsecaseId::NoMatch != selectedUsecaseId)

{

m_pSelectedUsecase[logicalCameraId] =

m_pUsecaseFactory->CreateUsecaseObject(&m_logicalCameraInfo[logicalCameraId],

selectedUsecaseId, pStreamConfig);

if (NULL != m_pSelectedUsecase[logicalCameraId])

{

m_pStreamConfig[logicalCameraId] = static_cast<camera3_stream_configuration_t*>(

CHX_CALLOC(sizeof(camera3_stream_configuration_t)));

m_pStreamConfig[logicalCameraId]->streams = static_cast<camera3_stream_t**>(

CHX_CALLOC(sizeof(camera3_stream_t*) * pStreamConfig->num_streams));

m_pStreamConfig[logicalCameraId]->num_streams = pStreamConfig->num_streams;

for (UINT32 i = 0; i< m_pStreamConfig[logicalCameraId]->num_streams; i++)

{

m_pStreamConfig[logicalCameraId]->streams[i] = pStreamConfig->streams[i];

}

m_pStreamConfig[logicalCameraId]->operation_mode = pStreamConfig->operation_mode;

// use camera device / used for recovery only for regular session

m_SelectedUsecaseId[logicalCameraId] = (UINT32)selectedUsecaseId;

CHX_LOG_CONFIG("Logical cam Id = %d usecase addr = %p", logicalCameraId, m_pSelectedUsecase[

logicalCameraId]);

m_pCameraDeviceInfo[logicalCameraId].m_pCamera3Device = pCamera3Device;

*pIsOverrideEnabled = TRUE;

}

else

{

CHX_LOG_ERROR("For cameraId = %d CreateUsecaseObject failed", logicalCameraId);

m_logicalCameraInfo[logicalCameraId].m_pCamera3Device = NULL;

m_pResourcesUsedLock->Lock();

// Reset the m_resourcesUsed & m_IFEResourceCost if usecase creation failed

if (m_resourcesUsed >= m_IFEResourceCost[logicalCameraId]) // to avoid underflow

{

m_resourcesUsed -= m_IFEResourceCost[logicalCameraId]; // reduce the total cost

m_IFEResourceCost[logicalCameraId] = 0;

}

m_pResourcesUsedLock->Unlock();

}

}

return result;

}

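(1) Determine whether this is video mode and work out the frame rate.

(2) Check the camera resource cost and update the related bookkeeping.

(3) Match a usecase for logicalCameraId.

(4) Create the usecase from the usecaseId.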
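Three pieces of arithmetic in InitializeOverrideSession are worth isolating: splitting operation_mode into a sensor mode index and the stream configuration mode, deriving the stats skip pattern, and checking the resource budget. The sketch below restates them as free functions. The function and struct names are hypothetical, and the budget check uses a single running counter where the original calls CostOfAnyCurrentlyOpenLogicalCameras().

```cpp
#include <cassert>
#include <cstdint>

struct DemoOperationMode {
    std::uint32_t sensorModeIndex;   // from the upper half; 0 means not requested
    std::uint32_t streamConfigMode;  // lower 16 bits
};

// Mirrors: operation_mode >> 16, masked with 0x000F, and operation_mode & 0xFFFF.
DemoOperationMode SplitOperationMode(std::uint32_t rawOperationMode) {
    DemoOperationMode mode{};
    mode.sensorModeIndex  = (rawOperationMode >> 16) & 0x000FU;
    mode.streamConfigMode = rawOperationMode & 0xFFFFU;
    return mode;
}

// Mirrors: maxFPS / numBatchedFrames / 30, clamped to at least 1.
std::uint32_t StatsSkipPattern(std::uint32_t usecaseMaxFps,
                               std::uint32_t numBatchedFrames) {
    assert(numBatchedFrames > 0U);
    const std::uint32_t pattern = usecaseMaxFps / numBatchedFrames / 30U;
    return (pattern < 1U) ? 1U : pattern;
}

// Reserves cost against the budget; returns false and changes nothing when
// the budget is exhausted or the remaining budget is too small.
bool TryReserveResources(std::uint32_t totalBudget,
                         std::uint32_t& resourcesUsed,
                         std::uint32_t cost) {
    if (totalBudget <= resourcesUsed) {
        return false;
    }
    if (cost > (totalBudget - resourcesUsed)) {
        return false;
    }
    resourcesUsed += cost;
    return true;
}
```

For the example in the source comment, 240 FPS with 4 frames per batch gives 60 batches per second, and 60 / 30 = 2, so `StatsSkipPattern(240, 4)` is 2; a plain 30 FPS stream gives 1. In the original code the sensor mode index is 1-based: index `n` selects entry `n - 1` of the sensor mode table.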

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxutils.cpp

UsecaseId UsecaseSelector::GetMatchingUsecase(

const LogicalCameraInfo* pCamInfo,

camera3_stream_configuration_t* pStreamConfig)

{

UsecaseId usecaseId = UsecaseId::Default;

UINT32 VRDCEnable = ExtensionModule::GetInstance()->GetDCVRMode();

if ((pStreamConfig->num_streams == 2) && IsQuadCFASensor(pCamInfo) &&

(LogicalCameraType_Default == pCamInfo->logicalCameraType))

{

// need to validate preview size <= binning size, otherwise return error

/// If snapshot size is less than sensor binning size, select defaut zsl usecase.

/// Only if snapshot size is larger than sensor binning size, select QuadCFA usecase.

/// Which means for snapshot in QuadCFA usecase,

/// - either do upscale from sensor binning size,

/// - or change sensor mode to full size quadra mode.

if (TRUE == QuadCFAMatchingUsecase(pCamInfo, pStreamConfig))

{

usecaseId = UsecaseId::QuadCFA;

CHX_LOG_CONFIG("Quad CFA usecase selected");

return usecaseId;

}

}

if (pStreamConfig->operation_mode == StreamConfigModeSuperSlowMotionFRC)

{

usecaseId = UsecaseId::SuperSlowMotionFRC;

CHX_LOG_CONFIG("SuperSlowMotionFRC usecase selected");

return usecaseId;

}

/// Reset the usecase flags

VideoEISV2Usecase = 0;

VideoEISV3Usecase = 0;

GPURotationUsecase = FALSE;

GPUDownscaleUsecase = FALSE;

if ((NULL != pCamInfo) && (pCamInfo->numPhysicalCameras > 1) && VRDCEnable)

{

CHX_LOG_CONFIG("MultiCameraVR usecase selected");

usecaseId = UsecaseId::MultiCameraVR;

}

else if ((NULL != pCamInfo) && (pCamInfo->numPhysicalCameras > 1) && (pStreamConfig->num_streams > 1))

{

CHX_LOG_CONFIG("MultiCamera usecase selected");

usecaseId = UsecaseId::MultiCamera;

}

else

{

switch (pStreamConfig->num_streams)

{

case 2:

if (TRUE == IsRawJPEGStreamConfig(pStreamConfig))

{

CHX_LOG_CONFIG("Raw + JPEG usecase selected");

usecaseId = UsecaseId::RawJPEG;

break;

}

/// @todo Enable ZSL by setting overrideDisableZSL to FALSE

if (FALSE == m_pExtModule->DisableZSL())

{

if (TRUE == IsPreviewZSLStreamConfig(pStreamConfig))

{

usecaseId = UsecaseId::PreviewZSL;

CHX_LOG_CONFIG("ZSL usecase selected");

}

}

if(TRUE == m_pExtModule->UseGPURotationUsecase())

{

CHX_LOG_CONFIG("GPU Rotation usecase flag set");

GPURotationUsecase = TRUE;

}

if (TRUE == m_pExtModule->UseGPUDownscaleUsecase())

{

CHX_LOG_CONFIG("GPU Downscale usecase flag set");

GPUDownscaleUsecase = TRUE;

}

if (TRUE == m_pExtModule->EnableMFNRUsecase())

{

if (TRUE == MFNRMatchingUsecase(pStreamConfig))

{

usecaseId = UsecaseId::MFNR;

CHX_LOG_CONFIG("MFNR usecase selected");

}

}

if (TRUE == m_pExtModule->EnableHFRNo3AUsecas())

{

CHX_LOG_CONFIG("HFR without 3A usecase flag set");

HFRNo3AUsecase = TRUE;

}

break;

case 3:

VideoEISV2Usecase = m_pExtModule->EnableEISV2Usecase();

VideoEISV3Usecase = m_pExtModule->EnableEISV3Usecase();

if(TRUE == IsRawJPEGStreamConfig(pStreamConfig))

{

CHX_LOG_CONFIG("Raw + JPEG usecase selected");

usecaseId = UsecaseId::RawJPEG;

}

else if((FALSE == IsVideoEISV2Enabled(pStreamConfig)) && (FALSE == IsVideoEISV3Enabled(pStreamConfig)) &&

(TRUE == IsVideoLiveShotConfig(pStreamConfig)))

{

CHX_LOG_CONFIG("Video With Liveshot, ZSL usecase selected");

usecaseId = UsecaseId::VideoLiveShot;

}

break;

case 4:

if(TRUE == IsYUVInBlobOutConfig(pStreamConfig))

{

CHX_LOG_CONFIG("YUV callback and Blob");

usecaseId = UsecaseId::YUVInBlobOut;

}

break;

default:

CHX_LOG_CONFIG("Default usecase selected");

break;

}

}

if (TRUE == ExtensionModule::GetInstance()->IsTorchWidgetUsecase())

{

CHX_LOG_CONFIG("Torch widget usecase selected");

usecaseId = UsecaseId::Torch;

}

CHX_LOG_INFO("usecase ID:%d",usecaseId);

return usecaseId;

}

(1) Usecase matching: the usecaseId is chosen from the number of streams (num_streams), operation_mode, and numPhysicalCameras.
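The decision order in GetMatchingUsecase can be summarized in a much smaller function. This is a simplified sketch, not a replacement: `DemoUsecaseId`, `DemoSelectionInput`, and `SelectUsecase` are hypothetical, the stream-pattern checks (IsRawJPEGStreamConfig and friends) are reduced to precomputed booleans, and the QuadCFA, VR, MFNR, EIS, and GPU branches are left out.

```cpp
#include <cassert>
#include <cstdint>

enum class DemoUsecaseId {
    Default,
    PreviewZSL,
    RawJPEG,
    VideoLiveShot,
    YUVInBlobOut,
    MultiCamera,
    SuperSlowMotionFRC,
    Torch
};

// Hypothetical flattened view of the inputs the selector looks at.
struct DemoSelectionInput {
    std::uint32_t numStreams;
    std::uint32_t numPhysicalCameras;
    bool          superSlowMotionMode;    // operation_mode check
    bool          isRawJpegConfig;
    bool          isPreviewZslConfig;
    bool          isVideoLiveShotConfig;
    bool          isYuvInBlobOutConfig;
    bool          torchWidget;
};

DemoUsecaseId SelectUsecase(const DemoSelectionInput& in) {
    assert(in.numPhysicalCameras >= 1U);

    // Operation-mode matches return early, before anything else is checked.
    if (in.superSlowMotionMode) {
        return DemoUsecaseId::SuperSlowMotionFRC;
    }

    DemoUsecaseId id = DemoUsecaseId::Default;
    if ((in.numPhysicalCameras > 1U) && (in.numStreams > 1U)) {
        id = DemoUsecaseId::MultiCamera;
    } else {
        switch (in.numStreams) {
            case 2:
                if (in.isRawJpegConfig) {
                    id = DemoUsecaseId::RawJPEG;
                } else if (in.isPreviewZslConfig) {
                    id = DemoUsecaseId::PreviewZSL;
                }
                break;
            case 3:
                if (in.isRawJpegConfig) {
                    id = DemoUsecaseId::RawJPEG;
                } else if (in.isVideoLiveShotConfig) {
                    id = DemoUsecaseId::VideoLiveShot;
                }
                break;
            case 4:
                if (in.isYuvInBlobOutConfig) {
                    id = DemoUsecaseId::YUVInBlobOut;
                }
                break;
            default:
                break;  // stays Default
        }
    }

    // The torch widget check runs last and overrides the stream-based choice.
    if (in.torchWidget) {
        id = DemoUsecaseId::Torch;
    }
    return id;
}
```

The ordering matters: an early return for the slow-motion mode means the later torch override never applies to it, while any choice made from the stream count can still be replaced by Torch.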

vendor/qcom/proprietary/chi-cdk/vendor/chioverride/default/chxusecaseutils.cpp

Usecase* UsecaseFactory::CreateUsecaseObject(

LogicalCameraInfo* pLogicalCameraInfo, ///< camera info

UsecaseId usecaseId, ///< Usecase Id

camera3_stream_configuration_t* pStreamConfig) ///< Stream config

{

Usecase* pUsecase = NULL;

UINT camera0Id = pLogicalCameraInfo->ppDeviceInfo[0]->cameraId;

switch (usecaseId)

{

case UsecaseId::PreviewZSL:

case UsecaseId::VideoLiveShot:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

case UsecaseId::MultiCamera:

{

#if defined(CAMX_ANDROID_API) && (CAMX_ANDROID_API >= 28) //Android-P or better

LogicalCameraType logicalCameraType = m_pExtModule->GetCameraType(pLogicalCameraInfo->cameraId);

if (LogicalCameraType_DualApp == logicalCameraType)

{

pUsecase = UsecaseDualCamera::Create(pLogicalCameraInfo, pStreamConfig);

}

else

#endif

{

pUsecase = UsecaseMultiCamera::Create(pLogicalCameraInfo, pStreamConfig);

}

break;

}

case UsecaseId::MultiCameraVR:

//pUsecase = UsecaseMultiVRCamera::Create(pLogicalCameraInfo, pStreamConfig);

break;

case UsecaseId::QuadCFA:

if (TRUE == ExtensionModule::GetInstance()->UseFeatureForQuadCFA())

{

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

}

else

{

pUsecase = UsecaseQuadCFA::Create(pLogicalCameraInfo, pStreamConfig);

}

break;

case UsecaseId::Torch:

pUsecase = UsecaseTorch::Create(camera0Id, pStreamConfig);

break;

#if (!defined(LE_CAMERA)) // SuperSlowMotion not supported in LE

case UsecaseId::SuperSlowMotionFRC:

pUsecase = UsecaseSuperSlowMotionFRC::Create(pLogicalCameraInfo, pStreamConfig);

break;

#endif

default:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

}

return pUsecase;

}

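(1) Usecase creation: a different usecase object is created depending on usecaseId:

AdvancedCameraUsecase: ZSL, VideoLiveShot

UsecaseDualCamera: dual camera

UsecaseMultiCamera: multi-camera

UsecaseQuadCFA: 4-in-1 and 9-in-1 processing

UsecaseTorch: torch (flashlight) mode

UsecaseSuperSlowMotionFRC: slow motion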
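The mapping above is a plain factory: one switch on the usecase id that returns a pointer to a common base class. A minimal sketch follows, assuming hypothetical `Sketch*` classes and `std::unique_ptr` ownership in place of the raw `Usecase*` the original returns. As in the original, the MultiCameraVR case creates nothing and unknown ids fall back to the advanced usecase.

```cpp
#include <cassert>
#include <memory>
#include <string>

enum class SketchUsecaseId {
    Default,
    PreviewZSL,
    VideoLiveShot,
    MultiCamera,
    MultiCameraVR,
    QuadCFA,
    Torch
};

// Hypothetical common base class, standing in for Usecase.
class SketchUsecase {
public:
    virtual ~SketchUsecase() = default;
    virtual std::string Name() const = 0;
};

class SketchAdvancedUsecase final : public SketchUsecase {
public:
    std::string Name() const override { return "AdvancedCameraUsecase"; }
};

class SketchMultiCameraUsecase final : public SketchUsecase {
public:
    std::string Name() const override { return "UsecaseMultiCamera"; }
};

class SketchQuadCfaUsecase final : public SketchUsecase {
public:
    std::string Name() const override { return "UsecaseQuadCFA"; }
};

class SketchTorchUsecase final : public SketchUsecase {
public:
    std::string Name() const override { return "UsecaseTorch"; }
};

// useFeatureForQuadCfa stands in for ExtensionModule::UseFeatureForQuadCFA().
std::unique_ptr<SketchUsecase> CreateUsecase(SketchUsecaseId id,
                                             bool useFeatureForQuadCfa) {
    std::unique_ptr<SketchUsecase> pUsecase;
    switch (id) {
        case SketchUsecaseId::PreviewZSL:
        case SketchUsecaseId::VideoLiveShot:
            pUsecase = std::make_unique<SketchAdvancedUsecase>();
            break;
        case SketchUsecaseId::MultiCamera:
            pUsecase = std::make_unique<SketchMultiCameraUsecase>();
            break;
        case SketchUsecaseId::MultiCameraVR:
            break;  // creation is commented out in the original; stays null
        case SketchUsecaseId::QuadCFA:
            if (useFeatureForQuadCfa) {
                pUsecase = std::make_unique<SketchAdvancedUsecase>();
            } else {
                pUsecase = std::make_unique<SketchQuadCfaUsecase>();
            }
            break;
        case SketchUsecaseId::Torch:
            pUsecase = std::make_unique<SketchTorchUsecase>();
            break;
        default:
            pUsecase = std::make_unique<SketchAdvancedUsecase>();
            break;
    }
    // Postcondition: any object that was created reports a non-empty name.
    assert((pUsecase == nullptr) || !pUsecase->Name().empty());
    return pUsecase;
}
```

Because the factory can return null, the caller has to check the result, which is what InitializeOverrideSession does before it sets *pIsOverrideEnabled to TRUE.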

UsecaseFactory::CreateUsecaseObject on QNX

apps/qnx_ap/AMSS/multimedia/qcamera/camera_qcx/cdk_qcx/core/chiusecase/chxusecaseutils.cpp

// UsecaseFactory::CreateUsecaseObject

VOID* UsecaseFactory::CreateUsecaseObject(

LogicalCameraInfo* pLogicalCameraInfo, ///< camera info

UsecaseId usecaseId, ///< Usecase Id

camera3_stream_configuration_t* pStreamConfig, ///< Stream config

ChiMcxConfigHandle hDescriptorConfig) ///< mcx config

{

Usecase* pUsecase = NULL;

UsecaseXR* pUsecaseXR = NULL;

UsecaseAuto* pUsecaseAuto = NULL;

VOID* pReturnUsecase = NULL;

switch (usecaseId)

{

case UsecaseId::MultiCameraVR:

//pUsecase = UsecaseMultiVRCamera::Create(pLogicalCameraInfo, pStreamConfig);

break;

#ifndef __QNXNTO__

case UsecaseId::Torch:

pUsecase = UsecaseTorch::Create(pLogicalCameraInfo, pStreamConfig);

break;

case UsecaseId::AON:

pUsecase = CHXUsecaseAON::Create(pLogicalCameraInfo);

break;

#endif // !defined(QNXNTO)

#if CAMERA3_API_HIGH_PERF

#ifndef __QNXNTO__

case UsecaseId::XR:

pUsecaseXR = UsecaseXR::Create(pLogicalCameraInfo, pStreamConfig);

break;

#endif // !defined(QNXNTO)

case UsecaseId::Auto:

pUsecaseAuto = UsecaseAuto::Create(pLogicalCameraInfo, pStreamConfig);

break;

default:

break;

#else

case UsecaseId::PreviewZSL:

#ifndef __QNXNTO__

case UsecaseId::VideoLiveShot:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

#if !defined(LE_CAMERA) && !defined(__QNXNTO__)

case UsecaseId::MultiCamera:

if ((LogicalCameraType::LogicalCameraType_Default == pLogicalCameraInfo->logicalCameraType) &&

(pLogicalCameraInfo->numPhysicalCameras > 1))

{

pUsecase = ChiMulticameraBase::Create(pLogicalCameraInfo, pStreamConfig, hDescriptorConfig);

}

break;

#endif // !defined(LE_CAMERA)

case UsecaseId::QuadCFA:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

case UsecaseId::Depth:

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

break;

#endif // !defined(QNXNTO)

#if CAMERA3_API_HIGH_PERF

case UsecaseId::Auto:

pUsecaseAuto = UsecaseAuto::Create(pLogicalCameraInfo, pStreamConfig);

break;

#endif // CAMERA3_API_HIGH_PERF

default:

#ifndef __QNXNTO__

pUsecase = AdvancedCameraUsecase::Create(pLogicalCameraInfo, pStreamConfig, usecaseId);

#endif

break;

#endif // CAMERA3_API_HIGH_PERF

}

#if CAMERA3_API_HIGH_PERF

if (UsecaseId::XR == usecaseId)

{

pReturnUsecase = reinterpret_cast<VOID*>(pUsecaseXR);

}

if (UsecaseId::Auto == usecaseId)

{

pReturnUsecase = reinterpret_cast<VOID*>(pUsecaseAuto);

}

#else

pReturnUsecase = reinterpret_cast<VOID*>(pUsecase);

#endif // CAMERA3_API_HIGH_PERF

return pReturnUsecase;

}

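Stream configuration is one of the more important parts of the CamX-CHI flow. It consists of two stages:

1. Select the UsecaseId

2. Create the Usecase from the selected UsecaseId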

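CamX basic components and their structural relationships (yaoming168's blog, CSDN)

Camera: introduction to CamX code structure, build, and code flow (CSDN blog)

Camera initialization (Open), part 2: the Open process in HAL3 (zhuyong006's blog, CSDN)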