根据c++高性能多进程 cuda编程:GPU结构和通信速度+tiling的分析,依靠pytorch的JIT进行了实现,所以在安装pytorch的环境中,直接执行test.py就能直接运行。

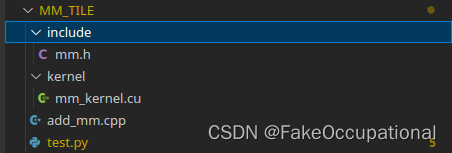

- 代码结构如下,地址

mm.h

void function_mm(float *c,

const float *a,

const float *b,

int n);

void function_mm_tiled(float *c,

const float *a,

const float *b,

int n);

mm_kernel.cu

__global__ void matrixMul(float* c,

const float* a,

const float* b,

int n) {// C_gpu,A_gpu,B_gpu,K

float accu = 0;

int i = blockIdx.y * blockDim.y + threadIdx.y; // Row i of matrix C

int j = blockIdx.x * blockDim.x + threadIdx.x; // Column j of matrix C

for (int k=0; k < n; k++) {

accu = accu+ a[i*32+k] *b[k*32+j];// accu+ a[i,k] *b[k,j];warning: #174-D: expression has no effect

}

c[i*32+j] = accu;

}

void function_mm(float* c,

const float* a,

const float* b,

int n) {

dim3 dimBlock(16, 16);

dim3 dimGrid(32/dimBlock.x, 32/dimBlock.y);

matrixMul<<<dimGrid, dimBlock>>>(c, a, b,n);

}

#define Tile_size 16 // https://github.com/yogesh-desai/TiledMatrixMultiplicationInCUDA/blob/master/Tiled_Mat_Mult.cu

__global__ void matrixMultiplyShared(const float * A, const float * B, float * C,

int numARows, int numAColumns,

int numBRows, int numBColumns,

int numCRows, int numCColumns)

{

__shared__ float sA[Tile_size][Tile_size]; // Tile size to store elements in shared memory

__shared__ float sB[Tile_size][Tile_size];

int Row = blockDim.y*blockIdx.y + threadIdx.y; //To generate ids of threads.

int Col = blockDim.x*blockIdx.x + threadIdx.x;

float Cvalue = 0.0;

sA[threadIdx.y][threadIdx.x] = 0.0;

sB[threadIdx.y][threadIdx.x] = 0.0;

for (int k = 0; k < (((numAColumns - 1)/ Tile_size) + 1); k++)

{

if ( (Row < numARows) && (threadIdx.x + (k*Tile_size)) < numAColumns)//Copy Data to Tile from Matrix (Global Memory to Shared Memory)

{

sA[threadIdx.y][threadIdx.x] = A[(Row*numAColumns) + threadIdx.x + (k*Tile_size)];

}

else

{

sA[threadIdx.y][threadIdx.x] = 0.0;

}

if ( Col < numBColumns && (threadIdx.y + k*Tile_size) < numBRows)//Copy Data to Tile from Matrix (Global Memory to Shared Memory)

{

sB[threadIdx.y][threadIdx.x] = B[(threadIdx.y + k*Tile_size)*numBColumns + Col];

}

else

{

sB[threadIdx.y][threadIdx.x] = 0.0;

}

__syncthreads();

for (int j = 0; j < Tile_size; ++j)//Multiplying Elements present in tile

{

Cvalue += sA[threadIdx.y][j] * sB[j][threadIdx.x];

}

}

if (Row < numCRows && Col < numCColumns)//Saving Final result into Matrix C

{

C[Row*numCColumns + Col] = Cvalue;

}

}

void function_mm_tiled(float* c,

const float* a,

const float* b,

int n) {

dim3 dimBlock(16, 16);

dim3 dimGrid(32/dimBlock.x, 32/dimBlock.y);

matrixMultiplyShared<<<dimGrid, dimBlock>>>( a, b,c,n,n,n,n,n,n);

}

add_mm.cpp

#include <torch/extension.h>

#include "mm.h"

void torch_launch_mm(torch::Tensor &c,

const torch::Tensor &a,

const torch::Tensor &b,

int64_t n) {

function_mm((float *)c.data_ptr(),

(const float *)a.data_ptr(),

(const float *)b.data_ptr(),

n);

}

void torch_launch_mm_tiled(torch::Tensor &c,

const torch::Tensor &a,

const torch::Tensor &b,

int64_t n) {

function_mm_tiled((float *)c.data_ptr(),

(const float *)a.data_ptr(),

(const float *)b.data_ptr(),

n);

}

PYBIND11_MODULE(TORCH_EXTENSION_NAME, m) {

m.def("torch_launch_mm",

&torch_launch_mm,

"torch_launch_mm:normal mm");

m.def("torch_launch_mm_tiled",

&torch_launch_mm_tiled,

"torch_launch_mm_tiled : in shared memory");

}

// https://github1s.com/pytorch/pytorch/blob/HEAD/torch/library.h#L875-L906

// TORCH_LIBRARY(ops_namespace_name, m) { // https://zhuanlan.zhihu.com/p/466043104

// m.def("torch_launch_mm", torch_launch_mm);

// }

// TORCH_LIBRARY(ops_namespace_name, m) {

// m.def("torch_launch_mm_tiled", torch_launch_mm_tiled);

// }

test.py

import argparse

import numpy as np

import torch

from torch import nn

from torch.autograd import Function

from torch.utils.cpp_extension import load # https://pytorch.org/docs/master/cpp_extension.html

cuda_module = load(name="MM", # MM

extra_include_paths=["include"],

sources=["add_mm.cpp", "kernel/mm_kernel.cu"],

verbose=True)

n = 32

a = torch.eye(n=32).to(device="cuda:0")

b = torch.eye(n=32).to(device="cuda:0")

c = torch.zeros(size = (32,32)).to(device="cuda:0")

print("BEFORE ...",c)

output = cuda_module.torch_launch_mm(c, a, b, n)

print("AFTER ...",c)

# if a = torch.Tensor(n) will get the sub errors :

# File "pytorch/train.py", line 34, in <module>

# print(c)

# File "/opt/conda/lib/python3.8/site-packages/torch/_tensor.py", line 338, in __repr__

# return torch._tensor_str._str(self)

# File "/opt/conda/lib/python3.8/site-packages/torch/_tensor_str.py", line 439, in _str

# return _str_intern(self)

# File "/opt/conda/lib/python3.8/site-packages/torch/_tensor_str.py", line 414, in _str_intern

# tensor_str = _tensor_str(self, indent)

# File "/opt/conda/lib/python3.8/site-packages/torch/_tensor_str.py", line 264, in _tensor_str

# formatter = _Formatter(get_summarized_data(self) if summarize else self)

# File "/opt/conda/lib/python3.8/site-packages/torch/_tensor_str.py", line 100, in __init__

# nonzero_finite_vals = torch.masked_select(tensor_view, torch.isfinite(tensor_view) & tensor_view.ne(0))

# RuntimeError: CUDA error: an illegal memory access was encountered

# CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

# For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

CG

- https://github.com/pytorch/pytorch/blob/main/test/test_cpp_extensions_jit.py