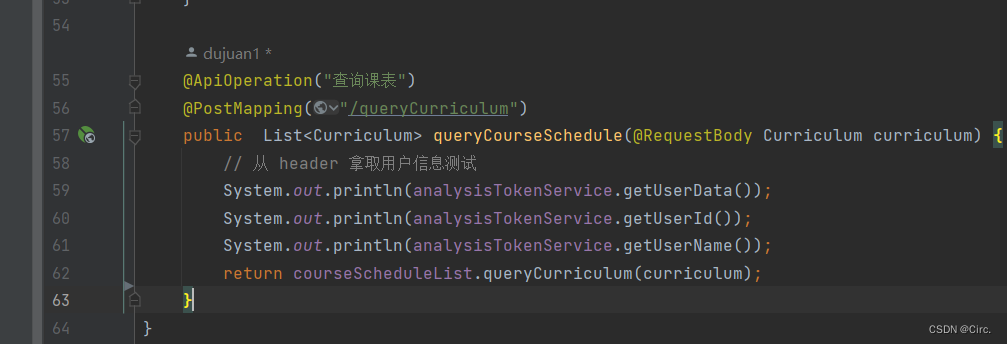

1 Without pyspark.sql.types (pass the target type as a string)

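```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import col

# Get (or create) a SparkSession; in a notebook or the pyspark shell, `spark` already exists
spark = SparkSession.builder.getOrCreate()

# Create a PySpark DataFrame whose ages are stored as strings
data = [("Alice", "28"), ("Bob", "22"), ("Charlie", "30")]
columns = ["name", "age_str"]
df = spark.createDataFrame(data, columns)
df
# DataFrame[name: string, age_str: string]

# ---------- Above: the source data. Below: the results after cast ----------
# withColumn returns a new DataFrame; df itself is not modified

df.withColumn('cast_col', col('age_str').cast('int'))
# DataFrame[name: string, age_str: string, cast_col: int]

df.withColumn('cast_col', col('age_str').cast('float'))
# DataFrame[name: string, age_str: string, cast_col: float]
```

2 With pyspark.sql.types (pass a DataType object)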

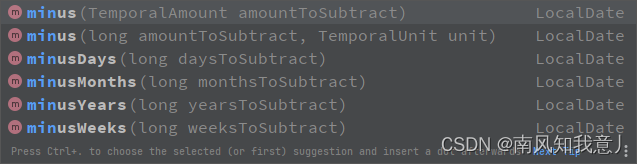

from pyspark.sql.types import *

df.withColumn('cast_col',col('age_str').cast(BooleanType()))

#DataFrame[name: string, age_str: string, cast_col: boolean]| BooleanType | |

| ByteType | 字节数据类型,占用一个字节的存储空间 |

| DateType | datetime.date 的数据类型 |

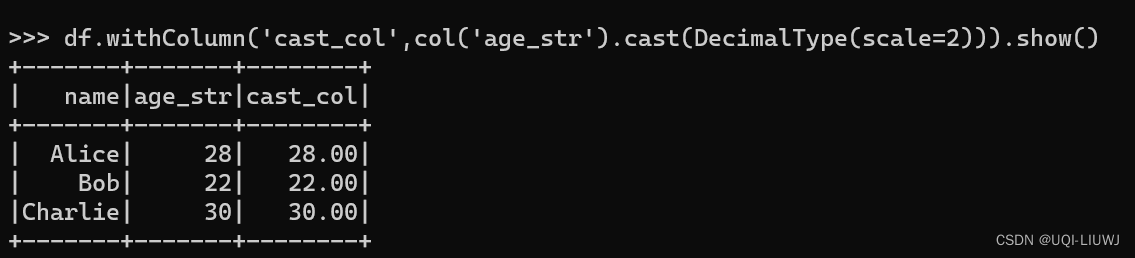

| DecimalType | 这个类型有两个可选参数,分别是

|

| DoubleType | |

| FloatType | |

| IntegerType | |

| LongType | |

| NullType | |

| ShortType | |

| StringType | |

| TimestampType | datetime.datetime 类型 |

| DayTimeIntervalType | datetime.timedelta类型 |