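Installing Hive 3.1.2

Preface

What Hive is, you can look up yourself; I won't ramble here, let's get straight to it.

When learning a new component, I think the most important thing is to get it installed first. Even the cleverest cook can't make a meal without rice.

Download

Download URL: https://downloads.apache.org/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

Extract and install

Prerequisites

Before starting, one more remark: Hive relies on HDFS for storage and MapReduce for computation, so make sure a working Hadoop installation is in place first.

For installing Hadoop, see: https://blog.csdn.net/MrZhangBaby/article/details/131683270?spm=1001.2014.3001.5502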
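
The prerequisite above can be checked before going any further. The following is only a rough sketch: `check_cmds` is a hypothetical helper name, and the list of commands (java, hadoop, hdfs) is my assumption of what a working Hadoop setup puts on the PATH.

```shell
#!/bin/sh
# Report which of the given commands are missing from PATH.
# Returns 0 when every command is found, 1 otherwise.
check_cmds() {
    missing=0
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "found:   $cmd"
        else
            echo "missing: $cmd"
            missing=1
        fi
    done
    return "$missing"
}

# Assumed command list: a JVM plus the Hadoop client tools.
check_cmds java hadoop hdfs || echo "Install the missing tools before continuing."
```

If anything is reported as missing, sort out the Hadoop installation first; Hive will not start without it.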

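Installation

# Extract the downloaded tarball

tar -xzvf apache-hive-3.1.2-bin.tar.gz -C /Users/zhangchenguang/software/

# If the name is too long, optionally rename the directory (run from /Users/zhangchenguang/software/)

mv apache-hive-3.1.2-bin hive-3.1.2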

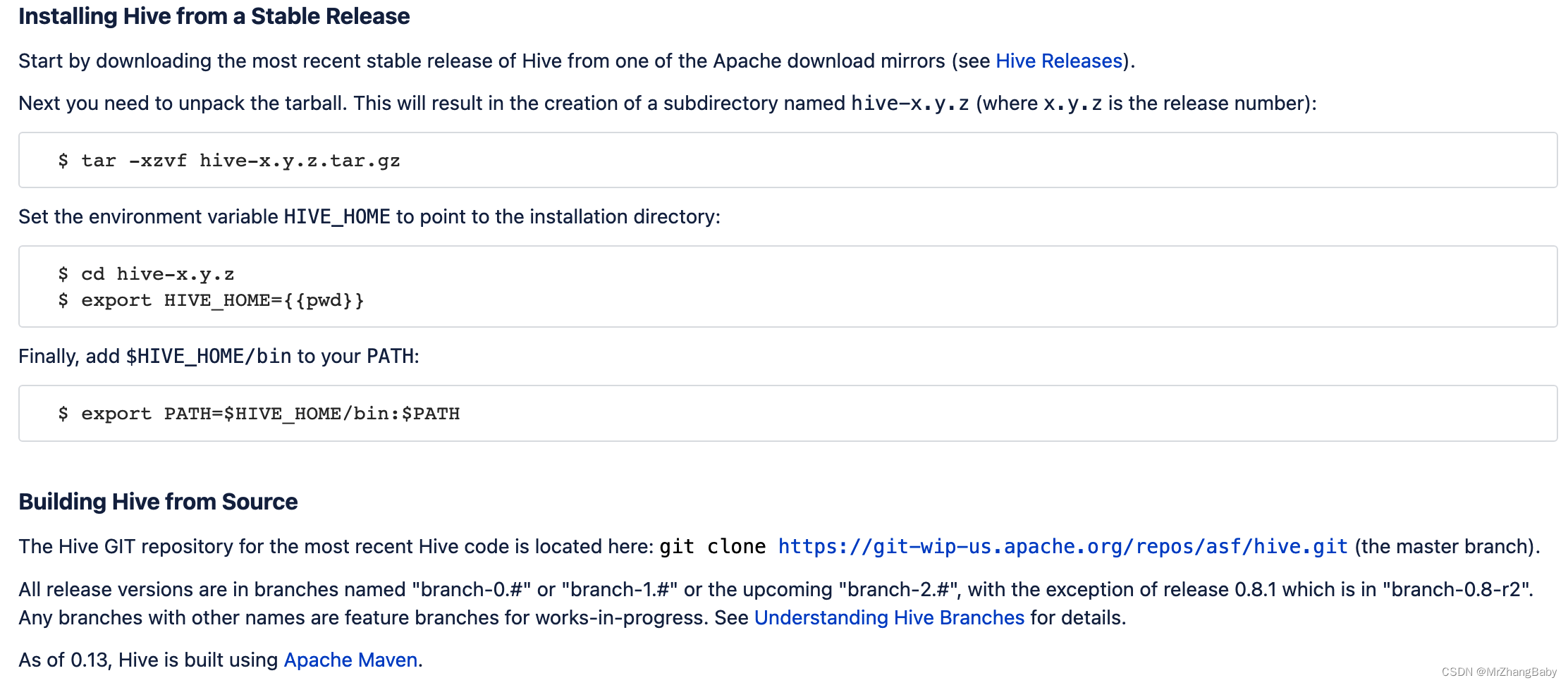
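
The extract-and-rename step above can be wrapped so that it is safe to run twice. This is a minimal sketch, not the official procedure: `extract_hive` is a hypothetical function name, and it assumes the archive has a single top-level directory listed first.

```shell
# Extract a Hive tarball into a target directory and give it a shorter name.
# Arguments: tarball path, destination directory, short directory name.
extract_hive() {
    tarball=$1
    dest=$2
    short_name=$3

    [ -f "$tarball" ] || { echo "tarball not found: $tarball" >&2; return 1; }
    mkdir -p "$dest" || return 1

    # Skip the work when the renamed directory is already there.
    if [ -e "$dest/$short_name" ]; then
        echo "already present: $dest/$short_name"
        return 0
    fi

    # Top-level directory name inside the archive (assumed to be the first entry).
    top_dir=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)

    tar -xzf "$tarball" -C "$dest" || return 1
    if [ "$top_dir" != "$short_name" ]; then
        mv "$dest/$top_dir" "$dest/$short_name"
    fi
}

# Usage with the paths from this post:
# extract_hive apache-hive-3.1.2-bin.tar.gz /Users/zhangchenguang/software hive-3.1.2
```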

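Official documentation

Configuration: https://cwiki.apache.org/confluence/display/Hive/GettingStarted#GettingStarted-InstallingHivefromaStableRelease

It doesn't seem to say much about configuration and only touches on it briefly; personally I find it a bit messy.

Let's get started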

# As with Hadoop, first add the home directory to the environment variables (on Linux the system-wide file is /etc/profile)

vi ~/.bash_profile

# Add HIVE_HOME so that commands such as hive are available on the PATH

# hive

export HIVE_HOME=/Users/zhangchenguang/software/hive-3.1.2

export PATH=$PATH:$HIVE_HOME/bin
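
Editing the profile by hand works, but the two export lines can also be appended by a small script that does nothing if they are already there. A sketch only: `add_hive_env` is a hypothetical helper, and the check for an existing `export HIVE_HOME=` line is my own simplification.

```shell
# Append the Hive environment variables to a shell profile exactly once.
# Arguments: profile file, Hive installation directory.
add_hive_env() {
    profile=$1
    hive_home=$2

    # Skip when a HIVE_HOME export is already present.
    if [ -f "$profile" ] && grep -q '^export HIVE_HOME=' "$profile"; then
        echo "HIVE_HOME already set in $profile"
        return 0
    fi

    {
        echo '# hive'
        echo "export HIVE_HOME=$hive_home"
        echo 'export PATH=$PATH:$HIVE_HOME/bin'
    } >> "$profile"
}

# Usage with the paths from this post, then reload the profile:
# add_hive_env ~/.bash_profile /Users/zhangchenguang/software/hive-3.1.2
# . ~/.bash_profile
```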

# Set up the configuration files (run from $HIVE_HOME)

cp conf/hive-env.sh.template conf/hive-env.sh

cp conf/hive-default.xml.template conf/hive-site.xml

# Add HADOOP_HOME to hive-env.sh

export HADOOP_HOME=/Users/zhangchenguang/software/hadoop-3.2.4

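# As for hive-site.xml, I haven't found anything on the official site that needs changing yet, so leave it unmodified for now.

# Then run hive

hive

Then the first exception appears

Exception 1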

(base) zhangchenguang@cgzhang.local:/Users/zhangchenguang/software/hive-3.1.2/conf $ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hadoop-3.2.4/share/hadoop/common/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1357)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1338)

at org.apache.hadoop.mapred.JobConf.setJar(JobConf.java:536)

at org.apache.hadoop.mapred.JobConf.setJarByClass(JobConf.java:554)

at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:448)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5141)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:97)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

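Solution

How to fix it? The error looks like a jar conflict, so I searched for it. Reference: https://blog.51cto.com/u_15316404/3216184

The search confirmed that Hadoop and Hive ship conflicting versions of a jar called guava.

In Hadoop it lives under hadoop/share/hadoop/common/lib

In Hive it lives under hive/lib

Compare the two versions, copy the newer jar over to the other side, then delete the older one. It's nothing more than mv, cp and rm (get into the habit of backing up before deleting), so I won't go into more detail.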
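
For anyone who would rather not do the mv/cp/rm by hand, here is a rough sketch of the same idea. `sync_guava` is a hypothetical function name; it assumes each lib directory holds exactly one `guava-*.jar`, relies on `sort -V` for the version comparison, and renames the older jar to `.bak` instead of deleting it.

```shell
# Make two lib directories use the same (newer) guava jar.
# Arguments: Hadoop lib directory, Hive lib directory.
sync_guava() {
    hadoop_lib=$1
    hive_lib=$2

    hadoop_jar=$(ls "$hadoop_lib"/guava-*.jar 2>/dev/null | head -n 1)
    hive_jar=$(ls "$hive_lib"/guava-*.jar 2>/dev/null | head -n 1)
    if [ -z "$hadoop_jar" ] || [ -z "$hive_jar" ]; then
        echo "guava jar not found" >&2
        return 1
    fi

    hadoop_name=$(basename "$hadoop_jar")
    hive_name=$(basename "$hive_jar")
    if [ "$hadoop_name" = "$hive_name" ]; then
        echo "same version: $hive_name"
        return 0
    fi

    # Version-aware sort: the last line is the newer jar name.
    newer=$(printf '%s\n%s\n' "$hadoop_name" "$hive_name" | sort -V | tail -n 1)

    if [ "$newer" = "$hadoop_name" ]; then
        src=$hadoop_jar; old=$hive_jar; target=$hive_lib
    else
        src=$hive_jar; old=$hadoop_jar; target=$hadoop_lib
    fi

    # Back up the older jar instead of deleting it outright.
    mv "$old" "$old.bak" || return 1
    cp "$src" "$target/" || return 1
    echo "copied $(basename "$src") into $target"
}

# Usage with the directories named above:
# sync_guava "$HADOOP_HOME/share/hadoop/common/lib" "$HIVE_HOME/lib"
```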

Running hive again produces exception 2

Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

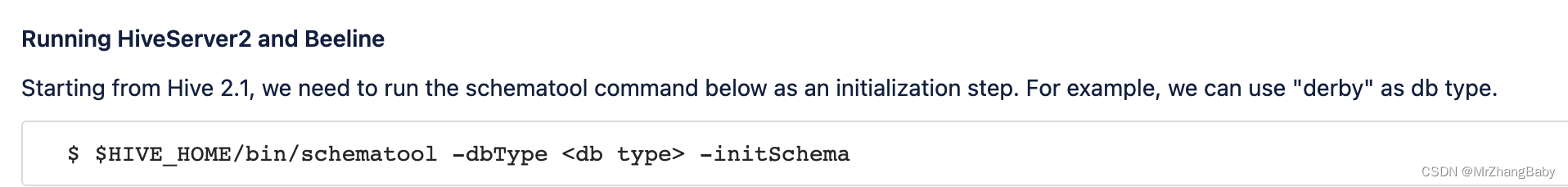

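HiveMetaStore, i.e. metadata. So Hive needs a metastore? Let's keep digging; the official site has this description:

So let's set up the metastore. According to the official site, Derby is the default metastore backend; no need to switch yet, so use it for now. Running the initialization produces exception 3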

(base) zhangchenguang@cgzhang.local:/Users/zhangchenguang/software/hive-3.1.2 $ schematool -dbType derby -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hadoop-3.2.4/share/hadoop/common/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3215,96,"file:/Users/zhangchenguang/software/hive-3.1.2/conf/hive-site.xml"]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3040)


at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2989)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2862)

at org.apache.hadoop.conf.Configuration.addResourceObject(Configuration.java:1012)

at org.apache.hadoop.conf.Configuration.addResource(Configuration.java:917)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5151)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5104)

at org.apache.hive.beeline.HiveSchemaTool.<init>(HiveSchemaTool.java:96)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3215,96,"file:/Users/zhangchenguang/software/hive-3.1.2/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:634)

at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:504)

at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2469)

at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2416)

at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2382)

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1528)

at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2818)

at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1121)

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3336)

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3130)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3023)

... 14 more

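Solution

Cause: the hive-site.xml configuration file contains the illegal character entity &#8; (the "code 0x8" in the error message)

Fix: remove the &#8; entity

Exception in thread "main" java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3215,96,"file:/Users/zhangchenguang/software/hive-3.1.2/conf/hive-site.xml"]

The location is already given in the log (row 3215, column 96 of hive-site.xml); just delete the character there.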
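
Instead of opening the file and jumping to the reported row, the entity can be stripped with sed. A minimal sketch, assuming the offending text is literally `&#8;` as the "code 0x8" message suggests; `strip_bad_entity` and the `.entity.bak` backup suffix are names I made up.

```shell
# Remove the illegal "&#8;" character entity from an XML file, keeping a backup.
# Argument: path to hive-site.xml.
strip_bad_entity() {
    site=$1
    [ -f "$site" ] || { echo "file not found: $site" >&2; return 1; }

    cp "$site" "$site.entity.bak" || return 1
    # Write to a temporary file first; "sed -i" differs between GNU and BSD sed.
    sed 's/&#8;//g' "$site.entity.bak" > "$site.tmp" && mv "$site.tmp" "$site"
}

# Usage, then confirm nothing is left (grep prints nothing when the file is clean):
# strip_bad_entity "$HIVE_HOME/conf/hive-site.xml"
# grep -n '&#8;' "$HIVE_HOME/conf/hive-site.xml"
```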

Continuing, the initialization now succeeds

(base) zhangchenguang@cgzhang.local:/Users/zhangchenguang/software/hive-3.1.2 $ schematool -dbType derby -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hadoop-3.2.4/share/hadoop/common/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 3.1.0

Initialization script hive-schema-3.1.0.derby.sql

.

.

.

.

.

.

Initialization script completed

schemaTool completed

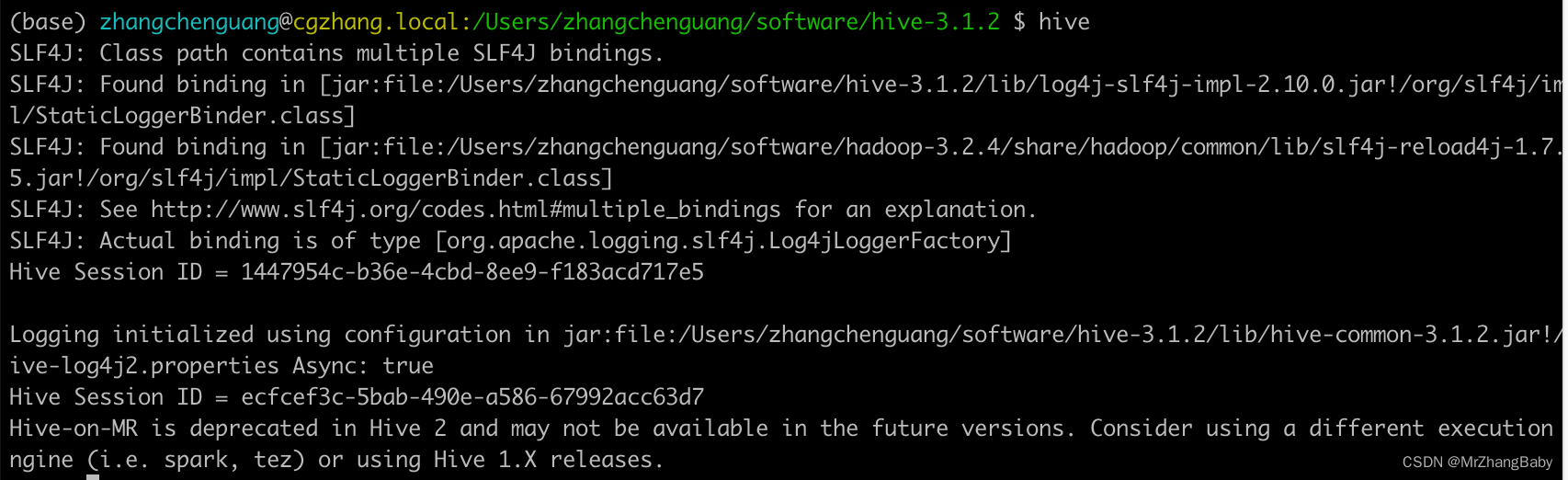
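
Judging by the connection URL in the log (databaseName=metastore_db), the embedded Derby database ends up in a metastore_db directory relative to where the command was run. Under that assumption, a small guard can avoid initializing twice. `init_metastore_if_needed` is a hypothetical helper; only the `schematool -dbType derby -initSchema` call comes from the steps above.

```shell
# Initialize the embedded Derby metastore only when it does not exist yet.
# Argument: directory in which metastore_db should live (default: current directory).
init_metastore_if_needed() {
    work_dir=${1:-.}

    if [ -d "$work_dir/metastore_db" ]; then
        echo "metastore_db already exists in $work_dir, skipping initialization"
        return 0
    fi
    if ! command -v schematool >/dev/null 2>&1; then
        echo "schematool not found on PATH" >&2
        return 1
    fi
    # Run from the target directory so metastore_db is created there.
    (cd "$work_dir" && schematool -dbType derby -initSchema)
}

# Usage with the directory from this post:
# init_metastore_if_needed /Users/zhangchenguang/software/hive-3.1.2
```

Keep in mind that hive then has to be started from the same directory, otherwise it will not find this metastore_db.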

Run hive again

Exception 4 appears

(base) zhangchenguang@cgzhang.local:/Users/zhangchenguang/software/hive-3.1.2 $ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/zhangchenguang/software/hadoop-3.2.4/share/hadoop/common/lib/slf4j-reload4j-1.7.35.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = d5a22c3b-992d-44fe-bef1-35932439f12a

Logging initialized using configuration in jar:file:/Users/zhangchenguang/software/hive-3.1.2/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.fs.Path.initialize(Path.java:263)

at org.apache.hadoop.fs.Path.<init>(Path.java:221)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:710)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:627)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:591)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:747)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at java.net.URI.checkPath(URI.java:1823)

at java.net.URI.<init>(URI.java:745)

at org.apache.hadoop.fs.Path.initialize(Path.java:260)

... 12 more

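How to fix it?

Searching hive-site.xml for "${system:java.io.tmpdir" from the error message shows that it appears in many places, so I tried changing them one by one.

After verification, the adjustments are as follows; the original values are shown first, followed by the modified ones.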

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>${system:java.io.tmpdir}/${system:user.name}/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

.

.

.

<property>

<name>hive.querylog.location</name>

<value>${system:java.io.tmpdir}/${system:user.name}</value>

<description>Location of Hive run time structured log file</description>

</property>

.

.

.

<property>

<name>hive.exec.local.scratchdir</name>

<value>${system:java.io.tmpdir}/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

.

.

.

<property>

<name>hive.downloaded.resources.dir</name>

<value>${system:java.io.tmpdir}/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/Users/zhangchenguang/software/hive-3.1.2/hive_tmp/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

.

.

.

<property>

<name>hive.querylog.location</name>

<value>/Users/zhangchenguang/software/hive-3.1.2/hive_tmp/</value>

<description>Location of Hive run time structured log file</description>

</property>

.

.

.

<property>

<name>hive.exec.local.scratchdir</name>

<value>/Users/zhangchenguang/software/hive-3.1.2/hive_tmp</value>

<description>Local scratch space for Hive jobs</description>

</property>

.

.

.

<property>

<name>hive.downloaded.resources.dir</name>

<value>/Users/zhangchenguang/software/hive-3.1.2/hive_tmp/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>
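
The same substitution can be done in one pass with sed. Note the difference: this sketch replaces every occurrence of the placeholders in the file, not just the four properties edited above, which is my own shortcut. `fix_tmpdir_placeholders` and the `.tmpdir.bak` backup suffix are hypothetical names.

```shell
# Replace the unresolved ${system:...} placeholders in hive-site.xml with a real directory.
# Arguments: path to hive-site.xml, absolute directory to use for temporary files.
fix_tmpdir_placeholders() {
    site=$1
    tmp_dir=$2
    [ -f "$site" ] || { echo "file not found: $site" >&2; return 1; }

    mkdir -p "$tmp_dir" || return 1
    cp "$site" "$site.tmpdir.bak" || return 1

    # First collapse "tmpdir/user.name" into the target directory,
    # then replace any remaining bare "tmpdir" placeholder.
    sed -e 's|\${system:java\.io\.tmpdir}/\${system:user\.name}|'"$tmp_dir"'|g' \
        -e 's|\${system:java\.io\.tmpdir}|'"$tmp_dir"'|g' \
        "$site.tmpdir.bak" > "$site.tmp" && mv "$site.tmp" "$site"
}

# Usage with the directory chosen in this post:
# fix_tmpdir_placeholders "$HIVE_HOME/conf/hive-site.xml" /Users/zhangchenguang/software/hive-3.1.2/hive_tmp
```

The target directory must not contain the characters | or &, since they have a special meaning in the sed expressions.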

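Run hive again

This time it starts successfully

📢 Note: this only works if Hadoop has been installed beforehand.

Verification

Create a database, create a table, write data, and query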

show databases;

create database test20230713;

use test20230713;

create table test_1(name string);

insert into test_1 select "hello,hive!";

select * from test_1;

The results are as follows

hive> show databases;

OK

default

Time taken: 0.035 seconds, Fetched: 1 row(s)

hive> create database test20230713;

OK

Time taken: 0.097 seconds

hive> use test20230713;

OK

Time taken: 0.019 seconds

hive> create table test_1(name string);

OK

Time taken: 0.428 seconds

hive> insert into test_1 select "hello,hive!";

Query ID = zhangchenguang_20230713183204_881b9cda-9aca-402f-a922-70891bc7be11

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1689240208463_0002, Tracking URL = http://localhost:8088/proxy/application_1689240208463_0002/

Kill Command = /Users/zhangchenguang/software/hadoop-3.2.4/bin/mapred job -kill job_1689240208463_0002

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2023-07-13 18:32:13,681 Stage-1 map = 0%, reduce = 0%

2023-07-13 18:32:18,889 Stage-1 map = 100%, reduce = 0%

2023-07-13 18:32:23,027 Stage-1 map = 100%, reduce = 100%

Ended Job = job_1689240208463_0002

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://cgzhang.local:9000/user/hive/warehouse/test20230713.db/test_1/.hive-staging_hive_2023-07-13_18-32-04_250_407082641911746305-1/-ext-10000

Loading data to table test20230713.test_1

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 HDFS Read: 12393 HDFS Write: 222 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

Time taken: 20.266 seconds

hive> select * from test_1;

OK

hello,hive!

Time taken: 0.127 seconds, Fetched: 1 row(s)
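
The verification statements above can also be run without typing them into the prompt, using the `-f` option of the hive command. A sketch under a few assumptions: the file name hive_smoke_test.sql is made up, and I added `if not exists` so the script can be rerun (the insert will still add another row each time).

```shell
# Write the verification statements to a file and run them with "hive -f" when available.
sql_file=${TMPDIR:-/tmp}/hive_smoke_test.sql

cat > "$sql_file" <<'EOF'
show databases;
create database if not exists test20230713;
use test20230713;
create table if not exists test_1(name string);
insert into test_1 select "hello,hive!";
select * from test_1;
EOF

if command -v hive >/dev/null 2>&1; then
    hive -f "$sql_file"
else
    echo "hive not found on PATH; statements saved to $sql_file"
fi
```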