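I. Preface

I recently needed to implement screen recording. After going through a lot of material online, FFmpeg turned out to be the most reliable option, but I still hit plenty of pitfalls: FFmpeg version mismatches, build problems in VS2019, and my own unfamiliarity with FFmpeg, which made much of the sample code hard to follow. Getting screen recording to work in a style I was comfortable with took real effort.

If you've landed here, stick around; this should help.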

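II. Environment

VS2019 + Qt5 + FFmpeg 4.2.2

The FFmpeg version matters: many functions are not interchangeable between versions. The code in this post relies on APIs such as av_register_all() and avcodec_encode_video2(), which are deprecated in 4.x and no longer available in FFmpeg 5.0 and later.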
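To make the version point concrete: each FFmpeg library encodes its version as a single integer (the AV_VERSION_INT macro packs it as major<<16 | minor<<8 | micro), so versions can be compared numerically. The sketch below mirrors that packing in standalone C++ so it can be tried without FFmpeg. `packVersion`, `unpackVersion`, `atLeast`, and the `Version` struct are hypothetical helper names of mine, not FFmpeg functions; in a real project the packed value would come from `avcodec_version()` at run time or `LIBAVCODEC_VERSION_INT` at compile time.

```cpp
#include <cstdint>

// Mirrors the layout of FFmpeg's AV_VERSION_INT(a, b, c):
// major in bits 16 and up, minor in bits 8..15, micro in bits 0..7.
constexpr std::uint32_t packVersion(unsigned majorPart, unsigned minorPart, unsigned microPart) {
    return (majorPart << 16) | (minorPart << 8) | microPart;
}

// Hypothetical holder for the three components of a library version.
struct Version {
    unsigned majorPart;
    unsigned minorPart;
    unsigned microPart;
};

// Splits a packed version back into its components.
constexpr Version unpackVersion(std::uint32_t packed) {
    return Version{ packed >> 16, (packed >> 8) & 0xFFu, packed & 0xFFu };
}

// True when the packed version is at least majorPart.minorPart.microPart.
constexpr bool atLeast(std::uint32_t packed, unsigned majorPart, unsigned minorPart, unsigned microPart) {
    return packed >= packVersion(majorPart, minorPart, microPart);
}
```

For example, FFmpeg 4.2.x ships libavcodec 58.54.100 as far as I know, so `atLeast(packVersion(58, 54, 100), 58, 0, 0)` is true, while a 57.x build would fail the same check. A guard like this lets one code base pick between old and new APIs instead of silently assuming one version.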

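0. Checking the FFmpeg version:

extern "C"

{

// av_version_info() is declared in avutil.h; version.h only defines the version macros

#include "libavutil/avutil.h"

}

const char* versionInfo = av_version_info();

qDebug() << "FFmpeg Version: " << versionInfo;

1. Configuring FFmpeg in VS2019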

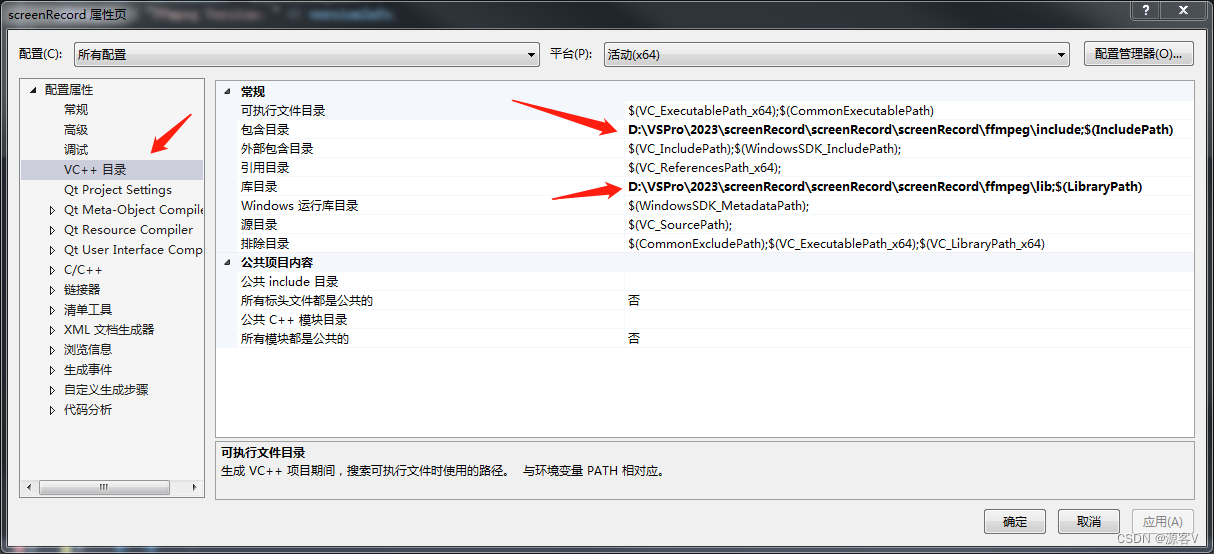

Right-click the project -> Properties -> VC++ Directories, then add FFmpeg's include and lib directories to Include Directories and Library Directories.

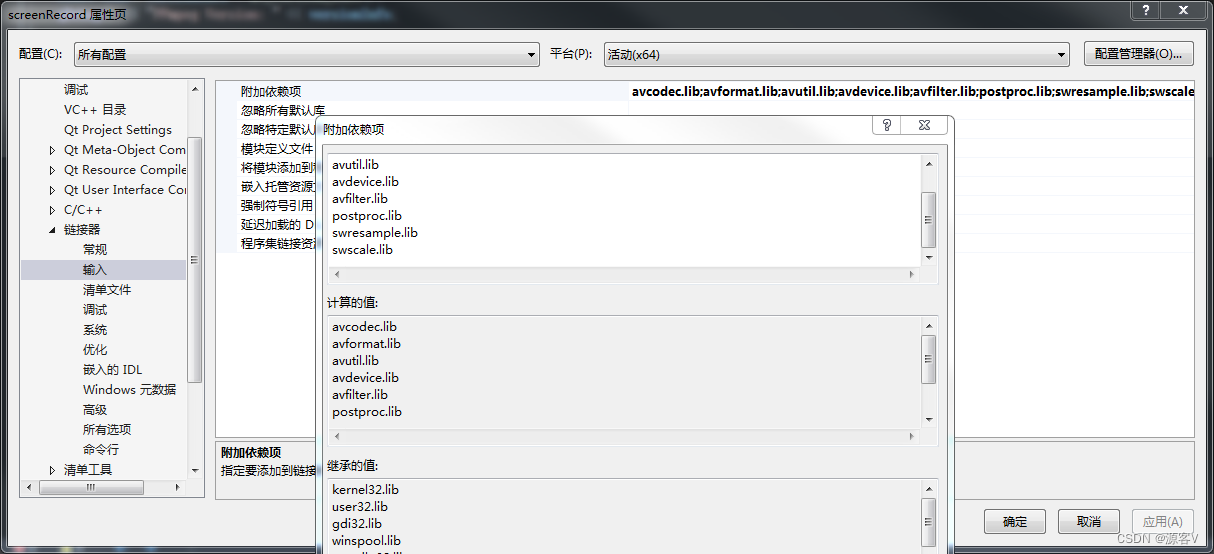

Right-click the project -> Properties -> Linker -> Input -> Additional Dependencies, and add: avcodec.lib, avformat.lib, avutil.lib, avdevice.lib, avfilter.lib, postproc.lib, swresample.lib, swscale.lib
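As an alternative to the Additional Dependencies dialog, MSVC can also take the library list from source code via `#pragma comment(lib, ...)`. This is a minimal sketch, assuming the same library names as above and that the library directory is still configured so the linker can find them. The `_MSC_VER` guard is my addition so that other compilers skip the block.

```cpp
// Ask the MSVC linker to pull in the FFmpeg import libraries.
// Equivalent to listing them under Linker -> Input -> Additional Dependencies.
#ifdef _MSC_VER
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "avdevice.lib")
#pragma comment(lib, "avfilter.lib")
#pragma comment(lib, "postproc.lib")
#pragma comment(lib, "swresample.lib")
#pragma comment(lib, "swscale.lib")
#endif
```

Keeping the list in one source file makes the dependency visible in version control, at the cost of tying that file to MSVC.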

III. Implementation

1. main.cpp

#include "screenRecord.h"

#include <QtWidgets/QApplication>

int main(int argc, char *argv[])

{

QApplication a(argc, argv);

screenRecord w;

w.show();

return a.exec();

}

2. screenRecord.h

#pragma once

#pragma execution_character_set("utf-8")

#include <QtWidgets/QMainWindow>

#include "ui_screenRecord.h"

#include "ffmpegVideoThread.h"

#include <QDateTime>

#include <QTimer>

#include <QFileDialog>

#include <QMessageBox>

class screenRecord : public QMainWindow

{

Q_OBJECT

public:

screenRecord(QWidget *parent = nullptr);

~screenRecord();

private slots:

void on_pushButton_startRecord_clicked();

void on_pushButton_savePath_clicked();

void slotShowTime();

private:

Ui::screenRecordClass ui;

ffmpegVideoThread* m_ffmpegVideoThread;

bool hasScreenRecord = false;

QTimer* m_timer;

int _h, _m, _s;

};

3. screenRecord.cpp

#include "screenRecord.h"

screenRecord::screenRecord(QWidget *parent)

: QMainWindow(parent)

{

ui.setupUi(this);

this->setWindowTitle("录屏");

this->setWindowFlags(Qt::WindowCloseButtonHint);

ui.pushButton_startRecord->setEnabled(false);

m_timer = new QTimer(this); // parent the timer to the window so it is destroyed with it

connect(m_timer, &QTimer::timeout, this, &screenRecord::slotShowTime);

_h = 0;

_m = 0;

_s = 0;

}

screenRecord::~screenRecord()

{}

void screenRecord::on_pushButton_startRecord_clicked()

{

if (ui.pushButton_startRecord->text() == "开始录屏") {

ui.pushButton_startRecord->setText("停止录屏");

ui.pushButton_savePath->setEnabled(false);

// Start recording

m_ffmpegVideoThread = new ffmpegVideoThread();

connect(m_ffmpegVideoThread, &ffmpegVideoThread::sigRecordError, this, [=](QString errorStr) {

QMessageBox::warning(this, "错误", errorStr, "确定");

m_timer->stop();

});

// Set the output directory before starting the thread, so run() never reads an unset path

m_ffmpegVideoThread->setOutMpeFileName(ui.lineEdit->text().trimmed());

m_ffmpegVideoThread->start();

hasScreenRecord = true;

_h = 0;

_m = 0;

_s = 0;

if (m_timer->isActive())

m_timer->stop();

m_timer->start(1000);

}

else {

ui.pushButton_savePath->setEnabled(true);

ui.pushButton_startRecord->setEnabled(true);

ui.pushButton_startRecord->setText("开始录屏");

// Stop recording

m_ffmpegVideoThread->setStoped(true);

m_ffmpegVideoThread->quitThread();

m_ffmpegVideoThread->quit();

m_ffmpegVideoThread->wait();

m_ffmpegVideoThread->deleteLater();

m_ffmpegVideoThread = NULL;

hasScreenRecord = false;

m_timer->stop();

}

}

void screenRecord::on_pushButton_savePath_clicked()

{

QString dirpath = QFileDialog::getExistingDirectory(this, "选择目录", "./", QFileDialog::ShowDirsOnly);

ui.lineEdit->setText(dirpath);

ui.pushButton_startRecord->setEnabled(true);

}

void screenRecord::slotShowTime()

{

_s++;

if (_s >= 60) {

_m++;

_s = 0;

}

if (_m >= 60) {

_h++;

_m = 0;

}

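// Zero-pad each field so the label reads 00:01:05 rather than 0:1:5

QString showTime = QString("%1:%2:%3").arg(_h, 2, 10, QLatin1Char('0')).arg(_m, 2, 10, QLatin1Char('0')).arg(_s, 2, 10, QLatin1Char('0'));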

ui.label_recordTime->setText(showTime);

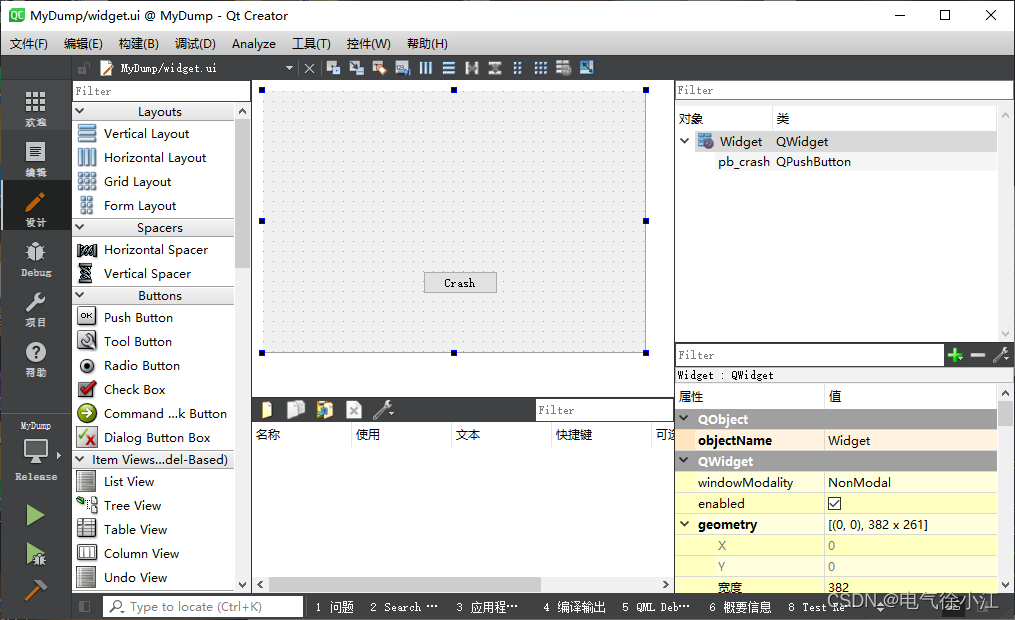

}4、screenRecord.ui

5. ffmpegVideoThread.h

#pragma once

#pragma execution_character_set("utf-8")

#include <QThread>

#include <QImage>

#include <QDebug>

#include <QDateTime>

#include <stdio.h>

#include <tchar.h>

#include <Windows.h>

#include <conio.h>

#include <SDKDDKVer.h>

extern "C"

{

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libswscale/swscale.h"

#include "libavdevice/avdevice.h"

#include "libavutil/audio_fifo.h"

#include "libavutil/version.h"

};

class ffmpegVideoThread : public QThread

{

Q_OBJECT

public:

ffmpegVideoThread(QObject* parent = Q_NULLPTR);

~ffmpegVideoThread();

void setStoped(bool);

void quitThread();

void setOutMpeFileName(QString outFileName);

signals:

void sigRecordError(QString);

protected:

virtual void run();

private:

bool stopped = false;

bool quited = false;

QString m_outFileName;

//ffmpeg

AVFormatContext* pFormatCtx_Video = NULL,* pFormatCtx_Out = NULL;

AVCodecContext* pCodecCtx_Video;

AVCodec* pCodec_Video;

AVFifoBuffer* fifo_video = NULL;

int VideoIndex;

CRITICAL_SECTION VideoSection;

SwsContext* img_convert_ctx;

int frame_size = 0;

uint8_t* picture_buf = NULL, * frame_buf = NULL;

bool bCap = true;

static DWORD WINAPI ScreenCapThreadProc(LPVOID lpParam);

int OpenVideoCapture();

int OpenOutPut(const char* outFileName);

};

6. ffmpegVideoThread.cpp (partial)

Only part of ffmpegVideoThread.cpp is published here; message me privately if you need the rest.
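Before the listing, a note on the timestamp arithmetic that run() relies on: each frame's pts is the frame index multiplied by (den / num) / 15 ticks of the capture stream's time base, and the encoded packet is then rescaled into the output stream's time base. The sketch below reproduces that arithmetic in plain C++ so it can be tried without FFmpeg. `TimeBase`, `ticksPerFrame`, and `rescale` are hypothetical names of mine; `rescale` is only a simplified stand-in for av_rescale_q_rnd (non-negative values, round to nearest, no overflow handling), and the 1/1000000 and 1/90000 time bases used in the example are assumed values for illustration.

```cpp
#include <cstdint>

// Hypothetical stand-in for an FFmpeg-style rational time base:
// one tick lasts num/den seconds.
struct TimeBase {
    int num;
    int den;
};

// Ticks per frame at a constant frame rate, using the same integer
// expression as run(): (den / num) / fps. Both divisions truncate.
inline std::int64_t ticksPerFrame(TimeBase tb, int fps) {
    return (static_cast<std::int64_t>(tb.den) / tb.num) / fps;
}

// Converts a timestamp from one time base to another, rounding to nearest.
// Simplified: assumes ts >= 0 and that the intermediate product fits in 64 bits.
inline std::int64_t rescale(std::int64_t ts, TimeBase from, TimeBase to) {
    const std::int64_t numerator = static_cast<std::int64_t>(from.num) * to.den;
    const std::int64_t denominator = static_cast<std::int64_t>(from.den) * to.num;
    return (ts * numerator + denominator / 2) / denominator;
}
```

With an assumed 1/1000000 capture time base, `ticksPerFrame` gives 66666 rather than 66666.67, so frame 30 (nominally 2 s at 15 fps) lands at 1999980 ticks and rescales to 179998 instead of 180000 in a 1/90000 time base. The drift is small, but it is a reason to consider computing the pts as frameIndex * den / (num * fps) in a single step.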

void ffmpegVideoThread::run()

{

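// av_register_all() is deprecated and no longer required since FFmpeg 4.0; it still compiles with 4.2.2

av_register_all();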

avdevice_register_all();

if (OpenVideoCapture() < 0)

{

emit sigRecordError("视频捕获打开错误!");

return;

}

if (m_outFileName.isEmpty())

m_outFileName = "./";

QDateTime current_date_time = QDateTime::currentDateTime();

QString current_date = current_date_time.toString("/yyyyMMdd_hhmmss");

QString SaveFile = m_outFileName + current_date + ".mp4";

if (OpenOutPut(SaveFile.toStdString().c_str()) < 0)

{

emit sigRecordError("不能打开输出文件句柄!\n输出文件:" + SaveFile);

return;

}

InitializeCriticalSection(&VideoSection);

AVFrame* picture = av_frame_alloc();

int size = avpicture_get_size(pFormatCtx_Out->streams[VideoIndex]->codec->pix_fmt,

pFormatCtx_Out->streams[VideoIndex]->codec->width, pFormatCtx_Out->streams[VideoIndex]->codec->height);

picture_buf = new uint8_t[size];

avpicture_fill((AVPicture*)picture, picture_buf,

pFormatCtx_Out->streams[VideoIndex]->codec->pix_fmt,

pFormatCtx_Out->streams[VideoIndex]->codec->width,

pFormatCtx_Out->streams[VideoIndex]->codec->height);

// Start the screen-capture thread

CreateThread(NULL, 0, ScreenCapThreadProc, this, 0, NULL);

int64_t cur_pts_v = 0, cur_pts_a = 0;

int VideoFrameIndex = 0, AudioFrameIndex = 0;

while (!quited)

{

while (!stopped) {

// Read data from the FIFO

if (av_fifo_size(fifo_video) < frame_size && !bCap)

{

cur_pts_v = 0x7fffffffffffffff;

}

if (av_fifo_size(fifo_video) >= size)

{

EnterCriticalSection(&VideoSection);

av_fifo_generic_read(fifo_video, picture_buf, size, NULL);

LeaveCriticalSection(&VideoSection);

avpicture_fill((AVPicture*)picture, picture_buf,

pFormatCtx_Out->streams[VideoIndex]->codec->pix_fmt,

pFormatCtx_Out->streams[VideoIndex]->codec->width,

pFormatCtx_Out->streams[VideoIndex]->codec->height);

picture->pts = VideoFrameIndex * ((pFormatCtx_Video->streams[0]->time_base.den / pFormatCtx_Video->streams[0]->time_base.num) / 15);

int got_picture = 0;

AVPacket pkt;

av_init_packet(&pkt);

pkt.data = NULL;

pkt.size = 0;

int ret = avcodec_encode_video2(pFormatCtx_Out->streams[VideoIndex]->codec, &pkt, picture, &got_picture);

if (ret < 0)

{

// Encoding error: skip this frame

continue;

}

if (got_picture == 1)

{

pkt.stream_index = VideoIndex;

pkt.pts = av_rescale_q_rnd(pkt.pts, pFormatCtx_Video->streams[0]->time_base,

pFormatCtx_Out->streams[VideoIndex]->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

pkt.dts = av_rescale_q_rnd(pkt.dts, pFormatCtx_Video->streams[0]->time_base,

pFormatCtx_Out->streams[VideoIndex]->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

pkt.duration = ((pFormatCtx_Out->streams[0]->time_base.den / pFormatCtx_Out->streams[0]->time_base.num) / 15);

cur_pts_v = pkt.pts;

ret = av_interleaved_write_frame(pFormatCtx_Out, &pkt);

av_packet_unref(&pkt); // replaces the deprecated av_free_packet()

}

VideoFrameIndex++;

}

else

{

Sleep(1); // no complete frame in the FIFO yet: yield instead of spinning at full CPU

}

}

bCap = false;

Sleep(2000); // Wait for the capture thread to exit

}

delete[] picture_buf;

av_fifo_free(fifo_video);

av_write_trailer(pFormatCtx_Out);

avio_close(pFormatCtx_Out->pb);

avformat_free_context(pFormatCtx_Out);

if (pFormatCtx_Video != NULL)

{

avformat_close_input(&pFormatCtx_Video);

pFormatCtx_Video = NULL;

}

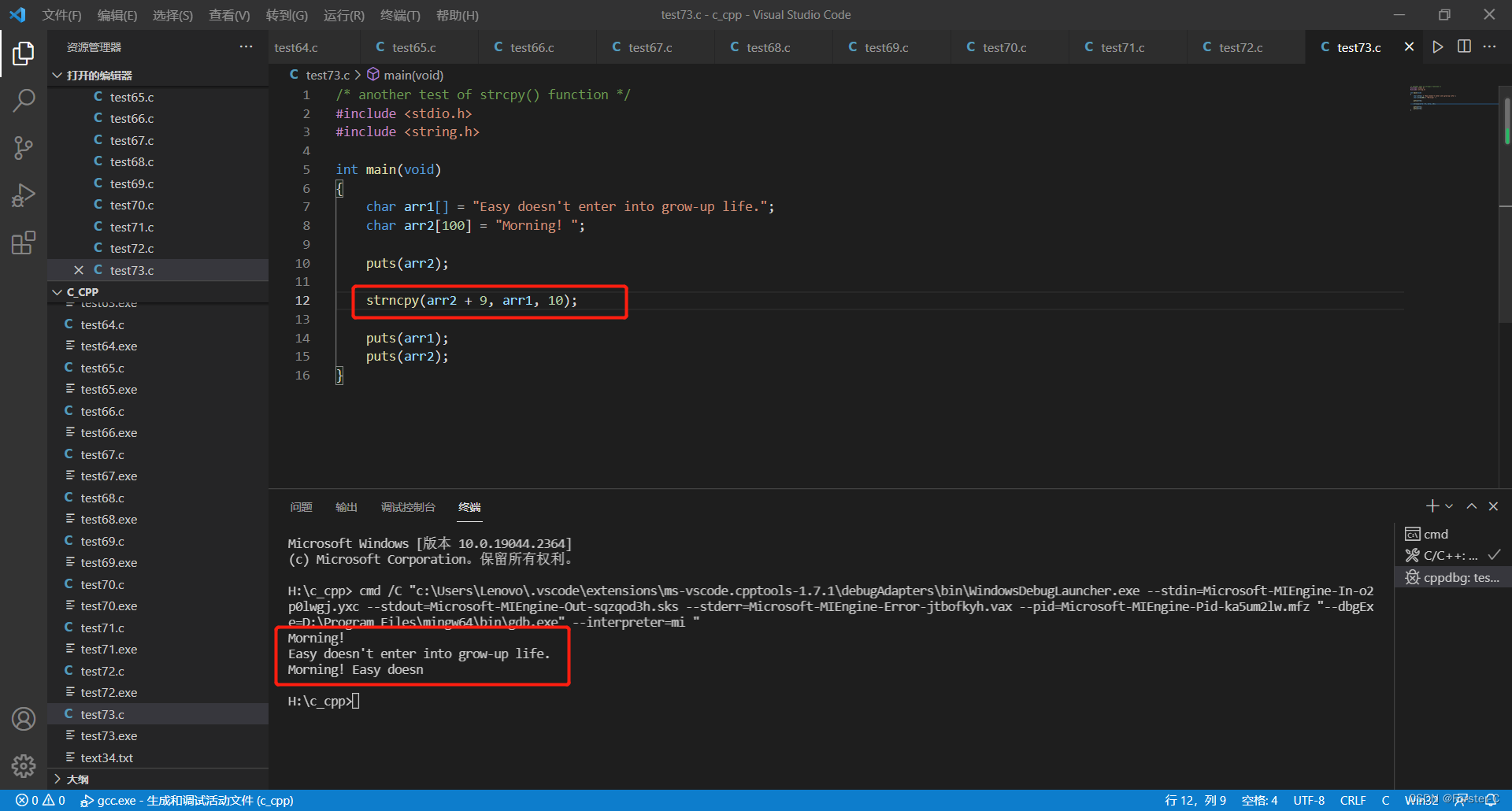

}四、运行效果

Screen recording with FFmpeg

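V. Closing notes

A prebuilt FFmpeg 4.2.2 package can be used directly; there is no need to compile it yourself.

I'm not very familiar with FFmpeg and only needed something that runs reliably. I spent a lot of time on these pitfalls, so I'm recording them here.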