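Continuing from the previous post, which covered converting masks into the txt dataset format that YOLOv5 needs for training, this post builds on that to cover model training, prediction, and export for deployment.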

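Contents

1. Environment setup

2. YOLO training

2.1 Dataset preparation

2.2 Preparing data.yaml

2.3 Preparing yolov5.yaml

2.4 Training commands

3. YOLO prediction

3.1 YOLOv5 PyTorch Hub prediction

3.2 Command-line prediction

4. torch2onnx: exporting the model for deployment

5. ONNX model inference

6. ONNX model validation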

Shortcut to the dataset post: Making a YOLO-format dataset

1. Environment setup

Version requirements: python>=3.7

pytorch>=1.7

The other required packages can be installed directly from requirements.txt:

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

2. YOLO training

2.1 Dataset preparation

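rootpath

--images

----1.png

----2.png

----...

--labels

----1.txt

----2.txt

----...

The images folder needs little explanation: it holds the original training images, in a format YOLOv5 can read, such as png or jpg.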
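The layout above pairs each image with a label file of the same name. As a minimal sketch for checking that pairing before training, the helper below lists file stems that appear on only one side. The function name `find_unpaired` and the restriction to png and jpg are assumptions made for illustration, not part of YOLOv5.

```python
from pathlib import Path

# Image formats named in the text above; extend this set if you use others.
IMAGE_SUFFIXES = {".png", ".jpg"}


def find_unpaired(root):
    """Return (images_without_label, labels_without_image) as sorted stem lists.

    Assumes the layout root/images/<stem>.png|jpg and root/labels/<stem>.txt.
    """
    root = Path(root)
    image_stems = {
        p.stem
        for p in (root / "images").iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES
    }
    label_stems = {p.stem for p in (root / "labels").glob("*.txt")}
    return sorted(image_stems - label_stems), sorted(label_stems - image_stems)
```

An image without a label file is not necessarily a mistake, since YOLOv5 treats such images as background, but a label file without an image usually points to a naming problem.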

The files in labels can be in one of two formats.

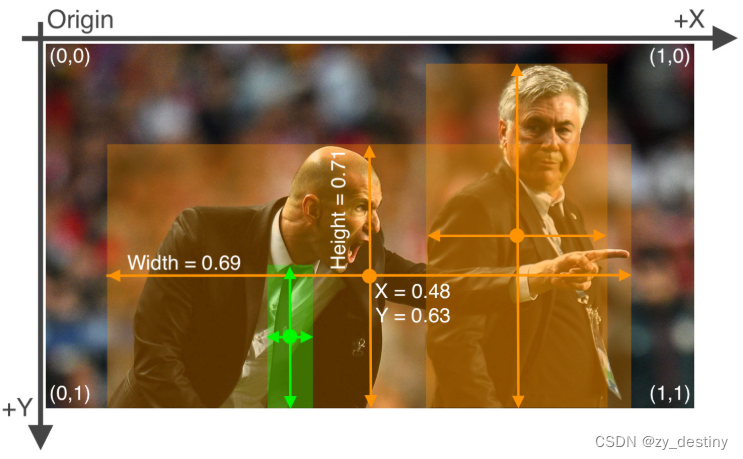

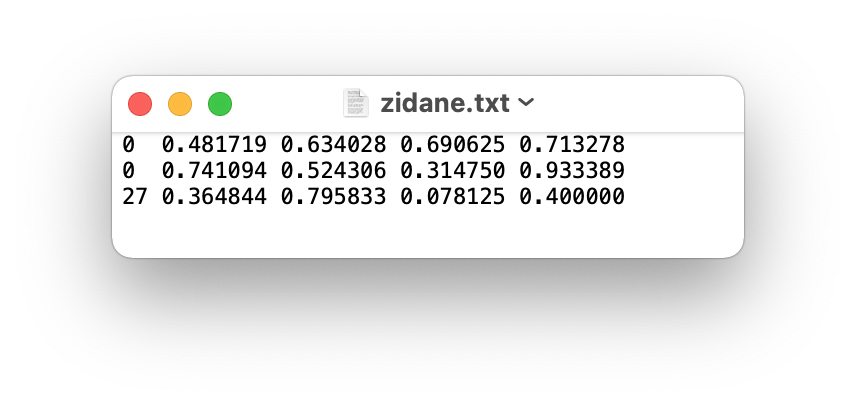

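- For an object detection task, the labels use the standard YOLO detection format: 5 columns, where column 1 holds the class of each object and columns 2-5 hold the center coordinates, width, and height (center_x,center_y,wid,hig).

Note: the coordinates must be normalized to the range 0-1, i.e. x values and widths in pixels are divided by the image width, and y values and heights in pixels by the image height:

# center_x_pixl, center_y_pixl, width_pixl, higth_pixl: the center point in pixel coordinates and the bbox width and height in pixels

# center_x, center_y, wid, hig: the normalized values, i.e. what is written in the txt file

# width, higth: the width and height of the original image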

center_x = center_x_pixl / width

wid = width_pixl / width

center_y = center_y_pixl / higth

hig = higth_pixl / higth

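- For a segmentation task, column 1 still holds the class of each object, as in detection. The difference is that all the remaining columns hold the coordinates of the boundary points (x1,y1, x2,y2, ......, xn,yn), which together form one polygon per object. These boundary coordinates are also normalized, in the same way as for detection.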
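The two label formats above can be sketched in Python as follows. This is only an illustration of the normalization described in the text; the function names `bbox_to_yolo_line` and `polygon_to_yolo_line` and the six-decimal formatting are assumptions, not something YOLOv5 requires.

```python
def bbox_to_yolo_line(class_id, center_x_px, center_y_px, box_w_px, box_h_px,
                      img_w, img_h):
    """Format one detection label: class id, then normalized center and size."""
    values = (
        center_x_px / img_w,  # center_x
        center_y_px / img_h,  # center_y
        box_w_px / img_w,     # wid
        box_h_px / img_h,     # hig
    )
    return " ".join([str(class_id)] + [f"{v:.6f}" for v in values])


def polygon_to_yolo_line(class_id, points_px, img_w, img_h):
    """Format one segmentation label: class id, then normalized x,y pairs."""
    if len(points_px) < 3:
        raise ValueError("a polygon needs at least 3 boundary points")
    coords = []
    for x_px, y_px in points_px:
        coords.append(f"{x_px / img_w:.6f}")  # x is divided by the image width
        coords.append(f"{y_px / img_h:.6f}")  # y is divided by the image height
    return " ".join([str(class_id)] + coords)


# Example: a 64x48 box centered in a 640x480 image, and a triangle.
print(bbox_to_yolo_line(0, 320, 240, 64, 48, 640, 480))
print(polygon_to_yolo_line(1, [(0, 0), (320, 0), (320, 240)], 640, 480))
```

Each returned string is one line of a label txt file; an image with several objects gets one such line per object.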

2.2 Preparing data.yaml

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: ../datasets/coco128 # dataset root dir

train: images/train2017 # train images (relative to 'path') 128 images

val: images/train2017 # val images (relative to 'path') 128 images

test: # test images (optional)

# Classes (80 COCO classes)

names:

  0: person

  1: bicycle

  2: car

  ...

  77: teddy bear

  78: hair drier

  79: toothbrush

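path: the dataset root directory

train: the training image folder, relative to path

val: the validation image folder, relative to path

names: the mapping from class index to class name; the indices must start from 0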
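For a custom dataset, the same four fields can be written out by a short script. The sketch below builds the YAML text by hand to stay dependency-free; the function name `build_data_yaml` and the example paths and class names are hypothetical, and class names containing YAML special characters would need quoting that this sketch does not handle.

```python
def build_data_yaml(path, train, val, names):
    """Return data.yaml text with class indices starting from 0."""
    lines = [
        f"path: {path}",    # dataset root dir
        f"train: {train}",  # train images, relative to path
        f"val: {val}",      # val images, relative to path
        "names:",
    ]
    for index, name in enumerate(names):
        lines.append(f"  {index}: {name}")  # entries are indented under names
    return "\n".join(lines) + "\n"


# Hypothetical two-class dataset.
print(build_data_yaml("../datasets/mydata", "images/train", "images/val",
                      ["cat", "dog"]))
```

Using `enumerate` keeps the indices contiguous from 0, which is the constraint noted above.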

2.3 Preparing yolov5.yaml

Taking yolov5s as an example, the only thing that really needs to change is the number of classes (nc).

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

  # [from, number, module, args]

  [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

   [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

   [-1, 3, C3, [128]],

   [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

   [-1, 6, C3, [256]],

   [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

   [-1, 9, C3, [512]],

   [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

   [-1, 3, C3, [1024]],

   [-1, 1, SPPF, [1024, 5]], # 9

  ]

# YOLOv5 v6.0 head

head:

  [[-1, 1, Conv, [512, 1, 1]],

   [-1, 1, nn.Upsample, [None, 2, 'nearest']],

   [[-1, 6], 1, Concat, [1]], # cat backbone P4

   [-1, 3, C3, [512, False]], # 13

   [-1, 1, Conv, [256, 1, 1]],

   [-1, 1, nn.Upsample, [None, 2, 'nearest']],

   [[-1, 4], 1, Concat, [1]], # cat backbone P3

   [-1, 3, C3, [256, False]], # 17 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],

   [[-1, 14], 1, Concat, [1]], # cat head P4

   [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],

   [[-1, 10], 1, Concat, [1]], # cat head P5

   [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

   [[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

  ]

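Just change nc to the total number of classes in your own dataset; nothing else needs to change. Class 0 counts as a class too: for example, if the classes are 0 and 1, nc is 2.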
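If you are unsure what nc should be, one option is to derive it from the label files. The sketch below takes the largest class id found and adds 1, following the rule above that class 0 counts. The function name `count_classes` is an assumption, and the result is only correct if class ids are contiguous from 0 and the highest class appears at least once.

```python
from pathlib import Path


def count_classes(labels_dir):
    """Return max class id + 1 over all label txt files (0 if none found)."""
    max_id = -1
    for label_file in Path(labels_dir).glob("*.txt"):
        for line in label_file.read_text().splitlines():
            fields = line.split()
            if fields:  # skip blank lines
                max_id = max(max_id, int(fields[0]))  # column 1 is the class
    return max_id + 1
```

Calling `count_classes("rootpath/labels")` on the layout from section 2.1 would then give the value to put in nc.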

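2.4 Training commands

- Single-GPU training

python train.py --batch 64 --data coco.yaml --weights yolov5s.pt --device 0

- Multi-GPU training

python -m torch.distributed.run --nproc_per_node 2 train.py --batch 64 --data coco.yaml --weights yolov5s.pt --device 0,1

For your own dataset, pass the data.yaml from 2.2 to --data and, if you edited the model yaml in 2.3, pass it to --cfg.

Training results are written to an exp folder under runs/train/. Rerunning with the same arguments creates additional exp folders (exp2, exp3, ...) instead of overwriting earlier ones.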
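Because every run gets its own exp folder, it helps to locate the most recent one programmatically. The sketch below picks the folder with the highest numeric suffix, assuming the default naming exp, exp2, exp3, ... (a custom --name would change this). The function name `latest_exp` is hypothetical.

```python
import re
from pathlib import Path


def latest_exp(train_root="runs/train"):
    """Return the exp directory with the highest run number, or None."""
    latest, latest_index = None, -1
    for candidate in Path(train_root).glob("exp*"):
        match = re.fullmatch(r"exp(\d*)", candidate.name)
        if not (candidate.is_dir() and match):
            continue  # ignore files and unrelated names such as "expfoo"
        index = int(match.group(1) or 1)  # plain "exp" is the first run
        if index > latest_index:
            latest, latest_index = candidate, index
    return latest
```

The trained weights of that run are then at `latest_exp() / "weights" / "best.pt"`, which is the file to pass to --weights for prediction or export.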

3. YOLO prediction

Inference can be run in two ways: through PyTorch Hub, or with the detect.py script from the command line.

3.1 YOLOv5 PyTorch Hub prediction

import torch

# Model

model = torch.hub.load("ultralytics/yolov5", "yolov5s") # or yolov5n - yolov5x6, custom

# Images

img = "https://ultralytics.com/images/zidane.jpg" # or file, Path, PIL, OpenCV, numpy, list

# Inference

results = model(img)

# Results

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

3.2 Command-line prediction

python detect.py --weights yolov5s.pt --source 0 # webcam

                                               img.jpg # image

                                               vid.mp4 # video

                                               screen # screenshot

                                               path/ # directory

                                               list.txt # list of images

                                               list.streams # list of streams

                                               'path/*.jpg' # glob

                                               'https://youtu.be/Zgi9g1ksQHc' # YouTube

                                               'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream

Each line above is an alternative value for --source. Results are saved automatically under runs/detect.

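4. torch2onnx: exporting the model for deployment

Export your trained model to ONNX format for easier deployment.

python export.py --weights yolov5s.pt --include torchscript onnx

Note: adding the --half flag exports the model in half precision (FP16), which makes the exported file smaller. In YOLOv5's export.py this needs a GPU device, e.g. --device 0.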
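The reason --half shrinks the file is that each weight is stored in 2 bytes (FP16) instead of 4 (FP32). A back-of-the-envelope sketch follows; the parameter count is a hypothetical round number, not a measured value for any particular model, and a real exported file also contains graph metadata that this estimate ignores.

```python
def weights_size_mb(num_params, bytes_per_param):
    """Approximate storage for the weights alone, in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


num_params = 7_000_000  # hypothetical parameter count, for illustration only
fp32_mb = weights_size_mb(num_params, 4)  # full precision: 4 bytes per weight
fp16_mb = weights_size_mb(num_params, 2)  # half precision: 2 bytes per weight
print(f"FP32: {fp32_mb:.1f} MB, FP16: {fp16_mb:.1f} MB")
```

The weights take about half the space in FP16, so the exported file should be roughly half the size.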

5. ONNX model inference

python detect.py --weights yolov5s.pt # PyTorch

                           yolov5s.torchscript # TorchScript

                           yolov5s.onnx # ONNX Runtime or OpenCV DNN with --dnn

                           yolov5s_openvino_model # OpenVINO

                           yolov5s.engine # TensorRT

                           yolov5s.mlmodel # CoreML (macOS only)

                           yolov5s_saved_model # TensorFlow SavedModel

                           yolov5s.pb # TensorFlow GraphDef

                           yolov5s.tflite # TensorFlow Lite

                           yolov5s_edgetpu.tflite # TensorFlow Edge TPU

                           yolov5s_paddle_model # PaddlePaddle

6. ONNX model validation

python val.py --weights yolov5s.pt # PyTorch

                        yolov5s.torchscript # TorchScript

                        yolov5s.onnx # ONNX Runtime or OpenCV DNN with --dnn

                        yolov5s_openvino_model # OpenVINO

                        yolov5s.engine # TensorRT

                        yolov5s.mlmodel # CoreML (macOS only)

                        yolov5s_saved_model # TensorFlow SavedModel

                        yolov5s.pb # TensorFlow GraphDef

                        yolov5s.tflite # TensorFlow Lite

                        yolov5s_edgetpu.tflite # TensorFlow Edge TPU

                        yolov5s_paddle_model # PaddlePaddle