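Contents

1--Preface

2--Initializing the VGG16 model

3--Modifying a specific layer

4--Adding a specific layer

5--Deleting a specific layer

6--Freezing the pretrained weights

7--Putting it all together

1--Preface

This post uses PyTorch and takes the VGG16 model as the running example.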

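2--Initializing the VGG16 model

from torchvision import models

# Initialize the model
# pretrained=True loads the ImageNet weights; newer torchvision releases
# deprecate this flag in favor of weights=models.VGG16_Weights.DEFAULT
vgg16 = models.vgg16(pretrained=True)

# Print the model
print(vgg16)

The original VGG16 model prints as follows: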

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

)
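The printout above determines how layers are addressed in the rest of this post: sub-modules are reached by attribute name and index (for example `classifier[6]`), and parameters get dotted names such as `classifier.6.weight`. The following is a minimal sketch of that naming scheme. `TinyNet` and its layer sizes are hypothetical stand-ins, chosen so the example runs without downloading VGG16 weights.

```python
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical toy model that mimics VGG's features/classifier layout."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 4, kernel_size=3, padding=1),
            nn.ReLU(),
        )
        self.classifier = nn.Sequential(nn.Linear(4, 2))


net = TinyNet()

# Top-level blocks, in registration order
child_names = [name for name, _ in net.named_children()]

# Dotted parameter names: <block>.<index>.<weight|bias>
param_names = [name for name, _ in net.named_parameters()]

# Index into a Sequential to reach one layer
last_layer = net.classifier[0]

print(child_names)
print(param_names)
print(last_layer.in_features)
```

Layers without parameters (such as ReLU) do not appear in `named_parameters()`, which is why the parameter indices in VGG16 skip numbers.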

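3--Modifying a specific layer

As an example, replace the last layer of VGG16 so that the model outputs 120 classes:

from torchvision import models
import torch

# Initialize the model
vgg16 = models.vgg16(pretrained=True)
# print(vgg16)

# Replace a specific layer
num_fc = vgg16.classifier[6].in_features  # input dimension of the last layer
num_class = 120  # number of classes
vgg16.classifier[6] = torch.nn.Linear(num_fc, num_class)  # new last layer with 120 outputs

print(vgg16)

The modified VGG16 model prints as follows; the vgg16.classifier[6] layer now has 120 output classes: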

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=120, bias=True)

)

)

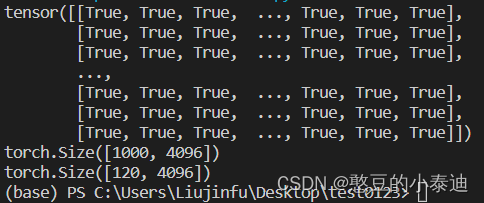

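Compare the parameter values of the model before and after the modification:

from torchvision import models
import torch

# Initialize two copies of the model
vgg16_1 = models.vgg16(pretrained=True)
vgg16_2 = models.vgg16(pretrained=True)
# print(vgg16_1)

# Replace the last layer of the second copy
num_fc = vgg16_2.classifier[6].in_features  # input dimension of the last layer
num_class = 120  # number of classes
vgg16_2.classifier[6] = torch.nn.Linear(num_fc, num_class)
# print(vgg16_2)

# Collect the weights of an untouched layer and of the last layer from the original model
for name, param in vgg16_1.named_parameters():
    if name == 'classifier.0.weight':
        vgg16_1_data1 = param.data
    elif name == 'classifier.6.weight':
        vgg16_1_data2 = param.data

# Collect the same two weights from the modified model
for name, param in vgg16_2.named_parameters():
    if name == 'classifier.0.weight':
        vgg16_2_data1 = param.data
    if name == 'classifier.6.weight':
        vgg16_2_data2 = param.data
        break

# classifier.0.weight was not modified, so both models hold the same values
print(vgg16_1_data1 == vgg16_2_data1)

# classifier.6.weight was replaced, so its shape and values differ
print(vgg16_1_data2.shape)
print(vgg16_2_data2.shape)

The output shows that, after the modification, the parameters of the replaced layer differ from the original, while the parameters of the unmodified layers stay the same.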
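The element-wise `==` comparison above prints a full boolean tensor. As a more compact check, `torch.equal` returns a single bool and also handles differing shapes. The sketch below uses a small hypothetical `nn.Sequential` in place of the VGG16 classifier so that it runs without pretrained weights; the layer sizes are illustrative only.

```python
import copy

import torch
import torch.nn as nn

# Toy stand-in for a pretrained classifier head (hypothetical sizes)
original = nn.Sequential(
    nn.Linear(8, 4),
    nn.ReLU(),
    nn.Linear(4, 3),
)

# Deep-copy so both models start from identical weights,
# then replace only the last layer of the copy
modified = copy.deepcopy(original)
modified[2] = nn.Linear(modified[2].in_features, 5)

# torch.equal is True only if shapes and all values match
untouched_same = torch.equal(original[0].weight, modified[0].weight)
replaced_same = torch.equal(original[2].weight, modified[2].weight)

print(untouched_same)  # layer that was left alone
print(replaced_same)   # layer that was replaced
print(original[2].weight.shape, modified[2].weight.shape)
```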

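4--Adding a specific layer

Starting from the original model, add a new network module at a chosen position:

from torchvision import models
import torch.nn as nn

# Initialize the model
vgg16 = models.vgg16(pretrained=True)

# Add a layer (append a linear layer to the end of vgg16's classifier)
# Note: in_features=120 does not match the 1000 outputs of classifier[6], so this
# only demonstrates add_module; a forward pass would fail with a shape mismatch
vgg16.classifier.add_module('7', nn.Linear(120, 60))  # '7' is the name of the new sub-module

print(vgg16)

After the addition the VGG16 model prints as follows; a linear layer has been appended to the end of classifier: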

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

(7): Linear(in_features=120, out_features=60, bias=True)

)

)
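For the appended layer to be usable in a forward pass, its `in_features` has to match the output size of the layer before it. A minimal sketch of that, assuming a small hypothetical head instead of the real VGG16 classifier (all sizes here are made up for illustration):

```python
import torch
import torch.nn as nn

# Toy stand-in for vgg16.classifier (hypothetical sizes)
head = nn.Sequential(
    nn.Linear(16, 8),
    nn.ReLU(),
    nn.Linear(8, 6),
)

# Read the output size of the current last layer instead of hard-coding it
in_dim = head[2].out_features

# Append a new layer; '3' is the name under which it is registered
head.add_module('3', nn.Linear(in_dim, 2))

# The forward pass now runs end to end
x = torch.rand(4, 16)
out = head(x)
print(out.shape)
```

Reading `out_features` from the existing layer keeps the new layer consistent even if the preceding layer is changed later.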

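5--Deleting a specific layer

A simple way is to delete the target layer with the del statement:

from torchvision import models
import torch.nn as nn

# Initialize the model
vgg16 = models.vgg16(pretrained=True)
# print(vgg16)

# Delete a specific layer (vgg16.classifier[6])
del vgg16.classifier[6]

print(vgg16)

After the deletion the VGG16 model prints as follows; the last layer of the original vgg16 model has been removed: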

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

)

)
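An alternative to `del`, shown here only as a sketch and not as the approach used in this post, is to overwrite the layer with `nn.Identity()`. The layer then passes its input through unchanged, and the indices of the remaining layers stay as they were. The toy head and its sizes are hypothetical.

```python
import torch
import torch.nn as nn

# Toy stand-in for vgg16.classifier (hypothetical sizes)
head = nn.Sequential(
    nn.Linear(16, 8),
    nn.ReLU(),
    nn.Linear(8, 6),
)

# Neutralize the last layer instead of deleting it
head[2] = nn.Identity()

x = torch.rand(4, 16)
out = head(x)

print(len(head))   # the container still has three entries
print(out.shape)   # output now has the size produced by the first Linear
```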

6--Freezing the pretrained weights

First freeze the weights of the pretrained model, then modify a specific layer.

from torchvision import models
import torch.nn as nn

# Initialize the model
vgg16 = models.vgg16(pretrained=True)
# print(vgg16)

# Freeze all parameters of the pretrained model
for param in vgg16.parameters():
    param.requires_grad = False

# Replace the last layer
num_fc = vgg16.classifier[6].in_features
num_class = 120
vgg16.classifier[6] = nn.Linear(num_fc, num_class)

# After the modification, print whether each parameter is trainable
for name, param in vgg16.named_parameters():
    print(name, param.requires_grad)

The output is:

features.0.weight False

features.0.bias False

features.2.weight False

features.2.bias False

features.5.weight False

features.5.bias False

features.7.weight False

features.7.bias False

features.10.weight False

features.10.bias False

features.12.weight False

features.12.bias False

features.14.weight False

features.14.bias False

features.17.weight False

features.17.bias False

features.19.weight False

features.19.bias False

features.21.weight False

features.21.bias False

features.24.weight False

features.24.bias False

features.26.weight False

features.26.bias False

features.28.weight False

features.28.bias False

classifier.0.weight False

classifier.0.bias False

classifier.3.weight False

classifier.3.bias False

classifier.6.weight True

classifier.6.bias True

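The output shows that the parameters of the replaced layer are trainable (a newly created nn.Linear has requires_grad=True by default), while the parameters of the unmodified layers keep their frozen state.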

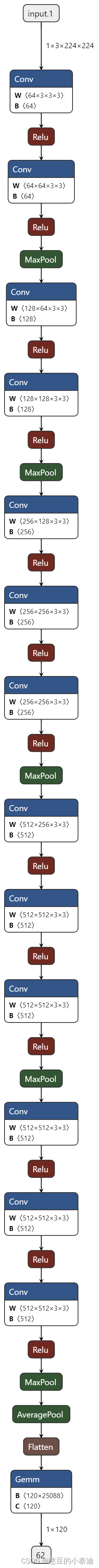

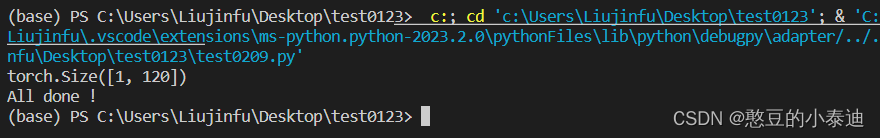
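Once most parameters are frozen, a common follow-up is to hand only the trainable ones to the optimizer. The sketch below illustrates this under stated assumptions: the model is a small hypothetical `nn.Sequential`, and the optimizer (SGD) and learning rate are arbitrary choices for the example.

```python
import torch
import torch.nn as nn

# Toy stand-in for a pretrained model (hypothetical sizes)
model = nn.Sequential(nn.Linear(16, 8), nn.ReLU(), nn.Linear(8, 6))

# Freeze everything, then replace the last layer (trainable by default)
for p in model.parameters():
    p.requires_grad = False
model[2] = nn.Linear(model[2].in_features, 3)

# Pass only the trainable parameters to the optimizer
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.01)

# Snapshots to compare against after one update step
frozen_before = model[0].weight.detach().clone()
bias_before = model[2].bias.detach().clone()

loss = model(torch.rand(4, 16)).sum()
loss.backward()
optimizer.step()

print(len(trainable))                                # weight and bias of the new layer
print(torch.equal(frozen_before, model[0].weight))   # frozen layer is unchanged
print(torch.equal(bias_before, model[2].bias))       # new layer has been updated
```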

7--Putting it all together

Use the pretrained vgg16 model as the backbone of a new network, add, delete, or modify layers of the backbone, and finally append a custom network module:

from torchvision import models
import torch.nn as nn
import torch

class My_Net(nn.Module):
    def __init__(self):
        super(My_Net, self).__init__()
        self.backbone = models.vgg16(pretrained=True)  # use vgg16 as the backbone
        self.backbone = self.process_backbone(self.backbone)  # post-process the pretrained model
        self.linear1 = nn.Linear(in_features=25088, out_features=120)

    def process_backbone(self, model):
        # Freeze the parameters of the pretrained model
        for param in model.parameters():
            param.requires_grad = False
        # Remove the layers inside classifier.
        # Do not delete the attribute itself (del model.classifier): VGG's forward
        # still calls self.classifier after flattening, so the attribute must exist.
        # An emptied Sequential simply passes its input through.
        del model.classifier[0:7]
        return model

    def forward(self, x):
        x = self.backbone(x)  # flattened features, shape [N, 25088]
        x = self.linear1(x)
        return x

if __name__ == "__main__":
    model = My_Net()  # initialize the model
    input_data = torch.rand((1, 3, 224, 224))  # create the input data
    output = model(input_data)
    print(output.shape)  # [1, 120]
    torch.onnx.export(model, input_data, "./My_Net.onnx")  # export and save the model
    print("All done !")
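The `del model.classifier[0:7]` step relies on two behaviors of `nn.Sequential`: it accepts a slice in `del`, and an empty `nn.Sequential` returns its input unchanged. The following minimal sketch checks both on a hypothetical toy head (sizes are illustrative), without loading VGG16.

```python
import torch
import torch.nn as nn

# Toy stand-in for vgg16.classifier (hypothetical sizes)
head = nn.Sequential(nn.Linear(16, 8), nn.ReLU(), nn.Linear(8, 6))

# Remove every layer but keep the (now empty) container
del head[0:3]

x = torch.rand(4, 16)
out = head(x)

print(len(head))            # no layers left
print(torch.equal(out, x))  # input passes through untouched
```

This is why the backbone in My_Net outputs the flattened 25088-dimensional feature vector that `linear1` expects.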

Visualize the exported model with netron:

# python

import netron

netron.start("./My_Net.onnx")