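import os

import torch
from sklearn.metrics import accuracy_score, classification_report
# torch.optim.AdamW replaces the deprecated transformers.AdamW
# (note: its default weight_decay is 0.01 rather than 0.0)
from torch.optim import AdamW
from torch.utils.data import DataLoader, Dataset
from tqdm import tqdm
from transformers import BertForSequenceClassification, BertTokenizer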

# Custom dataset: tokenizes one text per item
class CustomDataset(Dataset):
    def __init__(self, texts, labels, tokenizer, max_length=128):
        self.texts = texts
        self.labels = labels
        self.tokenizer = tokenizer
        self.max_length = max_length

    def __len__(self):
        return len(self.texts)

    def __getitem__(self, idx):
        text = self.texts[idx]
        label = self.labels[idx]
        # Pad or truncate every sample to max_length so items can be stacked into a batch
        encoding = self.tokenizer(
            text,
            max_length=self.max_length,
            padding="max_length",
            truncation=True,
            return_tensors="pt"
        )
        # return_tensors="pt" adds a leading batch dimension of size 1; drop it here
        return {
            "input_ids": encoding["input_ids"].squeeze(0),
            "attention_mask": encoding["attention_mask"].squeeze(0),
            "label": torch.tensor(label, dtype=torch.long)
        }

# Training function
def train_model(model, train_loader, optimizer, device, num_epochs=3):
    model.train()
    for epoch in range(num_epochs):
        total_loss = 0
        for batch in tqdm(train_loader, desc=f"Training Epoch {epoch + 1}/{num_epochs}"):
            input_ids = batch["input_ids"].to(device)
            attention_mask = batch["attention_mask"].to(device)
            labels = batch["label"].to(device)
            # Passing labels makes the model compute the classification loss itself
            outputs = model(input_ids, attention_mask=attention_mask, labels=labels)
            loss = outputs.loss
            total_loss += loss.item()
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # Average loss per batch for this epoch
        print(f"Epoch {epoch + 1} Loss: {total_loss / len(train_loader)}")

# Evaluation function
def evaluate_model(model, val_loader, device):
    model.eval()
    predictions, true_labels = [], []
    with torch.no_grad():
        for batch in val_loader:
            input_ids = batch["input_ids"].to(device)
            attention_mask = batch["attention_mask"].to(device)
            labels = batch["label"].to(device)
            outputs = model(input_ids, attention_mask=attention_mask)
            logits = outputs.logits
            # The predicted class is the index of the largest logit
            preds = torch.argmax(logits, dim=1).cpu().numpy()
            predictions.extend(preds)
            true_labels.extend(labels.cpu().numpy())
    accuracy = accuracy_score(true_labels, predictions)
    report = classification_report(true_labels, predictions)
    print(f"Validation Accuracy: {accuracy}")
    print("Classification Report:")
    print(report)

# Model saving function
def save_model(model, tokenizer, output_dir):
    os.makedirs(output_dir, exist_ok=True)
    model.save_pretrained(output_dir)
    tokenizer.save_pretrained(output_dir)
    print(f"Model saved to {output_dir}")

# Model loading function
def load_model(output_dir, device):
    tokenizer = BertTokenizer.from_pretrained(output_dir)
    model = BertForSequenceClassification.from_pretrained(output_dir)
    model.to(device)
    print(f"Model loaded from {output_dir}")
    return model, tokenizer

# Inference function
def predict(texts, model, tokenizer, device, max_length=128):
    model.eval()
    # Encode all texts as a single batch
    encodings = tokenizer(
        texts,
        max_length=max_length,
        padding="max_length",
        truncation=True,
        return_tensors="pt"
    )
    input_ids = encodings["input_ids"].to(device)
    attention_mask = encodings["attention_mask"].to(device)
    with torch.no_grad():
        outputs = model(input_ids, attention_mask=attention_mask)
        logits = outputs.logits
        # Softmax turns the logits into per-class probabilities
        probabilities = torch.softmax(logits, dim=1).cpu().numpy()
        predictions = torch.argmax(logits, dim=1).cpu().numpy()
    return predictions, probabilities

# Main function
def main():
    # Configuration
    config = {
        "train_batch_size": 16,
        "val_batch_size": 16,
        "learning_rate": 5e-5,
        "num_epochs": 5,
        "max_length": 128,
        "device_id": 7,  # GPU index to use
        "model_dir": "model",
        # Local model path; if None, the pretrained model name below is used
        "local_model_path": "roberta_tiny_model",
        "pretrained_model_name": "uer/chinese_roberta_L-12_H-128",  # pretrained model name
    }

    # Select the device
    device = torch.device(f"cuda:{config['device_id']}" if torch.cuda.is_available() else "cpu")
    print(f"Using device: {device}")

    # Load the tokenizer and model, falling back to the pretrained name when no local path is set
    model_source = config["local_model_path"] or config["pretrained_model_name"]
    tokenizer = BertTokenizer.from_pretrained(model_source)
    model = BertForSequenceClassification.from_pretrained(model_source, num_labels=2)
    model.to(device)

    # Example data
    train_texts = ["This is a great product!", "I hate this service."]
    train_labels = [1, 0]
    val_texts = ["Awesome experience.", "Terrible product."]
    val_labels = [1, 0]

    # Create the datasets and data loaders
    train_dataset = CustomDataset(train_texts, train_labels, tokenizer, config["max_length"])
    val_dataset = CustomDataset(val_texts, val_labels, tokenizer, config["max_length"])
    train_loader = DataLoader(train_dataset, batch_size=config["train_batch_size"], shuffle=True)
    val_loader = DataLoader(val_dataset, batch_size=config["val_batch_size"])

    # Define the optimizer
    optimizer = AdamW(model.parameters(), lr=config["learning_rate"])

    # Train the model
    train_model(model, train_loader, optimizer, device, num_epochs=config["num_epochs"])

    # Evaluate the model
    evaluate_model(model, val_loader, device)

    # Save the model
    save_model(model, tokenizer, config["model_dir"])

    # Reload the saved model on the CPU
    loaded_model, loaded_tokenizer = load_model(config["model_dir"], "cpu")

    # Run inference
    new_texts = ["I love this!", "It's the worst."]
    predictions, probabilities = predict(new_texts, loaded_model, loaded_tokenizer, "cpu")
    for text, pred, prob in zip(new_texts, predictions, probabilities):
        print(f"Text: {text}")
        print(f"Predicted Label: {pred} (Probability: {prob})")

if __name__ == "__main__":
    main()
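
The script prints raw label ids and whole probability rows. The sketch below shows one way to turn the output of `predict` into readable results. It is an illustration only: the `format_predictions` helper and the `LABEL_NAMES` mapping are hypothetical names that do not appear in the original, and the mapping 0 = negative, 1 = positive is an assumption based on the example data.

```python
from typing import Dict, List, Sequence

# Hypothetical label names; the original script only uses the ids 0 and 1.
LABEL_NAMES: Dict[int, str] = {0: "negative", 1: "positive"}


def format_predictions(
    texts: Sequence[str],
    predictions: Sequence[int],
    probabilities: Sequence[Sequence[float]],
    label_names: Dict[int, str] = LABEL_NAMES,
) -> List[dict]:
    """Pair each text with its predicted label name and that label's probability."""
    results = []
    for text, pred, probs in zip(texts, predictions, probabilities):
        label_id = int(pred)  # also accepts NumPy integer scalars
        results.append(
            {
                "text": text,
                # Fall back to the raw id when no name is registered for it
                "label": label_names.get(label_id, str(label_id)),
                "confidence": float(probs[label_id]),
            }
        )
    return results


if __name__ == "__main__":
    # Made-up values standing in for the arrays returned by predict().
    demo = format_predictions(
        ["I love this!", "It's the worst."],
        [1, 0],
        [[0.1, 0.9], [0.8, 0.2]],
    )
    for row in demo:
        print(f"{row['text']} -> {row['label']} ({row['confidence']:.2f})")
```

The helper accepts plain lists as well as the NumPy arrays that `predict` returns, so it can be called directly on `predictions` and `probabilities` in `main`.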

[Text Classification] BERT Binary Classification

news2026/2/14 18:27:26

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如若转载,请注明出处:http://www.coloradmin.cn/o/2272758.html

如若内容造成侵权/违法违规/事实不符,请联系多彩编程网进行投诉反馈,一经查实,立即删除!相关文章

尚硅谷· vue3+ts 知识点学习整理 |14h的课程(持续更ing)

vue3

主要内容

核心:ref、reactive、computed、watch、生命周期

常用:hooks、自定义ref、路由、pinia、miit

面试:组件通信、响应式相关api

----> 笔记:ts快速梳理;vue3快速上手.pdf 笔记及大纲 如下ÿ…

阻抗(Impedance)、容抗(Capacitive Reactance)、感抗(Inductive Reactance)

阻抗(Impedance)、容抗(Capacitive Reactance)、感抗(Inductive Reactance) 都是交流电路中描述电流和电压之间关系的参数,但它们的含义、单位和作用不同。下面是它们的定义和区别: …

在 SQL 中,区分 聚合列 和 非聚合列(nonaggregated column)

文章目录 1. 什么是聚合列?2. 什么是非聚合列?3. 在 GROUP BY 查询中的非聚合列问题示例解决方案 4. 为什么 only_full_group_by 要求非聚合列出现在 GROUP BY 中?5. 如何判断一个列是聚合列还是非聚合列?6. 总结 在 SQL 中&#…

B树与B+树:数据库索引的秘密武器

想象一下,你正在构建一个超级大的图书馆,里面摆满了各种各样的书籍。B树和B树就像是两种不同的图书分类和摆放方式,它们都能帮助你快速找到想要的书籍,但各有特点。 B树就像是一个传统的图书馆摆放方式:

1. 书籍摆放&…

回归预测 | MATLAB实现CNN-SVM多输入单输出回归预测

回归预测 | MATLAB实现CNN-SVM多输入单输出回归预测 目录 回归预测 | MATLAB实现CNN-SVM多输入单输出回归预测预测效果基本介绍模型架构程序设计参考资料 预测效果 基本介绍

CNN-SVM多输入单输出回归预测是一种结合卷积神经网络(CNN)和支持向量机&#…

Linux-Ubuntu之裸机驱动最后一弹PWM控制显示亮度

Linux-Ubuntu之裸机驱动最后一弹PWM控制显示亮度 一, PWM实现原理二,软件实现三,正点原子裸机开发总结 一, PWM实现原理

PWM和学习51时候基本上一致,控制频率(周期)和占空比,51实验…

分数阶傅里叶变换代码 MATLAB实现

function Faf myfrft(f, a)

%分数阶傅里叶变换函数

%输入参数:

%f:原始信号

%a:阶数

%输出结果:

%原始信号的a阶傅里叶变换N length(f);%总采样点数

shft rem((0:N-1)fix(N/2),N)1;%此项等同于fftshift(1:N),起到翻…

Ubuntu 20.04安装gcc

一、安装GCC

1.更新包列表

user596785154:~$ sudo apt update2.安装gcc

user596785154:~$ sudo apt install gcc3.验证安装

user596785154:~$ gcc --version二 编译C文件

1.新建workspace文件夹

user596785154:~$ mkdir workspace2.进入workspace文件夹

user596785154:~…

小兔鲜儿:头部区域的logo,导航,搜索,购物车

头部:logo ,导航,搜索,购物车 头部总体布局: 设置好上下外边距以及总体高度, flex布局让总体一行排列 logo: logo考虑搜索引擎优化,所以要使用 h1中包裹 a 标签,a 里边写内容(到时候…

Linux C编程——文件IO基础

文件IO基础 一、简单的文件 IO 示例二、文件描述符三、open 打开文件1. 函数原型2. 文件权限3. 宏定义文件权限4. 函数使用实例 四、write 写文件五、read 读文件六、close 关闭文件七、Iseek 绍 Linux 应用编程中最基础的知识,即文件 I/O(Input、Outout…

论文解读 | NeurIPS'24 IRCAN:通过识别和重新加权上下文感知神经元来减轻大语言模型生成中的知识冲突...

点击蓝字 关注我们 AI TIME欢迎每一位AI爱好者的加入! 点击 阅读原文 观看作者讲解回放! 作者简介 史丹,天津大学博士生 内容简介 大语言模型(LLM)经过海量数据训练后编码了丰富的世界知识。最近的研究表明,…

【51单片机零基础-chapter5:模块化编程】

模块化编程 将以往main中泛型的代码,放在与main平级的c文件中,在h中引用. 简化main函数 将原来main中的delay抽出 然后将delay放入单独c文件,并单独开一个delay头文件,里面放置函数的声明,相当于收纳delay的c文件里面写的函数的接口. 注意,单个c文件所有用到的变量需要在该文…

扩散模型论文概述(三):Stability AI系列工作【学习笔记】

视频链接:扩散模型论文概述(三):Stability AI系列工作_哔哩哔哩_bilibili 本期视频讲的是Stability AI在图像生成的工作。 同样,第一张图片是神作,总结的太好了! 介绍Stable Diffusion之前&…

数据库软考历年上午真题与答案解析(2018-2024)

本题考查计算机总线相关知识。

总线(Bus)是计算机各种功能部件之间传送信息的公共通信干线,它是由导线组成的传输线束。 根据总线连接设备范围的不同, 分为:1.片内总线:芯片内部的总线; 2.系统…

【three.js】模型-几何体Geometry,材质Material

模型 在现实开发中,有时除了需要用代码创建模型之外,多数场景需要加载设计师提供的使用设计软件导出的模型。此时就需要使用模型加载器去加载模型,不同格式的模型需要引入对应的模型加载器,虽然加载器不同,但是使用方式…

彻底学会Gradle插件版本和Gradle版本及对应关系

看完这篇,保你彻底学会Gradle插件版本和Gradle版本及对应关系,超详细超全的对应关系表

需要知道Gradle插件版本和Gradle版本的对应关系,其实就是需要知道Gradle插件版本对应所需的gradle最低版本,详细对应关系如下表格࿰…

预测facebook签到位置

1.11 案例2:预测facebook签到位置 学习目标 目标 通过Facebook位置预测案例熟练掌握第一章学习内容 1 项目描述 本次比赛的目的是预测一个人将要签到的地方。 为了本次比赛,Facebook创建了一个虚拟世界,其中包括10公里*10公里共100平方公里的…

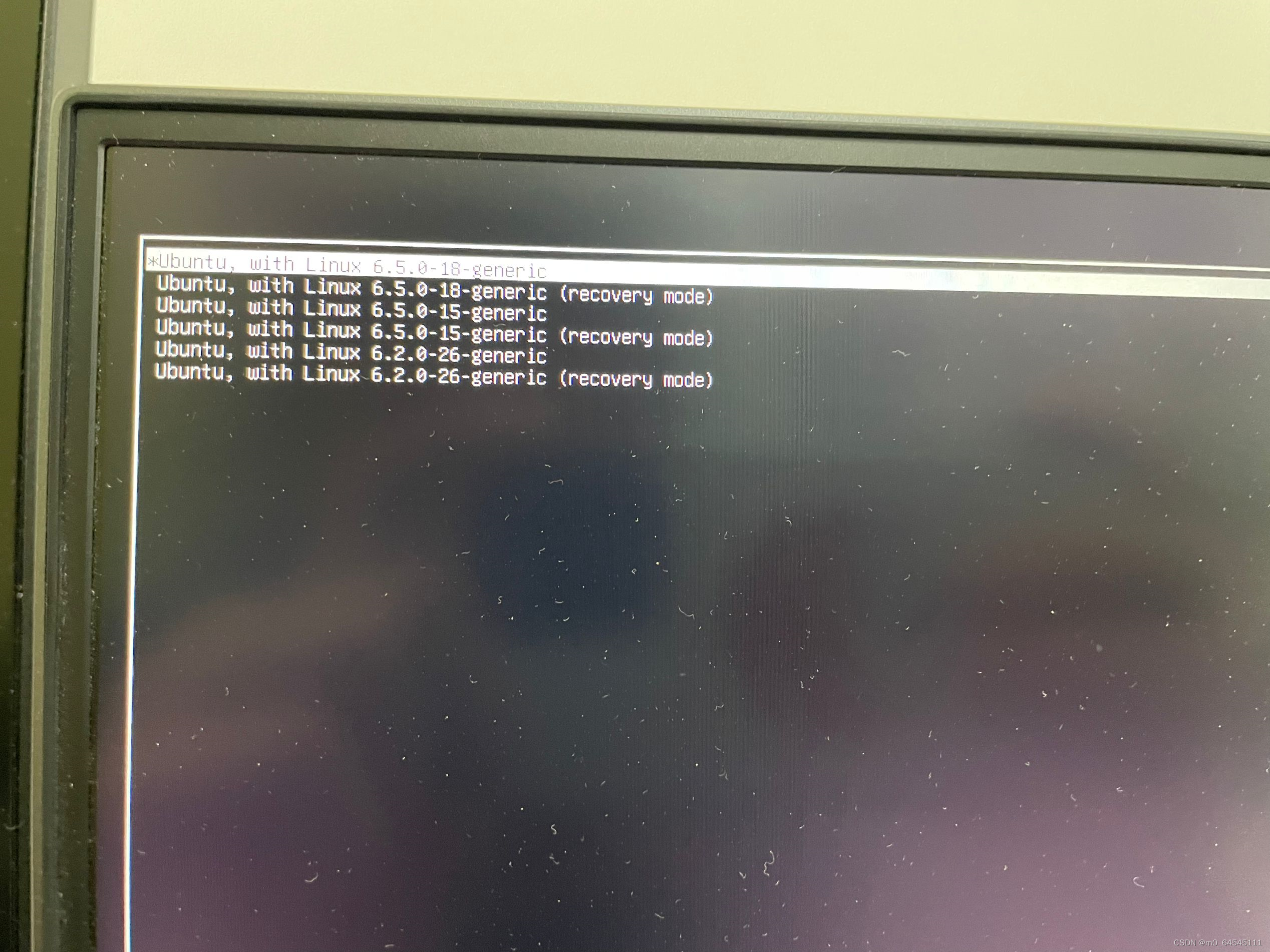

【万字详细教程】Linux to go——装在移动硬盘里的Linux系统(Ubuntu22.04)制作流程;一口气解决系统安装引导文件迁移显卡驱动安装等问题

Linux to go制作流程

0.写在前面 关于教程Why Linux to go?实际效果 1.准备工具2.制作步骤 下载系统镜像硬盘分区准备启动U盘安装系统重启完成驱动安装将系统启动引导程序迁移到移动硬盘上 3.可能出现的问题 3.1.U盘引导系统安装时出现崩溃3.2.不影响硬盘里本身已有…

在 macOS 上,你可以使用系统自带的 终端(Terminal) 工具,通过 SSH 协议远程连接服务器

文章目录 1. 打开终端2. 使用 SSH 命令连接服务器3. 输入密码4. 连接成功5. 使用密钥登录(可选)6. 退出 SSH 连接7. 其他常用 SSH 选项8. 常见问题排查问题 1:连接超时问题 2:权限被拒绝(Permission denied)…