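Compared with earlier versions, YOLOv5 is more engineering- and project-oriented and better suited to real-world use.

I. YOLOv5 project setup

(1) Project overview

First, search GitHub for yolov5 and download the repository. Two questions guide this walkthrough: how is the data processed during training, and how is the network architecture designed (for example, how each layer is built)? YOLOv5 requires Python 3.8 or later and torch 1.6 or later, because torch 1.6 introduced native mixed-precision training, which YOLOv5 uses to speed up training. All required packages are listed in requirements.txt and can be installed with pip install -r requirements.txt. The project defaults to the COCO dataset, but here we train on a dataset of our own. It also covers both single-machine multi-GPU and multi-machine multi-GPU training, which is useful as a reference.

(2) Training on your own dataset

1) Datasets can be downloaded from https://public.roboflow.com. Register a free account first, and when exporting choose the format TXT ------> YOLO v5 PyTorch. Then place the downloaded dataset in a directory at the same level as the YOLOv5 project (for example a MaskDataSet directory you create yourself; keeping it outside the project makes it easier to manage, but adjust this to your situation). The MaskDataSet directory will contain the training, test and validation sets plus a configuration file. This project detects whether a person is wearing a mask (two classes), so the dataset downloaded is the mask dataset.

2) Open the configuration file data.yaml in the MaskDataSet directory, as shown in the figure below:

3) Open a txt file under labels (these are the annotations). Each one must correspond to an image in the sibling images directory: the txt file name without its extension must match the image file name, and names should be short and contain no Chinese characters. See the figure below:

Annotations can be created with labelme (it produces JSON, which can then be converted to txt); see the YOLOv3 notes.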
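
As a rough illustration of the JSON-to-txt conversion mentioned above, the sketch below turns one rectangle annotation into a YOLO label line (class id followed by normalized center x, center y, width, height). It assumes the rectangle is given as two corner points in pixels; the function name and the class id mapping are hypothetical and not part of labelme or YOLOv5.

```python
def labelme_rect_to_yolo(points, img_w, img_h, class_id):
    """Convert one rectangle (two corner points, in pixels) to a YOLO txt line.

    Assumption: `points` is [[xa, ya], [xb, yb]] and `class_id` is an integer
    index chosen by the user to match the names list in data.yaml.
    """
    (xa, ya), (xb, yb) = points
    x1, x2 = min(xa, xb), max(xa, xb)
    y1, y2 = min(ya, yb), max(ya, yb)
    xc = (x1 + x2) / 2 / img_w   # normalized box center x
    yc = (y1 + y2) / 2 / img_h   # normalized box center y
    w = (x2 - x1) / img_w        # normalized box width
    h = (y2 - y1) / img_h        # normalized box height
    return "%d %.6f %.6f %.6f %.6f" % (class_id, xc, yc, w, h)


line = labelme_rect_to_yolo([[100, 50], [300, 250]], 400, 400, 1)
print(line)
```

Each image then gets one txt file containing one such line per annotated box.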

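(3) Training parameter configuration

YOLOv5 really is engineering- and project-oriented: for example, training logs are tracked in detail, and many different data source formats are supported.

1) Open https://github.com/ultralytics/yolov5 and download a pretrained model for use in training and validation. This example downloads yolov5s.pt and places it in the source directory, as shown in the figure below:

2) train.py training parameters. This example uses: --data ..//MaskDataSet//data.yaml --cfg models//yolov5s.yaml --weights '' --epochs 300 --batch-size 16. These set the data source location, the model configuration, the number of epochs and the number of samples per batch; --weights '' means no pretrained weights are used. yolov5s.yaml holds the network structure parameters, including the feature-extraction layers and the output layers; see the example configurations in the YOLOv5 GitHub repository:

3) detect.py inference parameters. This example uses, for instance, --source ./inference/images/ --weights yolov5s.pt --conf 0.4:

II. Reading the YOLOv5 source code

(1) Data source configuration and loading (with data augmentation), returning data and labels to the model

1) Debugging walkthrough of the data source

<1> train.py. The first step is to obtain the dataloader and dataset. Skip the earlier code for now and set a breakpoint at line 168 of train.py. Its initialization arguments include the training data path, image size, label classes, model, device, optimizer, batch size and so on, as shown in the figure below:

<2> Stepping in from the breakpoint enters create_dataloader in datasets.py. It calls the LoadImagesAndLabels class to build the dataset, then passes the dataset to the InfiniteDataLoader class to build the dataloader. First, the main functions of datasets.py: reading different data sources and performing data augmentation. Handling for each kind of data source is already implemented, which is part of what makes the project engineering-oriented:

1> Bag of Freebies (BoF): techniques that add only training cost yet noticeably improve accuracy without affecting inference speed. Data augmentation: adjusting brightness, contrast and hue, random scaling, cropping, flipping, rotation and so on. Network regularization: Dropout, DropBlock. Also class imbalance handling and loss function design. The code of the LoadImagesAndLabels class (mosaic augmentation and more) is as follows: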

class LoadImagesAndLabels(Dataset): # for training/testing

def __init__(self, path, img_size=640, batch_size=16, augment=False, hyp=None, rect=False, image_weights=False,

cache_images=False, single_cls=False, stride=32, pad=0.0, rank=-1):

try:

f = [] # image files

for p in path if isinstance(path, list) else [path]: # Windows and Linux paths differ slightly, hence the extra handling here

p = str(Path(p)) # os-agnostic

parent = str(Path(p).parent) + os.sep

if os.path.isfile(p): # file

with open(p, 'r') as t:

t = t.read().splitlines()

f += [x.replace('./', parent) if x.startswith('./') else x for x in t] # local to global path

elif os.path.isdir(p): # folder

f += glob.iglob(p + os.sep + '*.*')

else:

raise Exception('%s does not exist' % p)

self.img_files = sorted(

[x.replace('/', os.sep) for x in f if os.path.splitext(x)[-1].lower() in img_formats])

except Exception as e:

raise Exception('Error loading data from %s: %s\nSee %s' % (path, e, help_url))

n = len(self.img_files)

assert n > 0, 'No images found in %s. See %s' % (path, help_url)

bi = np.floor(np.arange(n) / batch_size).astype(np.int64) # batch index

nb = bi[-1] + 1 # number of batches per epoch

self.n = n # number of images

self.batch = bi # batch index of image

self.img_size = img_size

self.augment = augment

self.hyp = hyp

self.image_weights = image_weights

self.rect = False if image_weights else rect

self.mosaic = self.augment and not self.rect # load 4 images at a time into a mosaic (only during training)

self.mosaic_border = [-img_size // 2, -img_size // 2] # bounds for the mosaic center

self.stride = stride # total downsampling factor

# Define labels

sa, sb = os.sep + 'images' + os.sep, os.sep + 'labels' + os.sep # /images/, /labels/ substrings

self.label_files = [x.replace(sa, sb, 1).replace(os.path.splitext(x)[-1], '.txt') for x in self.img_files]

# Check cache: with a cache, later runs need not re-read every label file

cache_path = str(Path(self.label_files[0]).parent) + '.cache' # cached labels

if os.path.isfile(cache_path):

cache = torch.load(cache_path) # load

if cache['hash'] != get_hash(self.label_files + self.img_files): # dataset changed

cache = self.cache_labels(cache_path) # re-cache

else:

cache = self.cache_labels(cache_path) # cache

# Get labels

labels, shapes = zip(*[cache[x] for x in self.img_files])

self.shapes = np.array(shapes, dtype=np.float64)

self.labels = list(labels)

# Rectangular Training https://github.com/ultralytics/yolov3/issues/232

if self.rect: # rectangular training

# Sort by aspect ratio

s = self.shapes # wh

ar = s[:, 1] / s[:, 0] # aspect ratio

irect = ar.argsort()

self.img_files = [self.img_files[i] for i in irect]

self.label_files = [self.label_files[i] for i in irect]

self.labels = [self.labels[i] for i in irect]

self.shapes = s[irect] # wh

ar = ar[irect]

# Set training image shapes

shapes = [[1, 1]] * nb

for i in range(nb):

ari = ar[bi == i]

mini, maxi = ari.min(), ari.max()

if maxi < 1:

shapes[i] = [maxi, 1]

elif mini > 1:

shapes[i] = [1, 1 / mini]

self.batch_shapes = np.ceil(np.array(shapes) * img_size / stride + pad).astype(np.int64) * stride

# Cache labels

create_datasubset, extract_bounding_boxes, labels_loaded = False, False, False

nm, nf, ne, ns, nd = 0, 0, 0, 0, 0 # number missing, found, empty, datasubset, duplicate

pbar = enumerate(self.label_files)

if rank in [-1, 0]:

pbar = tqdm(pbar)

for i, file in pbar:

l = self.labels[i] # label

if l is not None and l.shape[0]:

assert l.shape[1] == 5, '> 5 label columns: %s' % file # all 5 columns are present

assert (l >= 0).all(), 'negative labels: %s' % file # label values are non-negative

assert (l[:, 1:] <= 1).all(), 'non-normalized or out of bounds coordinate labels: %s' % file # coordinates are normalized

if np.unique(l, axis=0).shape[0] < l.shape[0]: # duplicate rows

nd += 1 # print('WARNING: duplicate rows in %s' % self.label_files[i]) # duplicate rows

if single_cls:

l[:, 0] = 0 # force dataset into single-class mode (class id set to 0)

self.labels[i] = l

nf += 1 # file found

# Create subdataset (a smaller dataset)

if create_datasubset and ns < 1E4:

if ns == 0:

create_folder(path='./datasubset')

os.makedirs('./datasubset/images')

exclude_classes = 43

if exclude_classes not in l[:, 0]:

ns += 1

# shutil.copy(src=self.img_files[i], dst='./datasubset/images/') # copy image

with open('./datasubset/images.txt', 'a') as f:

f.write(self.img_files[i] + '\n')

# Extract object detection boxes for a second stage classifier (crop the box contents, if your task needs it)

if extract_bounding_boxes:

p = Path(self.img_files[i])

img = cv2.imread(str(p))

h, w = img.shape[:2]

for j, x in enumerate(l):

f = '%s%sclassifier%s%g_%g_%s' % (p.parent.parent, os.sep, os.sep, x[0], j, p.name)

if not os.path.exists(Path(f).parent):

os.makedirs(Path(f).parent) # make new output folder

b = x[1:] * [w, h, w, h] # box

b[2:] = b[2:].max() # rectangle to square

b[2:] = b[2:] * 1.3 + 30 # pad

b = xywh2xyxy(b.reshape(-1, 4)).ravel().astype(np.int64)

b[[0, 2]] = np.clip(b[[0, 2]], 0, w) # clip boxes outside of image

b[[1, 3]] = np.clip(b[[1, 3]], 0, h)

assert cv2.imwrite(f, img[b[1]:b[3], b[0]:b[2]]), 'Failure extracting classifier boxes'

else:

ne += 1 # print('empty labels for image %s' % self.img_files[i]) # file empty

# os.system("rm '%s' '%s'" % (self.img_files[i], self.label_files[i])) # remove

if rank in [-1, 0]:

pbar.desc = 'Scanning labels %s (%g found, %g missing, %g empty, %g duplicate, for %g images)' % (

cache_path, nf, nm, ne, nd, n)

if nf == 0:

s = 'WARNING: No labels found in %s. See %s' % (os.path.dirname(file) + os.sep, help_url)

print(s)

assert not augment, '%s. Can not train without labels.' % s

# Cache images into memory for faster training (WARNING: large datasets may exceed system RAM)

self.imgs = [None] * n

if cache_images:

gb = 0 # Gigabytes of cached images

pbar = tqdm(range(len(self.img_files)), desc='Caching images')

self.img_hw0, self.img_hw = [None] * n, [None] * n

for i in pbar: # max 10k images

self.imgs[i], self.img_hw0[i], self.img_hw[i] = load_image(self, i) # img, hw_original, hw_resized

gb += self.imgs[i].nbytes

pbar.desc = 'Caching images (%.1fGB)' % (gb / 1E9)

def cache_labels(self, path='labels.cache'):

# Cache dataset labels, check images and read shapes

x = {} # dict

pbar = tqdm(zip(self.img_files, self.label_files), desc='Scanning images', total=len(self.img_files))

for (img, label) in pbar:

try:

l = []

image = Image.open(img)

image.verify() # PIL verify

# _ = io.imread(img) # skimage verify (from skimage import io)

shape = exif_size(image) # image size

assert (shape[0] > 9) & (shape[1] > 9), 'image size <10 pixels'

if os.path.isfile(label):

with open(label, 'r') as f:

l = np.array([x.split() for x in f.read().splitlines()], dtype=np.float32) # labels

if len(l) == 0:

l = np.zeros((0, 5), dtype=np.float32)

x[img] = [l, shape]

except Exception as e:

x[img] = [None, None]

print('WARNING: %s: %s' % (img, e))

x['hash'] = get_hash(self.label_files + self.img_files)

torch.save(x, path) # save for next time

return x

def __len__(self):

return len(self.img_files)

# def __iter__(self):

# self.count = -1

# print('ran dataset iter')

# #self.shuffled_vector = np.random.permutation(self.nF) if self.augment else np.arange(self.nF)

# return self

def __getitem__(self, index):

if self.image_weights:

index = self.indices[index]

hyp = self.hyp

mosaic = self.mosaic and random.random() < hyp['mosaic']

if mosaic:

# Load mosaic

img, labels = load_mosaic(self, index)

shapes = None

# MixUp https://arxiv.org/pdf/1710.09412.pdf

if random.random() < hyp['mixup']:

img2, labels2 = load_mosaic(self, random.randint(0, len(self.labels) - 1))

r = np.random.beta(8.0, 8.0) # mixup ratio, alpha=beta=8.0

img = (img * r + img2 * (1 - r)).astype(np.uint8)

labels = np.concatenate((labels, labels2), 0)

else:

# Load image

img, (h0, w0), (h, w) = load_image(self, index)

# Letterbox

shape = self.batch_shapes[self.batch[index]] if self.rect else self.img_size # final letterboxed shape

img, ratio, pad = letterbox(img, shape, auto=False, scaleup=self.augment)

shapes = (h0, w0), ((h / h0, w / w0), pad) # for COCO mAP rescaling

# Load labels

labels = []

x = self.labels[index]

if x.size > 0:

# Normalized xywh to pixel xyxy format

labels = x.copy()

labels[:, 1] = ratio[0] * w * (x[:, 1] - x[:, 3] / 2) + pad[0] # pad width

labels[:, 2] = ratio[1] * h * (x[:, 2] - x[:, 4] / 2) + pad[1] # pad height

labels[:, 3] = ratio[0] * w * (x[:, 1] + x[:, 3] / 2) + pad[0]

labels[:, 4] = ratio[1] * h * (x[:, 2] + x[:, 4] / 2) + pad[1]

if self.augment:

# Augment imagespace

if not mosaic: # for mosaic samples this was already done at the end of load_mosaic

img, labels = random_perspective(img, labels,

degrees=hyp['degrees'],

translate=hyp['translate'],

scale=hyp['scale'],

shear=hyp['shear'],

perspective=hyp['perspective'])

# Augment colorspace (h: hue, s: saturation, v: value/brightness)

augment_hsv(img, hgain=hyp['hsv_h'], sgain=hyp['hsv_s'], vgain=hyp['hsv_v'])

# Apply cutouts

# if random.random() < 0.9:

# labels = cutout(img, labels)

nL = len(labels) # number of labels

if nL: # 1. convert the label format 2. normalize the label values

labels[:, 1:5] = xyxy2xywh(labels[:, 1:5]) # convert xyxy to xywh

labels[:, [2, 4]] /= img.shape[0] # normalized height 0-1

labels[:, [1, 3]] /= img.shape[1] # normalized width 0-1

if self.augment: # random flips

# flip up-down

if random.random() < hyp['flipud']:

img = np.flipud(img)

if nL:

labels[:, 2] = 1 - labels[:, 2]

# flip left-right

if random.random() < hyp['fliplr']:

img = np.fliplr(img)

if nL:

labels[:, 1] = 1 - labels[:, 1]

labels_out = torch.zeros((nL, 6))

if nL:

labels_out[:, 1:] = torch.from_numpy(labels)

# Convert

img = img[:, :, ::-1].transpose(2, 0, 1) # BGR to RGB, to 3x416x416 (channel-first, as PyTorch expects)

img = np.ascontiguousarray(img)

return torch.from_numpy(img), labels_out, self.img_files[index], shapes

@staticmethod

def collate_fn(batch):

img, label, path, shapes = zip(*batch) # transposed

for i, l in enumerate(label):

l[:, 0] = i # add target image index for build_targets()

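return torch.stack(img, 0), torch.cat(label, 0), path, shapes

2) Image data source configuration.

Analysis of the LoadImagesAndLabels class in datasets.py:

Training mainly relies on this class to process data. Its __init__ method reads the image data and label data, and its __getitem__ method performs the data augmentation.

<1> Reading and processing the data source

1> Image data source configuration. The data source (all images in this example) is read from the given path, as shown in the figure below:

This completes reading the data source (images); the next step is reading the label data.

2> Loading label data

Labels are looked up in a cache file whose name ends in .cache. If the cache exists, the labels are read from it; otherwise the coordinates are read from the txt files located via the image names and label paths, and then saved to the cache. The cache is a dict keyed by image path; each value holds the labels as an array together with the image shape. The code that reads labels into the cache (the cache_labels method of LoadImagesAndLabels) is as follows: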

def cache_labels(self, path='labels.cache'):

# Cache dataset labels, check images and read shapes

x = {} # dict

pbar = tqdm(zip(self.img_files, self.label_files), desc='Scanning images', total=len(self.img_files))

for (img, label) in pbar:

try:

l = []

image = Image.open(img)

image.verify() # PIL verify

# _ = io.imread(img) # skimage verify (from skimage import io)

shape = exif_size(image) # image size

assert (shape[0] > 9) & (shape[1] > 9), 'image size <10 pixels'

if os.path.isfile(label):

with open(label, 'r') as f:

l = np.array([x.split() for x in f.read().splitlines()], dtype=np.float32) # labels

if len(l) == 0:

l = np.zeros((0, 5), dtype=np.float32)

x[img] = [l, shape]

except Exception as e:

x[img] = [None, None]

print('WARNING: %s: %s' % (img, e))

x['hash'] = get_hash(self.label_files + self.img_files)

torch.save(x, path) # save for next time

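return x

In this example the cache file is saved in this directory, with a file name ending in .cache.

The __init__ code shown earlier also has two flags for follow-up tasks after detection: create_datasubset=True writes out a smaller subset of the dataset, and extract_bounding_boxes=True crops the contents of each labeled box, for example for a second-stage classifier or a similarity comparison between objects.

At this point the classes and coordinates in the labels have all been read.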
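
The two small steps behind label loading can be sketched as follows: deriving the label path from the image path (swap the images directory for labels and the extension for .txt, as in __init__), and parsing the txt content into rows of five floats (as in cache_labels). The function names here are hypothetical helpers written for illustration, not functions in datasets.py.

```python
import os


def img_to_label_path(img_path):
    """Map .../images/name.jpg to .../labels/name.txt (same rule as __init__)."""
    sa = os.sep + 'images' + os.sep
    sb = os.sep + 'labels' + os.sep
    ext = os.path.splitext(img_path)[-1]
    return img_path.replace(sa, sb, 1).replace(ext, '.txt')


def parse_label_text(text):
    """Parse label file content into rows of [class, x, y, w, h] floats."""
    return [[float(v) for v in line.split()] for line in text.splitlines() if line.strip()]


img = os.path.join('MaskDataSet', 'train', 'images', 'a.jpg')
print(img_to_label_path(img))
print(parse_label_text("0 0.5 0.5 0.2 0.3\n1 0.25 0.75 0.1 0.1\n"))
```

An empty label file yields an empty list, which corresponds to the np.zeros((0, 5)) case in cache_labels.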

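3> Mosaic data augmentation

The purpose of data augmentation is to reduce the risk of overfitting.

With the image data and label data read in by the previous two steps, augmentation can begin. We now enter the __getitem__ method of LoadImagesAndLabels, shown below: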

def __getitem__(self, index):

if self.image_weights:

index = self.indices[index]

hyp = self.hyp

mosaic = self.mosaic and random.random() < hyp['mosaic']

if mosaic:

# Load mosaic

img, labels = load_mosaic(self, index)

shapes = None

# MixUp https://arxiv.org/pdf/1710.09412.pdf

if random.random() < hyp['mixup']:

img2, labels2 = load_mosaic(self, random.randint(0, len(self.labels) - 1))

r = np.random.beta(8.0, 8.0) # mixup ratio, alpha=beta=8.0

img = (img * r + img2 * (1 - r)).astype(np.uint8)

labels = np.concatenate((labels, labels2), 0)

else:

# Load image

img, (h0, w0), (h, w) = load_image(self, index)

# Letterbox

shape = self.batch_shapes[self.batch[index]] if self.rect else self.img_size # final letterboxed shape

img, ratio, pad = letterbox(img, shape, auto=False, scaleup=self.augment)

shapes = (h0, w0), ((h / h0, w / w0), pad) # for COCO mAP rescaling

# Load labels

labels = []

x = self.labels[index]

if x.size > 0:

# Normalized xywh to pixel xyxy format

labels = x.copy()

labels[:, 1] = ratio[0] * w * (x[:, 1] - x[:, 3] / 2) + pad[0] # pad width

labels[:, 2] = ratio[1] * h * (x[:, 2] - x[:, 4] / 2) + pad[1] # pad height

labels[:, 3] = ratio[0] * w * (x[:, 1] + x[:, 3] / 2) + pad[0]

labels[:, 4] = ratio[1] * h * (x[:, 2] + x[:, 4] / 2) + pad[1]

if self.augment:

# Augment imagespace

if not mosaic: # for mosaic samples this was already done at the end of load_mosaic

img, labels = random_perspective(img, labels,

degrees=hyp['degrees'],

translate=hyp['translate'],

scale=hyp['scale'],

shear=hyp['shear'],

perspective=hyp['perspective'])

# Augment colorspace (h: hue, s: saturation, v: value/brightness)

augment_hsv(img, hgain=hyp['hsv_h'], sgain=hyp['hsv_s'], vgain=hyp['hsv_v'])

# Apply cutouts

# if random.random() < 0.9:

# labels = cutout(img, labels)

nL = len(labels) # number of labels

if nL: # 1. convert the label format 2. normalize the label values

labels[:, 1:5] = xyxy2xywh(labels[:, 1:5]) # convert xyxy to xywh

labels[:, [2, 4]] /= img.shape[0] # normalized height 0-1

labels[:, [1, 3]] /= img.shape[1] # normalized width 0-1

if self.augment: # random flips

# flip up-down

if random.random() < hyp['flipud']:

img = np.flipud(img)

if nL:

labels[:, 2] = 1 - labels[:, 2]

# flip left-right

if random.random() < hyp['fliplr']:

img = np.fliplr(img)

if nL:

labels[:, 1] = 1 - labels[:, 1]

labels_out = torch.zeros((nL, 6))

if nL:

labels_out[:, 1:] = torch.from_numpy(labels)

# Convert

img = img[:, :, ::-1].transpose(2, 0, 1) # BGR to RGB, to 3x416x416 (channel-first, as PyTorch expects)

img = np.ascontiguousarray(img)

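return torch.from_numpy(img), labels_out, self.img_files[index], shapes

If mosaic is true, execution enters load_mosaic. This method stitches four images into one large image; not only the image changes, the labels must also be recomputed, and the large image is what gets fed to the network. The code is as follows: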

def load_mosaic(self, index):

# loads images in a mosaic

labels4 = []

s = self.img_size

yc, xc = [int(random.uniform(-x, 2 * s + x)) for x in self.mosaic_border] # mosaic center x, y

indices = [index] + [random.randint(0, len(self.labels) - 1) for _ in range(3)] # 3 additional image indices

for i, index in enumerate(indices):

# Load image

img, _, (h, w) = load_image(self, index)

# place img in img4

if i == 0: # top left: 1. create the large image; 2. compute where this image goes in the large image; 3. compute which part of the small image to copy

img4 = np.full((s * 2, s * 2, img.shape[2]), 114, dtype=np.uint8) # base image with 4 tiles

x1a, y1a, x2a, y2a = max(xc - w, 0), max(yc - h, 0), xc, yc # xmin, ymin, xmax, ymax (large image)

x1b, y1b, x2b, y2b = w - (x2a - x1a), h - (y2a - y1a), w, h # xmin, ymin, xmax, ymax (small image)

elif i == 1: # top right

x1a, y1a, x2a, y2a = xc, max(yc - h, 0), min(xc + w, s * 2), yc

x1b, y1b, x2b, y2b = 0, h - (y2a - y1a), min(w, x2a - x1a), h

elif i == 2: # bottom left

x1a, y1a, x2a, y2a = max(xc - w, 0), yc, xc, min(s * 2, yc + h)

x1b, y1b, x2b, y2b = w - (x2a - x1a), 0, w, min(y2a - y1a, h)

elif i == 3: # bottom right

x1a, y1a, x2a, y2a = xc, yc, min(xc + w, s * 2), min(s * 2, yc + h)

x1b, y1b, x2b, y2b = 0, 0, min(w, x2a - x1a), min(y2a - y1a, h)

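# 1. copy the selected part of the small image into the large image 2. the small image may not fill its tile, so compute the offset needed to update the box labels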

img4[y1a:y2a, x1a:x2a] = img[y1b:y2b, x1b:x2b] # img4[ymin:ymax, xmin:xmax]

padw = x1a - x1b

padh = y1a - y1b

# Labels: recompute the label coordinates now that the image sits inside the large image

x = self.labels[index]

labels = x.copy()

if x.size > 0: # Normalized xywh to pixel xyxy format

labels[:, 1] = w * (x[:, 1] - x[:, 3] / 2) + padw

labels[:, 2] = h * (x[:, 2] - x[:, 4] / 2) + padh

labels[:, 3] = w * (x[:, 1] + x[:, 3] / 2) + padw

labels[:, 4] = h * (x[:, 2] + x[:, 4] / 2) + padh

labels4.append(labels)

# Concat/clip labels: recomputed coordinates may fall out of bounds, so clip them to the large image

if len(labels4):

labels4 = np.concatenate(labels4, 0)

np.clip(labels4[:, 1:], 0, 2 * s, out=labels4[:, 1:]) # use with random_perspective

# img4, labels4 = replicate(img4, labels4) # replicate

# Augment: apply random rotation, translation, scaling and shear to the stitched image

img4, labels4 = random_perspective(img4, labels4,

degrees=self.hyp['degrees'],

translate=self.hyp['translate'],

scale=self.hyp['scale'],

shear=self.hyp['shear'],

perspective=self.hyp['perspective'],

border=self.mosaic_border) # border to remove

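return img4, labels4

Analysis of load_mosaic:

From the figure above, with img_size=640 and x=-320, the random center in this run came out as (808, 862), toward the bottom right; 2*s is the side length of two images, 1280. The index passed in is combined with three randomly chosen indices to form four indices. The loop then iterates over these four indices, calling load_image(self, index) to load each image and resize it, so that the four can later be stitched into one large image (the labels are stitched as well).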
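
To make the range of the random center concrete, here is a minimal sketch of the sampling expression from load_mosaic. With img_size=640 the border value is -320, so each coordinate is drawn from 320 to 960, that is, the middle half of the 1280-pixel large image. The function name is a hypothetical wrapper added for illustration.

```python
import random


def sample_mosaic_center(img_size, rng=random):
    """Sample the mosaic center the same way load_mosaic does."""
    border = [-img_size // 2, -img_size // 2]  # mirrors self.mosaic_border
    # uniform(-x, 2 * s + x) with x = -s/2 gives the interval [s/2, 3s/2]
    yc, xc = [int(rng.uniform(-x, 2 * img_size + x)) for x in border]
    return xc, yc


xc, yc = sample_mosaic_center(640)
print(xc, yc)  # both values lie between 320 and 960
```

A center such as (808, 862) is simply one draw from this range.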

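4> Four-in-one stitching: method and walkthrough

After img, _, (h, w) = load_image(self, index) returns, execution is back in load_mosaic.

Summary of the two figures above: h and w are the height and width of the image as loaded; xc and yc are the computed center of the large image, and this center is assigned to the bottom-right corner of the first image; max(xc - w, 0) handles the case where the small image would extend past the large image (out of bounds), in which case position 0 of the large image is used.

After computing the offsets and updating the labels, the first small image has been placed in the top-left of the large image. The second image goes in the top-right, and the third and fourth are computed and stitched into the large image in the same way.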
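
The placement arithmetic can be tried in isolation. The sketch below copies the coordinate formulas from load_mosaic into a standalone function (the function name and the example image size of 640x480 are assumptions for illustration) and returns the target rectangle in the large image, the source rectangle in the small image, and the label offsets padw and padh.

```python
def mosaic_tile_coords(i, xc, yc, w, h, s):
    """Return ((x1a, y1a, x2a, y2a), (x1b, y1b, x2b, y2b), (padw, padh)) for tile i.

    i: 0 top-left, 1 top-right, 2 bottom-left, 3 bottom-right.
    (xc, yc): mosaic center; (w, h): small image size; s: img_size.
    """
    if i == 0:    # top left: bottom-right corner of the tile sits at the center
        a = (max(xc - w, 0), max(yc - h, 0), xc, yc)
        b = (w - (a[2] - a[0]), h - (a[3] - a[1]), w, h)
    elif i == 1:  # top right
        a = (xc, max(yc - h, 0), min(xc + w, s * 2), yc)
        b = (0, h - (a[3] - a[1]), min(w, a[2] - a[0]), h)
    elif i == 2:  # bottom left
        a = (max(xc - w, 0), yc, xc, min(s * 2, yc + h))
        b = (w - (a[2] - a[0]), 0, w, min(a[3] - a[1], h))
    elif i == 3:  # bottom right
        a = (xc, yc, min(xc + w, s * 2), min(s * 2, yc + h))
        b = (0, 0, min(w, a[2] - a[0]), min(a[3] - a[1], h))
    else:
        raise ValueError("tile index must be 0..3")
    pad = (a[0] - b[0], a[1] - b[1])  # offsets added to the box coordinates
    return a, b, pad


for i in range(4):
    print(i, mosaic_tile_coords(i, 808, 862, 640, 480, 640))
```

For the top-right tile the source rectangle is narrower than the image (472 of 640 columns), which shows how a tile is cropped when it would cross the edge of the large image.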

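Next, data augmentation is applied to the data and labels:

The augmentation method that is stepped into (implemented with OpenCV rather than torchvision transforms) is as follows: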

def random_perspective(img, targets=(), degrees=10, translate=.1, scale=.1, shear=10, perspective=0.0, border=(0, 0)):

# torchvision.transforms.RandomAffine(degrees=(-10, 10), translate=(.1, .1), scale=(.9, 1.1), shear=(-10, 10))

# targets = [cls, xyxy]

# the large image is finally resized back to the normal size

height = img.shape[0] + border[0] * 2 # shape(h,w,c)

width = img.shape[1] + border[1] * 2

# rotation, translation, scaling etc. each need a coefficient matrix (see the OpenCV functions; all are random here)

# Center

C = np.eye(3)

C[0, 2] = -img.shape[1] / 2 # x translation (pixels)

C[1, 2] = -img.shape[0] / 2 # y translation (pixels)

# Perspective

P = np.eye(3)

P[2, 0] = random.uniform(-perspective, perspective) # x perspective (about y)

P[2, 1] = random.uniform(-perspective, perspective) # y perspective (about x)

# Rotation and Scale

R = np.eye(3)

a = random.uniform(-degrees, degrees)

# a += random.choice([-180, -90, 0, 90]) # add 90deg rotations to small rotations

s = random.uniform(1 - scale, 1 + scale)

# s = 2 ** random.uniform(-scale, scale)

R[:2] = cv2.getRotationMatrix2D(angle=a, center=(0, 0), scale=s)

# Shear

S = np.eye(3)

S[0, 1] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # x shear (deg)

S[1, 0] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # y shear (deg)

# Translation

T = np.eye(3)

T[0, 2] = random.uniform(0.5 - translate, 0.5 + translate) * width # x translation (pixels)

T[1, 2] = random.uniform(0.5 - translate, 0.5 + translate) * height # y translation (pixels)

# apply all these random transforms together

# Combined rotation matrix

M = T @ S @ R @ P @ C # order of operations (right to left) is IMPORTANT

if (border[0] != 0) or (border[1] != 0) or (M != np.eye(3)).any(): # image changed

if perspective:

img = cv2.warpPerspective(img, M, dsize=(width, height), borderValue=(114, 114, 114))

else: # affine

img = cv2.warpAffine(img, M[:2], dsize=(width, height), borderValue=(114, 114, 114))

# Visualize

# import matplotlib.pyplot as plt

# ax = plt.subplots(1, 2, figsize=(12, 6))[1].ravel()

# ax[0].imshow(img[:, :, ::-1]) # base

# ax[1].imshow(img2[:, :, ::-1]) # warped

# Transform label coordinates: the image changed, so the label coordinates must change with it

n = len(targets)

if n:

# warp points

xy = np.ones((n * 4, 3))

xy[:, :2] = targets[:, [1, 2, 3, 4, 1, 4, 3, 2]].reshape(n * 4, 2) # x1y1, x2y2, x1y2, x2y1

xy = xy @ M.T # transform

if perspective:

xy = (xy[:, :2] / xy[:, 2:3]).reshape(n, 8) # rescale

else: # affine

xy = xy[:, :2].reshape(n, 8)

# create new boxes

x = xy[:, [0, 2, 4, 6]]

y = xy[:, [1, 3, 5, 7]]

xy = np.concatenate((x.min(1), y.min(1), x.max(1), y.max(1))).reshape(4, n).T

# # apply angle-based reduction of bounding boxes

# radians = a * math.pi / 180

# reduction = max(abs(math.sin(radians)), abs(math.cos(radians))) ** 0.5

# x = (xy[:, 2] + xy[:, 0]) / 2

# y = (xy[:, 3] + xy[:, 1]) / 2

# w = (xy[:, 2] - xy[:, 0]) * reduction

# h = (xy[:, 3] - xy[:, 1]) * reduction

# xy = np.concatenate((x - w / 2, y - h / 2, x + w / 2, y + h / 2)).reshape(4, n).T

# clip boxes

xy[:, [0, 2]] = xy[:, [0, 2]].clip(0, width)

xy[:, [1, 3]] = xy[:, [1, 3]].clip(0, height)

# filter candidates

i = box_candidates(box1=targets[:, 1:5].T * s, box2=xy.T)

targets = targets[i]

targets[:, 1:5] = xy[i]

return img, targets

Finally the image data and labels are returned, ending the random_perspective augmentation; the call to load_mosaic ends as well, and execution returns to __getitem__.
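
The label update inside random_perspective boils down to this: transform the four corners of each box with the combined matrix M, then take the min and max to get a new axis-aligned box. The following is a simplified pure-Python sketch for the affine case only (no perspective division, no clipping or candidate filtering); the function name is hypothetical.

```python
def warp_box(box, M):
    """Apply a 3x3 affine matrix M (nested lists) to an xyxy box.

    Returns the axis-aligned box enclosing the four transformed corners.
    """
    x1, y1, x2, y2 = box
    corners = [(x1, y1), (x2, y2), (x1, y2), (x2, y1)]
    xs, ys = [], []
    for x, y in corners:
        xs.append(M[0][0] * x + M[0][1] * y + M[0][2])
        ys.append(M[1][0] * x + M[1][1] * y + M[1][2])
    return [min(xs), min(ys), max(xs), max(ys)]


translate = [[1, 0, 10], [0, 1, 20], [0, 0, 1]]   # shift by (10, 20)
rotate90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]     # 90 degree rotation about the origin
print(warp_box([0, 0, 10, 10], translate))
print(warp_box([0, 0, 2, 1], rotate90))
```

Because the enclosing box is taken after the transform, a rotated box generally grows, which is one reason the original code filters the results with box_candidates.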

5> Building a batch with __getitem__

Back in __getitem__ in datasets.py, the final part of the code is:

nL = len(labels) # number of labels

if nL: # 1. convert the label format 2. normalize the label values

labels[:, 1:5] = xyxy2xywh(labels[:, 1:5]) # convert xyxy to xywh

labels[:, [2, 4]] /= img.shape[0] # normalized height 0-1

labels[:, [1, 3]] /= img.shape[1] # normalized width 0-1

if self.augment: # random flips

# flip up-down

if random.random() < hyp['flipud']:

img = np.flipud(img)

if nL:

labels[:, 2] = 1 - labels[:, 2]

# flip left-right

if random.random() < hyp['fliplr']:

img = np.fliplr(img)

if nL:

labels[:, 1] = 1 - labels[:, 1]

labels_out = torch.zeros((nL, 6))

if nL:

labels_out[:, 1:] = torch.from_numpy(labels)

# Convert

img = img[:, :, ::-1].transpose(2, 0, 1) # BGR to RGB, to 3x416x416 (channel-first, as PyTorch expects)

img = np.ascontiguousarray(img)

return torch.from_numpy(img), labels_out, self.img_files[index], shapes

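Finally the image data converted to a tensor, the label data and the shapes value are returned. This completes fetching one sample and its labels via __getitem__; __getitem__ is called once for each sample in a batch, and collate_fn stacks the results. The resulting tensors are then ready for the network's forward and backward passes during training.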
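
The last two label steps in __getitem__ (pixel xyxy to normalized xywh, then mirroring the center on a flip) can be checked by hand with a small sketch. The helper names below are hypothetical; they restate the arithmetic for a single box rather than reproduce the array code.

```python
def xyxy_to_norm_xywh(box, img_w, img_h):
    """Pixel corner box -> normalized (center x, center y, width, height)."""
    x1, y1, x2, y2 = box
    return [(x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h,
            (x2 - x1) / img_w, (y2 - y1) / img_h]


def flip_lr(xywh):
    """Left-right flip: only the normalized center x changes."""
    x, y, w, h = xywh
    return [1 - x, y, w, h]


def flip_ud(xywh):
    """Up-down flip: only the normalized center y changes."""
    x, y, w, h = xywh
    return [x, 1 - y, w, h]


b = xyxy_to_norm_xywh([0, 0, 320, 640], 640, 640)
print(b, flip_lr(b))
```

Normalizing first is what makes the flip a simple 1 - x or 1 - y, independent of the image size.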

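(2) Network model details and logic

6) Installing a tool to visualize the network architecture

<1> Web version: open https://netron.app/ and load the model file (for example the pretrained model files yolov5s.pt and yolov5s.onnx in the yolov5 models directory; open them directly, and note that the onnx file shows the structure and data flow more clearly). The onnx file is converted from the pt file in code: starting from the second step under <2> below, run export.py from the source with the appropriate arguments.

<2> Manual installation (source available at https://github.com/lutzroeder/netron):

7) Reading the YOLOv5 network configuration file

yolov5s.yaml holds the network structure parameters, including the feature-extraction layers and the output layers. Its main contents are as follows: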

# parameters

nc: 2 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

# anchors

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Focus, [64, 3]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, BottleneckCSP, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 9, BottleneckCSP, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, BottleneckCSP, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 1, SPP, [1024, [5, 9, 13]]],

[-1, 3, BottleneckCSP, [1024, False]], # 9

]

# YOLOv5 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, BottleneckCSP, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, BottleneckCSP, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, BottleneckCSP, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, BottleneckCSP, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

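<1> The parameters section

1> nc: number of classes

2> depth_multiple: the model depth factor, i.e. the ratio applied to repeated blocks. A number value greater than 1 in the configuration is multiplied by this factor to get the actual repeat count, for example:

3> width_multiple: the ratio applied to the number of convolution kernels; the number of output feature maps is also multiplied by this value, for example:

<2> anchors: the anchor box parameters

<3> backbone: the feature-extraction module

<4> head: the detection output configuration:

The source code first reads this configuration file and then calls the corresponding method to parse its contents.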
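
The effect of depth_multiple and width_multiple can be worked through with the two expressions used in parse_model. In this sketch make_divisible is written as a ceiling to the next multiple of the divisor, which is an assumption about the helper's behavior; the function names scaled_depth and scaled_width are hypothetical.

```python
import math


def make_divisible(x, divisor):
    # Assumed behavior: round up to the nearest multiple of divisor
    return math.ceil(x / divisor) * divisor


def scaled_depth(n, gd):
    """Actual repeat count: n = max(round(n * gd), 1) if n > 1 else n."""
    return max(round(n * gd), 1) if n > 1 else n


def scaled_width(c2, gw, divisor=8):
    """Actual output channels: c2 * gw rounded to a multiple of 8."""
    return make_divisible(c2 * gw, divisor)


# yolov5s.yaml: depth_multiple 0.33, width_multiple 0.50
print(scaled_depth(9, 0.33), scaled_depth(3, 0.33), scaled_depth(1, 0.33))
print(scaled_width(64, 0.50), scaled_width(1024, 0.50))
```

So a BottleneckCSP entry with number 9 is repeated 3 times in yolov5s, and the Focus entry with 64 channels produces 32.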

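8) Focus module walkthrough (the first layer of the feature-extraction module)

After opening yolov5s.onnx in the web-based architecture viewer:

Note that in the figure above the height, width and channel count all change.

The output of 32 in the figure above corresponds to 64*0.5=32 from the configuration file, as in the figure below:

It ran one

9) Completing configuration file parsing

Now look at the source code:

In fact, the image and label augmentation described earlier happens later at run time; the network model is loaded and set up for the forward pass first.

The __init__ method of the Model class in yolo.py mainly stacks the network layers.

After reading yolov5s.yaml, __init__ calls self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch]) to define each layer according to the configuration file. The code of parse_model is as follows: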

def parse_model(d, ch): # model_dict, input_channels(3)

logger.info('\n%3s%18s%3s%10s %-40s%-30s' % ('', 'from', 'n', 'params', 'module', 'arguments'))

anchors, nc, gd, gw = d['anchors'], d['nc'], d['depth_multiple'], d['width_multiple']

na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors # number of anchors

no = na * (nc + 5) # number of outputs = anchors * (classes + 5)

layers, save, c2 = [], [], ch[-1] # layers, savelist, ch out

for i, (f, n, m, args) in enumerate(d['backbone'] + d['head']): # from, number, module, args

m = eval(m) if isinstance(m, str) else m # eval strings

for j, a in enumerate(args):

try:

args[j] = eval(a) if isinstance(a, str) else a # eval strings

except:

pass

n = max(round(n * gd), 1) if n > 1 else n # depth gain

if m in [Conv, Bottleneck, SPP, DWConv, MixConv2d, Focus, CrossConv, BottleneckCSP, C3]:

c1, c2 = ch[f], args[0]

# Normal

# if i > 0 and args[0] != no: # channel expansion factor

# ex = 1.75 # exponential (default 2.0)

# e = math.log(c2 / ch[1]) / math.log(2)

# c2 = int(ch[1] * ex ** e)

# if m != Focus:

c2 = make_divisible(c2 * gw, 8) if c2 != no else c2

# Experimental

# if i > 0 and args[0] != no: # channel expansion factor

# ex = 1 + gw # exponential (default 2.0)

# ch1 = 32 # ch[1]

# e = math.log(c2 / ch1) / math.log(2) # level 1-n

# c2 = int(ch1 * ex ** e)

# if m != Focus:

# c2 = make_divisible(c2, 8) if c2 != no else c2

args = [c1, c2, *args[1:]]

if m in [BottleneckCSP, C3]:

args.insert(2, n)

n = 1

elif m is nn.BatchNorm2d:

args = [ch[f]]

elif m is Concat:

c2 = sum([ch[-1 if x == -1 else x + 1] for x in f])

elif m is Detect:

args.append([ch[x + 1] for x in f])

if isinstance(args[1], int): # number of anchors

args[1] = [list(range(args[1] * 2))] * len(f)

else:

c2 = ch[f]

m_ = nn.Sequential(*[m(*args) for _ in range(n)]) if n > 1 else m(*args) # module

t = str(m)[8:-2].replace('__main__.', '') # module type

np = sum([x.numel() for x in m_.parameters()]) # number params

m_.i, m_.f, m_.type, m_.np = i, f, t, np # attach index, 'from' index, type, number params

logger.info('%3s%18s%3s%10.0f %-40s%-30s' % (i, f, n, np, t, args)) # print

save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist

layers.append(m_)

ch.append(c2)

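return nn.Sequential(*layers), sorted(save)

Explanation of the code above:

In the code above, no is the number of outputs, nc is the number of classes, 5 is the four box coordinates plus one confidence score, and na is the number of anchor boxes.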
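
For the configuration in this example the numbers work out as follows. The sketch reuses the two expressions from parse_model with the anchors from yolov5s.yaml and nc=2; the wrapper function name is hypothetical.

```python
anchors = [
    [10, 13, 16, 30, 33, 23],        # P3/8
    [30, 61, 62, 45, 59, 119],       # P4/16
    [116, 90, 156, 198, 373, 326],   # P5/32
]


def outputs_per_cell(anchors, nc):
    """Return (na, no) as computed at the top of parse_model."""
    na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors
    no = na * (nc + 5)  # anchors * (classes + 4 box values + 1 confidence)
    return na, no


print(outputs_per_cell(anchors, 2))
```

Each anchor row holds three width/height pairs, so na is 3 and no is 3 * (2 + 5) = 21.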

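At this point the arguments for the Focus module in this example are built. Then m_ = nn.Sequential(*[m(*args) for _ in range(n)]) if n > 1 else m(*args) instantiates Focus from common.py (calling the __init__ method of the Focus class there), as shown in the figure below:

This example loads the first module, Focus.

Because the last line of __init__ in the figure above calls Conv, we get the next figure (it essentially consists of three parts: conv2d, batchnorm2d, hardswish):

Finally

At this point the configuration file has been read and every network layer has been initialized and added. The next step is how the input tensor (for example the data of one image) is computed.

10) Forward pass

Still in the forward method of class Model in yolo.py: because augment defaults to False, execution goes straight to forward_once. forward_once loops over every module, dispatching to the corresponding class in common.py and executing its forward method.

The figure above shows the input being sliced into blocks and concatenated: the channel count becomes 3*4=12, while w and h are each halved, to 2 in this example.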
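
The slicing step can be reproduced on a tiny array. This is a NumPy sketch of the slice-and-concatenate pattern only (the real Focus module works on torch tensors and follows the concatenation with a Conv); the function name is hypothetical and the 4x4 input is chosen so the result is 2x2.

```python
import numpy as np


def focus_slice(x):
    """Take every other pixel in four phase offsets and stack them on the channel axis.

    x has shape (batch, channels, height, width); the result has shape
    (batch, 4 * channels, height / 2, width / 2).
    """
    return np.concatenate(
        [x[..., ::2, ::2],     # even rows, even columns
         x[..., 1::2, ::2],    # odd rows, even columns
         x[..., ::2, 1::2],    # even rows, odd columns
         x[..., 1::2, 1::2]],  # odd rows, odd columns
        axis=1,
    )


x = np.arange(48).reshape(1, 3, 4, 4)
y = focus_slice(x)
print(x.shape, '->', y.shape)
```

No pixel is discarded: spatial resolution is traded for channels, so the following convolution sees all the original values at half the width and height.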

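Following the Focus structure, execution then enters class BatchNorm2d(_BatchNorm) in batchnorm.py, and finally the activation function is applied.

11) How the BottleneckCSP layer is computed

Open class BottleneckCSP in common.py. __init__ defines each layer of the BottleneckCSP module (Conv, Conv2d, BatchNorm2d, LeakyReLU, Sequential), and forward defines the order in which those layers are computed and combined.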

class BottleneckCSP(nn.Module):

# CSP Bottleneck https://github.com/WongKinYiu/CrossStagePartialNetworks

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super(BottleneckCSP, self).__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)

self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)

self.cv4 = Conv(2 * c_, c2, 1, 1)

self.bn = nn.BatchNorm2d(2 * c_) # applied to cat(cv2, cv3)

self.act = nn.LeakyReLU(0.1, inplace=True)

self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

def forward(self, x):

y1 = self.cv3(self.m(self.cv1(x)))

y2 = self.cv2(x)

return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))

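This completes the BottleneckCSP layer computation.

12) SPP layer computation in detail

Open the configuration file:

Now jump to the forward method of the SPP module in common.py, as shown in the figure below:

In SPP the first convolution reduces the channel dimension. Each of the three max-pooling branches still outputs 256 feature maps; concatenated with the un-pooled input they give 1024 feature maps, and a final convolution halves that again. The // used in SPP's __init__ is floor division.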
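
The channel counts quoted above can be verified with simple arithmetic. The sketch assumes the SPP layer of yolov5s receives and emits 512 channels (1024 * 0.5), uses kernels 5, 9 and 13 from the configuration, and pads each max pool by k // 2 with stride 1 so the spatial size is kept; the helper names are hypothetical.

```python
def spp_channels(c1, c2, kernels=(5, 9, 13)):
    """Return (hidden channels, concatenated channels, output channels)."""
    c_ = c1 // 2                      # first 1x1 conv halves the channels
    cat = c_ * (len(kernels) + 1)     # un-pooled input plus one branch per kernel
    return c_, cat, c2                # final 1x1 conv maps cat -> c2


def pooled_size(size, k, stride=1):
    """Output size of a max pool with padding k // 2."""
    pad = k // 2
    return (size + 2 * pad - k) // stride + 1


print(spp_channels(512, 512))
print([pooled_size(20, k) for k in (5, 9, 13)])
```

Because every branch keeps the same height and width, the four tensors can be concatenated along the channel axis without any resizing.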

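This ends the feature-extraction part. Next comes the Head:

13) Head walkthrough

Before the Concat in the figure above, an upsampling step is required so that w and h match; only then can the tensors be concatenated.

14) Upsampling and concatenation

After upsampling and concatenation with layer 6, w and h are unchanged, but the feature-map counts add up to 256+256=512, as in the printed layer 13 in the figure below:

15) Output analysis

The configuration file shows that layers 17, 20 and 23 are taken as inputs, and three results are output, as shown in the figure below:

This completes the source code for feature extraction, computation and output as defined by the configuration file.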
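
As a rough check on the three outputs, the grid sizes follow from the strides noted in the configuration (P3/8, P4/16, P5/32). The sketch below assumes a 640x640 input, 3 anchors per scale and nc=2, and assumes each output is laid out as (anchors, grid height, grid width, nc + 5); the function name is hypothetical and the batch dimension is omitted.

```python
def detect_output_shapes(img_size=640, strides=(8, 16, 32), na=3, nc=2):
    """Per-scale output shape, assuming layout (na, ny, nx, nc + 5)."""
    shapes = []
    for s in strides:
        grid = img_size // s              # cells along each side at this stride
        shapes.append((na, grid, grid, nc + 5))
    return shapes


for name, shape in zip(('P3', 'P4', 'P5'), detect_output_shapes()):
    print(name, shape)
```

The finest grid (80x80) is suited to small objects and the coarsest (20x20) to large ones, matching the small, medium and large comments in the head configuration.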

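(3) Parameters, training strategy, final results and model demonstration

16) Hyperparameters

Open /data/hyp.scratch.yaml; it configures many initial training parameters, as shown in the figure below:

17) Command-line arguments

The main block of train.py is as follows: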

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--weights', type=str, default='yolov5s.pt', help='initial weights path')

parser.add_argument('--cfg', type=str, default='', help='model.yaml path') # network configuration

parser.add_argument('--data', type=str, default='data/coco128.yaml', help='data.yaml path') # dataset

parser.add_argument('--hyp', type=str, default='data/hyp.scratch.yaml', help='hyperparameters path')

parser.add_argument('--epochs', type=int, default=300)

parser.add_argument('--batch-size', type=int, default=16, help='total batch size for all GPUs')

parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='[train, test] image sizes')

parser.add_argument('--rect', action='store_true', help='rectangular training') # rectangular training

parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training') # continue a previous run

parser.add_argument('--nosave', action='store_true', help='only save final checkpoint') # do not save intermediate checkpoints

parser.add_argument('--notest', action='store_true', help='only test final epoch') # skip testing until the final epoch

parser.add_argument('--noautoanchor', action='store_true', help='disable autoanchor check') # whether to skip the anchor check

parser.add_argument('--evolve', action='store_true', help='evolve hyperparameters') # hyperparameter evolution

parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')

parser.add_argument('--cache-images', action='store_true', help='cache images for faster training') # cache images

parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')

parser.add_argument('--name', default='', help='renames experiment folder exp{N} to exp{N}_{name} if supplied')

parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')  # whether to train at multiple scales

parser.add_argument('--single-cls', action='store_true', help='train as single-class dataset')  # whether to treat the data as one class

parser.add_argument('--adam', action='store_true', help='use torch.optim.Adam() optimizer')  # optimizer choice

parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')  # BatchNorm synchronized across GPUs

parser.add_argument('--local_rank', type=int, default=-1, help='DDP parameter, do not modify')  # GPU ID of this process

parser.add_argument('--logdir', type=str, default='runs/', help='logging directory')

parser.add_argument('--workers', type=int, default=0, help='maximum number of dataloader workers')  # Windows users: leave this at 0

opt = parser.parse_args()
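Two of the flags defined above are easy to misread: `--resume` combines `nargs='?'` with `const=True`, and `--img-size` uses `nargs='+'`. The minimal sketch below rebuilds only those two arguments with the standard `argparse` module to show what each invocation produces. The helper name `build_parser` and the checkpoint path are made up for illustration; the padding line at the end is the same expression that train.py applies to `opt.img_size` later in this listing.

```python
import argparse


def build_parser():
    """Rebuild only --resume and --img-size (illustrative helper, not part of train.py)."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--resume', nargs='?', const=True, default=False)
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640])
    return parser


parser = build_parser()

no_flag = parser.parse_args([])                # flag absent: falls back to default
bare_flag = parser.parse_args(['--resume'])    # flag without a value: takes const
with_path = parser.parse_args(['--resume', 'runs/exp0/weights/last.pt'])  # example path
one_size = parser.parse_args(['--img-size', '512'])  # a single size is accepted

# Pad a single value to [train_size, test_size], as train.py does afterwards.
one_size.img_size.extend([one_size.img_size[-1]] * (2 - len(one_size.img_size)))

print(no_flag.resume, bare_flag.resume, with_path.resume)
print(one_size.img_size)
```

This is why the resume branch later checks `isinstance(opt.resume, str)`: the value is either the boolean `True` (use the most recent run) or an explicit checkpoint path.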

# Set DDP variables. WORLD_SIZE: number of processes; RANK: index of this process

opt.total_batch_size = opt.batch_size

opt.world_size = int(os.environ['WORLD_SIZE']) if 'WORLD_SIZE' in os.environ else 1

opt.global_rank = int(os.environ['RANK']) if 'RANK' in os.environ else -1

set_logging(opt.global_rank)

if opt.global_rank in [-1, 0]:

check_git_status()

# Resume

if opt.resume: # resume an interrupted run (whether to continue training)

# use the checkpoint path that was passed in, or the most recent run (last.pt under runs/)

ckpt = opt.resume if isinstance(opt.resume, str) else get_latest_run() # specified or most recent path

log_dir = Path(ckpt).parent.parent # runs/exp0

assert os.path.isfile(ckpt), 'ERROR: --resume checkpoint does not exist'

with open(log_dir / 'opt.yaml') as f:

opt = argparse.Namespace(**yaml.load(f, Loader=yaml.FullLoader)) # replace

opt.cfg, opt.weights, opt.resume = '', ckpt, True

logger.info('Resuming training from %s' % ckpt)

else:  # not resuming: use the parameters configured on the command line

# opt.hyp = opt.hyp or ('hyp.finetune.yaml' if opt.weights else 'hyp.scratch.yaml')

opt.data, opt.cfg, opt.hyp = check_file(opt.data), check_file(opt.cfg), check_file(opt.hyp) # check files

assert len(opt.cfg) or len(opt.weights), 'either --cfg or --weights must be specified'

opt.img_size.extend([opt.img_size[-1]] * (2 - len(opt.img_size))) # extend to 2 sizes (train, test)

log_dir = increment_dir(Path(opt.logdir) / 'exp', opt.name) # runs/exp1

device = select_device(opt.device, batch_size=opt.batch_size)

# DDP mode: distributed training; skip this part if you do not have multiple GPUs

if opt.local_rank != -1:

assert torch.cuda.device_count() > opt.local_rank

torch.cuda.set_device(opt.local_rank)  # select the GPU for this process

device = torch.device('cuda', opt.local_rank)

dist.init_process_group(backend='nccl', init_method='env://') # distributed backend

assert opt.batch_size % opt.world_size == 0, '--batch-size must be multiple of CUDA device count'

opt.batch_size = opt.total_batch_size // opt.world_size
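The DDP bookkeeping above comes down to two things: reading `WORLD_SIZE` and `RANK` from the environment, and dividing the total batch size evenly across processes. The sketch below restates that logic as one pure-Python function so it can be run without any GPUs. The function name `ddp_settings` and its `environ` parameter are assumptions added for testability and do not exist in train.py. Note also that train.py only performs the division when `--local_rank` is set, whereas this sketch always divides (by 1 in the single-process case).

```python
import os


def ddp_settings(total_batch_size, environ=None):
    """Return (world_size, global_rank, per_process_batch_size).

    `environ` is a hypothetical parameter so the logic can be exercised
    without modifying the real process environment.
    """
    environ = os.environ if environ is None else environ
    world_size = int(environ['WORLD_SIZE']) if 'WORLD_SIZE' in environ else 1
    global_rank = int(environ['RANK']) if 'RANK' in environ else -1
    if total_batch_size % world_size != 0:
        raise ValueError('--batch-size must be a multiple of the process count')
    return world_size, global_rank, total_batch_size // world_size


print(ddp_settings(16, {}))                                # single process
print(ddp_settings(16, {'WORLD_SIZE': '4', 'RANK': '2'}))  # one of four processes
```

With `--batch-size 16` on four GPUs, each process therefore sees 4 images per step, while `opt.total_batch_size` keeps the original value of 16.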

logger.info(opt)

with open(opt.hyp) as f:

hyp = yaml.load(f, Loader=yaml.FullLoader) # load hyps

# Train

if not opt.evolve:

tb_writer = None

if opt.global_rank in [-1, 0]:

logger.info(f'Start Tensorboard with "tensorboard --logdir {opt.logdir}", view at http://localhost:6006/')

tb_writer = SummaryWriter(log_dir=log_dir) # runs/exp0

train(hyp, opt, device, tb_writer)

# hyperparameter search and mutation

# Evolve hyperparameters (optional); see GitHub issue: https://github.com/ultralytics/yolov3/issues/392

else:

# Hyperparameter evolution metadata (mutation scale 0-1, lower_limit, upper_limit)

meta = {'lr0': (1, 1e-5, 1e-1), # initial learning rate (SGD=1E-2, Adam=1E-3)

'lrf': (1, 0.01, 1.0), # final OneCycleLR learning rate (lr0 * lrf)

'momentum': (0.3, 0.6, 0.98), # SGD momentum/Adam beta1

'weight_decay': (1, 0.0, 0.001), # optimizer weight decay

'warmup_epochs': (1, 0.0, 5.0), # warmup epochs (fractions ok)

'warmup_momentum': (1, 0.0, 0.95), # warmup initial momentum

'warmup_bias_lr': (1, 0.0, 0.2), # warmup initial bias lr

'box': (1, 0.02, 0.2), # box loss gain

'cls': (1, 0.2, 4.0), # cls loss gain

'cls_pw': (1, 0.5, 2.0), # cls BCELoss positive_weight

'obj': (1, 0.2, 4.0), # obj loss gain (scale with pixels)

'obj_pw': (1, 0.5, 2.0), # obj BCELoss positive_weight

'iou_t': (0, 0.1, 0.7), # IoU training threshold

'anchor_t': (1, 2.0, 8.0), # anchor-multiple threshold

'anchors': (2, 2.0, 10.0), # anchors per output grid (0 to ignore)

'fl_gamma': (0, 0.0, 2.0), # focal loss gamma (efficientDet default gamma=1.5)

'hsv_h': (1, 0.0, 0.1), # image HSV-Hue augmentation (fraction)

'hsv_s': (1, 0.0, 0.9), # image HSV-Saturation augmentation (fraction)

'hsv_v': (1, 0.0, 0.9), # image HSV-Value augmentation (fraction)

'degrees': (1, 0.0, 45.0), # image rotation (+/- deg)

'translate': (1, 0.0, 0.9), # image translation (+/- fraction)

'scale': (1, 0.0, 0.9), # image scale (+/- gain)

'shear': (1, 0.0, 10.0), # image shear (+/- deg)

'perspective': (0, 0.0, 0.001), # image perspective (+/- fraction), range 0-0.001

'flipud': (1, 0.0, 1.0), # image flip up-down (probability)

'fliplr': (0, 0.0, 1.0), # image flip left-right (probability)

'mosaic': (1, 0.0, 1.0), # image mosaic (probability)

'mixup': (1, 0.0, 1.0)} # image mixup (probability)

assert opt.local_rank == -1, 'DDP mode not implemented for --evolve'

opt.notest, opt.nosave = True, True # only test/save final epoch

# ei = [isinstance(x, (int, float)) for x in hyp.values()] # evolvable indices

yaml_file = Path(opt.logdir) / 'evolve' / 'hyp_evolved.yaml' # save best result here

if opt.bucket:

os.system('gsutil cp gs://%s/evolve.txt .' % opt.bucket) # download evolve.txt if exists

for _ in range(300): # generations to evolve

if os.path.exists('evolve.txt'): # if evolve.txt exists: select best hyps and mutate

# Select parent(s)

parent = 'single' # parent selection method: 'single' or 'weighted'

x = np.loadtxt('evolve.txt', ndmin=2)

n = min(5, len(x)) # number of previous results to consider

x = x[np.argsort(-fitness(x))][:n] # top n mutations

w = fitness(x) - fitness(x).min() # weights

if parent == 'single' or len(x) == 1:

# x = x[random.randint(0, n - 1)] # random selection

x = x[random.choices(range(n), weights=w)[0]] # weighted selection

elif parent == 'weighted':

x = (x * w.reshape(n, 1)).sum(0) / w.sum() # weighted combination

# Mutate

mp, s = 0.8, 0.2 # mutation probability, sigma

npr = np.random

npr.seed(int(time.time()))

g = np.array([x[0] for x in meta.values()]) # gains 0-1

ng = len(meta)

v = np.ones(ng)

while all(v == 1): # mutate until a change occurs (prevent duplicates)

v = (g * (npr.random(ng) < mp) * npr.randn(ng) * npr.random() * s + 1).clip(0.3, 3.0)

for i, k in enumerate(hyp.keys()): # plt.hist(v.ravel(), 300)

hyp[k] = float(x[i + 7] * v[i]) # mutate

# Constrain to limits

for k, v in meta.items():

hyp[k] = max(hyp[k], v[1]) # lower limit

hyp[k] = min(hyp[k], v[2]) # upper limit

hyp[k] = round(hyp[k], 5) # significant digits

# Train mutation

results = train(hyp.copy(), opt, device)

# Write mutation results

print_mutation(hyp.copy(), results, yaml_file, opt.bucket)

# Plot results

plot_evolution(yaml_file)

print(f'Hyperparameter evolution complete. Best results saved as: {yaml_file}\n'

f'Command to train a new model with these hyperparameters: $ python train.py --hyp {yaml_file}')

18) Training flow walkthrough
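The mutation step inside the `--evolve` branch of the listing above is dense, so here it is isolated as a small sketch. Each hyperparameter is multiplied by a random factor centred on 1, the factor is clipped to [0.3, 3.0], and the result is clamped to the limits given in `meta`. This is a pure-Python restatement using the standard `random` module, not the NumPy code from train.py. The function name `mutate`, the `seed` parameter, and the three example hyperparameters are illustrative assumptions.

```python
import random


def mutate(parent, meta, mp=0.8, sigma=0.2, seed=0):
    """Return a mutated copy of `parent` (dict: hyperparameter name -> value).

    `meta` maps each name to (gain, lower_limit, upper_limit) as in the listing.
    At least one gain must be non-zero, otherwise the retry loop never ends.
    """
    rng = random.Random(seed)  # seeded here for reproducibility (an addition)
    names = list(meta)
    factors = [1.0] * len(names)
    while all(f == 1.0 for f in factors):  # retry until something changes
        scale = rng.random()               # one shared random scale per attempt
        factors = []
        for name in names:
            gain = meta[name][0]
            active = 1.0 if rng.random() < mp else 0.0  # mutate with probability mp
            f = gain * active * rng.gauss(0.0, 1.0) * scale * sigma + 1.0
            factors.append(min(max(f, 0.3), 3.0))       # clip factor to [0.3, 3.0]
    child = {}
    for name, f in zip(names, factors):
        _, lo, hi = meta[name]
        child[name] = round(min(max(parent[name] * f, lo), hi), 5)  # clamp, then round
    return child


# Illustrative subset of the meta table and made-up parent values.
meta = {'lr0': (1, 1e-5, 1e-1), 'momentum': (0.3, 0.6, 0.98), 'iou_t': (0, 0.1, 0.7)}
parent = {'lr0': 0.01, 'momentum': 0.937, 'iou_t': 0.2}
child = mutate(parent, meta)
print(child)
```

A gain of 0 (as for `iou_t`) makes the factor exactly 1, which is how the `meta` table freezes a hyperparameter during evolution.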

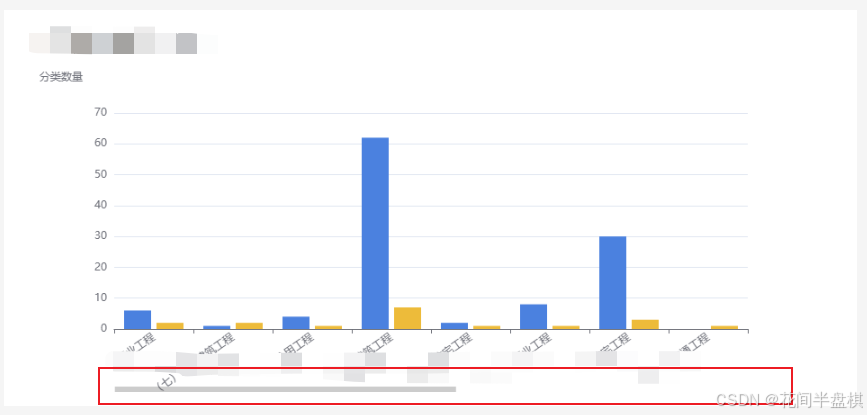

During training, everything about each run is saved, so results can be reproduced and problems tracked down.

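Normally the parameters are updated after every batch, but here they are updated only once every 4 batches (gradient accumulation), as the screenshot shows.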
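The figure of 4 batches is consistent with how YOLOv5 releases from this period compute the accumulation count: a nominal batch size of 64 is divided by the actual batch size, so `--batch-size 16` gives 64 / 16 = 4. The sketch below is a simplified illustration of that idea under the assumption that the formula matches the train.py being discussed. The function names are made up, and the loop only counts updates instead of running a real optimizer.

```python
def accumulation_steps(total_batch_size, nominal_batch_size=64):
    """Number of batches whose gradients are summed before one optimizer step."""
    return max(round(nominal_batch_size / total_batch_size), 1)


def count_updates(num_batches, accumulate):
    """Simulate a training loop and count how often the weights would change."""
    grad_sum, updates = 0.0, 0
    for ni in range(1, num_batches + 1):
        grad_sum += 1.0          # stand-in for loss.backward() adding into .grad
        if ni % accumulate == 0:
            updates += 1         # stand-in for optimizer.step()
            grad_sum = 0.0       # stand-in for optimizer.zero_grad()
    return updates


print(accumulation_steps(16))   # 4
print(count_updates(12, 4))     # 3
```

The effect is that small batches behave roughly like a batch of 64 from the optimizer's point of view, which keeps the hyperparameters usable across different GPU memory sizes.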

The optimizer setup mainly concerns how weights, biases, and the learning rate are handled.
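As a rough illustration of what such a setup can look like, the sketch below splits parameters into three groups (biases, weights that receive weight decay, and everything else such as BatchNorm weights) and computes a cosine learning-rate multiplier that falls from 1.0 to `lrf`. This follows the general pattern used by YOLOv5 releases from this period, but the function names, the example parameter names, and the exact formula are assumptions for illustration, not a copy of the project's code. It works on parameter names only, so it runs without PyTorch.

```python
import math


def split_param_groups(param_names):
    """Split parameter names into (no_decay, decay, biases)."""
    no_decay, decay, biases = [], [], []
    for name in param_names:
        if '.bias' in name:
            biases.append(name)      # biases: no weight decay
        elif '.weight' in name and '.bn' not in name:
            decay.append(name)       # conv/linear weights: weight decay applied
        else:
            no_decay.append(name)    # e.g. BatchNorm weights: no weight decay
    return no_decay, decay, biases


def cosine_lr_factor(epoch, epochs, lrf):
    """Multiplier on lr0: 1.0 at epoch 0, decaying along a cosine to lrf."""
    return (1 + math.cos(epoch * math.pi / epochs)) / 2 * (1 - lrf) + lrf


# Hypothetical parameter names in the style of a PyTorch state dict.
names = ['model.0.conv.weight', 'model.0.bn.weight', 'model.0.bn.bias', 'model.24.m.0.bias']
no_decay, decay, biases = split_param_groups(names)
print(no_decay, decay, biases)
print(cosine_lr_factor(0, 300, 0.2), cosine_lr_factor(300, 300, 0.2))
```

Keeping biases and BatchNorm weights out of the decay group is a common choice, since shrinking them toward zero rarely helps and can hurt training stability.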

19) Overview of the training strategies

20) Model iteration process