Project repository

GitHub - mendableai/firecrawl: 🔥 Turn entire websites into LLM-ready markdown or structured data. Scrape, crawl and extract with a single API.

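Firecrawl is mostly used to build knowledge bases for large language models (LLMs). It is one step in LLM data preparation (you will come across it in Dify), and also one step in Retrieval-Augmented Generation (RAG), currently the most popular approach to building LLM applications.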

Try it online

https://www.firecrawl.dev/

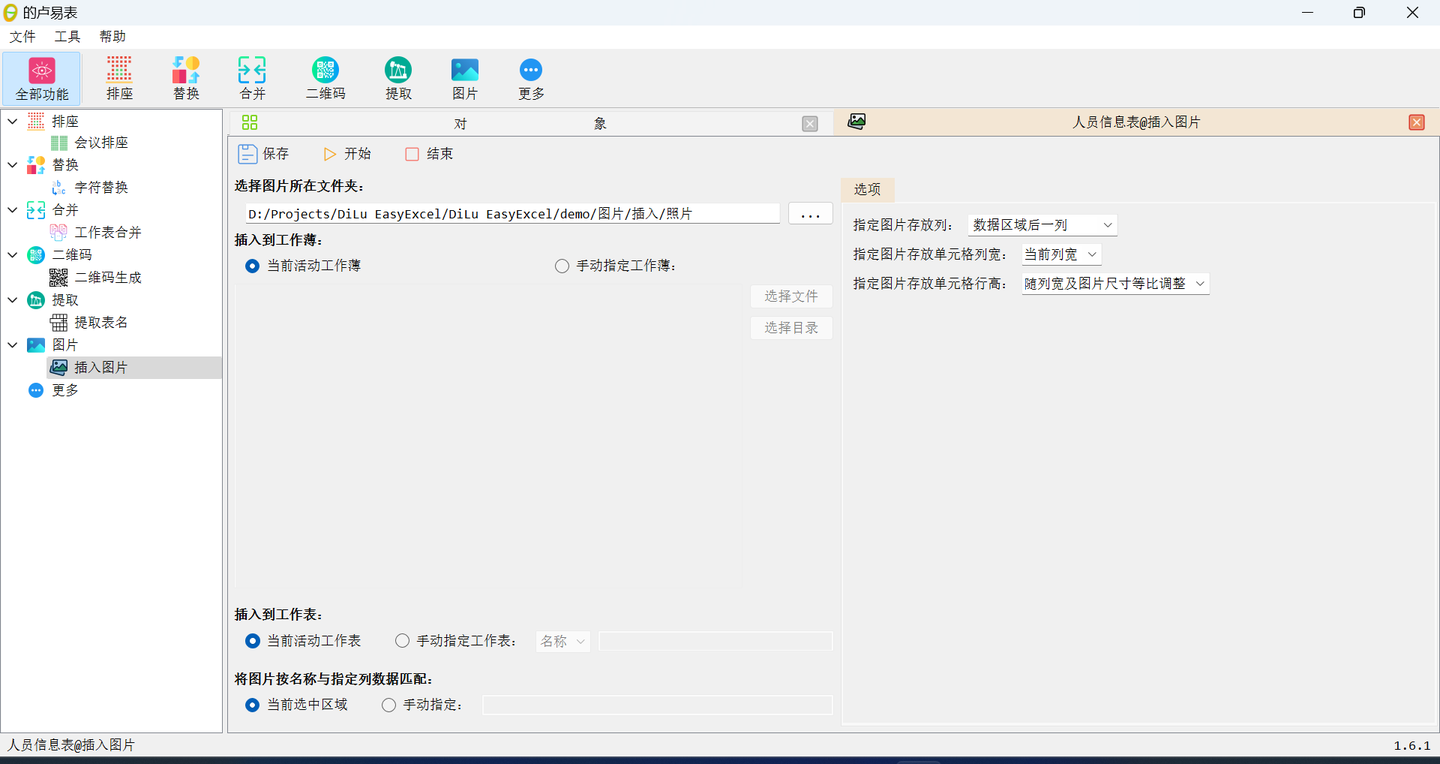

Log in with your email or another method, then click Dashboard.

Your API Key is shown there.

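Analysis

1. Example using the Firecrawl Python SDK

Usage:

1. Get your own API Key from the official site (the free tier allows 500 requests).

2. Open the Python SDK folder in the firecrawl repository.

You can create .py test files there (some of the files prefixed with test_ were written by the author of this post for testing).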
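For step 1, one way to keep the API Key out of your test files is to read it from an environment variable. The following is a minimal sketch; the helper name load_api_key and the variable name FIRECRAWL_API_KEY are assumptions made for illustration, not something the original post prescribes.

```python
import os


def load_api_key(env_var: str = "FIRECRAWL_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The variable name is an assumption for this sketch; use whatever
    name you export in your own shell.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Firecrawl API key"
        )
    return key
```

The returned string can then be passed to FirecrawlApp(api_key=...) in place of a hard-coded key, so the key never ends up in a file you might commit.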

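2. Analyze the search URL (please do not crawl in bulk and disrupt the site's normal operation)

https://re.jd.com/search?keyword=CCD&enc=utf-8

Searching for a different keyword changes the keyword=CCD part of the address bar, so we can switch result pages simply by changing the value after keyword= in the URL. (You can test this in the online playground first.)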
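The keyword substitution described above can be sketched with the standard library alone. The helper name build_search_url is hypothetical; the base URL and the enc=utf-8 parameter come from the example address. Percent-encoding matters because a keyword may contain spaces or Chinese characters.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://re.jd.com/search"


def build_search_url(keyword: str) -> str:
    """Build the search URL for a keyword, percent-encoding it as UTF-8."""
    # quote_via=quote encodes spaces as %20 instead of the default '+'
    query = urlencode({"keyword": keyword, "enc": "utf-8"}, quote_via=quote)
    return f"{BASE_URL}?{query}"


print(build_search_url("CCD"))
# https://re.jd.com/search?keyword=CCD&enc=utf-8
print(build_search_url("Dangerous people"))
# https://re.jd.com/search?keyword=Dangerous%20people&enc=utf-8
```

Building the query with urlencode rather than string concatenation keeps the URL valid for any keyword.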

With Firecrawl, this can be expressed simply in code as follows:

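import urllib.parse

keyword = "Dangerous people"
# URL-encode the keyword so spaces and non-ASCII characters are valid in the URL
keyword_encoded = urllib.parse.quote(keyword)

try:
    # Crawl a website (app is the FirecrawlApp instance created in the complete code in section 3):
    crawl_status = app.crawl_url(
        # JD.com
        f'https://re.jd.com/search?keyword={keyword_encoded}&enc=utf-8',
        params={
            'limit': 10,
            'scrapeOptions': {'formats': ['markdown', 'html']}
        },
    )
except Exception as e:
    print(f"Error calling Firecrawl: {e}")

3. Complete crawler code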

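Set up the environment (the code needs the firecrawl-py and beautifulsoup4 packages), then replace the API Key and the keyword with your own.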

from firecrawl import FirecrawlApp
import json
import urllib.parse
from bs4 import BeautifulSoup


def get_value_in_html(text):
    """Extract product fields from the result-page HTML with BeautifulSoup."""
    soup = BeautifulSoup(text, 'html.parser')
    # Product entries are <li> elements whose clstag attribute contains 'ri_same_recommend'
    items = soup.find_all('li', {'clstag': lambda x: x and 'ri_same_recommend' in x})
    result = []
    for item in items:
        # Use the src attribute of the img tag with class img_k as the image link
        pic_img = item.find('div', class_='pic').find('img', class_='img_k')['src']
        pic_img = f"https:{pic_img}"
        a_tag = item.find('div', class_='li_cen_bot').find('a')
        if a_tag is None:
            continue
        product_link = a_tag['href']
        # Each field falls back to an empty string when its element is missing
        price = a_tag.find('div', class_='commodity_info').find('span', class_='price')
        price = price.text.strip() if price is not None else ''
        title = a_tag.find('div', class_='commodity_tit')
        title = title.text.strip() if title is not None else ''
        comment_span = a_tag.find('div', class_='comment').find('span', class_='praise')
        evaluation = comment_span.text.strip() if comment_span is not None else ''
        product_info = {
            'price': price,
            'title': title,
            'evaluation': evaluation
        }
        result.append({
            "pic_img": pic_img,
            "product_link": product_link,
            "product_info": product_info
        })
    return result

# Use your own API key, shown in the dashboard after logging in
API_KEY = "..."
app = FirecrawlApp(api_key=API_KEY)

if __name__ == '__main__':
    # Change the keyword to search for something else
    keyword = "Dangerous people"
    # URL-encode the keyword so spaces and non-ASCII characters are valid in the URL
    keyword_encode = urllib.parse.quote(keyword)
    try:
        # Crawl a website:
        crawl_status = app.crawl_url(
            # JD.com hot-sale search
            f'https://re.jd.com/search?keyword={keyword_encode}&enc=utf-8',
            params={
                'limit': 10,
                'scrapeOptions': {'formats': ['markdown', 'html']}
            },
        )
        # markdown = crawl_status['data'][0]['markdown']
        # Extract from the HTML (the extraction function uses bs4)
        html = crawl_status['data'][0]['html']
        response = {
            "result_list": get_value_in_html(html)
        }
        print(json.dumps(response, ensure_ascii=False))
    except Exception as e:
        print(f"Error calling Firecrawl: {e}")

4. Returned data

The returned JSON data looks like this:

![Returned JSON data](https://i-blog.csdnimg.cn/direct/2d10312665a44e82823379cec821a34d.png)
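Once the script has printed its JSON, a downstream step usually wants flat rows rather than nested objects. The sketch below assumes the structure built by the crawler code (result_list entries with pic_img, product_link and product_info); the sample values, the "¥" price format, and the helper names parse_price and flatten are made up for illustration.

```python
import json
import re

# Hypothetical sample in the shape printed by the script; the values are invented.
sample = '''
{"result_list": [
  {"pic_img": "https://example.com/a.jpg",
   "product_link": "https://example.com/item/1",
   "product_info": {"price": "¥199.00", "title": "Sample camera", "evaluation": "1200"}},
  {"pic_img": "https://example.com/b.jpg",
   "product_link": "https://example.com/item/2",
   "product_info": {"price": "", "title": "No price listed", "evaluation": ""}}
]}
'''


def parse_price(text):
    """Return the first decimal number in text as a float, or None if there is none."""
    match = re.search(r"\d+(?:\.\d+)?", text.replace(",", ""))
    return float(match.group()) if match else None


def flatten(response):
    """Turn each entry of result_list into one flat dict."""
    rows = []
    for entry in response["result_list"]:
        info = entry["product_info"]
        rows.append({
            "title": info["title"],
            "price": parse_price(info["price"]),
            "link": entry["product_link"],
            "image": entry["pic_img"],
        })
    return rows


rows = flatten(json.loads(sample))
for row in rows:
    print(row["title"], row["price"])
```

Keeping missing prices as None rather than 0 makes it easy to filter out incomplete entries before sorting or exporting.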