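Following up on my previous article, "Running YOLOv8-nano inference with onnxruntime-web": some readers asked whether it could be hooked up to a video stream to recognize objects in real time.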

First, get the camera video stream with getUserMedia

The getUserMedia API gives a page access to the device's camera and microphone. You can use it to obtain a video stream and display it in a `<video>` element on the page.
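Passing `video: true` accepts whatever resolution and camera the browser picks. getUserMedia also takes a constraints object, which is useful here because a smaller frame means less work per frame. A minimal sketch; `buildCameraConstraints` is a hypothetical helper and the 640×480 default is only an assumption:

```javascript
// Hypothetical helper: builds a MediaStreamConstraints object for getUserMedia.
// "ideal" values are preferences, not requirements: the browser picks the closest supported mode.
function buildCameraConstraints({ width = 640, height = 480, facingMode = "user" } = {}) {
  return {
    audio: false, // video only, no microphone
    video: {
      width: { ideal: width },
      height: { ideal: height },
      facingMode, // "user" = front camera, "environment" = rear camera
    },
  };
}

// In the browser:
// const stream = await navigator.mediaDevices.getUserMedia(buildCameraConstraints({ facingMode: "environment" }));
```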

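Notes:

- Browser support: getUserMedia is supported by most modern browsers, but may be unavailable in some older ones. You can check support on the Can I use website (caniuse.com).
- HTTPS: camera access requires a secure context, so the page must be served over HTTPS (`http://localhost` is also treated as secure). If you run into problems during development, make sure your local dev server uses HTTPS or is accessed via localhost.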
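Both checks can be done up front, before calling getUserMedia, so the page can show a clear message instead of a generic error. A minimal sketch; `cameraSupport` is a hypothetical helper that takes the global `window` as a parameter so it can be tested outside a browser:

```javascript
// Hypothetical helper: reports why camera access would fail before calling getUserMedia.
// In the browser, pass the global `window`; it is a parameter only to make the check testable.
function cameraSupport(win) {
  // navigator.mediaDevices is only exposed in secure contexts, so check that first
  if (!win.isSecureContext) {
    return { ok: false, reason: "insecure-context" }; // serve over HTTPS or http://localhost
  }
  const md = win.navigator && win.navigator.mediaDevices;
  if (!md || typeof md.getUserMedia !== "function") {
    return { ok: false, reason: "unsupported" }; // older browser without mediaDevices.getUserMedia
  }
  return { ok: true, reason: null };
}

// Usage in the browser:
// const { ok, reason } = cameraSupport(window);
```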

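```jsx
import React, { useState, useRef, useEffect } from "react";

const App = () => {
  const [hasVideo, setHasVideo] = useState(false); // whether the camera stream was obtained
  const videoRef = useRef(null); // reference to the <video> element

  useEffect(() => {
    let stream = null; // kept locally so the cleanup function can stop it
    let cancelled = false; // set if the component unmounts before the stream arrives

    // Request the camera stream asynchronously
    const getVideoStream = async () => {
      try {
        // Request video only, no audio
        stream = await navigator.mediaDevices.getUserMedia({ video: true });
        if (cancelled) {
          stream.getTracks().forEach((track) => track.stop());
          return;
        }
        // Bind the stream to the <video> element
        if (videoRef.current) {
          videoRef.current.srcObject = stream;
        }
        setHasVideo(true);
      } catch (err) {
        console.error("Error accessing the camera: ", err);
        setHasVideo(false);
      }
    };

    getVideoStream();

    // Cleanup: stop the camera when the component unmounts
    return () => {
      cancelled = true;
      if (stream) {
        stream.getTracks().forEach((track) => track.stop());
      }
    };
  }, []);

  return (
    <div className="video-container">
      {/* Always render <video> so videoRef is set when the stream arrives; rendering it
          only when hasVideo is true leaves the ref null and the stream is never attached */}
      <video
        ref={videoRef}
        autoPlay
        playsInline
        muted
        className="video-stream"
        style={{ display: hasVideo ? "block" : "none" }}
      />
      {!hasVideo && <p>Unable to access the camera.</p>}
    </div>
  );
};

export default App;
```

The result is shown below. However, the bounding boxes cannot be drawn on the `<video>` element itself, so we need to redraw each video frame onto a `<canvas>`; that way we can later draw boxes at the detected positions on top of it.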

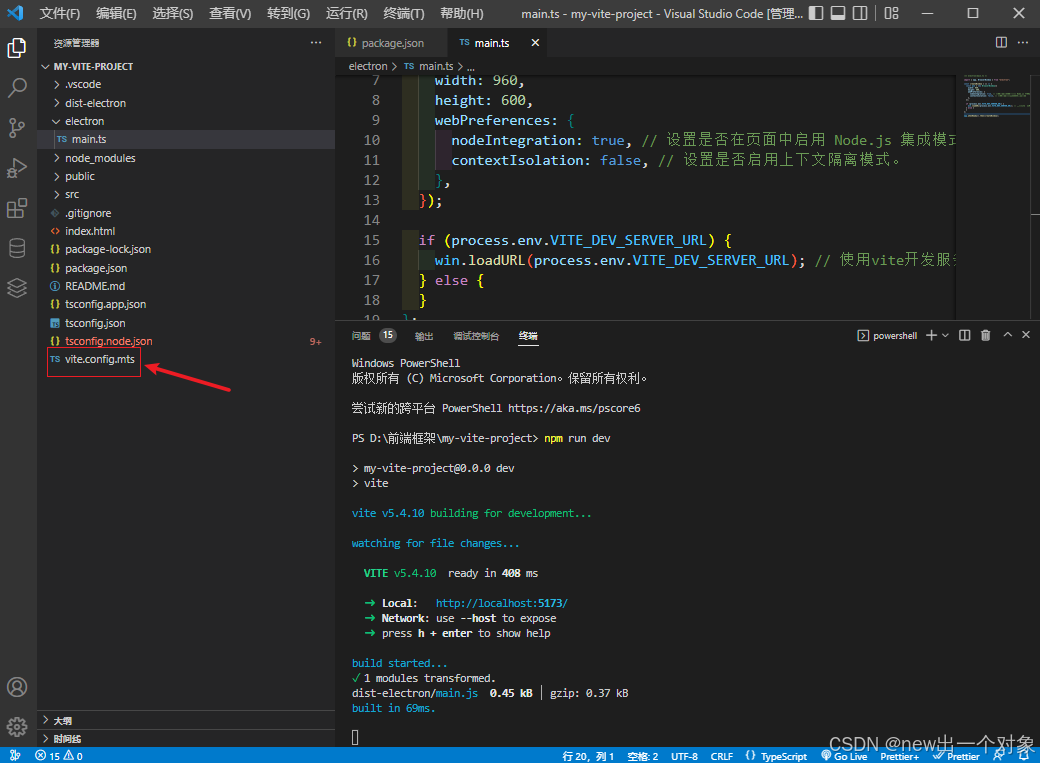

Next, add the code that grabs frames from the video and runs model inference on them:

```jsx
import React, { useEffect, useRef, useState } from "react";
import "./style/Camera.css";
import cv from "@techstark/opencv-js";
import { download } from "./utils/download";
import { InferenceSession, Tensor } from "onnxruntime-web";
import { detectImage } from "./utils/detect";

// Configs
const modelName = "yolov8n.onnx";
const modelInputShape = [1, 3, 640, 640];
const topk = 100;
const iouThreshold = 0.45;
const scoreThreshold = 0.25;

const CameraToCanvas = () => {
  const videoRef = useRef(null);
  const canvasRef = useRef(null);
  const imageRef = useRef(null);
  // The render loop below is created once, so a useState value read inside it would always
  // be the initial null (stale closure). A ref always holds the latest sessions.
  const sessionRef = useRef(null);
  const [loading, setLoading] = useState({ text: "Loading OpenCV.js", progress: null });

  useEffect(() => {
    let stream = null;
    let rafId = null;
    let stopped = false;

    cv["onRuntimeInitialized"] = async () => {
      const baseModelURL = `${process.env.PUBLIC_URL}/model`;

      // Create the YOLOv8 session
      const url = `${baseModelURL}/${modelName}`;
      console.log(`url:${url}`);
      const arrBufNet = await download(url, ["Loading YOLOv8", setLoading]); // (url, logger)
      const yolov8 = await InferenceSession.create(arrBufNet);

      // Create the NMS session
      const arrBufNMS = await download(`${baseModelURL}/nms-yolov8.onnx`, ["Loading NMS model", setLoading]);
      const nms = await InferenceSession.create(arrBufNMS);

      // Warm up the main model with an all-zero input
      setLoading({ text: "Warming up model...", progress: null });
      const tensor = new Tensor("float32", new Float32Array(modelInputShape.reduce((a, b) => a * b)), modelInputShape);
      await yolov8.run({ images: tensor });

      sessionRef.current = { net: yolov8, nms: nms };
      setLoading(null);
    };

    // Get the camera stream
    const startCamera = async () => {
      try {
        stream = await navigator.mediaDevices.getUserMedia({ video: true });
        if (stopped) {
          stream.getTracks().forEach((track) => track.stop()); // unmounted while waiting
          return;
        }
        if (videoRef.current) {
          videoRef.current.srcObject = stream;
          await videoRef.current.play();
        }
      } catch (error) {
        console.error("Error accessing the camera:", error);
      }
    };
    startCamera();

    // Draw the current frame, run detection on it once the model is ready, then schedule
    // the next frame. Awaiting detectImage keeps only one inference in flight at a time.
    const drawToCanvas = async () => {
      const video = videoRef.current;
      const canvas = canvasRef.current;
      try {
        // readyState >= 2 (HAVE_CURRENT_DATA): a frame is available and videoWidth/videoHeight are set
        if (video && canvas && video.readyState >= 2) {
          // Setting width/height clears the canvas and resets its state, so only do it on a size change
          if (canvas.width !== video.videoWidth) canvas.width = video.videoWidth;
          if (canvas.height !== video.videoHeight) canvas.height = video.videoHeight;
          canvas.getContext("2d").drawImage(video, 0, 0, canvas.width, canvas.height);

          if (sessionRef.current) {
            // Copy the frame into the hidden <img>; loading a new src is asynchronous, so wait for decoding
            const image = imageRef.current;
            image.src = canvas.toDataURL();
            await image.decode();
            await detectImage(image, canvas, sessionRef.current, topk, iouThreshold, scoreThreshold, modelInputShape);
          }
        }
      } catch (err) {
        console.error("Frame processing failed:", err);
      }
      if (!stopped) rafId = requestAnimationFrame(drawToCanvas);
    };
    rafId = requestAnimationFrame(drawToCanvas);

    return () => {
      stopped = true;
      cancelAnimationFrame(rafId); // stop the render loop
      if (stream) stream.getTracks().forEach((track) => track.stop()); // stop the camera stream
    };
  }, []);

  return (
    <div className="video-container">
      <video ref={videoRef} muted playsInline style={{ display: "none" }} />
      <canvas ref={canvasRef} />
      <img ref={imageRef} src="#" alt="" style={{ display: "none" }} />
    </div>
  );
};

export default CameraToCanvas;
```
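Because the loop above awaits each detection, the canvas only refreshes as fast as the model runs. An alternative design (an assumption, not what the code above does) is to keep drawing video frames on every animation frame and start a new detection only when the previous one has finished, skipping frames in between. A minimal sketch of that gate; `createInferenceGate` is a hypothetical helper:

```javascript
// Hypothetical helper: runs at most one async job at a time.
// tryRun() starts the job only if nothing is in flight; otherwise the frame is skipped.
function createInferenceGate() {
  let busy = false;
  let skipped = 0;
  return {
    tryRun(job) {
      if (busy) {
        skipped += 1;
        return false; // previous inference still running: drop this frame
      }
      busy = true;
      Promise.resolve()
        .then(job) // job may return a promise (e.g. detectImage)
        .catch((err) => console.error("inference failed:", err))
        .finally(() => {
          busy = false; // ready for the next frame
        });
      return true;
    },
    get busy() { return busy; },
    get skipped() { return skipped; },
  };
}

// Inside a requestAnimationFrame callback, after drawImage(...):
// gate.tryRun(() => detectImage(image, canvas, sessionRef.current, topk, iouThreshold, scoreThreshold, modelInputShape));
```

The trade-off is that boxes then lag the video slightly, and since each new frame overwrites the canvas, the latest boxes have to be redrawn on every frame (for example on a separate overlay canvas).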

The function that runs the model on a frame (`utils/detect.js`):

```javascript
import { Tensor } from "onnxruntime-web";
// preprocessing() and renderBoxes() are the helpers from the previous article;
// they must be defined in or imported into this module.

export const detectImage = async (
  image,
  canvas,
  session,
  topk,
  iouThreshold,
  scoreThreshold,
  inputShape
) => {
  const [modelWidth, modelHeight] = inputShape.slice(2);
  const [input, xRatio, yRatio] = preprocessing(image, modelWidth, modelHeight);

  try {
    const tensor = new Tensor("float32", input.data32F, inputShape); // to ort.Tensor
    const config = new Tensor(
      "float32",
      new Float32Array([
        topk, // topk per class
        iouThreshold, // iou threshold
        scoreThreshold, // score threshold
      ])
    ); // nms config tensor

    const { output0 } = await session.net.run({ images: tensor }); // run session and get output layer
    const { selected } = await session.nms.run({ detection: output0, config: config }); // perform nms and filter boxes

    const boxes = [];
    // each row of `selected`: [cx, cy, w, h, one score per class...]
    for (let idx = 0; idx < selected.dims[1]; idx++) {
      const data = selected.data.slice(idx * selected.dims[2], (idx + 1) * selected.dims[2]); // get row
      const box = data.slice(0, 4);
      const scores = data.slice(4); // class probability scores
      const score = Math.max(...scores); // maximum probability score
      const label = scores.indexOf(score); // class id of the maximum score

      // center (cx, cy, w, h) -> top-left (x, y, w, h), scaled by the ratios from preprocessing
      const [x, y, w, h] = [
        (box[0] - 0.5 * box[2]) * xRatio, // left
        (box[1] - 0.5 * box[3]) * yRatio, // top
        box[2] * xRatio, // width
        box[3] * yRatio, // height
      ];

      boxes.push({
        label: label,
        probability: score,
        bounding: [x, y, w, h],
      }); // collect boxes to draw later
    }

    if (boxes.length > 0) {
      renderBoxes(canvas, boxes); // draw boxes
    }
  } finally {
    input.delete(); // free the OpenCV Mat even if inference throws
  }
};
```

Testing it out: processing the live video stream is still laggy, so I settled for testing on a mostly static scene.

![Detection result on a static scene](https://i-blog.csdnimg.cn/direct/8ef62b1337654cf395452873e9cb41b4.png)
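The per-row post-processing inside `detectImage` is plain array math, so it can be pulled out and tested without a browser or a model. A minimal sketch of that loop as a standalone function; `decodeSelected` is a hypothetical name, and it assumes, as `detectImage` does, that each row of `selected` is `[cx, cy, w, h]` followed by one score per class, with `xRatio`/`yRatio` being whatever `preprocessing` returns:

```javascript
// Hypothetical helper mirroring the loop in detectImage.
// data: flat Float32Array (selected.data); numRows = selected.dims[1]; rowLen = selected.dims[2]
function decodeSelected(data, numRows, rowLen, xRatio, yRatio) {
  const boxes = [];
  for (let idx = 0; idx < numRows; idx++) {
    const row = data.subarray(idx * rowLen, (idx + 1) * rowLen); // view into the row, no copy
    const [cx, cy, w, h] = row.subarray(0, 4);
    const scores = row.subarray(4);

    // argmax over class scores (same result as Math.max(...scores) + indexOf, without spreading)
    let label = 0;
    for (let c = 1; c < scores.length; c++) {
      if (scores[c] > scores[label]) label = c;
    }

    boxes.push({
      label,
      probability: scores[label],
      // center format -> top-left corner, scaled with the preprocessing ratios
      bounding: [(cx - 0.5 * w) * xRatio, (cy - 0.5 * h) * yRatio, w * xRatio, h * yRatio],
    });
  }
  return boxes;
}
```

For example, a row `[100, 50, 20, 10, 0.25, 0.75, 0.5]` with `xRatio = 2` and `yRatio = 1.5` decodes to label `1`, probability `0.75`, and bounding box `[180, 67.5, 40, 15]`.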