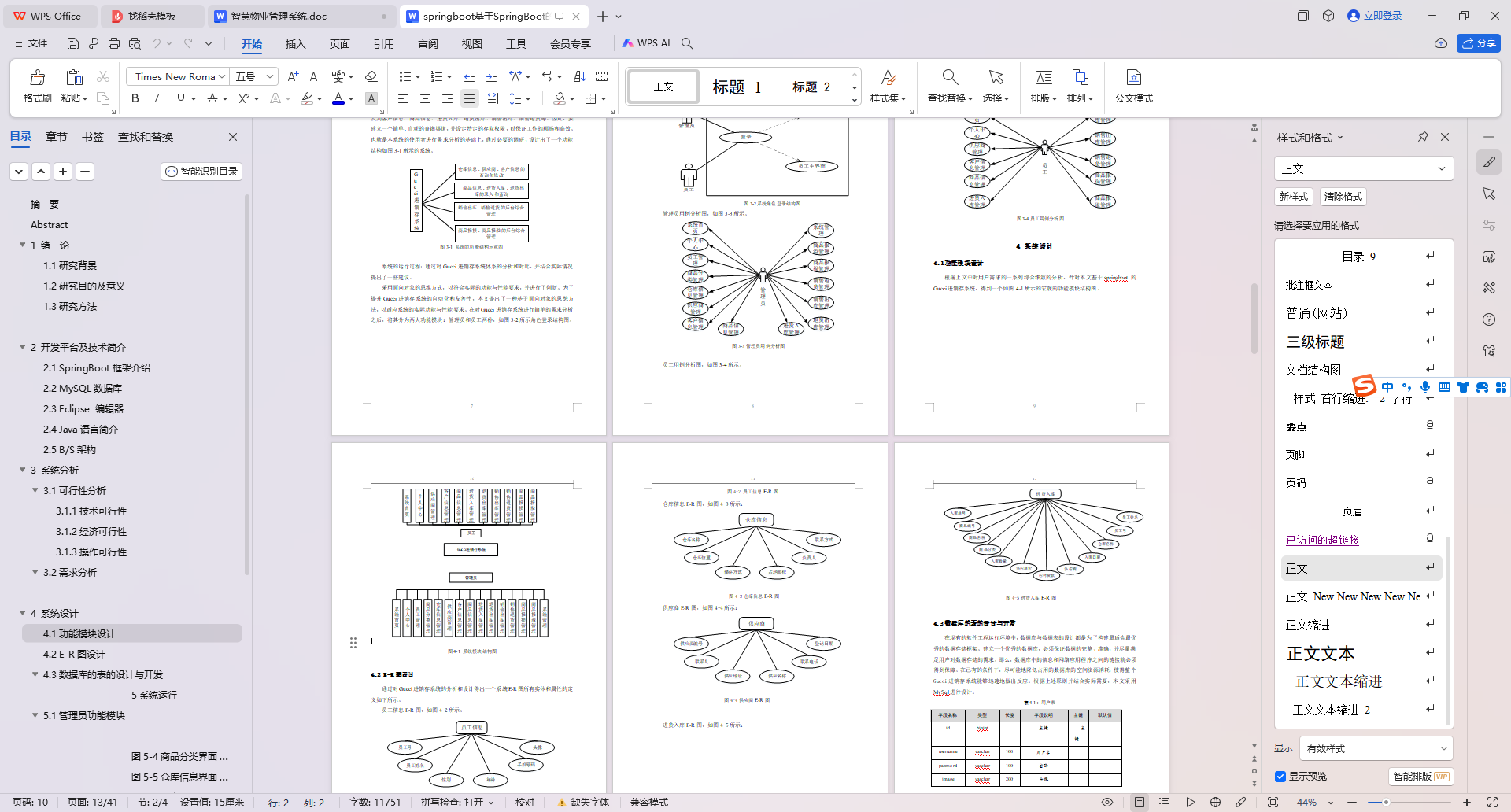

Basic workflow for building a neural network with Flux.jl

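```julia
using Flux, Statistics

# Generate data for the XOR problem
noisy = rand(Float32, 2, 200)  # 2×200 matrix: 200 random points in the unit square
truth = [xor(col[1] > 0.5, col[2] > 0.5) for col in eachcol(noisy)]  # 200-element Bool vector
target = Flux.onehotbatch(truth, [true, false])  # 2×200 OneHotMatrix: targets prepared for training
```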
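`Flux.onehotbatch` turns the 200 Boolean labels into a 2×200 matrix with exactly one `true` per column. The minimal sketch below shows the same layout by hand, in plain Julia with no Flux dependency. `onehot_manual` is a hypothetical helper, not part of Flux. Row 1 corresponds to the label `true` and row 2 to `false`, following the order of the class list `[true, false]`.

```julia
# Hypothetical helper, not Flux's implementation: builds a classes × samples
# Boolean matrix with a single `true` per column, in the row of that label.
function onehot_manual(labels::AbstractVector, classes::AbstractVector)
    M = falses(length(classes), length(labels))  # BitMatrix, all false
    for (j, lab) in enumerate(labels)
        i = findfirst(==(lab), classes)          # row index of this label
        i === nothing && error("label $lab is not in the class list")
        M[i, j] = true
    end
    return M
end

# Same XOR labelling rule as above, applied to four fixed points instead of random ones
pts = Float32[0.2 0.8 0.2 0.8;
              0.2 0.2 0.8 0.8]
labels = [xor(col[1] > 0.5, col[2] > 0.5) for col in eachcol(pts)]  # [false, true, true, false]
onehot_manual(labels, [true, false])  # columns: [0,1], [1,0], [1,0], [0,1]
```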

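```julia
# Define the model: a multilayer perceptron with one hidden layer of 3 neurons
model = Chain(
    Dense(2 => 3, tanh),  # hidden layer with tanh activation
    BatchNorm(3),         # batch normalization of the 3 hidden features
    Dense(3 => 2),        # output layer: 2 raw scores (logits), one per class
)
```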
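During training, `BatchNorm(3)` normalizes each of the 3 hidden features across the current mini-batch. It also keeps running averages of the mean and variance for use at inference time. The sketch below covers only the training-mode arithmetic and is written by hand, not taken from Flux's code. `batchnorm_train` is a hypothetical name, and the stabilizing constant ϵ = 1f-5 is an assumption.

```julia
using Statistics

# Hand-written sketch of training-mode batch normalisation (not Flux's code).
# x is features × samples; each feature (row) is standardised over the batch,
# then scaled by γ and shifted by β. ϵ = 1f-5 is an assumed small constant.
function batchnorm_train(x::AbstractMatrix, γ::AbstractVector, β::AbstractVector; ϵ=1f-5)
    μ = mean(x; dims=2)                    # per-feature batch mean
    σ² = var(x; dims=2, corrected=false)   # per-feature batch variance
    return γ .* (x .- μ) ./ sqrt.(σ² .+ ϵ) .+ β
end

h = Float32[1 2 3 4; 10 20 30 40; -1 0 1 2]  # 3 hidden features × 4 samples
y = batchnorm_train(h, ones(Float32, 3), zeros(Float32, 3))
# with γ = 1 and β = 0, every row of y has mean ≈ 0 and variance ≈ 1
```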

```julia
# Output of the untrained model
out1 = model(noisy)     # 2×200 matrix of logits
probs1 = softmax(out1)  # softmax turns each column into class probabilities
```
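`model(noisy)` returns a 2×200 matrix of unnormalized scores (logits). `softmax` then converts each column into probabilities that sum to 1, since Flux's `softmax` works along `dims=1` by default. The sketch below reproduces that pipeline by hand under a few assumptions:

- Random weights from a fixed seed stand in for the model's parameters.
- The `BatchNorm` layer is omitted for brevity.
- `softmax_cols` is a hypothetical helper, not Flux's function.

```julia
using Random

# Numerically stable column-wise softmax (hypothetical helper): subtracting
# each column's maximum before exp avoids overflow without changing the result.
function softmax_cols(z::AbstractMatrix)
    e = exp.(z .- maximum(z; dims=1))
    return e ./ sum(e; dims=1)
end

# Hand-rolled stand-in for Dense(2 => 3, tanh) followed by Dense(3 => 2);
# random weights from a fixed seed, BatchNorm omitted for brevity.
rng = MersenneTwister(42)
W1, b1 = randn(rng, Float32, 3, 2), zeros(Float32, 3)
W2, b2 = randn(rng, Float32, 2, 3), zeros(Float32, 2)
x = rand(rng, Float32, 2, 5)          # 5 samples with 2 features each

h = tanh.(W1 * x .+ b1)               # hidden activations: 3×5
logits = W2 * h .+ b2                 # raw class scores: 2×5
p = softmax_cols(logits)              # class probabilities: each column sums to 1
```

Subtracting the column maximum matters in practice. Without it, a column such as `[1000.0, 1000.0]` would overflow `exp` to `Inf` and produce `NaN` instead of `[0.5, 0.5]`.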

```julia
# Create the data loader: shuffled mini-batches of 64 samples
loader = Flux.DataLoader((noisy, target), batchsize=64, shuffle=true)
```
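With 200 samples and `batchsize=64`, each pass over `loader` yields four mini-batches of 64, 64, 64 and 8 columns. Flux's `DataLoader` keeps the final partial batch by default (`partial=true`), and with `shuffle=true` it reshuffles before every pass. The sketch below shows the index bookkeeping behind one such epoch. It assumes only the `Random` standard library, and `minibatch_indices` is hypothetical, not Flux's code.

```julia
using Random

# Hypothetical helper (not Flux's code): one epoch of shuffled mini-batch
# indices. Shuffles 1:n once, then cuts the permutation into consecutive
# chunks of `batchsize`; the last chunk may be smaller.
function minibatch_indices(n::Integer, batchsize::Integer; rng=Random.default_rng())
    perm = randperm(rng, n)
    return [perm[i:min(i + batchsize - 1, n)] for i in 1:batchsize:n]
end

batches = minibatch_indices(200, 64)
length.(batches)  # → [64, 64, 64, 8]

# Each index vector would select the matching columns of the data, e.g.
# x, y = noisy[:, idx], target[:, idx]
```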

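```julia
# Set up the optimizer: Adam with learning rate 0.01
optim = Flux.setup(Flux.Adam(0.01), model)  # Adam, an adaptive variant of stochastic gradient descent
```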
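`Flux.setup(Flux.Adam(0.01), model)` creates optimizer state (moment estimates) for every trainable array in `model`. Each `Flux.update!` call then applies one Adam step. The sketch below shows the standard Adam update for a single scalar parameter under these assumptions:

- It uses the commonly quoted constants β₁ = 0.9, β₂ = 0.999 and ϵ = 1e-8.
- It uses the learning rate 0.01 from above.
- `AdamState`, `adam_step!` and `fit_toy` are hypothetical names.

It is not Flux's internal implementation.

```julia
# Hand-written sketch of the Adam update for one scalar parameter
# (hypothetical names; not Flux's internal code).
mutable struct AdamState
    m::Float64  # running mean of gradients (first moment)
    v::Float64  # running mean of squared gradients (second moment)
    t::Int      # number of steps taken, used for bias correction
end
AdamState() = AdamState(0.0, 0.0, 0)

function adam_step!(s::AdamState, θ, g; η=0.01, β1=0.9, β2=0.999, ϵ=1e-8)
    s.t += 1
    s.m = β1 * s.m + (1 - β1) * g
    s.v = β2 * s.v + (1 - β2) * g^2
    m̂ = s.m / (1 - β1^s.t)  # bias-corrected first moment
    v̂ = s.v / (1 - β2^s.t)  # bias-corrected second moment
    return θ - η * m̂ / (sqrt(v̂) + ϵ)
end

# Minimise the toy loss f(θ) = (θ - 3)², whose gradient is 2(θ - 3).
function fit_toy(nsteps)
    θ, s = 0.0, AdamState()
    for _ in 1:nsteps
        θ = adam_step!(s, θ, 2 * (θ - 3))
    end
    return θ
end

fit_toy(3000)  # ends close to the minimiser θ = 3
```

Because of the bias correction, the very first step moves the parameter by almost exactly η in the direction opposite to the gradient, whatever the gradient's magnitude.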

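```julia
# Training loop: iterate over the whole dataset 1000 times
losses = Float32[]  # loss of every mini-batch, kept for later inspection
for epoch in 1:1000
    for (x, y) in loader
        # Compute the loss and its gradient with respect to the model
        loss, grads = Flux.withgradient(model) do m
            y_hat = m(x)
            Flux.logitcrossentropy(y_hat, y)  # expects raw logits; applies log-softmax internally
        end
        Flux.update!(optim, model, grads[1])  # one Adam step on all parameters
        push!(losses, loss)
    end
end
```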
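Inside the loop, `Flux.withgradient` returns both the loss value and the gradient with respect to `model`. `Flux.logitcrossentropy` expects raw scores (logits) rather than probabilities, which is why the model ends in a plain `Dense(3 => 2)` layer with no softmax. The sketch below writes this loss out by hand for illustration. It assumes mean reduction over the batch, which is Flux's default aggregation. `logsoftmax_cols` and `logit_xent` are hypothetical helpers, not Flux's code.

```julia
# Numerically stable column-wise log-softmax (hypothetical helper).
function logsoftmax_cols(z::AbstractMatrix)
    zmax = maximum(z; dims=1)
    return z .- zmax .- log.(sum(exp.(z .- zmax); dims=1))
end

# Logit cross-entropy: -Σ y·log(softmax(ŷ)) per column, averaged over columns.
function logit_xent(ŷ::AbstractMatrix, y::AbstractMatrix)
    return sum(-sum(y .* logsoftmax_cols(ŷ); dims=1)) / size(ŷ, 2)
end

scores   = [0.0 2.0; 0.0 -2.0]  # raw scores: 2 classes × 2 samples
onehot_y = [1.0 1.0; 0.0 0.0]   # one-hot targets: both samples belong to class 1
logit_xent(scores, onehot_y)    # ≈ (log 2 + log(1 + e⁻⁴)) / 2 ≈ 0.3556
```

The first sample has equal scores and contributes log 2. The second is confidently correct and contributes almost nothing, so training pushes the logits of the correct class upward.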

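```julia
# Output of the trained model
out2 = model(noisy)
probs2 = softmax(out2)

# Compute accuracy: predict `true` wherever the probability of the first class (`true`) exceeds 0.5
accuracy = mean((probs2[1,:] .> 0.5) .== truth)
println("Accuracy: $(accuracy * 100)%")
```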
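With two classes, `probs2[1, :] .> 0.5` predicts `true` exactly when the first class has the larger probability. The accuracy above therefore matches an argmax-based accuracy, apart from exact ties. Flux's `onecold` performs this column-wise argmax decoding. The sketch below uses made-up probabilities and a hypothetical helper, `accuracy_argmax`, to show the two formulations agreeing.

```julia
using Statistics

# Hypothetical helper: decode each probability column to the class with the
# highest probability, then return the fraction of matches with the labels.
function accuracy_argmax(p::AbstractMatrix, labels::AbstractVector, classes::AbstractVector)
    predicted = [classes[argmax(col)] for col in eachcol(p)]
    return mean(predicted .== labels)
end

# Made-up probabilities for 4 samples; row 1 = class `true`, row 2 = `false`
p = [0.9 0.2 0.6 0.4;
     0.1 0.8 0.4 0.6]
labels = [true, false, false, false]

accuracy_argmax(p, labels, [true, false])  # → 0.75 (sample 3 is misclassified)
mean((p[1, :] .> 0.5) .== labels)          # → 0.75, the threshold rule used above
```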

```julia
using Plots  # to draw the figure shown above

p_true = scatter(noisy[1,:], noisy[2,:], zcolor=truth, title="True classification", legend=false)
p_raw = scatter(noisy[1,:], noisy[2,:], zcolor=probs1[1,:], title="Untrained network", label="", clims=(0,1))
p_done = scatter(noisy[1,:], noisy[2,:], zcolor=probs2[1,:], title="Trained network", legend=false)

# Three panels side by side: true labels, untrained network, trained network
plot(p_true, p_raw, p_done, layout=(1,3), size=(1000,330))
```

Resulting classification:

![Classification results](https://i-blog.csdnimg.cn/direct/244e73a03cfb484fa7ed395470424462.png)