💝💝💝欢迎来到我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。

- 推荐:kwan 的首页,持续学习,不断总结,共同进步,活到老学到老

- 导航

- 檀越剑指大厂系列:全面总结 java 核心技术,jvm,并发编程 redis,kafka,Spring,微服务等

- 常用开发工具系列:常用的开发工具,IDEA,Mac,Alfred,Git,typora 等

- 数据库系列:详细总结了常用数据库 mysql 技术点,以及工作中遇到的 mysql 问题等

- 新空间代码工作室:提供各种软件服务,承接各种毕业设计,毕业论文等

- 懒人运维系列:总结好用的命令,解放双手不香吗?能用一个命令完成绝不用两个操作

- 数据结构与算法系列:总结数据结构和算法,不同类型针对性训练,提升编程思维,剑指大厂

非常期待和您一起在这个小小的网络世界里共同探索、学习和成长。💝💝💝 ✨✨ 欢迎订阅本专栏 ✨✨

博客目录

- 1.评分机制 TF\IDF

- 2.score 是如何被计算出来的

- 3.分析如何被匹配上

- 4.Doc value

- 5.query phase

- 6.replica shard 提升吞吐量

- 7.fetch phbase 工作流程

- 8.搜索参数小总结

- 9.bucket 和 metric

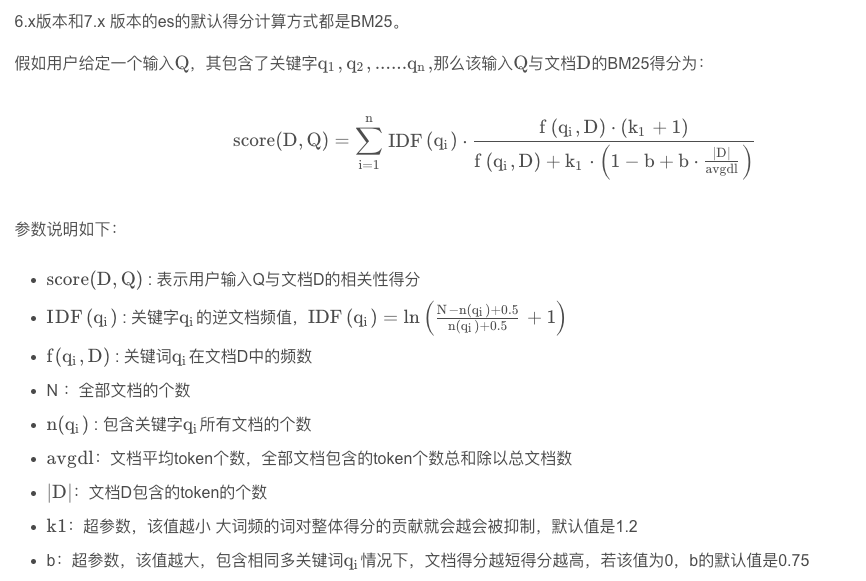

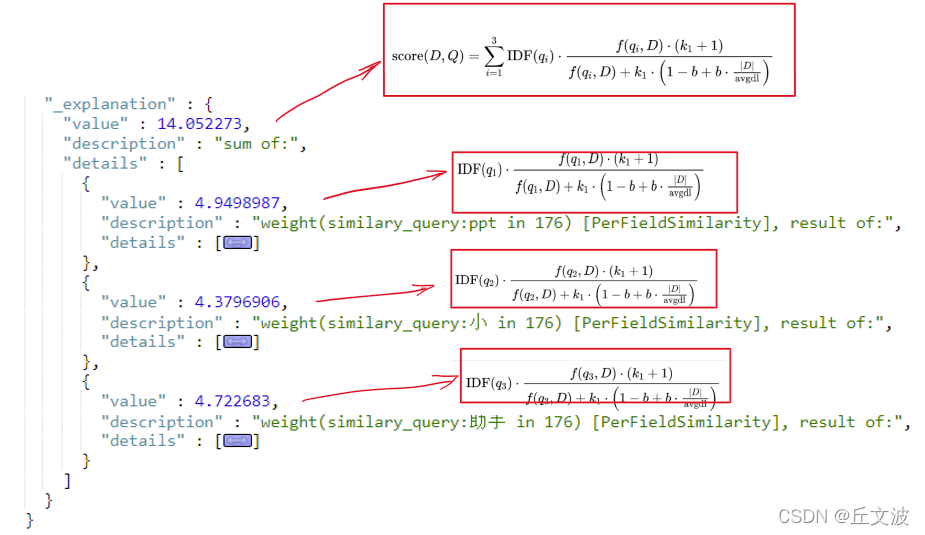

1.评分机制 TF\IDF

TF-IDF(Term Frequency-Inverse Document Frequency)是一种用于信息检索和文本挖掘的统计方法,用以评估一个词在一个文档集中一个特定文档的重要程度。这个评分机制考虑了一个词语在特定文档中的出现频率(Term Frequency,TF)和在整个文档集中的逆文档频率(Inverse Document Frequency,IDF)。

TF(Term Frequency)词频(Term Frequency,TF)表示一个词在一个特定文档中出现的频率。这通常是该词在文档中出现次数与文档的总词数之比。

IDF(Inverse Document Frequency)逆文档频率(Inverse Document Frequency,IDF)是一个词在文档集中的重要性的度量。如果一个词很常见,出现在很多文档中(例如“和”,“是”等),那么它可能不会携带有用的信息。IDF 度量就是为了降低这些常见词在文档相似性度量中的权重。

2.score 是如何被计算出来的

GET /book/_search?explain=true

{

"query": {

"match": {

"description": "java程序员"

}

}

}

返回

{

"took": 5,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 2,

"relation": "eq"

},

"max_score": 2.137549,

"hits": [

{

"_shard": "[book][0]",

"_node": "MDA45-r6SUGJ0ZyqyhTINA",

"_index": "book",

"_type": "_doc",

"_id": "3",

"_score": 2.137549,

"_source": {

"name": "spring开发基础",

"description": "spring 在java领域非常流行,java程序员都在用。",

"studymodel": "201001",

"price": 88.6,

"timestamp": "2019-08-24 19:11:35",

"pic": "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags": ["spring", "java"]

},

"_explanation": {

"value": 2.137549,

"description": "sum of:",

"details": [

{

"value": 0.7936629,

"description": "weight(description:java in 0) [PerFieldSimilarity], result of:",

"details": [

{

"value": 0.7936629,

"description": "score(freq=2.0), product of:",

"details": [

{

"value": 2.2,

"description": "boost",

"details": []

},

{

"value": 0.47000363,

"description": "idf, computed as log(1 + (N - n + 0.5) / (n + 0.5)) from:",

"details": [

{

"value": 2,

"description": "n, number of documents containing term",

"details": []

},

{

"value": 3,

"description": "N, total number of documents with field",

"details": []

}

]

},

{

"value": 0.7675597,

"description": "tf, computed as freq / (freq + k1 * (1 - b + b * dl / avgdl)) from:",

"details": [

{

"value": 2.0,

"description": "freq, occurrences of term within document",

"details": []

},

{

"value": 1.2,

"description": "k1, term saturation parameter",

"details": []

},

{

"value": 0.75,

"description": "b, length normalization parameter",

"details": []

},

{

"value": 12.0,

"description": "dl, length of field",

"details": []

},

{

"value": 35.333332,

"description": "avgdl, average length of field",

"details": []

}

]

}

]

}

]

},

{

"value": 1.3438859,

"description": "weight(description:程序员 in 0) [PerFieldSimilarity], result of:",

"details": [

{

"value": 1.3438859,

"description": "score(freq=1.0), product of:",

"details": [

{

"value": 2.2,

"description": "boost",

"details": []

},

{

"value": 0.98082924,

"description": "idf, computed as log(1 + (N - n + 0.5) / (n + 0.5)) from:",

"details": [

{

"value": 1,

"description": "n, number of documents containing term",

"details": []

},

{

"value": 3,

"description": "N, total number of documents with field",

"details": []

}

]

},

{

"value": 0.6227967,

"description": "tf, computed as freq / (freq + k1 * (1 - b + b * dl / avgdl)) from:",

"details": [

{

"value": 1.0,

"description": "freq, occurrences of term within document",

"details": []

},

{

"value": 1.2,

"description": "k1, term saturation parameter",

"details": []

},

{

"value": 0.75,

"description": "b, length normalization parameter",

"details": []

},

{

"value": 12.0,

"description": "dl, length of field",

"details": []

},

{

"value": 35.333332,

"description": "avgdl, average length of field",

"details": []

}

]

}

]

}

]

}

]

}

},

{

"_shard": "[book][0]",

"_node": "MDA45-r6SUGJ0ZyqyhTINA",

"_index": "book",

"_type": "_doc",

"_id": "2",

"_score": 0.57961315,

"_source": {

"name": "java编程思想",

"description": "java语言是世界第一编程语言,在软件开发领域使用人数最多。",

"studymodel": "201001",

"price": 68.6,

"timestamp": "2019-08-25 19:11:35",

"pic": "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags": ["java", "dev"]

},

"_explanation": {

"value": 0.57961315,

"description": "sum of:",

"details": [

{

"value": 0.57961315,

"description": "weight(description:java in 0) [PerFieldSimilarity], result of:",

"details": [

{

"value": 0.57961315,

"description": "score(freq=1.0), product of:",

"details": [

{

"value": 2.2,

"description": "boost",

"details": []

},

{

"value": 0.47000363,

"description": "idf, computed as log(1 + (N - n + 0.5) / (n + 0.5)) from:",

"details": [

{

"value": 2,

"description": "n, number of documents containing term",

"details": []

},

{

"value": 3,

"description": "N, total number of documents with field",

"details": []

}

]

},

{

"value": 0.56055,

"description": "tf, computed as freq / (freq + k1 * (1 - b + b * dl / avgdl)) from:",

"details": [

{

"value": 1.0,

"description": "freq, occurrences of term within document",

"details": []

},

{

"value": 1.2,

"description": "k1, term saturation parameter",

"details": []

},

{

"value": 0.75,

"description": "b, length normalization parameter",

"details": []

},

{

"value": 19.0,

"description": "dl, length of field",

"details": []

},

{

"value": 35.333332,

"description": "avgdl, average length of field",

"details": []

}

]

}

]

}

]

}

]

}

}

]

}

}

3.分析如何被匹配上

分析一个 document 是如何被匹配上的

- 最终得分

- IDF 得分

GET /book/_explain/3

{

"query": {

"match": {

"description": "java程序员"

}

}

}

4.Doc value

搜索的时候,要依靠倒排索引;排序的时候,需要依靠正排索引,看到每个 document 的每个 field,然后进行排序,所谓的正排索引,其实就是 doc values

在建立索引的时候,一方面会建立倒排索引,以供搜索用;一方面会建立正排索引,也就是 doc values,以供排序,聚合,过滤等操作使用

doc values 是被保存在磁盘上的,此时如果内存足够,os 会自动将其缓存在内存中,性能还是会很高;如果内存不足够,os 会将其写入磁盘上

倒排索引

doc1: hello world you and me

doc2: hi, world, how are you

| term | doc1 | doc2 |

|---|---|---|

| hello | * | |

| world | * | * |

| you | * | * |

| and | * | |

| me | * | |

| hi | * | |

| how | * | |

| are | * |

搜索时:

hello you --> hello, you

hello --> doc1

you --> doc1,doc2

doc1: hello world you and me

doc2: hi, world, how are you

sort by 出现问题

正排索引

doc1: { “name”: “jack”, “age”: 27 }

doc2: { “name”: “tom”, “age”: 30 }

| document | name | age |

|---|---|---|

| doc1 | jack | 27 |

| doc2 | tom | 30 |

5.query phase

-

搜索请求发送到某一个 coordinate node,构构建一个 priority queue,长度以 paging 操作 from 和 size 为准,默认为 10

-

coordinate node 将请求转发到所有 shard,每个 shard 本地搜索,并构建一个本地的 priority queue

-

各个 shard 将自己的 priority queue 返回给 coordinate node,并构建一个全局的 priority queue

6.replica shard 提升吞吐量

replica shard 如何提升搜索吞吐量

一次请求要打到所有 shard 的一个 replica/primary 上去,如果每个 shard 都有多个 replica,那么同时并发过来的搜索请求可以同时打到其他的 replica 上去

7.fetch phbase 工作流程

-

coordinate node 构建完 priority queue 之后,就发送 mget 请求去所有 shard 上获取对应的 document

-

各个 shard 将 document 返回给 coordinate node

-

coordinate node 将合并后的 document 结果返回给 client 客户端

一般搜索,如果不加 from 和 size,就默认搜索前 10 条,按照_score 排序

8.搜索参数小总结

preference:

决定了哪些 shard 会被用来执行搜索操作

_primary, _primary_first, _local, _only_node:xyz, _prefer_node:xyz, _shards:2,3

bouncing results 问题,两个 document 排序,field 值相同;不同的 shard 上,可能排序不同;每次请求轮询打到不同的 replica shard 上;每次页面上看到的搜索结果的排序都不一样。这就是 bouncing result,也就是跳跃的结果。

搜索的时候,是轮询将搜索请求发送到每一个 replica shard(primary shard),但是在不同的 shard 上,可能 document 的排序不同

解决方案就是将 preference 设置为一个字符串,比如说 user_id,让每个 user 每次搜索的时候,都使用同一个 replica shard 去执行,就不会看到 bouncing results 了

timeout:

主要就是限定在一定时间内,将部分获取到的数据直接返回,避免查询耗时过长

routing:

document 文档路由,_id 路由,routing=user_id,这样的话可以让同一个 user 对应的数据到一个 shard 上去

search_type:

default:query_then_fetch

dfs_query_then_fetch,可以提升 revelance sort 精准度

9.bucket 和 metric

bucket:一个数据分组

city name

北京 张三

北京 李四

天津 王五

天津 赵六

天津 王麻子

划分出来两个 bucket,一个是北京 bucket,一个是天津 bucket

北京 bucket:包含了 2 个人,张三,李四

上海 bucket:包含了 3 个人,王五,赵六,王麻子

metric:对一个数据分组执行的统计

metric,就是对一个 bucket 执行的某种聚合分析的操作,比如说求平均值,求最大值,求最小值

select count(*) from book group by studymodel

bucket:group by studymodel --> 那些 studymodel 相同的数据,就会被划分到一个 bucket 中metric:count(*),对每个 user_id bucket 中所有的数据,计算一个数量。还有 avg(),sum(),max(),min()

觉得有用的话点个赞

👍🏻呗。

❤️❤️❤️本人水平有限,如有纰漏,欢迎各位大佬评论批评指正!😄😄😄💘💘💘如果觉得这篇文对你有帮助的话,也请给个点赞、收藏下吧,非常感谢!👍 👍 👍

🔥🔥🔥Stay Hungry Stay Foolish 道阻且长,行则将至,让我们一起加油吧!🌙🌙🌙