Configuring three Elasticsearch servers

Installation package

elasticsearch.j2

Errors

#--- Running rsync fails with the following error

[root@es1 ~]# rsync -av /etc/hosts 192.168.29.172:/etc/hosts

root@192.168.29.172's password:

bash: rsync: command not found

rsync: connection unexpectedly closed (0 bytes received so far) [sender]

rsync error: error in rsync protocol data stream (code 12) at io.c(226) [sender=3.1.3]

Solution

==rsync must be installed on both the sending and the receiving machine==
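The `bash: rsync: command not found` line in the transcript is printed by the remote shell: rsync starts a second `rsync` process on the destination over SSH, so the binary has to exist there as well. Below is a minimal sketch of a pre-flight check. `have_cmd` is a hypothetical helper name, and the remote command is only shown as a comment because it needs SSH access to 192.168.29.172.

```shell
#!/usr/bin/env bash
# have_cmd is a hypothetical helper: it succeeds only if the command is on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Local check before attempting a transfer.
if have_cmd rsync; then
    echo "rsync is installed locally"
else
    echo "rsync is missing locally; install it with: dnf install -y rsync"
fi

# The same check has to pass on the destination, for example:
#   ssh root@192.168.29.172 'command -v rsync || dnf install -y rsync'
```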

Environment: Rocky Linux 8.6

Hosts: es1, es2, es3, nfs-server

es1: 192.168.29.171

es2: 192.168.29.172

es3: 192.168.29.173

nfs-server: 192.168.29.139

#----------------- Configure the local yum repository

#------------ Set up nfs-server

[root@harbor ~]# yum install -y nfs-utils

# View the nfs-server exports file

[root@harbor ~]# cat /etc/exports

/var/ftp 192.168.29.0/24(rw,no_root_squash)

[root@harbor ~]# systemctl enable nfs-server --now

#------------ Set up nfs-server (end)

[root@harbor ftp]# createrepo --update /var/ftp/elk

Directory walk started

Directory walk done - 5 packages

Loaded information about 0 packages

Temporary output repo path: /var/ftp/elk/.repodata/

Preparing sqlite DBs

Pool started (with 5 workers)

Pool finished

[root@harbor ~]# ls /var/ftp/elk

elasticsearch-7.17.8-x86_64.rpm kibana-7.17.8-x86_64.rpm metricbeat-7.17.8-x86_64.rpm

filebeat-7.17.8-x86_64.rpm logstash-7.17.8-x86_64.rpm repodata

#----------------- Configure the local yum repository (end)

#--------- Install elasticsearch on each of the three machines

#----- yum repository file

[root@es1 ~]# cat /etc/yum.repos.d/extra.repo

[es]

name=Rocky Linux $releasever - es

#mirrorlist=https://mirrors.rockylinux.org/mirrorlist?arch=$basearch&repo=BaseOS-$releasever

baseurl=ftp://192.168.29.139/elk/

gpgcheck=0

enabled=1

countme=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-rockyofficial

[root@es1 ~]# dnf install -y elasticsearch

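# Cluster name (these settings go in /etc/elasticsearch/elasticsearch.yml)

cluster.name: elk-cluster

# Node name

node.name: es1

# Listen address

network.host: 0.0.0.0

# Seed hosts: the nodes this node contacts at startup to discover the other members of the cluster

discovery.seed_hosts: ["es1", "es2","es3"]

# Initial master nodes: the master-eligible nodes that take part in the first master election when the cluster is bootstrapped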

cluster.initial_master_nodes: ["es1", "es2","es3"]
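Only `node.name` differs between the three nodes; the other settings are identical. Because `discovery.seed_hosts` uses host names, every node must be able to resolve es1, es2 and es3 (for example through /etc/hosts). The following is a minimal sketch that prints the settings shown above for a given node. `render_es_config` is a hypothetical helper, not part of Elasticsearch.

```shell
#!/usr/bin/env bash
# render_es_config is a hypothetical helper; it prints the settings shown
# above with node.name set to the given host name.
render_es_config() {
    local node="$1"
    cat <<EOF
cluster.name: elk-cluster
node.name: ${node}
network.host: 0.0.0.0
discovery.seed_hosts: ["es1", "es2", "es3"]
cluster.initial_master_nodes: ["es1", "es2", "es3"]
EOF
}

# Print the config for es2; redirect the output to a file to use it for real.
render_es_config es2
```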

[root@es1 ~]# systemctl enable elasticsearch --now

[root@es1 ~]# curl 192.168.29.171:9200

{

"name" : "es1",

"cluster_name" : "elk-cluster",

"cluster_uuid" : "_na_",

"version" : {

"number" : "7.17.8",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "120eabe1c8a0cb2ae87cffc109a5b65d213e9df1",

"build_date" : "2022-12-02T17:33:09.727072865Z",

"build_snapshot" : false,

"lucene_version" : "8.11.1",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}
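In the response above, `cluster_uuid` is `_na_`. Elasticsearch reports this placeholder while the node has not yet joined a formed cluster, for example before the other nodes are up. Here is a minimal sketch for checking it, assuming a hypothetical helper `cluster_formed` that reads the JSON from standard input and relies on sed rather than a real JSON parser.

```shell
#!/usr/bin/env bash
# cluster_formed (hypothetical helper): reads the root-endpoint JSON on stdin
# and succeeds only if cluster_uuid is present and is not the placeholder "_na_".
cluster_formed() {
    local uuid
    uuid="$(sed -n 's/.*"cluster_uuid"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1)"
    [ -n "$uuid" ] && [ "$uuid" != "_na_" ]
}

# Example with an inline sample; against a live node use:
#   curl -s 192.168.29.171:9200 | cluster_formed
sample='{ "name" : "es1", "cluster_uuid" : "_na_" }'
if printf '%s\n' "$sample" | cluster_formed; then
    echo "cluster formed"
else
    echo "cluster not formed yet"
fi
```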

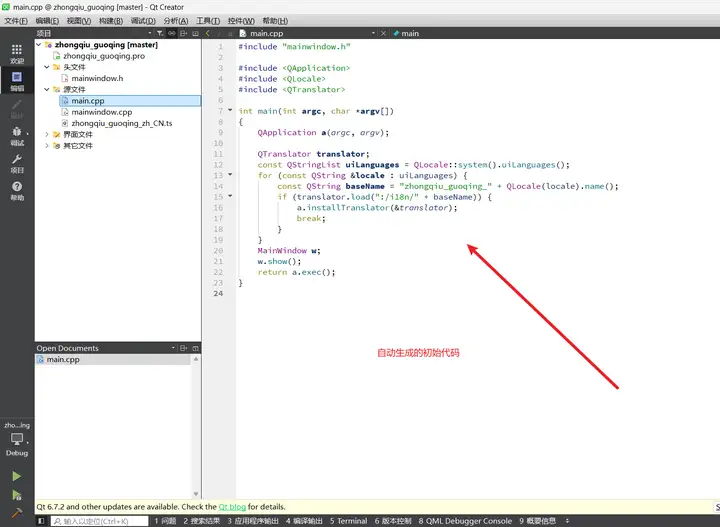

Deploying ES in batch with Ansible

[root@harbor es]# cat ansible.cfg

[defaults]

inventory = hostlist

host_key_checking = False

# elasticsearch.j2 takes the host name from an Ansible variable

node.name: {{ ansible_hostname }}

# Playbook that deploys ES

[root@harbor es]# cat deployes.yaml

---
- name: deploy es
  hosts: es
  tasks:
    - name: 'set /etc/hosts'
      copy:
        dest: /etc/hosts
        owner: root
        group: root
        mode: '0644'
        content: |
          127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
          ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
          {% for i in groups.es %}
          {{ hostvars[i].ansible_ens160.ipv4.address }} {{ hostvars[i].ansible_hostname }}
          {% endfor %}
    - name: install es
      dnf:
        name: elasticsearch
        state: latest
        update_cache: yes
    - name: copy config file
      template:
        src: elasticsearch.j2
        dest: /etc/elasticsearch/elasticsearch.yml
        owner: root
        group: elasticsearch
        # the service runs as the elasticsearch user, so the group needs read access;
        # 0600 with owner root would make the file unreadable to it
        mode: '0660'
    - name: update es service
      service:
        name: elasticsearch
        state: started
        enabled: yes

# Host list (inventory) file

[root@harbor es]# cat hostlist

[web]

192.168.29.161

192.168.29.162

[es]

192.168.29.171

192.168.29.172

192.168.29.173

[root@harbor es]# ansible-playbook deployes.yaml

# After the deployment finishes, check the cluster state

[root@es2 ~]# curl 192.168.29.172:9200/_cat/nodes?pretty

192.168.29.172 26 94 4 0.55 0.21 0.10 cdfhilmrstw * es2

192.168.29.171 12 93 4 0.09 0.11 0.08 cdfhilmrstw - es1

192.168.29.173 7 93 30 1.09 0.32 0.16 cdfhilmrstw - es3
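In the default `_cat/nodes` output, the column just before the node name marks the elected master with `*`; here that is es2. The sketch below extracts it with awk. `find_master` is a hypothetical helper, and it assumes the default column layout, where the last two fields are the master marker and the node name.

```shell
#!/usr/bin/env bash
# find_master (hypothetical helper): reads default _cat/nodes output on stdin
# and prints the name of the node whose master column is "*".
# Assumes the default layout: second-to-last field is the master marker,
# last field is the node name.
find_master() {
    awk '$(NF-1) == "*" { print $NF }'
}

# Sample taken from the output above; against a live cluster use:
#   curl -s '192.168.29.172:9200/_cat/nodes' | find_master
find_master <<'EOF'
192.168.29.172 26 94 4 0.55 0.21 0.10 cdfhilmrstw * es2
192.168.29.171 12 93 4 0.09 0.11 0.08 cdfhilmrstw - es1
192.168.29.173 7 93 30 1.09 0.32 0.16 cdfhilmrstw - es3
EOF
```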

Deploying the plugin page

[root@es1 ~]# dnf install -y nginx

[root@es1 ~]# systemctl enable nginx --now

Created symlink /etc/systemd/system/multi-user.target.wants/nginx.service → /usr/lib/systemd/system/nginx.service.

[root@es1 ~]# tar xf head.tar.gz -C /usr/share/nginx/html/

Authentication and proxy

[root@es1 conf.d]# cat /etc/nginx/default.d/es.conf

location ~* ^/es/(.*)$ {
    proxy_pass http://127.0.0.1:9200/$1;
    auth_basic "elastic admin";
    auth_basic_user_file /etc/nginx/auth-user;
}

[root@es1 conf.d]# dnf install -y httpd-tools

[root@es1 conf.d]# htpasswd -cm /etc/nginx/auth-user admin

[root@es1 conf.d]# systemctl restart nginx
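With this location block, a request for `/es/<rest>` is forwarded to `http://127.0.0.1:9200/<rest>` once basic authentication succeeds. The sketch below illustrates that path mapping only. `upstream_url` is a hypothetical helper, and for simplicity it matches `/es/` case-sensitively, whereas the `~*` modifier in the nginx config ignores case.

```shell
#!/usr/bin/env bash
# upstream_url (hypothetical helper): mirrors the location block above.
# A request path of the form /es/<rest> maps to http://127.0.0.1:9200/<rest>;
# any other path is not handled by this location, so the function fails.
upstream_url() {
    case "$1" in
        /es/*) printf 'http://127.0.0.1:9200/%s\n' "${1#/es/}" ;;
        *)     return 1 ;;
    esac
}

upstream_url /es/_cat/nodes

# Through nginx, the same request needs the account created with htpasswd
# (curl prompts for the password when only the user name is given):
#   curl -u admin http://192.168.29.171/es/_cat/nodes
```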