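Contents

1. Background

2. Environment Setup and Installation

2.1 Environment

2.2 Installation

2.2.1 Installing SearXNG

3. Implementation

Code

Output

Querying the model directly

4. References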

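1. Background

Large language models have brought a new wave of technical innovation. However, an LLM only knows what was in its training corpus, so its knowledge lags behind current events and it is prone to serious hallucination. Retrieval-augmented generation (RAG) mitigates this: relevant knowledge is supplied to the model as part of the prompt, and the LLM then turns it into a polished answer for the user.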
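To make "knowledge supplied as part of the prompt" concrete, here is a minimal, framework-free sketch of the prompt-stuffing step: retrieved snippets are numbered and placed in the system message, followed by the user's question. The helper name `build_rag_messages` and the exact message layout are illustrative assumptions, not the author's code; the LangChain pipeline later in this post performs the equivalent step with `create_stuff_documents_chain`.

```python
from typing import Dict, List


def build_rag_messages(question: str, snippets: List[str]) -> List[Dict[str, str]]:
    """Stuff retrieved snippets into an OpenAI-style chat message list (illustrative helper)."""
    # Number each snippet so the sources can be told apart in the prompt
    context = "\n\n".join(f"[{i}] {s.strip()}" for i, s in enumerate(snippets, start=1))
    system = (
        "Answer the question using the retrieved information below. "
        "If it is not sufficient, say so.\n\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_rag_messages("Example question?", ["first retrieved passage", "second retrieved passage"])
print(messages[0]["content"])
```

The model never sees the search engine or the vector store; it only sees this enlarged prompt, which is why retrieval quality directly bounds answer quality.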

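2. Environment Setup and Installation

2.1 Environment

The whole environment is set up on my local machine: Ubuntu 20.04 running under WSL2, with Qwen/Qwen2-7B-Instruct as the LLM, served by vLLM through an OpenAI-compatible API. LangChain is the 0.2 release (the latest at the time of writing). Because SearXNG is deployed with Docker, make sure Docker Desktop is installed.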
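Because the LangChain client later refers to the model as `Qwen2-7B-Instruct`, that name has to match what the vLLM server reports. The following standard-library sketch checks this, assuming the server listens on `localhost:8000` and exposes the OpenAI-compatible `GET /v1/models` endpoint. The launch command is not shown in this post; the served name was presumably set with vLLM's `--served-model-name` option. Both helper names are hypothetical.

```python
import json
from typing import List
from urllib.request import urlopen


def served_model_ids(payload: dict) -> List[str]:
    """Extract model ids from an OpenAI-style /v1/models response."""
    return [m["id"] for m in payload.get("data", []) if "id" in m]


def fetch_served_models(base_url: str = "http://localhost:8000/v1", timeout: float = 5.0) -> List[str]:
    """Ask the running OpenAI-compatible server which models it serves (needs a live server)."""
    with urlopen(f"{base_url.rstrip('/')}/models", timeout=timeout) as resp:
        return served_model_ids(json.load(resp))
```

With the server running, `"Qwen2-7B-Instruct" in fetch_served_models()` should be true; if it is not, the `model_name` passed to `ChatOpenAI` later has to be changed to one of the returned ids.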

2.2 Installation

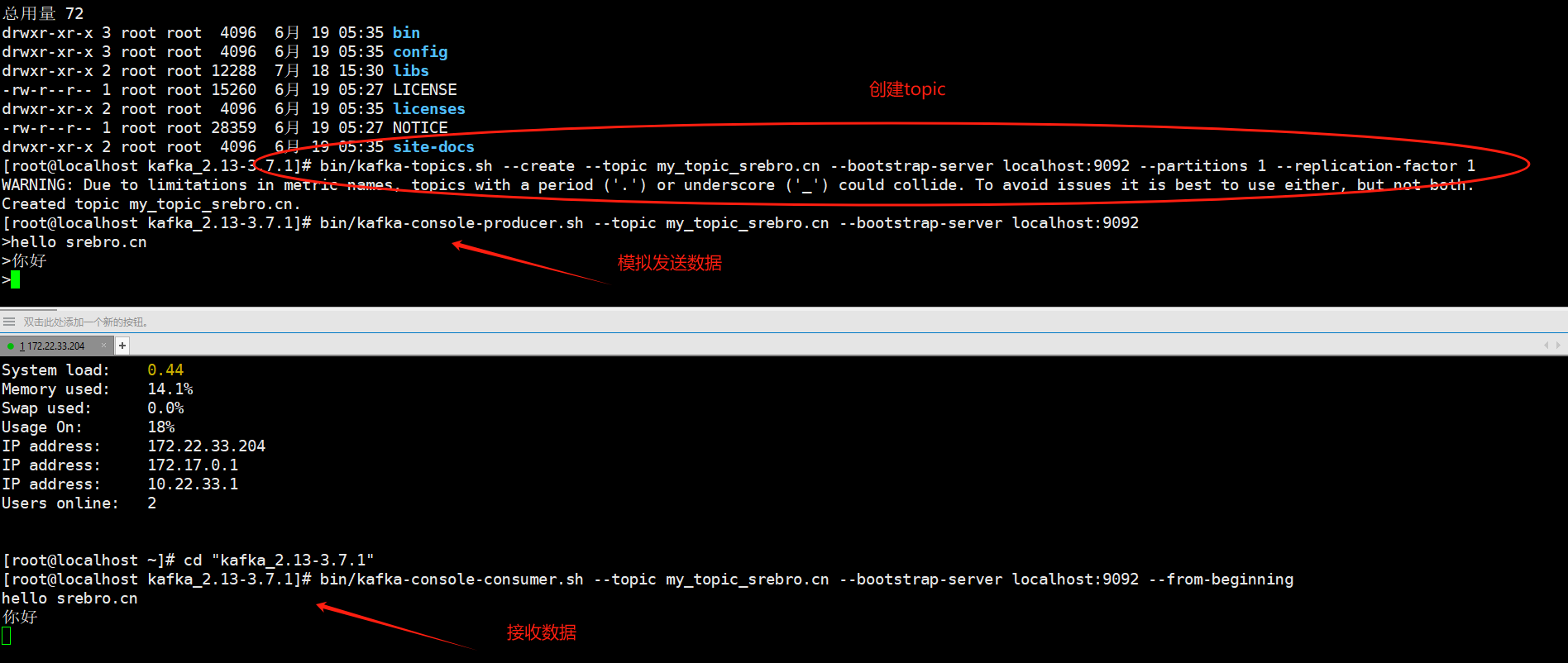

2.2.1 Installing SearXNG

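- Download

```shell
cd /opt/softwares
git clone https://github.com/searxng/searxng-docker.git
cd searxng-docker
```

- docker-compose configuration

The SearXNG stack has three components: caddy provides a reverse proxy, redis stores sessions, and searxng provides the search service. No reverse proxy is needed here because the service is called directly, so the caddy section is commented out. The port mapping `0.0.0.0:8080:8080` follows the host-then-container order: the left port is on the host machine and the right port is inside the Docker container.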
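The short-syntax port entry reads left to right as optional host IP, host port, container port. The small parser below makes that order explicit. It is illustrative only: the function and type names are hypothetical, and it handles only the `[IP:]HOST:CONTAINER` forms. It does not handle port ranges, `/udp` suffixes, or IPv6 addresses.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]
    host_port: int
    container_port: int


def parse_port_mapping(spec: str) -> PortMapping:
    """Parse a Compose short-syntax mapping such as "0.0.0.0:8080:8080" or "8080:8080"."""
    parts = spec.split(":")
    if len(parts) == 3:  # IP:HOST:CONTAINER
        ip, host, container = parts
        return PortMapping(ip, int(host), int(container))
    if len(parts) == 2:  # HOST:CONTAINER, bound on all interfaces
        host, container = parts
        return PortMapping(None, int(host), int(container))
    raise ValueError(f"unsupported port spec: {spec!r}")


print(parse_port_mapping("0.0.0.0:8080:8080"))
# PortMapping(host_ip='0.0.0.0', host_port=8080, container_port=8080)
```

By the same reading, `"0.0.0.0:9090:8080"` would publish the container's port 8080 on host port 9090, and the `searx_host` URL used in the code would then need to point at port 9090.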

```yaml
version: "3.7"

services:
  # caddy:
  #   container_name: caddy
  #   image: docker.io/library/caddy:2-alpine
  #   network_mode: host
  #   restart: unless-stopped
  #   volumes:
  #     - ./Caddyfile:/etc/caddy/Caddyfile:ro
  #     - caddy-data:/data:rw
  #     - caddy-config:/config:rw
  #   environment:
  #     - SEARXNG_HOSTNAME=${SEARXNG_HOSTNAME:-http://localhost:80}
  #     - SEARXNG_TLS=${LETSENCRYPT_EMAIL:-internal}
  #   cap_drop:
  #     - ALL
  #   cap_add:
  #     - NET_BIND_SERVICE
  #   logging:
  #     driver: "json-file"
  #     options:
  #       max-size: "1m"
  #       max-file: "1"

  redis:
    container_name: redis
    image: docker.io/valkey/valkey:7-alpine
    command: valkey-server --save 30 1 --loglevel warning
    restart: unless-stopped
    networks:
      - searxng
    volumes:
      - valkey-data2:/data
    cap_drop:
      - ALL
    cap_add:
      - SETGID
      - SETUID
      - DAC_OVERRIDE
    logging:
      driver: "json-file"
      options:
        max-size: "1m"
        max-file: "1"

  searxng:
    container_name: searxng
    image: docker.io/searxng/searxng:latest
    restart: unless-stopped
    networks:
      - searxng
    ports:
      - "0.0.0.0:8080:8080"
    volumes:
      - ./searxng:/etc/searxng:rw
    environment:
      - SEARXNG_BASE_URL=https://${SEARXNG_HOSTNAME:-localhost}/
    cap_drop:
      - ALL
    cap_add:
      - CHOWN
      - SETGID
      - SETUID
    logging:
      driver: "json-file"
      options:
        max-size: "1m"
        max-file: "1"

networks:
  searxng:

volumes:
  caddy-data:
  caddy-config:
  valkey-data2:
```

- SearXNG configuration

```shell
# Generate a secret key
sed -i "s|ultrasecretkey|$(openssl rand -hex 32)|g" searxng/settings.yml

# Set limiter to false and configure formats
vim searxng/settings.yml
```
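The `sed` + `openssl rand -hex 32` command simply replaces the `ultrasecretkey` placeholder with 64 random hex characters. If `openssl` is not available, a Python equivalent could look like the sketch below. The helper name is hypothetical, and it assumes the placeholder is still present in `searxng/settings.yml`, that is, that the `sed` step has not already been run.

```python
import secrets
from pathlib import Path


def replace_secret_key(settings_path: str, placeholder: str = "ultrasecretkey") -> str:
    """Replace the placeholder in settings.yml with a random key and return the key."""
    path = Path(settings_path)
    text = path.read_text(encoding="utf-8")
    if placeholder not in text:
        raise ValueError(f"placeholder {placeholder!r} not found in {settings_path}")
    key = secrets.token_hex(32)  # 32 random bytes -> 64 hex characters, like `openssl rand -hex 32`
    path.write_text(text.replace(placeholder, key), encoding="utf-8")
    return key
```

Usage: `replace_secret_key("searxng/settings.yml")`, run from the `searxng-docker` directory.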

The contents of settings.yml are as follows:

```yaml
use_default_settings: true
server:
  # base_url is defined in the SEARXNG_BASE_URL environment variable, see .env and docker-compose.yml
  secret_key: "fc6a61736b885ace5f990f840006d057c01604501a36636b4a378f4b2cb89fdf"  # change this!
  limiter: false  # can be disabled for a private instance
  image_proxy: true
ui:
  static_use_hash: true
redis:
  url: redis://redis:6379/0
search:
  formats:
    - html
    - json
```

If `json` is not listed under `search.formats`, calls made through LangChain fail with a 503 error. See the related issue:

https://github.com/langchain-ai/langchain/issues/855

- Start

```shell
# Start
docker-compose up -d

# Stop
docker-compose stop
```

- Open http://localhost:8080 in a browser to confirm that the SearXNG search page loads.
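Before wiring SearXNG into LangChain, it can help to confirm that the JSON output enabled in `settings.yml` actually works. The minimal standard-library sketch below assumes the instance runs at `http://localhost:8080` and that its `/search` endpoint accepts `q` and `format=json`. The helper names are hypothetical, and only `searx_links` needs a live instance.

```python
import json
from typing import List
from urllib.parse import urlencode
from urllib.request import urlopen


def build_search_url(host: str, query: str, **params: str) -> str:
    """Build a SearXNG /search URL that requests JSON output."""
    qs = urlencode({"q": query, "format": "json", **params})
    return f"{host.rstrip('/')}/search?{qs}"


def extract_links(payload: dict, limit: int = 3) -> List[str]:
    """Take the first `limit` result URLs from a SearXNG JSON response."""
    return [r["url"] for r in payload.get("results", []) if r.get("url")][:limit]


def searx_links(host: str, query: str, limit: int = 3, timeout: float = 10.0, **params: str) -> List[str]:
    """Run a search against a running instance and return result URLs."""
    with urlopen(build_search_url(host, query, **params), timeout=timeout) as resp:
        return extract_links(json.load(resp), limit)
```

If `json` is missing from `search.formats`, the server rejects the request and `urlopen` raises `urllib.error.HTTPError`. This is the same misconfiguration that makes the LangChain wrapper fail.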

3. Implementation
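The code below splits the fetched pages with `RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=100)`. The deliberately simplified fixed-window splitter that follows illustrates what those two numbers mean. It is not LangChain's algorithm, which prefers to break on paragraph, line, and word separators. The function name is hypothetical.

```python
from typing import List


def sliding_window_chunks(text: str, chunk_size: int = 500, chunk_overlap: int = 100) -> List[str]:
    """Split text into fixed-size character windows; neighbours share `chunk_overlap` characters."""
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end of the text
            break
    return chunks


sample = "".join(chr(ord("a") + i % 26) for i in range(1200))
print([len(c) for c in sliding_window_chunks(sample)])  # [500, 500, 400]
```

With 500/100, each window advances 400 characters, so a 1,200-character page yields three chunks. The 100-character overlap keeps a sentence that straddles a boundary retrievable from either neighbouring chunk.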

Code

```python
import os
import sys
from typing import List

import faiss
import requests
from requests.exceptions import RequestException

from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain.chains.retrieval import create_retrieval_chain
from langchain_community.docstore import InMemoryDocstore
from langchain_community.document_loaders import AsyncHtmlLoader
from langchain_community.document_transformers.html2text import Html2TextTransformer
from langchain_community.utilities import SearxSearchWrapper
from langchain_community.vectorstores import FAISS
from langchain_core.documents import Document
from langchain_core.prompts import ChatPromptTemplate
from langchain_huggingface import HuggingFaceEmbeddings
from langchain_openai.chat_models import ChatOpenAI
from langchain_text_splitters import RecursiveCharacterTextSplitter

# global_config is the author's own module (one directory up) that defines MODEL_CACHE_DIR
sys.path.append(os.path.abspath(os.pardir))
from global_config import MODEL_CACHE_DIR

# Work around the OpenMP "duplicate runtime" abort that can occur when faiss and torch are both loaded
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"


def check_url_access(urls: List[str], timeout: float = 5) -> List[str]:
    """Keep only the URLs that respond with a successful status code."""
    urls_can_access = []
    for url in urls:
        try:
            req_res = requests.get(url, timeout=timeout)
            if req_res.ok:
                urls_can_access.append(url)
        except RequestException:
            # Unreachable or timed-out URLs are skipped
            continue
    return urls_can_access


def get_chat_llm(streaming=False, temperature=0, max_tokens=2048) -> ChatOpenAI:
    """Chat model backed by the local vLLM OpenAI-compatible server."""
    return ChatOpenAI(
        model_name="Qwen2-7B-Instruct",
        openai_api_key="empty",  # placeholder; the local vLLM server is assumed not to require a key
        openai_api_base="http://localhost:8000/v1",
        max_tokens=max_tokens,
        temperature=temperature,
        streaming=streaming,
    )


def get_retriever(documents: List[Document]):
    # Embedding model loaded from the local model cache
    embeddings = HuggingFaceEmbeddings(
        model_name=os.path.join(MODEL_CACHE_DIR, "maidalun/bce-embedding-base_v1")
    )
    # Flat L2 FAISS index; the vector dimension is taken from a sample embedding
    faiss_index = faiss.IndexFlatL2(len(embeddings.embed_query("hello world")))
    vector_store = FAISS(
        embedding_function=embeddings,
        index=faiss_index,
        docstore=InMemoryDocstore(),
        index_to_docstore_id={},
    )
    vector_store.add_documents(documents)
    return vector_store.as_retriever()


def get_answer_prompt() -> ChatPromptTemplate:
    # "You are a question-answering assistant; answer the question based on the retrieved information below:"
    system_prompt = """
你是一个问答任务的助手,请依据以下检索出来的信息去回答问题:
{context}
"""
    return ChatPromptTemplate.from_messages([
        ("system", system_prompt),
        ("human", "{input}"),
    ])


if __name__ == '__main__':
    llm = get_chat_llm()
    search = SearxSearchWrapper(searx_host="http://localhost:8080/")
    query = "《元梦之星》这款游戏是什么时候发行的"  # "When was the game 《元梦之星》 released?"
    results = search.results(
        query,                                # search query
        language="zh-CN",                     # result language
        safesearch=1,                         # 0: no filtering, 1: moderate, 2: strict
        categories=["general"],               # list of categories: general / images / videos, etc.
        engines=["bing", "brave", "google"],  # search engines to query
        num_results=3,                        # number of results to return
    )
    print(f"search results: {results}")
    urls_to_look = [ele["link"] for ele in results if ele.get("link")]

    # Keep only the URLs that are reachable
    urls = check_url_access(urls_to_look)
    print(f"urls: {urls}")
    if not urls:
        sys.exit("No reachable search results; nothing to retrieve from.")

    # Load the pages
    loader = AsyncHtmlLoader(urls, ignore_load_errors=True, requests_kwargs={"timeout": 5})
    docs = loader.load()
    # Convert HTML to plain text
    html2text = Html2TextTransformer()
    docs = html2text.transform_documents(docs)
    # Split into overlapping chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=100)
    docs = text_splitter.split_documents(docs)
    # Build the retriever
    retriever = get_retriever(docs)
    # The prompt passed to create_stuff_documents_chain must contain a {context} variable
    qa_chain = create_stuff_documents_chain(llm, get_answer_prompt())
    rag_chain = create_retrieval_chain(retriever, qa_chain)
    res = rag_chain.invoke({"input": query})
    print(res["answer"])
```

Output

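《元梦之星》这款游戏是在2023年12月15日全平台上线发行的。

(English: "The game 《元梦之星》 was released on all platforms on December 15, 2023.")

Querying the model directly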

```shell
curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "Qwen2-7B-Instruct",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "《元梦之星》这款游戏是什么时候发行的"}
    ]
  }'
```

Comparing the search-based RAG output with the model's raw output shows that search supplies knowledge the LLM is missing, so the model can answer from up-to-date information.
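The curl call above can also be made from Python without LangChain. The standard-library sketch below assumes the same vLLM endpoint and served model name. The helper names are hypothetical, and only `build_chat_request` runs without a live server.

```python
import json
from typing import Dict
from urllib.request import Request, urlopen


def build_chat_request(question: str, model: str = "Qwen2-7B-Instruct",
                       base_url: str = "http://localhost:8000/v1") -> Request:
    """Build a POST request for the OpenAI-compatible /chat/completions endpoint."""
    body: Dict[str, object] = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": question},
        ],
    }
    return Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply (requires the vLLM server)."""
    with urlopen(build_chat_request(question), timeout=timeout) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]
```

Calling `ask(...)` with the same question returns the model's answer without any retrieved context. This is the baseline that the RAG chain's answer is compared against.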

4. References

- https://github.com/searxng/searxng-docker

- https://github.com/ptonlix/LangChain-SearXNG

- LangChain documentation: SearxNG Search

- LangChain documentation: Conversational RAG

- Hugging Face model card: maidalun1020/bce-embedding-base_v1
