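Contents

- I. etcd deployed with kubeadm

- 1. Change the listen address of the etcd metrics endpoint

- 2. Add etcd service discovery to the Prometheus configuration

- 3. Create a Service for etcd

- 4. Add an etcd dashboard to Grafana

- II. etcd in a Kubernetes cluster deployed from binaries

- 1. Proxy the etcd service into the Kubernetes cluster

- 2. Create a Secret holding the etcd certificates

- 3. Mount the etcd certificate Secret in Prometheus

- 4. Add an etcd scrape job to Prometheus

- 5. Add an etcd dashboard to Grafana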

I. etcd deployed with kubeadm

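In a Kubernetes cluster built with kubeadm, etcd runs by default as a static Pod on the master node. Its static Pod manifest, "/etc/kubernetes/manifests/etcd.yaml", shows that:

- it is deployed in the kube-system namespace;

- the Pod carries the label "component=etcd";

- the metrics endpoint listens on local port 2381;

- the Pod runs in hostNetwork mode and shares the host's network namespace.

Therefore, on the master node you can view the etcd metrics with curl by requesting "http://127.0.0.1:2381/metrics".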
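The metrics endpoint returns Prometheus's plain-text exposition format. The Python snippet below is a minimal sketch and is not part of the original setup. It assumes you run it on the master node, and the helper names `fetch_metrics` and `parse_metric` are hypothetical. The parser is deliberately simplified (it ignores escaping corner cases) and only shows how a sample line such as the standard etcd gauge `etcd_server_has_leader` is structured.

```python
import urllib.request

# kubeadm default metrics address; only reachable from the master node itself
METRICS_URL = "http://127.0.0.1:2381/metrics"


def fetch_metrics(url=METRICS_URL, timeout=5.0):
    """Download the raw text exposition from the etcd metrics endpoint."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_metric(text, name):
    """Return the sample values of metric `name` from Prometheus text format.

    Simplified: skips '#' comment lines and assumes `name{labels} value` or
    `name value`, optionally followed by a timestamp.
    """
    values = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "{" in line:
            metric, _, rest = line.partition("{")
            rest = rest.rsplit("}", 1)[-1]  # text after the closing brace
        else:
            metric, _, rest = line.partition(" ")
        fields = rest.split()
        if metric == name and fields:
            values.append(float(fields[0]))
    return values
```

On the master node, `parse_metric(fetch_metrics(), "etcd_server_has_leader")` should return `[1.0]` while the member sees a leader.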

1. Change the listen address of the etcd metrics endpoint

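By default the metrics endpoint listens on "127.0.0.1", so it cannot be reached from other hosts. To let Prometheus scrape it, change the listen address to "0.0.0.0" by setting the "--listen-metrics-urls" argument in the Pod manifest to "http://0.0.0.0:2381".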

```bash
[root@k8s-master ~]# vim /etc/kubernetes/manifests/etcd.yaml
```

```yaml
containers:
- command:
  ...
  - --listen-metrics-urls=http://0.0.0.0:2381
  ...
```

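There is no need to run kubectl apply after the change. The kubelet watches the static Pod manifest directory and recreates the etcd Pod automatically, so the new setting takes effect on its own.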
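Why the change matters: a listener bound to 127.0.0.1 only accepts connections from the node itself, while 0.0.0.0 binds every interface. The hypothetical helpers below are a sketch that assumes you already have the container's `command` list as Python strings. They check whether any `--listen-metrics-urls` entry (etcd accepts a comma-separated list) is reachable from other hosts.

```python
import ipaddress
from urllib.parse import urlsplit

FLAG = "--listen-metrics-urls="


def metrics_listen_hosts(command):
    """Collect the host part of every URL passed via --listen-metrics-urls."""
    hosts = []
    for arg in command:
        if arg.startswith(FLAG):
            # etcd accepts a comma-separated list of URLs
            for url in arg[len(FLAG):].split(","):
                hosts.append(urlsplit(url.strip()).hostname)
    return hosts


def scrapable_from_other_hosts(command):
    """True if at least one metrics listener is not loopback-only."""
    for host in metrics_listen_hosts(command):
        if not host:
            continue
        try:
            if not ipaddress.ip_address(host).is_loopback:
                return True  # e.g. 0.0.0.0 (all interfaces) or a node IP
        except ValueError:
            if host != "localhost":  # a hostname other than localhost
                return True
    return False
```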

2. Add etcd service discovery to the Prometheus configuration

```bash
[root@k8s-master prometheus]# vim prometheus-configmap.yaml
```

```yaml
- job_name: kubernetes-etcd
  kubernetes_sd_configs:
    - role: endpoints
  # Keep only the Service named etcd in the kube-system namespace
  relabel_configs:
    - action: keep
      regex: kube-system;etcd
      source_labels:
        - __meta_kubernetes_namespace
        - __meta_kubernetes_service_name
```

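The configuration above adds a job named "kubernetes-etcd". It uses the endpoints role to discover the Pods behind Endpoints objects and adds them as scrape targets. The relabel rule keeps only the targets that belong to the Service named etcd in the kube-system namespace.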
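The `keep` action joins the values of `source_labels` with the default separator `;` and drops every target whose joined value does not fully match `regex`, because Prometheus anchors the expression at both ends. The Python sketch below imitates that behaviour for illustration only. `relabel_keep` is a hypothetical name, Python's `re` stands in for the RE2 engine that Prometheus uses, and the label sets in the test are made up.

```python
import re


def relabel_keep(targets, source_labels, regex, separator=";"):
    """Imitate Prometheus `action: keep` on a list of label dicts."""
    pattern = re.compile(regex)
    kept = []
    for labels in targets:
        # Missing labels count as empty strings, as in Prometheus
        value = separator.join(labels.get(name, "") for name in source_labels)
        # fullmatch mirrors Prometheus anchoring the regex at both ends
        if pattern.fullmatch(value):
            kept.append(labels)
    return kept
```

Because of the anchoring, a Service called `etcd-k8s` in kube-system would not pass the `kube-system;etcd` rule.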

3. Create a Service for etcd

The endpoints role can only find targets that belong to a Service, and kubeadm does not create a Service for etcd by default. Create one named "etcd":

```bash
[root@k8s-master prometheus]# vim etcd-service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd
  namespace: kube-system
spec:
  selector:
    # Label selector that matches the etcd Pods
    component: etcd
  ports:
    - name: metrics
      port: 2381
      targetPort: 2381
```

After the Service is created (`kubectl apply -f etcd-service.yaml`), the etcd Pod appears as a scrape target in the Prometheus web UI.
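To see why the Service needs the `component: etcd` selector, here is a simplified model of how an equality-based selector turns matching Pods into Endpoints addresses. It is a Python sketch with hypothetical names and made-up Pod data, and it ignores details such as `notReadyAddresses` and named ports. Because the etcd Pod uses hostNetwork, its Pod IP is the master node's IP, so the resulting target is `<node IP>:2381`.

```python
def selector_matches(selector, pod_labels):
    """Equality-based selector: every key/value pair must be present on the Pod."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def endpoint_addresses(selector, pods, target_port):
    """Simplified model of the addresses an Endpoints object lists for a Service.

    Only ready Pods are included; notReadyAddresses and named ports are ignored.
    """
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"] and selector_matches(selector, pod["labels"])
    ]
```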

4. Add an etcd dashboard to Grafana

etcd dashboard in the Grafana dashboard gallery: https://grafana.com/grafana/dashboards/9733-etcd-for-k8s-cn/

Alternatively, download my modified version:

https://download.csdn.net/download/qq_44930876/89682777

Or import the dashboard directly by its gallery ID: 9733

II. etcd in a Kubernetes cluster deployed from binaries

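When Kubernetes is deployed with kubeadm, etcd runs as a Pod and exposes its metrics by default. In many setups, however, etcd runs outside the cluster, as in a Kubernetes cluster deployed from binaries, and its endpoint is served over HTTPS. To monitor it together with everything else, we proxy the etcd service into the Kubernetes cluster and then let Prometheus's Kubernetes service discovery automatically find the etcd Service that carries a specific label.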

https://blog.csdn.net/qq_44930876/article/details/126686599

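The monitoring below is configured for the etcd cluster installed during the earlier binary Kubernetes deployment described in the post linked above.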

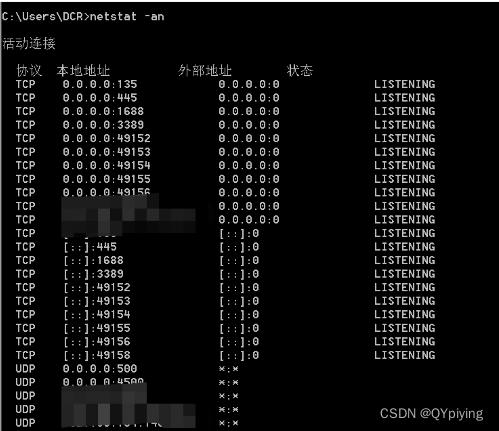

Because this etcd has TLS enabled, you must supply a client certificate to read its metrics from the local /metrics endpoint:

```bash
[root@k8s-master1 ~]# cd /hqtbj/hqtwww/etcd/ssl
[root@k8s-master1 ssl]# curl -Lk --cert ./ca.pem --key ca-key.pem https://172.32.0.11:2379/metrics
```
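For comparison with the curl command, here is a Python sketch (standard library only) that builds an equivalent client TLS context. It uses the `etcd-server.pem`/`etcd-server-key.pem` pair that the Prometheus job presents later, not the CA pair in the curl example. `make_tls_context` and `fetch_metrics` are hypothetical names. `insecure=True` plays the role of `curl -k` and of `insecure_skip_verify: true` in the Prometheus configuration.

```python
import ssl
import urllib.request


def make_tls_context(ca_file=None, cert_file=None, key_file=None, insecure=False):
    """Client TLS context roughly equivalent to curl --cacert/--cert/--key (and -k)."""
    ctx = ssl.create_default_context(cafile=ca_file)
    if insecure:
        # Like curl -k / insecure_skip_verify: do not verify the server certificate.
        # check_hostname must be disabled before verify_mode can be set to CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    if cert_file:
        # Client certificate and key presented to etcd for client-certificate auth
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


def fetch_metrics(url, ctx, timeout=5.0):
    """GET the metrics page over HTTPS using the given TLS context."""
    with urllib.request.urlopen(url, context=ctx, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

For example, passing `make_tls_context("/hqtbj/hqtwww/etcd/ssl/ca.pem", "/hqtbj/hqtwww/etcd/ssl/etcd-server.pem", "/hqtbj/hqtwww/etcd/ssl/etcd-server-key.pem", insecure=True)` to `fetch_metrics("https://172.32.0.11:2379/metrics", ...)` should return the same text as curl. This assumes etcd accepts that certificate for client authentication, which the Prometheus job below also assumes.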

1. Proxy the etcd service into the Kubernetes cluster

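The first step is to create a Service and an Endpoints resource for etcd so that etcd is proxied into the cluster, and to give the etcd Service a specific label. Prometheus can then use Kubernetes service discovery to find the Service with that label and the list of addresses behind it.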

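```yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: ops
  labels:
    # Informational label, also set on the Endpoints below. The Service and the
    # Endpoints are actually linked by having the same name and namespace.
    k8s-app: etcd
    # Prometheus discovers this Service through this label
    app.kubernetes.io/name: etcd
spec:
  # No selector: the Endpoints object below is maintained by hand
  type: ClusterIP
  # Set to None so that no Service IP is allocated (headless Service)
  clusterIP: None
  ports:
    - name: port
      port: 2379
      protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: ops
  labels:
    k8s-app: etcd
subsets:
  # IP addresses of the etcd nodes
  - addresses:
      - ip: 172.32.0.11
      - ip: 172.32.0.12
      - ip: 172.32.0.13
    # etcd client port
    ports:
      - port: 2379
```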
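Because the Service above has no selector, Kubernetes does not manage its Endpoints. The hand-written Endpoints object is tied to the Service only because it has the same name (`etcd-k8s`) in the same namespace. With `role: endpoints`, Prometheus creates one target per address and port in each subset. The Python sketch below (hypothetical `endpoints_targets`, with the Endpoints manifest rewritten as a plain dict) shows the target addresses this produces.

```python
def endpoints_targets(endpoints):
    """One scrape address per (address, port) pair in every subset, as role: endpoints does."""
    targets = []
    for subset in endpoints.get("subsets", []):
        for address in subset.get("addresses", []):
            for port in subset.get("ports", []):
                targets.append(f"{address['ip']}:{port['port']}")
    return targets


# The Endpoints manifest above, written as a Python dict
ETCD_ENDPOINTS = {
    "metadata": {"name": "etcd-k8s", "namespace": "ops"},
    "subsets": [
        {
            "addresses": [{"ip": "172.32.0.11"}, {"ip": "172.32.0.12"}, {"ip": "172.32.0.13"}],
            "ports": [{"port": 2379}],
        }
    ],
}
```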

2. Create a Secret holding the etcd certificates

Because etcd serves HTTPS, Prometheus needs TLS certificates to scrape its metrics, so store the etcd certificate files in a Kubernetes Secret:

```bash
[root@k8s-master1 ~]# kubectl create secret generic etcd-certs \
    --from-file=/hqtbj/hqtwww/etcd/ssl/etcd-server.pem \
    --from-file=/hqtbj/hqtwww/etcd/ssl/etcd-server-key.pem \
    --from-file=/hqtbj/hqtwww/etcd/ssl/ca.pem \
    -n ops
secret/etcd-certs created
```
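`kubectl create secret generic --from-file=<path>` stores each file under a key equal to its basename, with the content base64-encoded in the Secret's `data` field. When the Secret is mounted as a volume, every key becomes a file of that name. The Python sketch below reproduces that mapping for illustration (the helper names are hypothetical). This mapping is why the Prometheus configuration can refer to `ca.pem`, `etcd-server.pem` and `etcd-server-key.pem` by name.

```python
import base64
import os


def secret_key_for(path):
    """kubectl uses the file's basename as the Secret key for --from-file=<path>."""
    return os.path.basename(path)


def encode_value(content):
    """Secret `data` values are the base64-encoded file bytes."""
    return base64.b64encode(content).decode("ascii")


def secret_data_from_files(paths):
    """Build the `data` map of a generic Secret from a list of file paths."""
    data = {}
    for path in paths:
        with open(path, "rb") as f:
            data[secret_key_for(path)] = encode_value(f.read())
    return data
```

To inspect the real object without creating it, add `--dry-run=client -o yaml` to the kubectl command above.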

3. Mount the etcd certificate Secret in Prometheus

```bash
[root@k8s-master1 prometheus]# vim prometheus-deployment.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: ops
  labels:
    k8s-app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: prometheus
  template:
    metadata:
      labels:
        k8s-app: prometheus
    spec:
      serviceAccountName: prometheus
      initContainers:
        - name: "init-chown-data"
          image: "busybox:latest"
          imagePullPolicy: "IfNotPresent"
          command: ["chown", "-R", "65534:65534", "/data"]
          volumeMounts:
            - name: prometheus-data
              mountPath: /data
              subPath: ""
      containers:
        # Sidecar that hot-reloads the configuration when the ConfigMap changes
        - name: prometheus-server-configmap-reload
          image: "jimmidyson/configmap-reload:v0.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9090/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
        - name: prometheus-server
          image: "prom/prometheus:v2.45.4"
          imagePullPolicy: "IfNotPresent"
          args:
            - --config.file=/etc/config/prometheus.yml
            - --storage.tsdb.path=/data
            - --web.console.libraries=/etc/prometheus/console_libraries
            - --web.console.templates=/etc/prometheus/consoles
            - --web.enable-lifecycle
          ports:
            - containerPort: 9090
          readinessProbe:
            httpGet:
              path: /-/ready
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          livenessProbe:
            httpGet:
              path: /-/healthy
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          resources:
            limits:
              cpu: 500m
              memory: 1500Mi
            requests:
              cpu: 200m
              memory: 1000Mi
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: prometheus-data
              mountPath: /data
              subPath: ""
            - name: prometheus-rules
              mountPath: /etc/config/rules
            # Mount the etcd certificate Secret at /var/run/secrets/kubernetes.io/etcd-certs
            - name: prometheus-etcd
              mountPath: /var/run/secrets/kubernetes.io/etcd-certs
            - name: timezone
              mountPath: /etc/localtime
      volumes:
        - name: config-volume
          configMap:
            name: prometheus-config
        - name: prometheus-rules
          configMap:
            name: prometheus-rules
        - name: prometheus-data
          persistentVolumeClaim:
            claimName: prometheus
        # Volume backed by the etcd-certs Secret
        - name: prometheus-etcd
          secret:
            secretName: etcd-certs
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus
  namespace: ops
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: ops
spec:
  type: NodePort
  ports:
    - name: http
      port: 9090
      protocol: TCP
      targetPort: 9090
      nodePort: 30090
  selector:
    k8s-app: prometheus
---
# Credentials that authorize Prometheus to access Kubernetes resources
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: ops
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
      - nodes/metrics
      - services
      - endpoints
      - pods
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - get
  - nonResourceURLs:
      - "/metrics"
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: ops
```
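The file names under the mount path must match the paths used in the scrape job's `tls_config`. The hypothetical helper below is a sketch of that check. It compares a `tls_config` mapping against the mount path and Secret keys used in this article. It is an offline consistency check only, not something Prometheus runs.

```python
import posixpath

# Values taken from the manifests in this article
MOUNT_PATH = "/var/run/secrets/kubernetes.io/etcd-certs"
SECRET_KEYS = {"ca.pem", "etcd-server.pem", "etcd-server-key.pem"}


def unresolved_tls_paths(tls_config, mount_path=MOUNT_PATH, secret_keys=SECRET_KEYS):
    """Return the tls_config fields whose file would not exist in the Secret volume."""
    problems = []
    for field in ("ca_file", "cert_file", "key_file"):
        path = tls_config.get(field)
        if path is None:
            continue
        directory, name = posixpath.split(path)
        if directory != mount_path or name not in secret_keys:
            problems.append(field)
    return problems
```

Inside the running Pod, `kubectl -n ops exec deploy/prometheus -c prometheus-server -- ls /var/run/secrets/kubernetes.io/etcd-certs` should list the same three files.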

4. Add an etcd scrape job to Prometheus

```bash
[root@k8s-master1 prometheus]# vim prometheus-configmap.yaml
```

```yaml
...
- job_name: kubernetes-etcd
  # Discover targets through Kubernetes service discovery
  kubernetes_sd_configs:
    - role: endpoints
      # Namespace in which the etcd Service lives
      namespaces:
        names: ["ops"]
  relabel_configs:
    # Scrape only Services whose app.kubernetes.io/name label equals etcd
    - action: keep
      source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
      regex: etcd
  scheme: https
  tls_config:
    # Paths of the etcd certificates inside the Prometheus container
    ca_file: /var/run/secrets/kubernetes.io/etcd-certs/ca.pem
    cert_file: /var/run/secrets/kubernetes.io/etcd-certs/etcd-server.pem
    key_file: /var/run/secrets/kubernetes.io/etcd-certs/etcd-server-key.pem
    insecure_skip_verify: true
...
```
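The source label `__meta_kubernetes_service_label_app_kubernetes_io_name` comes from the Service label `app.kubernetes.io/name`. Prometheus adds the prefix `__meta_kubernetes_service_label_` to Service labels and replaces every character that is not valid in a label name (anything outside `[a-zA-Z0-9_]`) with `_`. The Python sketch below (hypothetical helper names) reproduces that naming and the job's `keep` rule for illustration.

```python
import re

PREFIX = "__meta_kubernetes_service_label_"


def meta_service_label(k8s_label):
    """Meta label name Prometheus uses for a Kubernetes Service label."""
    # Characters not allowed in Prometheus label names become underscores
    return PREFIX + re.sub(r"[^a-zA-Z0-9_]", "_", k8s_label)


def kept_by_etcd_job(service_labels):
    """Apply the job's keep rule: the sanitized app.kubernetes.io/name must fully match 'etcd'."""
    meta = {meta_service_label(k): v for k, v in service_labels.items()}
    value = meta.get(PREFIX + "app_kubernetes_io_name", "")
    return re.fullmatch("etcd", value) is not None
```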

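Then reload Prometheus. After the updated ConfigMap is applied (`kubectl apply -f prometheus-configmap.yaml`), the configmap-reload sidecar in the Deployment detects the changed file and triggers a hot reload, so the Pod does not need to be restarted. ConfigMap updates take a short while to reach the mounted volume, so wait a moment and then check the status of the etcd targets in the Prometheus web UI.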
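The reload sidecar works by calling Prometheus's lifecycle endpoint, which is enabled only because the Deployment passes `--web.enable-lifecycle`. The Python sketch below builds a request of the same kind (`POST /-/reload`). The helper names are hypothetical, and `http://localhost:9090` assumes you run it inside the Pod or through a port-forward such as `kubectl -n ops port-forward deploy/prometheus 9090:9090`.

```python
import urllib.request


def reload_request(base_url="http://localhost:9090"):
    """Build a POST /-/reload request (requires Prometheus to run with --web.enable-lifecycle)."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload", method="POST")


def trigger_reload(base_url="http://localhost:9090", timeout=5.0):
    """Send the reload request and return the HTTP status code."""
    with urllib.request.urlopen(reload_request(base_url), timeout=timeout) as resp:
        return resp.status
```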

5. Add an etcd dashboard to Grafana

etcd dashboard in the Grafana dashboard gallery: https://grafana.com/grafana/dashboards/21473-etcd-cluster-overview/

Alternatively, download my modified version: https://download.csdn.net/download/qq_44930876/89682778

Or import the dashboard directly by its gallery ID: 21473