爬虫521

- 记录

记录

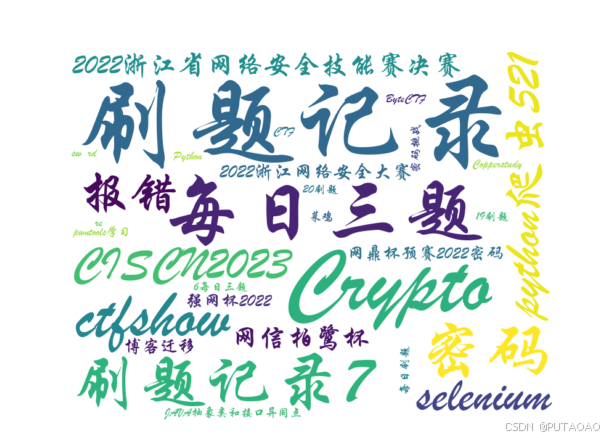

最近想学爬虫,尝试爬取自己账号下的文章标题做个词云

csdn有反爬机制 原理我就不说啦 大家都写了

看到大家结果是加cookie

但是我加了还是521报错

尝试再加了referer 就成功了(╹▽╹)

import matplotlib

import requests

from wordcloud import WordCloud

import matplotlib.pyplot as plt

import jieba

# 定义URL和请求头

url = 'https://blog.csdn.net/community/home-api/v1/get-business-list?page=1&size=40&businessType=blog&orderby=&noMore=false&year=&month=&username=PUTAOAO'

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36',

'Cookie':'cookie',

'Referer':'https://blog.csdn.net/PUTAOAO?type=blog'}

# 发送GET请求

response = requests.get(url, headers=headers)

# 检查响应状态码

if response.status_code == 200:

# 转换响应内容为JSON格式

re=response.json()

# 获取评论列表

ll = re['data']['list']

print(ll)

# 初始化内容列表

content = []

# 遍历评论列表,提取内容并添加到内容列表

for l in ll:

content.append(l['title'])

# 合并所有评论内容为一个字符串

full_content = ' '.join(content)

print(full_content)

# 生成词云

wc = WordCloud(font_path='C:\Windows\Fonts\STXINGKA.TTF',width=800, height=600, mode="RGBA", background_color='white').generate(full_content)

# 显示词云

plt.imshow(wc, interpolation='bilinear')

plt.axis('off')

plt.show()

else:

print(f"请求失败,状态码:{response.status_code}")