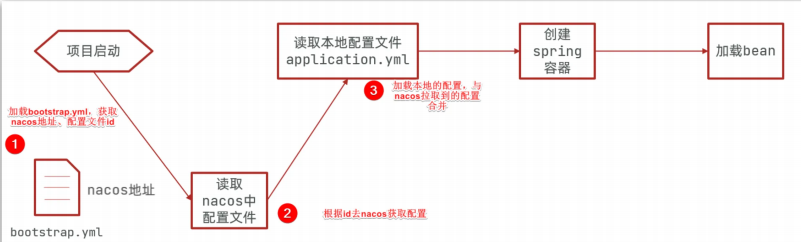

MapTRv2这篇工作很有意思的一点是预测可视化的时候,在Argoverse数据集上把BEV的预测结果投影到PV图中,来更直观地评估预测结果的好坏,如下图所示。

这部分的代码在maptrv2分支中的tools/maptrv2/av2_vis_pred.py中

def points_ego2img(pts_ego, lidar2img):

pts_ego_4d = np.concatenate([pts_ego, np.ones([len(pts_ego), 1])], axis=-1)

pts_img_4d = lidar2img @ pts_ego_4d.T

uv = pts_img_4d.T

uv = remove_nan_values(uv)

depth = uv[:, 2]

uv = uv[:, :2] / uv[:, 2].reshape(-1, 1)

return uv, depth

def draw_polyline_ego_on_img(polyline_ego, img_bgr, lidar2img, map_class, thickness):

# if 2-dimension, assume z=0

if polyline_ego.shape[1] == 2:

zeros = np.zeros((polyline_ego.shape[0], 1))

polyline_ego = np.concatenate([polyline_ego, zeros], axis=1)

polyline_ego = interp_fixed_dist(line=LineString(polyline_ego), sample_dist=0.2)

uv, depth = points_ego2img(polyline_ego, lidar2img)

h, w, c = img_bgr.shape

is_valid_x = np.logical_and(0 <= uv[:, 0], uv[:, 0] < w - 1)

is_valid_y = np.logical_and(0 <= uv[:, 1], uv[:, 1] < h - 1)

is_valid_z = depth > 0

is_valid_points = np.logical_and.reduce([is_valid_x, is_valid_y, is_valid_z])

if is_valid_points.sum() == 0:

return

tmp_list = []

for i, valid in enumerate(is_valid_points):

if valid:

tmp_list.append(uv[i])

else:

if len(tmp_list) >= 2:

tmp_vector = np.stack(tmp_list)

tmp_vector = np.round(tmp_vector).astype(np.int32)

draw_visible_polyline_cv2(

copy.deepcopy(tmp_vector),

valid_pts_bool=np.ones((len(uv), 1), dtype=bool),

image=img_bgr,

color=COLOR_MAPS_BGR[map_class],

thickness_px=thickness,

map_class=map_class

)

tmp_list = []

if len(tmp_list) >= 2:

tmp_vector = np.stack(tmp_list)

tmp_vector = np.round(tmp_vector).astype(np.int32)

draw_visible_polyline_cv2(

copy.deepcopy(tmp_vector),

valid_pts_bool=np.ones((len(uv), 1), dtype=bool),

image=img_bgr,

color=COLOR_MAPS_BGR[map_class],

thickness_px=thickness,

map_class=map_class,

)

def render_anno_on_pv(cam_img, anno, lidar2img):

for key, value in anno.items():

for pts in value:

draw_polyline_ego_on_img(pts, cam_img, lidar2img,

key, thickness=10)

def main():

##

pred_anno = {'divider':[],'ped_crossing':[],'boundary':[],'centerline':[]}

class_by_index=['divider','ped_crossing','boundary']

for pred_score_3d, pred_label_3d, pred_pts_3d in zip(scores_3d[keep], labels_3d[keep], pts_3d[keep]):

if pred_label_3d == 3:

instance = LineString(pred_pts_3d.numpy()).simplify(0.2, preserve_topology=True)

pts = np.array(list(instance.coords))

pred_anno['centerline'].append(pts)

pred_centerlines.append(pts)

x = np.array([pt[0] for pt in pts])

y = np.array([pt[1] for pt in pts])

plt.quiver(x[:-1], y[:-1], x[1:] - x[:-1], y[1:] - y[:-1], scale_units='xy', angles='xy', scale=1, color='green',headwidth=5,headlength=6,width=0.006,alpha=0.8,zorder=-1)

else:

pred_pts_3d = pred_pts_3d.numpy()

pred_anno[class_by_index[pred_label_3d]].append(pred_pts_3d)

pts_x = pred_pts_3d[:,0]

pts_y = pred_pts_3d[:,1]

plt.plot(pts_x, pts_y, color=colors_plt[pred_label_3d],linewidth=1,alpha=0.8,zorder=-1)

# plt.scatter(pts_x, pts_y, color=colors_plt[pred_label_3d],s=1,alpha=0.8,zorder=-1)

plt.imshow(car_img, extent=[-1.5, 1.5, -1.2, 1.2])

map_path = osp.join(sample_dir, 'PRED_MAP.png')

plt.savefig(map_path, bbox_inches='tight', format='png',dpi=1200)

plt.close()

rendered_cams_dict = {}

for key, cam_dict in img_path_dict.items():

cam_img = cv2.imread(osp.join(data_path_prefix,cam_dict['filepath']))

render_anno_on_pv(cam_img,pred_anno,cam_dict['lidar2img'])

if 'front' not in key:

# cam_img = cam_img[:,::-1,:]

cam_img = cv2.flip(cam_img, 1)

lw = 8

tf = max(lw - 1, 1)

w, h = cv2.getTextSize(caption_by_cam[key], 0, fontScale=lw / 3, thickness=tf)[0] # text width, height

p1 = (0,0)

p2 = (w,h+3)

color=(0, 0, 0)

txt_color=(255, 255, 255)

cv2.rectangle(cam_img, p1, p2, color, -1, cv2.LINE_AA) # filled

cv2.putText(cam_img,

caption_by_cam[key], (p1[0], p1[1] + h + 2),

0,

lw / 3,

txt_color,

thickness=tf,

lineType=cv2.LINE_AA)

rendered_cams_dict[key] = cam_img

具体来说,利用lidar2img矩阵将BEV下自车ego坐标系中的结果转换到每张PV图坐标系中,然后进行可视化。

然而对应nuScenes可视化的脚本里没有实现这个功能。因此笔者尝试手动迁移。

然而效果不如意。。

在比较了argoverse和nuScenes的区别后,发现两者的坐标系定义是不同的。

无人驾驶中常用坐标系及相互转换的数学原理介绍_相机 imu 世界坐标系转换-CSDN博客

具体来说,Argoverse的坐标系中,自车ego坐标系=标注坐标系,因此所有标注点/预测点的z=0.具体体现在

def draw_polyline_ego_on_img(polyline_ego, img_bgr, lidar2img, map_class, thickness):

# if 2-dimension, assume z=0

if polyline_ego.shape[1] == 2:

zeros = np.zeros((polyline_ego.shape[0], 1))

polyline_ego = np.concatenate([polyline_ego, zeros], axis=1)这一步人工加了一个z维度,而argoverse所有的点z=0。意味着地面点都在lidar坐标系z=0中。

然而nuScenes不同。标注是在lidar坐标系中的,因此lidar的z=0是跟lidar平高的,然而地面点是低于lidar的,因此需要设置一个z。参考projects/mmdet3d_plugin/datasets/nuscenes_offlinemap_dataset.py中对于gt_pv_seg_mask的生成中z=-1.6。因此在可视化nuScenes的时候也设置成z=-1.6

相对拟合一点。但是实际上还是跟实际情况有出入。。。。到这里卡住了,记录一下问题,不知道怎么解决。

注:实际上笔者认为,BEV下的标注是ego坐标系下的,而如果要用lidar2img转换为图像坐标系下进行可视化,中间还需要进行一步ego2lidar。

因此我有一次做了一点改动,想着先将ego转化为lidar再转化为img

def points_ego2img(pts_ego, lidar2img, lidar2ego):

pts_ego_4d = np.concatenate([pts_ego, np.ones([len(pts_ego), 1])], axis=-1)

ego2lidar = np.linalg.inv(lidar2ego)

pts_img_4d = lidar2img @ ego2lidar @ pts_ego_4d.T

# pts_img_4d = lidar2img @ pts_ego_4d.T

pdb.set_trace()

pix_coords = perspective(pts_ego_4d, lidar2img)但是可视化完全崩了。。希望有大佬能指点一二。

![[机器学习]--线性回归算法](https://i-blog.csdnimg.cn/direct/38189eeaa18b44b282fedae6a3463eea.png)

![自开发多功能Vue组件:可定义滚动速度[回到顶部/底部]图标组件的安装与使用!](https://i-blog.csdnimg.cn/direct/541bdb7a523748a4bda4c1dc83d9b3ed.jpeg#pic_center)