Environment: Red Hat 7.9, Oracle 11.2.0.4, two-node RAC

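Background

A question came up in a chat group about how to repair a RAC cluster when the operating system on one node is damaged, which prompted this experiment.

In production, disk or other hardware faults can leave a host's OS unable to boot, or the OS itself can fail beyond repair. At that point there is no option but to reinstall the OS. The question is how to bring the reinstalled host back into the existing RAC cluster. The steps are:

1. Clean up the failed node's information

2. Configure the reinstalled system

3. Add the reinstalled system back to the RAC

Note: this reconfiguration differs somewhat from the delete-node and add-node procedures in the official documentation.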

Server information

| Hostname | OS | Public IP | VIP | Private IP | Instance | Version |
|---|---|---|---|---|---|---|
| rac1 | rhel7.6 | 192.168.40.200 | 192.168.40.202 | 192.168.183.200 | topnet01 | 11.2.0.4 |
| rac2 | rhel7.6 | 192.168.40.201 | 192.168.40.203 | 192.168.183.201 | topnet02 | 11.2.0.4 |

Command to check the version:

su - oracle

sqlplus -V

Simulating an OS failure

To save time, the GI and DB software on node 2 is deleted to simulate a freshly reinstalled OS.

Cluster status

[root@orcl01:/root]$ su - grid

[grid@orcl01:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCH.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.DATA.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.LISTENER.lsnr

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.OCR.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.asm

ONLINE ONLINE orcl01 Started

ONLINE ONLINE orcl02 Started

ora.gsd

OFFLINE OFFLINE orcl01

OFFLINE OFFLINE orcl02

ora.net1.network

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.ons

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE orcl02

ora.cvu

1 ONLINE ONLINE orcl02

ora.oc4j

1 ONLINE ONLINE orcl01

ora.orcl01.vip

1 ONLINE ONLINE orcl01

ora.orcl02.vip

1 ONLINE ONLINE orcl02

ora.scan1.vip

1 ONLINE ONLINE orcl02

ora.topnet.db

1 ONLINE ONLINE orcl01 Open

2 ONLINE ONLINE orcl02 Open

Removing GI and DB from node 2

[root@orcl02:/root]$ rm -rf /etc/oracle

[root@orcl02:/root]$ rm -rf /etc/ora*

[root@orcl02:/root]$ rm -rf /u01

[root@orcl02:/root]$ rm -rf /tmp/CVU*

[root@orcl02:/root]$ rm -rf /tmp/.oracle

[root@orcl02:/root]$ rm -rf /var/tmp/.oracle

[root@orcl02:/root]$ rm -f /etc/init.d/init.ohasd

[root@orcl02:/root]$ rm -f /etc/systemd/system/oracle-ohasd.service

[root@orcl02:/root]$ rm -rf /etc/init.d/ohasd

Rebooting the OS on node 2

reboot

Confirming the cluster status again

[grid@orcl01:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCH.dg

ONLINE ONLINE orcl01

ora.DATA.dg

ONLINE ONLINE orcl01

ora.LISTENER.lsnr

ONLINE ONLINE orcl01

ora.OCR.dg

ONLINE ONLINE orcl01

ora.asm

ONLINE ONLINE orcl01 Started

ora.gsd

OFFLINE OFFLINE orcl01

ora.net1.network

ONLINE ONLINE orcl01

ora.ons

ONLINE ONLINE orcl01

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE orcl01

ora.cvu

1 ONLINE ONLINE orcl01

ora.oc4j

1 ONLINE ONLINE orcl01

ora.orcl01.vip

1 ONLINE ONLINE orcl01

ora.orcl02.vip

1 ONLINE INTERMEDIATE orcl01 FAILED OVER

ora.scan1.vip

1 ONLINE ONLINE orcl01

ora.topnet.db

1 ONLINE ONLINE orcl01 Open

2 ONLINE OFFLINE

Confirming the node 2 environment

[root@orcl02:/root]$ cd /

[root@orcl02:/]$ ll

total 36

drwxr-xr-x. 3 oracle oinstall 58 Mar 30 09:17 backup

lrwxrwxrwx. 1 root root 7 Aug 18 2023 bin -> usr/bin

dr-xr-xr-x. 4 root root 4096 Aug 18 2023 boot

drwxr-xr-x 19 root root 3480 Aug 16 13:33 dev

drwxr-xr-x. 83 root root 8192 Aug 16 13:16 etc

drwxr-xr-x. 4 root root 32 Aug 18 2023 home

lrwxrwxrwx. 1 root root 7 Aug 18 2023 lib -> usr/lib

lrwxrwxrwx. 1 root root 9 Aug 18 2023 lib64 -> usr/lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 media

drwxr-xr-x. 2 root root 6 Apr 11 2018 mnt

drwxr-xr-x. 3 root root 22 Aug 18 2023 opt

dr-xr-xr-x 161 root root 0 Aug 16 13:33 proc

dr-xr-x---. 3 root root 4096 Aug 18 2023 root

drwxr-xr-x 27 root root 840 Aug 16 13:33 run

lrwxrwxrwx. 1 root root 8 Aug 18 2023 sbin -> usr/sbin

drwxr-xr-x. 2 grid oinstall 4096 Mar 30 08:24 soft

drwxr-xr-x. 2 root root 6 Apr 11 2018 srv

-rw-------. 1 root root 0 Aug 18 2023 swapfile

dr-xr-xr-x 13 root root 0 Aug 16 13:33 sys

drwxrwxrwt. 16 root root 4096 Aug 16 13:33 tmp

drwxr-xr-x. 13 root root 4096 Aug 18 2023 usr

drwxr-xr-x. 19 root root 4096 Dec 31 2023 var

[root@orcl02:/]$ ps -ef | grep grid

root 2500 1466 0 13:53 pts/0 00:00:00 grep --color=auto grid

[root@orcl02:/]$ ps -ef | grep asm

root 2505 1466 0 13:53 pts/0 00:00:00 grep --color=auto asm

[root@orcl02:/]$ ps -ef | grep oracle

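root 2512 1466 0 13:53 pts/0 00:00:00 grep --color=auto oracle

Extension: production environments

Requirements for node 2:

- The public and private IP addresses must be the same as before

- The directory layout of the rebuilt operating system must match the original

- Memory and CPU must be no lower than the original configuration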

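Linux server configuration on node 2

Time zone

Set the OS time zone according to the customer's standard; in mainland China this is usually UTC+8, "Asia/Shanghai".

The OS time zone must be corrected before GRID is installed. Otherwise GRID picks up the wrong OS time zone, and the database and listener time zones end up wrong as well.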
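Since the time zone has to be right before GRID is installed, the check could be scripted rather than read by eye. The following is a minimal sketch, not part of the original procedure; the function names `current_timezone` and `timezone_matches` are hypothetical, and the parsing assumes the `Time zone:` label shown in the `timedatectl status` output in this article.

```shell
# Print the zone name found in `timedatectl status` output read from stdin.
# Assumes a line of the form "Time zone: Asia/Shanghai (CST, +0800)".
current_timezone() {
    awk -F': *' '/Time zone:/ { split($2, parts, " "); print parts[1] }'
}

# Return 0 when the zone read from stdin equals the expected zone ($1).
timezone_matches() {
    [ "$(current_timezone)" = "$1" ]
}

# Example call on the node being rebuilt:
#   timedatectl status | timezone_matches "Asia/Shanghai" || echo "fix the time zone first"
```

Reading the status text from stdin keeps the functions testable on any machine, including ones without `timedatectl`.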

[root@orcl02:/root]$ timedatectl status

Local time: Sat 2024-08-17 07:48:47 CST

Universal time: Fri 2024-08-16 23:48:47 UTC

RTC time: Fri 2024-08-16 23:48:46

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: no

RTC in local TZ: no

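DST active: n/a

Change the OS time zone:

timedatectl set-timezone "Asia/Shanghai"

Checking that the IP addresses match the originals

Use the /etc/hosts file on node 1 to find the IP addresses for node 2's network interfaces. Make sure node 2 has two NICs and that its public and private IPs are the same as before.

ip addr

Setting the hostname

Use the /etc/hosts file on node 1 to find node 2's hostname.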
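Looking up the name that belongs to a given address in a copy of node 1's hosts file can be done with a one-line awk filter. This is only an illustrative sketch; `host_for_ip` is a hypothetical helper name, and it assumes the simple "IP name" layout used by the hosts entries in this article.

```shell
# Print the first host name mapped to IPv4 address $2 in hosts file $1.
# Comment lines (starting with #) never match because their first field is not an IP.
host_for_ip() {
    awk -v ip="$2" '$1 == ip { print $2; exit }' "$1"
}

# Example, run against a copy of node 1's file (the path is an assumption):
#   host_for_ip /tmp/hosts.orcl01 192.168.40.201
```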

hostnamectl set-hostname orcl02

exec bash

Configuring the hosts file

Based on the /etc/hosts file on node 1, append the following to /etc/hosts on node 2:

cat >> /etc/hosts << EOF

## OracleBegin

## RAC1 IP's: orcl01

## RAC1 Public IP

192.168.40.200 orcl01

## RAC1 Virtual IP

192.168.40.202 orcl01-vip

## RAC1 Private IP

192.168.183.200 orcl01-priv

## RAC2 IP's: orcl02

## RAC2 Public IP

192.168.40.201 orcl02

## RAC2 Virtual IP

192.168.40.203 orcl02-vip

## RAC2 Private IP

192.168.183.201 orcl02-priv

## SCAN IP

192.168.40.205 orcl-scan

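EOF

Setting the locale

echo "export LANG=en_US" >> ~/.bash_profile

source ~/.bash_profile

Creating users, groups, and directories

# On node 1, look up the grid and oracle users; the uid and gid on node 2 should match node 1 as closely as possible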

id grid

id oracle
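Comparing the two `id` lines by hand is error-prone, so the uid and gid could be extracted and compared with a small helper. This is a sketch under the assumption that `id` prints the usual `uid=N(name) gid=N(name) groups=...` format; `id_pair` and `same_ids` are hypothetical names.

```shell
# Print "uid:gid" parsed from one line of `id` output, for example
# "uid=54321(grid) gid=54321(oinstall) groups=..." gives "54321:54321".
id_pair() {
    printf '%s\n' "$1" | sed -n 's/^uid=\([0-9]*\)[^ ]* gid=\([0-9]*\).*/\1:\2/p'
}

# Return 0 when two `id` output lines carry the same uid and primary gid.
same_ids() {
    [ -n "$(id_pair "$1")" ] && [ "$(id_pair "$1")" = "$(id_pair "$2")" ]
}

# Example on node 2, pasting the line printed by `id grid` on node 1:
#   same_ids 'uid=54321(grid) gid=54321(oinstall) groups=...' "$(id grid)" || echo "grid uid/gid differ"
```

Only the uid and primary gid are compared here; supplementary groups would need a separate check.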

# On node 2, create the grid and oracle users

# Create the groups and users

/usr/sbin/groupadd -g 54321 oinstall

/usr/sbin/groupadd -g 54322 dba

/usr/sbin/groupadd -g 54323 oper

/usr/sbin/groupadd -g 54329 asmadmin

/usr/sbin/groupadd -g 54328 asmoper

/usr/sbin/groupadd -g 54327 asmdba

/usr/sbin/useradd -u 60001 -g oinstall -G dba,asmdba,oper oracle

/usr/sbin/useradd -u 54321 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid

echo grid | passwd --stdin grid

echo oracle | passwd --stdin oracle

mkdir -p /u01/app/grid

mkdir -p /u01/app/11.2.0/grid

chown -R grid:oinstall /u01/app/grid

chown -R grid:oinstall /u01/app/11.2.0/grid

mkdir -p /u01/app/oracle

mkdir -p /u01/app/oracle/product/11.2.0/db

chown -R oracle:oinstall /u01/app/oracle

chown -R oracle:oinstall /u01/app/oracle/product/11.2.0/db

mkdir -p /u01/app/oraInventory

chown -R grid:oinstall /u01/app/oraInventory

chmod -R 775 /u01

Configuring the yum repository and installing packages

# Configure a local yum repository

mount /dev/cdrom /mnt

cd /etc/yum.repos.d

mkdir bk

mv *.repo bk/

cat > /etc/yum.repos.d/Centos7.repo << "EOF"

[local]

name=Centos7

baseurl=file:///mnt

gpgcheck=0

enabled=1

EOF

cat /etc/yum.repos.d/Centos7.repo

# Install the required packages

yum -y install autoconf

yum -y install automake

yum -y install binutils

yum -y install binutils-devel

yum -y install bison

yum -y install cpp

yum -y install dos2unix

yum -y install gcc

yum -y install gcc-c++

yum -y install lrzsz

yum -y install python-devel

yum -y install compat-db*

yum -y install compat-gcc-34

yum -y install compat-gcc-34-c++

yum -y install compat-libcap1

yum -y install compat-libstdc++-33

yum -y install compat-libstdc++-33.i686

yum -y install glibc-*

yum -y install glibc-*.i686

yum -y install libXpm-*.i686

yum -y install libXp.so.6

yum -y install libXt.so.6

yum -y install libXtst.so.6

yum -y install libXext

yum -y install libXext.i686

yum -y install libXtst

yum -y install libXtst.i686

yum -y install libX11

yum -y install libX11.i686

yum -y install libXau

yum -y install libXau.i686

yum -y install libxcb

yum -y install libxcb.i686

yum -y install libXi

yum -y install libXi.i686

yum -y install libXtst

yum -y install libstdc++-docs

yum -y install libgcc_s.so.1

yum -y install libstdc++.i686

yum -y install libstdc++-devel

yum -y install libstdc++-devel.i686

yum -y install libaio

yum -y install libaio.i686

yum -y install libaio-devel

yum -y install libaio-devel.i686

yum -y install libXp

yum -y install libaio-devel

yum -y install numactl

yum -y install numactl-devel

yum -y install make

yum -y install sysstat

yum -y install unixODBC

yum -y install unixODBC-devel

yum -y install elfutils-libelf-devel-0.97

yum -y install elfutils-libelf-devel

yum -y install redhat-lsb-core

yum -y install unzip

yum -y install *vnc*

yum -y install perl-Env

# Install the Linux graphical environment

yum groupinstall -y "X Window System"

yum groupinstall -y "GNOME Desktop" "Graphical Administration Tools"

# Check which packages are installed

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n'

Installing dependency packages

rpm -ivh compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

rpm -ivh pdksh-5.2.14-37.el5_8.1.x86_64.rpm

If the install reports a conflict with ksh, remove ksh first and then install the pdksh package:

rpm -evh ksh-20120801-139.el7.x86_64

rpm -ivh pdksh-5.2.14-37.el5.x86_64.rpm

Adjusting system parameters

Resource limits

vi /etc/security/limits.conf

#ORACLE SETTING

grid soft nproc 16384

grid hard nproc 16384

grid soft nofile 65536

grid hard nofile 65536

grid soft stack 32768

grid hard stack 32768

oracle soft nproc 16384

oracle hard nproc 16384

oracle soft nofile 65536

oracle hard nofile 65536

oracle soft stack 32768

oracle hard stack 32768

oracle hard memlock 2000000

oracle soft memlock 2000000

ulimit -a

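# nproc: limit on the number of processes a user may create

# nofile: file descriptor limit, i.e. how many files a single process may have open

# memlock: maximum memory the oracle user may lock, in KB

This environment has 4 GB of physical memory (1 GB for grid, 1 GB for the operating system, 2 GB left for oracle); memlock must stay below physical memory.

Setting nproc

echo "* - nproc 16384" > /etc/security/limits.d/90-nproc.conf

Enabling per-user resource limits

echo "session required pam_limits.so" >> /etc/pam.d/login

cat /etc/pam.d/login

Kernel parameters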

vi /etc/sysctl.conf

#ORACLE SETTING

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

kernel.panic_on_oops = 1

vm.nr_hugepages = 868

kernel.shmmax = 1610612736

kernel.shmall = 393216

kernel.shmmni = 4096

sysctl -p

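Parameter notes:

kernel.panic_on_oops = 1

Controls whether the kernel panics on an oops instead of trying to continue.

vm.nr_hugepages = 868

Huge pages; must be set when physical memory exceeds 8 GB.

Rule of thumb: sga_max_size / 2 MB + (100 to 500) = 1536 MB / 2 MB + 100 = 868

The huge page pool must be larger than sga_max_size.

kernel.shmmax = 1610612736

Maximum size of a single shared memory segment; it must be able to hold the entire SGA, so it has to exceed the SGA size.

SGA + PGA < 80% of physical memory

SGA_max < 80% of that 80%

PGA_max < 20% of that 80%

kernel.shmall = 393216

Total shared memory in pages = kernel.shmmax / PAGESIZE

getconf PAGESIZE    # page size in bytes: 4096

kernel.shmmni = 4096

Maximum number of shared memory segments; each instance uses one shared memory segment.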
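The two calculations in the notes above can be reproduced with shell arithmetic. This is a small worked sketch using the article's own numbers (a 1536 MB SGA, a margin of 100 pages, 2 MB huge pages, a 4096-byte page size); the function names `hugepages_for_sga` and `shmall_pages` are hypothetical.

```shell
# Huge pages for an SGA of $1 MB with 2 MB pages, plus a safety margin of $2 pages.
hugepages_for_sga() {
    echo $(( $1 / 2 + $2 ))
}

# kernel.shmall in pages: shmmax in bytes ($1) divided by the page size in bytes ($2).
shmall_pages() {
    echo $(( $1 / $2 ))
}

hugepages_for_sga 1536 100        # prints 868
shmall_pages 1610612736 4096      # prints 393216
```

Both results match the values written to /etc/sysctl.conf in this article.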

# Physical memory (KB)

os_memory_total=$(awk '/MemTotal/{print $2}' /proc/meminfo)

# System page size, used to convert memory totals into pages

pagesize=$(getconf PAGE_SIZE)

min_free_kbytes=$(( os_memory_total / 250 ))

shmall=$(( (os_memory_total - 1) * 1024 / pagesize ))

shmmax=$(( os_memory_total * 1024 - 1 ))

# If shmall is below 2097152, set it to 2097152

(( shmall < 2097152 )) && shmall=2097152

# If shmmax is below 4294967295, set it to 4294967295

(( shmmax < 4294967295 )) && shmmax=4294967295

Disabling transparent huge pages

cat /proc/meminfo

cat /sys/kernel/mm/transparent_hugepage/defrag

[always] madvise never

cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never

vi /etc/rc.d/rc.local

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

if test -f /sys/kernel/mm/transparent_hugepage/defrag; then

echo never > /sys/kernel/mm/transparent_hugepage/defrag

fi

chmod +x /etc/rc.d/rc.local
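The kernel marks the active transparent huge page mode with square brackets, as in the `[always] madvise never` lines above. A short helper can pull out the active mode so the setting can be verified after the next reboot. This is a sketch; `thp_active_mode` is a hypothetical name.

```shell
# Print the bracketed (active) mode from a THP status line
# such as "[always] madvise never" or "always madvise [never]".
thp_active_mode() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)\].*/\1/p'
}

# After the rc.local change has taken effect, both files should report "never":
#   thp_active_mode "$(cat /sys/kernel/mm/transparent_hugepage/enabled)"
#   thp_active_mode "$(cat /sys/kernel/mm/transparent_hugepage/defrag)"
```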

Disabling NUMA

numactl --hardware

vim /etc/default/grub

GRUB_CMDLINE_LINUX="crashkernel=auto rhgb quiet numa=off"

grub2-mkconfig -o /boot/grub2/grub.cfg

#vi /boot/grub/grub.conf

#kernel /boot/vmlinuz-2.6.18-128.1.16.0.1.el5 root=LABEL=DBSYS ro bootarea=dbsys rhgb quiet console=ttyS0,115200n8 console=tty1 crashkernel=128M@16M numa=off
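The `numa=off` argument only takes effect after a reboot with the regenerated GRUB configuration, so it is worth confirming that the running kernel actually received it. A minimal sketch, with the hypothetical helper name `cmdline_has_numa_off`:

```shell
# Return 0 when the kernel command line in $1 contains the numa=off argument
# as a whole word (padding with spaces avoids partial matches).
cmdline_has_numa_off() {
    case " $1 " in
        *" numa=off "*) return 0 ;;
        *) return 1 ;;
    esac
}

# After rebooting:
#   cmdline_has_numa_off "$(cat /proc/cmdline)" && echo "NUMA disabled at boot"
```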

Booting to text mode

systemctl set-default multi-user.target

Shared memory (/dev/shm)

[root@racdb01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 93G 1.9G 91G 2% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

# /dev/shm defaults to half of physical memory; make it somewhat larger

echo "tmpfs /dev/shm tmpfs defaults,size=3072m 0 0" >>/etc/fstab

mount -o remount /dev/shm

[root@racdb01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 93G 2.3G 91G 3% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 3.0G 0 3.0G 0% /dev/shm

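Checking or configuring swap space

If swap >= 2 GB, skip this step.

If swap = 0, do the following:

# Create the file /swapfile at the required size; it is formatted as swap below

dd if=/dev/zero of=/swapfile bs=1M count=2048

# Set the file permissions to 0600

chmod 600 /swapfile

# Format the file as swap

mkswap /swapfile

# Enable the swap file

swapon /swapfile

# Add the swap file to /etc/fstab so it is activated automatically after a reboot

echo "/swapfile none swap sw 0 0" >>/etc/fstab

mount -a

# Check memory: swap is now present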

[root@racdb03 tmp]# free -g

total used free shared buff/cache available

Mem: 3 1 1 0 0 1

Swap: 3 0 3
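The skip-or-create decision for swap can be expressed as a small function over the SwapTotal value in KB. This is a sketch, not the author's script: `swap_action` is a hypothetical name, and the 2047 MB threshold is an assumption chosen because a 2 GB swap file reports slightly less than 2 GB once its header is accounted for (the installer output later in this article shows "Actual 2047 MB").

```shell
# Decide what to do about swap given SwapTotal in KB ($1):
#   create  no swap at all
#   skip    roughly 2 GB or more (assumed threshold: 2047 MB)
#   review  some swap exists but less than the threshold
swap_action() {
    if [ "$1" -eq 0 ]; then
        echo create
    elif [ "$1" -ge $((2047 * 1024)) ]; then
        echo skip
    else
        echo review
    fi
}

# On a live system:
#   swap_action "$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)"
```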

Security settings

# 1. Disable SELinux

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

setenforce 0

# 2. Stop the firewall

systemctl stop firewalld

systemctl disable firewalld

Disabling NTP

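# Stop the NTP service

systemctl stop ntpd

systemctl disable ntpd

# Move the configuration file out of the way

mv /etc/ntp.conf /etc/ntp.conf_bak_20240521

# The clocks on all hosts must agree; skip this if they already do

date -s 'Sat Aug 26 23:18:15 CST 2023'

Disabling DNS

This is a test environment without DNS, so moving resolv.conf aside is enough. Alternatively, simply ignore the resulting check failure.

mv /etc/resolv.conf /etc/resolv.conf_bak

Environment variables for the grid and oracle users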

grid user

vi /home/grid/.bash_profile and add the following:

# OracleBegin

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export ORACLE_TERM=xterm

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=+ASM2

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysasm'

export PS1="[`whoami`@`hostname`:"'$PWD]$ '

alias sqlplus='rlwrap sqlplus'

alias asmcmd='rlwrap asmcmd'

alias adrci='rlwrap adrci'

oracle user

vi /home/oracle/.bash_profile and add the following:

# OracleBegin

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=/u01/app/oracle/product/11.2.0/db

export ORACLE_TERM=xterm

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32:/lib:/usr/lib

export ORACLE_SID=topnet2

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$ORACLE_HOME/perl/bin:$PATH

export PERL5LIB=$ORACLE_HOME/perl/lib

alias sas='sqlplus / as sysdba'

alias awr='sqlplus / as sysdba @?/rdbms/admin/awrrpt'

alias ash='sqlplus / as sysdba @?/rdbms/admin/ashrpt'

alias alert='vi $ORACLE_BASE/diag/rdbms/*/$ORACLE_SID/trace/alert_$ORACLE_SID.log'

export PS1="[`whoami`@`hostname`:"'$PWD]$ '

alias sqlplus='rlwrap sqlplus'

alias rman='rlwrap rman'

alias adrci='rlwrap adrci'

Configuring SSH trust


# Download the script

wget https://gitcode.net/myneth/tools/-/raw/master/tool/ssh.sh

chmod +x ssh.sh

# Establish SSH trust

./ssh.sh -user grid -hosts "orcl01 orcl02" -advanced -exverify -confirm

./ssh.sh -user oracle -hosts "orcl01 orcl02" -advanced -exverify -confirm

chmod 600 /home/grid/.ssh/config

chmod 600 /home/oracle/.ssh/config

# Verify SSH trust

su - grid

for i in orcl{01,02};do

ssh $i hostname

done

su - oracle

for i in orcl{01,02};do

ssh $i hostname

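done

Procedure

Key point: before the node joins the cluster, the server must be configured exactly as it was when RAC was first installed; otherwise all sorts of errors will appear.

Listing the cluster nodes

# List the cluster nodes

su - grid

olsnodes

Output: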

[grid@orcl01:/home/grid]$ olsnodes

orcl01

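orcl02

Removing the reinstalled host's OCR entry

# Remove the reinstalled host's OCR entry

su - root

$ORACLE_HOME/bin/crsctl delete node -n orcl02

Parameter notes:

-n: the name of the node to delete, i.e. its hostname

# List the cluster nodes again by running olsnodes on the surviving node; the reinstalled host should no longer appear

su - grid

olsnodes

Output: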

[root@orcl01:/root]$ /u01/app/11.2.0/grid/bin/crsctl delete node -n orcl02

CRS-4661: Node orcl02 successfully deleted.


[root@orcl01:/root]$ su - grid

[grid@orcl01:/home/grid]$ olsnodes

orcl01

Removing the reinstalled host's VIP from the OCR

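After removing node 2's VIP, it is best to restart the network service; otherwise the IP address is not released at the operating-system level, and a restart can be used to clear node 2's address. In production, where restarting the network service would affect the business, restarting the listener can be used instead to release the VIP and the SCAN IP.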
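Whether the old VIP is still held at the OS level can be checked by searching the interface address list. The following is a sketch only; `ip_is_bound` is a hypothetical helper, and it assumes the one-line format printed by `ip -o -4 addr show`, where the address follows the word `inet`.

```shell
# Return 0 when IPv4 address $1 appears in `ip -o -4 addr show` output on stdin.
# The address is compared exactly, so 192.168.40.20 does not match 192.168.40.203.
ip_is_bound() {
    awk -v ip="$1" '
        { for (i = 1; i < NF; i++)
              if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == ip) found = 1 } }
        END { exit (found ? 0 : 1) }'
}

# Example on the surviving node, using node 2's VIP from this article:
#   ip -o -4 addr show | ip_is_bound 192.168.40.203 && echo "VIP still bound at OS level"
```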

su - root

$ORACLE_HOME/bin/srvctl remove vip -i orcl02 -v -f

Parameter notes:

-i: the node name, i.e. the hostname

Output:

[root@orcl01:/root]$ /u01/app/11.2.0/grid/bin/srvctl remove vip -i orcl02 -v -f

Successfully removed VIP orcl02.

Removing the reinstalled host from the GI and DB home inventories

Updating the GI inventory

The file involved is /u01/app/oraInventory/ContentsXML/inventory.xml

su - grid

cd $ORACLE_HOME/oui/bin

./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=orcl01 -silent -local

# Parameter notes

CLUSTER_NODES: the hostname(s) of the surviving node(s)

Output:

[grid@orcl01:/u01/app/11.2.0/grid/oui/bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=orcl01 -silent -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 2047 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

Updating the DB inventory

The file involved is /u01/app/oraInventory/ContentsXML/inventory.xml

su - oracle

cd $ORACLE_HOME/oui/bin

./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=orcl01 -silent -local

# Parameter notes

CLUSTER_NODES: the hostname(s) of the surviving node(s)

Output:

[oracle@orcl01:/u01/app/oracle/product/11.2.0/db/oui/bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=orcl01 -silent -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 2047 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

Checking the inventory file

Only orcl01 is listed now; orcl02 no longer appears.
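Rather than scanning the XML by eye, the node names can be extracted from the file and compared with the expected list. This is a minimal sketch; `inventory_nodes` is a hypothetical helper, and it assumes one `<NODE NAME="..."/>` element per line, as in the inventory.xml listing in this article.

```shell
# Print the distinct NODE NAME values found in an inventory.xml file ($1), one per line.
inventory_nodes() {
    sed -n 's/.*<NODE NAME="\([^"]*\)".*/\1/p' "$1" | sort -u
}

# Example on the surviving node; the expected output here is the single line "orcl01":
#   inventory_nodes /u01/app/oraInventory/ContentsXML/inventory.xml
```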

[grid@orcl01:/u01/app/11.2.0/grid/oui/bin]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2013, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.4.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/app/11.2.0/grid" TYPE="O" IDX="1" CRS="true">

<NODE_LIST>

<NODE NAME="orcl01"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraDb11g_home1" LOC="/u01/app/oracle/product/11.2.0/db" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="orcl01"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraHome1" LOC="/ogg213_Micro/ogg213_soft" TYPE="O" IDX="3"/>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

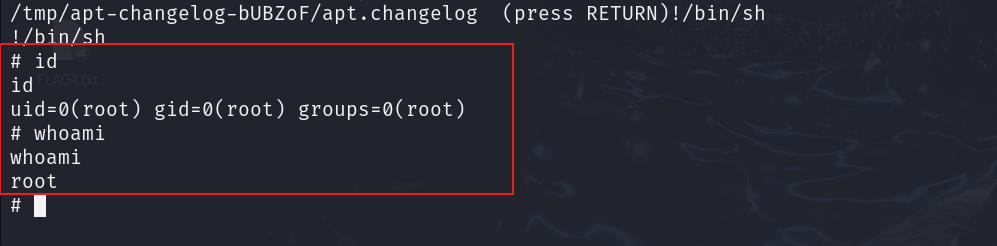

</INVENTORY>CVU检查

su - grid

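/u01/app/11.2.0/grid/bin/cluvfy stage -pre nodeadd -n orcl02 -verbose

Review the verification output. A few failed items can be ignored, such as those about resolv.conf and other name-resolution configuration.

Running addNode.sh on node 1 to add the GI node

The grid and oracle base directories, the installation (home) directories, and the inventory directory must already exist on node 2 with correct ownership and permissions; otherwise addNode reports that a directory does not exist or cannot be created.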
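A quick pre-check on node 2 can confirm that the directories exist before addNode.sh is started. This sketch only tests existence; ownership and permissions still need to be verified separately. `missing_dirs` is a hypothetical helper name.

```shell
# Print every argument that is not an existing directory.
# Returns 1 if at least one directory is missing, 0 otherwise.
missing_dirs() {
    rc=0
    for d in "$@"; do
        if [ ! -d "$d" ]; then
            echo "$d"
            rc=1
        fi
    done
    return $rc
}

# Example on node 2, using the paths created earlier in this article:
#   missing_dirs /u01/app/grid /u01/app/11.2.0/grid /u01/app/oracle \
#       /u01/app/oracle/product/11.2.0/db /u01/app/oraInventory
```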

su - grid

cd $ORACLE_HOME/oui/bin

# Ignore the earlier verification failures

# Add the node

export IGNORE_PREADDNODE_CHECKS=Y

./addNode.sh -silent "CLUSTER_NEW_NODES={orcl02}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={orcl02-vip}"

参数说明:

$ORACLE_HOME 即$GRID_HOME

CLUSTER_NEW_VIRTUAL_HOSTNAMES 参数值从幸存节点/etc/hosts中获取或者

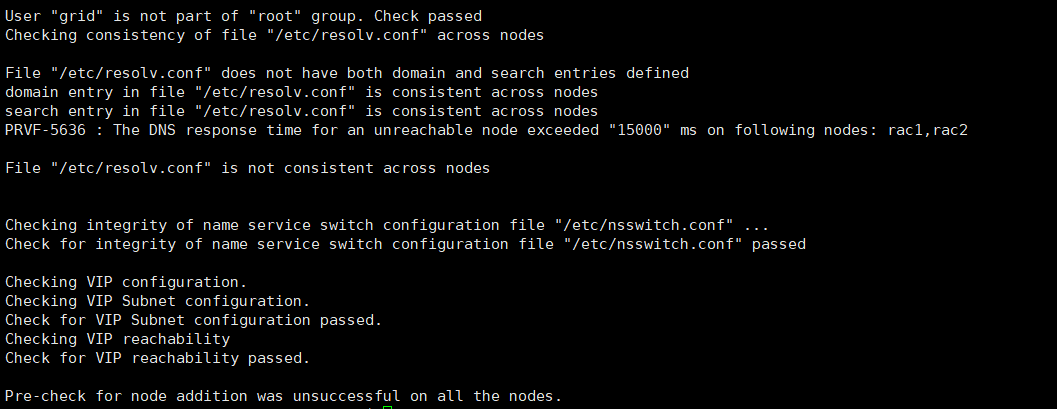

olsnodes -n -i 获取vip名称若不设置IGNORE_PREADDNODE_CHECKS=Y 忽略之前的校验失败选项,会有如下报错:

忽略之前的校验失败选项,addnode成功,如下:

Instantiating scripts for add node (Saturday, August 17, 2024 8:31:47 AM CST)

. 1% Done.

Instantiation of add node scripts complete

Copying to remote nodes (Saturday, August 17, 2024 8:31:50 AM CST)

............................................................................................... 96% Done.

Home copied to new nodes

Saving inventory on nodes (Saturday, August 17, 2024 8:33:11 AM CST)

. 100% Done.

Save inventory complete

WARNING:A new inventory has been created on one or more nodes in this session. However, it has not yet been registered as the central inventory of this system.

To register the new inventory please run the script at '/u01/app/oraInventory/orainstRoot.sh' with root privileges on nodes 'orcl02'.

If you do not register the inventory, you may not be able to update or patch the products you installed.

The following configuration scripts need to be executed as the "root" user in each new cluster node. Each script in the list below is followed by a list of nodes.

/u01/app/oraInventory/orainstRoot.sh #On nodes orcl02

/u01/app/11.2.0/grid/root.sh #On nodes orcl02

To execute the configuration scripts:

1. Open a terminal window

2. Log in as "root"

3. Run the scripts in each cluster node

The Cluster Node Addition of /u01/app/11.2.0/grid was successful.

Please check '/tmp/silentInstall.log' for more details.

Running the scripts on node 2 to start CRS

Starting CRS

su - root

/u01/app/oraInventory/orainstRoot.sh

/u01/app/11.2.0/grid/root.sh

CRS startup logs are located in /u01/app/11.2.0/grid/install/

Checking the cluster status

su - grid

crsctl stat res -t

Output:

[grid@orcl02:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCH.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.DATA.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.LISTENER.lsnr

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.OCR.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.asm

ONLINE ONLINE orcl01 Started

ONLINE ONLINE orcl02 Started

ora.gsd

OFFLINE OFFLINE orcl01

OFFLINE OFFLINE orcl02

ora.net1.network

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.ons

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE orcl01

ora.cvu

1 ONLINE ONLINE orcl01

ora.oc4j

1 ONLINE ONLINE orcl01

ora.orcl01.vip

1 ONLINE ONLINE orcl01

ora.orcl02.vip

1 ONLINE ONLINE orcl02

ora.scan1.vip

1 ONLINE ONLINE orcl01

ora.topnet.db

1 ONLINE ONLINE orcl01 Open

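2 ONLINE OFFLINE

Running addNode.sh on node 1 to add the DB node

Adding the DB node with addNode.sh

su - oracle

/u01/app/oracle/product/11.2.0/db/oui/bin/addNode.sh -silent "CLUSTER_NEW_NODES={orcl02}"

Parameter notes:

CLUSTER_NEW_NODES: take the value from /etc/hosts on the surviving node, or use olsnodes -n, which shows node numbers and node names

Output: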

Instantiating scripts for add node (Sunday, August 18, 2024 6:27:06 AM CST)

. 1% Done.

Instantiation of add node scripts complete

Copying to remote nodes (Sunday, August 18, 2024 6:27:09 AM CST)

............................................................................................... 96% Done.

Home copied to new nodes

Saving inventory on nodes (Sunday, August 18, 2024 6:29:18 AM CST)

. 100% Done.

Save inventory complete

WARNING:

The following configuration scripts need to be executed as the "root" user in each new cluster node. Each script in the list below is followed by a list of nodes.

/u01/app/oracle/product/11.2.0/db/root.sh #On nodes orcl02

To execute the configuration scripts:

1. Open a terminal window

2. Log in as "root"

3. Run the scripts in each cluster node

The Cluster Node Addition of /u01/app/oracle/product/11.2.0/db was successful.

Please check '/tmp/silentInstall.log' for more details.

Running the root.sh script

On node 2, the node being added, run root.sh as root:

su - root

/u01/app/oracle/product/11.2.0/db/root.sh

Output:

[root@orcl02:/root]$ /u01/app/oracle/product/11.2.0/db/root.sh

Check /u01/app/oracle/product/11.2.0/db/install/root_orcl02_2024-08-18_06-37-25.log for the output of root script

[root@orcl02:/root]$ tail -300f /u01/app/oracle/product/11.2.0/db/install/root_orcl02_2024-08-18_06-37-25.log

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/db

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

Finished product-specific root actions.

Troubleshooting

# Problem

Running root.sh on node 2 reports the following error:

[root@orcl02:/root]$ /u01/app/oracle/product/11.2.0/db/root.sh

Check /u01/app/oracle/product/11.2.0/db/install/root_orcl02_2024-08-18_06-31-43.log for the output of root script

[root@orcl02:/root]$ tail -300f /u01/app/oracle/product/11.2.0/db/install/root_orcl02_2024-08-18_06-31-43.log

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/db

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

/bin/chown: cannot access ‘/u01/app/oracle/product/11.2.0/db/bin/nmhs’: No such file or directory

/bin/chmod: cannot access ‘/u01/app/oracle/product/11.2.0/db/bin/nmhs’: No such file or directory

Finished product-specific root actions.

Finished product-specific root actions.

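# Solution

With 11g on RHEL 7, the nmhs file reported here is missing; it can be copied manually from node 1 and its ownership then corrected.

Node 1:

su - root

scp /u01/app/oracle/product/11.2.0/db/bin/nmhs root@192.168.40.201:/u01/app/oracle/product/11.2.0/db/bin/nmhs

Node 2:

su - root

chown -R root:oinstall /u01/app/oracle/product/11.2.0/db/bin/nmhs

Starting the node 2 instance

Only the oraInventory information was cleaned up when simulating the failure, so the cluster still holds the instance and database configuration for node 2; the instance can simply be started.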

Renaming the parameter file

su - oracle

cd /u01/app/oracle/product/11.2.0/db/dbs

mv inittopnet1.ora inittopnet2.ora

Renaming the password file

su - oracle

cd /u01/app/oracle/product/11.2.0/db/dbs

mv orapwtopnet1 orapwtopnet2

Starting the node 2 instance

su - grid

srvctl start database -d topnet

Checking the cluster status

Output:

[root@orcl01:/root]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCH.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.DATA.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.LISTENER.lsnr

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.OCR.dg

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.asm

ONLINE ONLINE orcl01 Started

ONLINE ONLINE orcl02 Started

ora.gsd

OFFLINE OFFLINE orcl01

OFFLINE OFFLINE orcl02

ora.net1.network

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

ora.ons

ONLINE ONLINE orcl01

ONLINE ONLINE orcl02

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE orcl02

ora.cvu

1 ONLINE ONLINE orcl01

ora.oc4j

1 ONLINE ONLINE orcl01

ora.orcl01.vip

1 ONLINE ONLINE orcl01

ora.orcl02.vip

1 ONLINE ONLINE orcl02

ora.scan1.vip

1 ONLINE ONLINE orcl02

ora.topnet.db

1 ONLINE ONLINE orcl01 Open

2 ONLINE ONLINE orcl02 Open

The reinstalled node has rejoined the cluster successfully.
