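A few days ago I got my Raspberry Pi to "listen" (see "Raspberry Pi Smart Voice Assistant: ASR – SpeechRecognition+PocketSphinx"), but PocketSphinx's recognition accuracy is honestly poor. Because of its OS and a few other issues, I could never get sherpa-onnx installed on this Pi. Just as I was resigning myself to low ASR accuracy, I came across a recommendation for another speech recognition toolkit: sherpa-ncnn. Without further ado, let's install it.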

Official tutorial: Python API (sherpa 1.3 documentation)

An introductory article on CSDN: https://blog.csdn.net/lstef/article/details/139680825

1. Installation

Installing sherpa-ncnn is simple:

```bash
pip install sherpa-ncnn
```
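On a Pi with several Python versions it is easy to install into one interpreter and run scripts with another, so a quick sanity check helps. This is a sketch using only the standard library; the module list is an assumption based on the imports used in section 4 (note that the pip package `sherpa-ncnn` is imported as `sherpa_ncnn`).

```python
import importlib.util

# Import names to check: the pip package "sherpa-ncnn" is imported as
# "sherpa_ncnn", and sounddevice is needed for the microphone example.
MODULES = ("sherpa_ncnn", "sounddevice")


def is_module_available(name):
    """Return True if `name` can be found by the current Python interpreter."""
    # find_spec locates a top-level module without importing it
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    for mod in MODULES:
        print(f"{mod}: {'OK' if is_module_available(mod) else 'missing'}")
```

Run it with the same `python3` you will use for the recognizer; "missing" means `pip` installed into a different interpreter.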

Alternatively, you can build it from source:

```bash
git clone https://github.com/k2-fsa/sherpa-ncnn
cd sherpa-ncnn
mkdir build
cd build
cmake \
  -D SHERPA_NCNN_ENABLE_PYTHON=ON \
  -D SHERPA_NCNN_ENABLE_PORTAUDIO=OFF \
  -D BUILD_SHARED_LIBS=ON \
  -DCMAKE_C_FLAGS="-march=armv7-a -mfloat-abi=hard -mfpu=neon" \
  -DCMAKE_CXX_FLAGS="-march=armv7-a -mfloat-abi=hard -mfpu=neon" \
  ..
make -j6
```

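I have verified both methods myself (the source build under Python 3.9, pip install under Python 3.7). The -march=armv7-a -mfloat-abi=hard -mfpu=neon flags target a 32-bit (armv7) Raspberry Pi OS; -mfloat-abi and -mfpu are 32-bit ARM options that an aarch64 compiler does not accept, so leave out the two CMAKE_*_FLAGS lines on a 64-bit system.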
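Because the ARM compiler flags in the cmake command only make sense on a 32-bit armv7 system, a setup script could decide whether to add them. A sketch, assuming that `platform.machine()` reports `armv7l` on 32-bit Raspberry Pi OS; note it reports the kernel architecture, which on some setups (64-bit kernel, 32-bit userland) differs from what the compiler targets. The helper name `cmake_args` is hypothetical.

```python
import platform
import shlex
from typing import List

# Compiler flags from the manual build above; -mfloat-abi and -mfpu are
# 32-bit ARM options, so they are only added on armv7 machines.
ARMV7_FLAGS = "-march=armv7-a -mfloat-abi=hard -mfpu=neon"


def cmake_args(machine: str) -> List[str]:
    """Return the cmake argument list for a given platform.machine() value."""
    args = [
        "cmake",
        "-D", "SHERPA_NCNN_ENABLE_PYTHON=ON",
        "-D", "SHERPA_NCNN_ENABLE_PORTAUDIO=OFF",
        "-D", "BUILD_SHARED_LIBS=ON",
    ]
    # Assumption: 32-bit Raspberry Pi OS reports "armv7l", 64-bit reports "aarch64"
    if machine.startswith("armv7"):
        args.append("-DCMAKE_C_FLAGS=" + ARMV7_FLAGS)
        args.append("-DCMAKE_CXX_FLAGS=" + ARMV7_FLAGS)
    args.append("..")  # the source tree is the parent of build/
    return args


if __name__ == "__main__":
    # Print a copy-pasteable command for the current machine
    print(" ".join(shlex.quote(a) for a in cmake_args(platform.machine())))
```

Passing the list straight to `subprocess.run(..., cwd="build")` also works, since each flag string is already a single argument.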

2. Download a model

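Once installation succeeds, download a pre-trained model from:

Pre-trained models (sherpa 1.3 documentation)

As shown in the figure, I went with the Small models, a category that itself offers four models to choose from.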

Download steps:

```bash
cd /path/to/sherpa-ncnn
wget https://github.com/k2-fsa/sherpa-ncnn/releases/download/models/sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16.tar.bz2
tar xvf sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16.tar.bz2
```
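If you prefer to script the download, the wget + tar steps can be done from Python with the standard library. This is a sketch: the URL pattern is copied from the wget command, and it assumes (as the paths in section 3 do) that the archive unpacks into a directory with the same name as the archive. `fetch_and_extract` is a hypothetical helper name.

```python
import tarfile
import urllib.request
from pathlib import Path

RELEASE_BASE = "https://github.com/k2-fsa/sherpa-ncnn/releases/download/models"
MODEL = "sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16"


def model_url(name: str) -> str:
    """URL of the .tar.bz2 archive, following the wget command above."""
    return f"{RELEASE_BASE}/{name}.tar.bz2"


def fetch_and_extract(name: str, dest: str = ".") -> Path:
    """Download the archive (unless already present) and unpack it into dest."""
    dest_dir = Path(dest)
    archive = dest_dir / f"{name}.tar.bz2"
    if not archive.exists():
        urllib.request.urlretrieve(model_url(name), archive)
    # "r:bz2" reads the same .tar.bz2 format that `tar xvf` unpacks
    with tarfile.open(archive, "r:bz2") as tar:
        tar.extractall(dest_dir)
    # Assumption: the archive's top-level directory is named after the model
    return dest_dir / name
```

Calling `fetch_and_extract(MODEL)` from `/path/to/sherpa-ncnn` should leave the model directory where the test commands expect it; an already downloaded archive is reused instead of fetched again.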

3. Testing

Once the archive is downloaded and extracted, you can test it right from the same directory:

```bash
cd /path/to/sherpa-ncnn

# Positional arguments: tokens.txt, the encoder/decoder/joiner .param/.bin pairs,
# a wav file, the number of threads (2 here) and the decoding method.
for method in greedy_search modified_beam_search; do
  ./build/bin/sherpa-ncnn \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/tokens.txt \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/encoder_jit_trace-pnnx.ncnn.param \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/encoder_jit_trace-pnnx.ncnn.bin \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/decoder_jit_trace-pnnx.ncnn.param \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/decoder_jit_trace-pnnx.ncnn.bin \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/joiner_jit_trace-pnnx.ncnn.param \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/joiner_jit_trace-pnnx.ncnn.bin \
    ./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/test_wavs/1.wav \
    2 \
    $method
done
```

Paste the commands above into a Linux terminal to see the recognition results. The ./build/bin/sherpa-ncnn executable comes from the source build in section 1.
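The same test loop can be driven from Python, which makes the positional-argument order explicit. A sketch using `subprocess`; `build_command` and `run_all` are hypothetical helper names, and the file names are exactly those in the shell loop.

```python
import subprocess
from pathlib import Path
from typing import List

MODEL_DIR = Path("sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16")
BINARY = "./build/bin/sherpa-ncnn"  # produced by the source build in section 1


def build_command(method: str, wav: str = "test_wavs/1.wav", num_threads: int = 2,
                  model_dir: Path = MODEL_DIR) -> List[str]:
    """Assemble the same positional arguments as the shell loop above."""
    files = ["tokens.txt"]
    for part in ("encoder", "decoder", "joiner"):
        # each network is an ncnn .param (graph) + .bin (weights) pair
        files.append(f"{part}_jit_trace-pnnx.ncnn.param")
        files.append(f"{part}_jit_trace-pnnx.ncnn.bin")
    args = [BINARY] + [str(model_dir / name) for name in files]
    # then: the wav file, the number of threads, and the decoding method
    args += [str(model_dir / wav), str(num_threads), method]
    return args


def run_all() -> None:
    """Decode the test wav with both decoding methods; raises if a run fails."""
    for method in ("greedy_search", "modified_beam_search"):
        subprocess.run(build_command(method), check=True)
```

Call `run_all()` from `/path/to/sherpa-ncnn`. With `check=True` a failing run raises `subprocess.CalledProcessError`, whereas the shell `for` loop silently moves on to the next method.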

4. Calling it from Python

Next comes writing your own code. The sherpa-ncnn microphone example records audio with the sounddevice module, which you need to install separately: pip install sounddevice.
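On a Raspberry Pi the default input device is often not the USB microphone you plugged in. A small helper (hypothetical, not part of sounddevice or sherpa-ncnn) can pick a device by name from the list returned by `sd.query_devices()`; it only relies on the `"name"` and `"max_input_channels"` fields of each entry.

```python
from typing import Mapping, Optional, Sequence


def find_input_device(devices: Sequence[Mapping], keyword: str) -> Optional[int]:
    """Return the index of the first recording-capable device whose name contains keyword."""
    keyword = keyword.lower()
    for index, dev in enumerate(devices):
        # output-only devices (e.g. the Pi's headphone jack) report 0 input channels
        if keyword in dev["name"].lower() and dev["max_input_channels"] > 0:
            return index
    return None
```

For example, `device = find_input_device(sd.query_devices(), "USB")`, then pass `device=device` to `sd.InputStream(...)`. "USB" is only a placeholder keyword; if nothing matches, `device=None` makes sounddevice fall back to the default input device.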

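I copied the example straight from the official tutorial. Note that it loads the sherpa-ncnn-conv-emformer-transducer-2022-12-06 model; if you downloaded the small bilingual model from section 2 instead, change the paths in create_recognizer() accordingly: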

```python
import sys

try:
    import sounddevice as sd
except ImportError:
    print("Please install sounddevice first. You can use")
    print()
    print("  pip install sounddevice")
    print()
    print("to install it")
    sys.exit(-1)

import sherpa_ncnn


def create_recognizer():
    # Please replace the model files if needed.
    # See https://k2-fsa.github.io/sherpa/ncnn/pretrained_models/index.html
    # for download links.
    recognizer = sherpa_ncnn.Recognizer(
        tokens="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/tokens.txt",
        encoder_param="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/encoder_jit_trace-pnnx.ncnn.param",
        encoder_bin="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/encoder_jit_trace-pnnx.ncnn.bin",
        decoder_param="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/decoder_jit_trace-pnnx.ncnn.param",
        decoder_bin="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/decoder_jit_trace-pnnx.ncnn.bin",
        joiner_param="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/joiner_jit_trace-pnnx.ncnn.param",
        joiner_bin="./sherpa-ncnn-conv-emformer-transducer-2022-12-06/joiner_jit_trace-pnnx.ncnn.bin",
        num_threads=4,
    )
    return recognizer


def main():
    print("Started! Please speak")
    recognizer = create_recognizer()
    sample_rate = recognizer.sample_rate
    samples_per_read = int(0.1 * sample_rate)  # 0.1 second = 100 ms
    last_result = ""
    with sd.InputStream(
        channels=1, dtype="float32", samplerate=sample_rate
    ) as s:
        while True:
            samples, _ = s.read(samples_per_read)  # a blocking read
            samples = samples.reshape(-1)  # (frames, 1) -> (frames,) for mono audio
            recognizer.accept_waveform(sample_rate, samples)
            result = recognizer.text
            # Only print when the recognized text has changed
            if last_result != result:
                last_result = result
                print(result)


if __name__ == "__main__":
    devices = sd.query_devices()
    print(devices)
    default_input_device_idx = sd.default.device[0]
    print(f'Use default device: {devices[default_input_device_idx]["name"]}')

    try:
        main()
    except KeyboardInterrupt:
        print("\nCaught Ctrl + C. Exiting")
```
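Without a microphone at hand, the same `accept_waveform` / `text` calls used in the example can be driven from a WAV file, which is handy for checking model paths. A sketch, assuming a 16-bit mono WAV such as `test_wavs/1.wav`; the 0.5 s of trailing silence is my assumption for flushing the streaming model, not something the example above does. numpy is needed anyway for `sd.InputStream`. The function names are hypothetical.

```python
import wave
from typing import Tuple

import numpy as np


def read_wav_mono_16bit(path: str) -> Tuple[np.ndarray, int]:
    """Read a 16-bit mono WAV; return (float32 samples in [-1, 1), sample_rate)."""
    with wave.open(path, "rb") as wf:
        if wf.getnchannels() != 1 or wf.getsampwidth() != 2:
            raise ValueError("expected a mono, 16-bit PCM WAV file")
        sample_rate = wf.getframerate()
        frames = wf.readframes(wf.getnframes())
    # int16 -> float32, the same dtype the microphone example requests
    samples = np.frombuffer(frames, dtype=np.int16).astype(np.float32) / 32768.0
    return samples, sample_rate


def transcribe(recognizer, path: str, chunk_seconds: float = 0.1) -> str:
    """Feed a WAV file to a recognizer in 100 ms chunks, like the microphone loop."""
    samples, sample_rate = read_wav_mono_16bit(path)
    step = int(chunk_seconds * sample_rate)
    for start in range(0, len(samples), step):
        recognizer.accept_waveform(sample_rate, samples[start:start + step])
    # Assumption: 0.5 s of trailing silence lets a streaming model emit its last tokens
    tail = np.zeros(int(0.5 * sample_rate), dtype=np.float32)
    recognizer.accept_waveform(sample_rate, tail)
    return recognizer.text


def create_small_bilingual_recognizer(num_threads: int = 4):
    """Same constructor as the example above, pointed at the model from section 2."""
    import sherpa_ncnn  # imported lazily so the helpers above work without it

    d = "./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16"
    return sherpa_ncnn.Recognizer(
        tokens=f"{d}/tokens.txt",
        encoder_param=f"{d}/encoder_jit_trace-pnnx.ncnn.param",
        encoder_bin=f"{d}/encoder_jit_trace-pnnx.ncnn.bin",
        decoder_param=f"{d}/decoder_jit_trace-pnnx.ncnn.param",
        decoder_bin=f"{d}/decoder_jit_trace-pnnx.ncnn.bin",
        joiner_param=f"{d}/joiner_jit_trace-pnnx.ncnn.param",
        joiner_bin=f"{d}/joiner_jit_trace-pnnx.ncnn.bin",
        num_threads=num_threads,
    )
```

Usage: `print(transcribe(create_small_bilingual_recognizer(), "./sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/test_wavs/1.wav"))`, run from the directory that contains the model.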

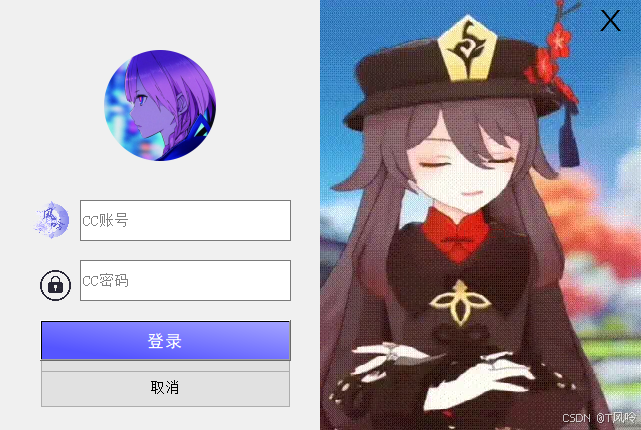

That's all there is to it. The figure below shows a demo in which I combined snowboy wake-word detection with this ASR.