Stable Cascade is an advanced text-to-image generation model developed by Stability AI.

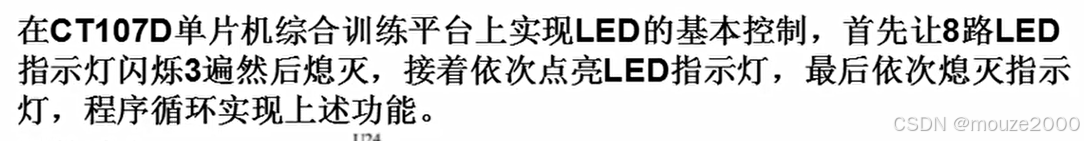

Stable Cascade consists of three models, Stage A, Stage B and Stage C. Each handles a different phase of image generation, and chained together they form a "cascade".

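Stage C generates a low-resolution latent from the text prompt. Stage B then upsamples that latent, and Stage A upsamples it once more and decodes it into pixel space to produce the final image.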
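To make the "low-resolution latent" concrete, the sketch below computes the latent tensor shapes each stage works with. It assumes, as the repository's inference code appears to, a Stage C compression factor of about 42.67 with 16 latent channels, and a Stage A compression factor of 4 with 4 channels; `latent_shapes` is a hypothetical stand-in for the repo's `calculate_latent_sizes`, and these constants are assumptions to check against the source.

```python
from math import ceil


def latent_shapes(height, width, batch_size=1,
                  stage_c_factor=42.67, stage_c_channels=16,
                  stage_a_factor=4.0, stage_a_channels=4):
    """Hypothetical helper: (N, C, H, W) latent shapes for Stage C and Stage B.

    The compression factors and channel counts are assumed defaults,
    not values read from the Stable Cascade code.
    """
    # Stage C works in a heavily compressed latent space
    stage_c = (batch_size, stage_c_channels,
               ceil(height / stage_c_factor), ceil(width / stage_c_factor))
    # Stage B outputs latents in Stage A's much less compressed latent space
    stage_b = (batch_size, stage_a_channels,
               ceil(height / stage_a_factor), ceil(width / stage_a_factor))
    return stage_c, stage_b


if __name__ == "__main__":
    c_shape, b_shape = latent_shapes(1024, 1024, batch_size=4)
    print("Stage C latent:", c_shape)
    print("Stage B latent:", b_shape)
```

Under these assumptions a 1024x1024 image starts as a 24x24 Stage C latent, is expanded by Stage B into a 256x256 latent, and Stage A decodes that into pixels.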

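Splitting generation into stages makes Stable Cascade flexible and efficient: each stage can use a model of a different size, so users can pick the variants that fit their hardware, which lowers the hardware requirements.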
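To put rough numbers on the hardware argument: the project README lists two Stage C checkpoints (1B and 3.6B parameters) and two Stage B checkpoints (700M and 1.5B); treat these figures as assumptions to verify. The arithmetic below estimates only the memory needed to hold those weights in bfloat16 (2 bytes per parameter). It ignores Stage A, the text encoder and activations, so real memory use is higher.

```python
# Parameter counts assumed from the project README; verify before relying on them
STAGE_C = {"big": 3.6e9, "small": 1.0e9}
STAGE_B = {"big": 1.5e9, "small": 0.7e9}


def weights_gib(num_params, bytes_per_param=2):
    """Memory (GiB) needed just to store the weights; bfloat16 uses 2 bytes per parameter."""
    return num_params * bytes_per_param / 2**30


if __name__ == "__main__":
    for c_size in ("big", "small"):
        for b_size in ("big", "small"):
            total = weights_gib(STAGE_C[c_size] + STAGE_B[b_size])
            print(f"Stage C {c_size:5s} + Stage B {b_size:5s}: ~{total:.1f} GiB of bf16 weights")
```

Choosing the small variants for both stages cuts the weight footprint to roughly a third of the big-big combination, which is the practical meaning of "lower hardware requirements" here.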

GitHub repository: https://github.com/Stability-AI/StableCascade

I. Environment Setup

1. Python environment

Python 3.10 or later is recommended.

2. Installing the pip packages

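```shell
# Clone the repository and work from its root (requirements.txt lives there)
git clone https://github.com/Stability-AI/StableCascade.git
cd StableCascade

# PyTorch 2.3.0 built for CUDA 11.8
pip install torch==2.3.0+cu118 torchvision==0.18.0+cu118 torchaudio==2.3.0+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

# Project dependencies (via the Tsinghua PyPI mirror)
pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
pip install gradio accelerate -i https://pypi.tuna.tsinghua.edu.cn/simple

# diffusers branch with Würstchen v3 (Stable Cascade) support
pip install git+https://github.com/kashif/diffusers.git@wuerstchen-v3
```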
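Before downloading several gigabytes of weights, it can help to confirm that the environment matches the steps above. The sketch below (a hypothetical `environment_report` helper, not part of the repository) checks the Python version, whether the CUDA 11.8 build of PyTorch was installed, and whether the GPU supports bfloat16, which matters because the test code runs sampling under bfloat16 autocast.

```python
import sys

try:
    import torch
except ImportError:  # PyTorch not installed yet
    torch = None


def environment_report():
    """Collect the versions and capabilities the setup steps depend on."""
    has_torch = torch is not None
    cuda = has_torch and torch.cuda.is_available()
    return {
        "python": sys.version.split()[0],
        "python_ok": sys.version_info >= (3, 10),
        "torch": torch.__version__ if has_torch else None,
        # "11.8" for a +cu118 wheel, None for CPU-only builds
        "torch_cuda": torch.version.cuda if has_torch else None,
        "cuda_available": cuda,
        # only meaningful when a CUDA device is present
        "bf16_supported": cuda and torch.cuda.is_bf16_supported(),
    }


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

If `torch_cuda` prints `None` or `cuda_available` is `False`, the CPU-only PyTorch wheel was most likely installed instead of the `+cu118` one.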

3. Downloading the models

```shell
bash models/download_models.sh essential big-big small-small bfloat16
```
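A quick way to confirm that the download finished is to list the checkpoint files and their sizes. This sketch assumes the script saves `.safetensors` files somewhere under `models/`; the helper name `list_checkpoints` is hypothetical, and the code deliberately does not assume any particular file names.

```python
from pathlib import Path


def list_checkpoints(models_dir="models"):
    """Return sorted (file name, size in GiB) pairs for every .safetensors file under models_dir."""
    root = Path(models_dir)
    if not root.is_dir():
        return []
    return sorted((p.name, p.stat().st_size / 2**30) for p in root.rglob("*.safetensors"))


if __name__ == "__main__":
    found = list_checkpoints()
    if not found:
        print("No .safetensors files found; did the download script finish?")
    for name, gib in found:
        print(f"{name:40s} {gib:6.2f} GiB")
```

A file that is much smaller than its siblings, or a size of zero, usually points to an interrupted download.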

II. Functional Testing

1. Running from the command line

(1) Calling the model from Python

```python
import torch
from tqdm import tqdm

# Assuming calculate_latent_sizes, core, models, extras, core_b, models_b, extras_b, show_images are all properly imported and defined elsewhere

batch_size = 4
caption = "Cinematic photo of an anthropomorphic penguin sitting in a cafe reading a book and having a coffee"
height, width = 1024, 1024
stage_c_latent_shape, stage_b_latent_shape = calculate_latent_sizes(height, width, batch_size=batch_size)

# Stage C Parameters
extras.sampling_configs['cfg'] = 4
extras.sampling_configs['shift'] = 2
extras.sampling_configs['timesteps'] = 20
extras.sampling_configs['t_start'] = 1.0

# Stage B Parameters
extras_b.sampling_configs['cfg'] = 1.1
extras_b.sampling_configs['shift'] = 1
extras_b.sampling_configs['timesteps'] = 10
extras_b.sampling_configs['t_start'] = 1.0

# Prepare conditional and unconditional inputs for both stages
batch = {'captions': [caption] * batch_size}
conditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=False, eval_image_embeds=False)
unconditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=True, eval_image_embeds=False)
conditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=False)
unconditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=True)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Sampling
with torch.no_grad(), torch.cuda.amp.autocast(dtype=torch.bfloat16):
    torch.manual_seed(42)

    # Stage C Sampling
    sampling_c = extras.gdf.sample(
        models.generator, conditions, stage_c_latent_shape,
        unconditions, device=device, **extras.sampling_configs,
    )
    # Run every sampling step; sampled_c ends up holding the result of the last step
    for (sampled_c, _, _) in tqdm(sampling_c, total=extras.sampling_configs['timesteps']):
        sampled_c = sampled_c

    # Uncomment to preview stage C results
    # preview_c = models.previewer(sampled_c).float()
    # show_images(preview_c)

    # Update conditions for Stage B: the Stage C latent conditions the
    # conditional branch, an all-zero latent the unconditional one
    conditions_b['effnet'] = sampled_c
    unconditions_b['effnet'] = torch.zeros_like(sampled_c, device=device)

    # Stage B Sampling
    sampling_b = extras_b.gdf.sample(
        models_b.generator, conditions_b, stage_b_latent_shape,
        unconditions_b, device=device, **extras_b.sampling_configs
    )
    for (sampled_b, _, _) in tqdm(sampling_b, total=extras_b.sampling_configs['timesteps']):
        sampled_b = sampled_b

    # Decode the Stage B latent to pixels with Stage A
    sampled = models_b.stage_a.decode(sampled_b).float()

# Display final sampled images
show_images(sampled)
```

(To be continued...)
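Both stages in the script are sampled with a conditional and an unconditional set of inputs, and `cfg` sets the guidance strength (4 for Stage C, 1.1 for Stage B). The sketch below shows the standard classifier-free guidance combination those two inputs feed into. `cfg_combine` is a hypothetical helper, and whether `gdf.sample` applies exactly this formula internally is an assumption rather than something the original code shows.

```python
def cfg_combine(pred_cond, pred_uncond, cfg):
    """Standard classifier-free guidance (hypothetical helper, not from the repo).

    Works elementwise on plain floats or on torch tensors of matching shape.
    cfg = 1 returns the conditional prediction unchanged; cfg > 1 pushes the
    result further away from the unconditional prediction.
    """
    return pred_uncond + cfg * (pred_cond - pred_uncond)


if __name__ == "__main__":
    cond, uncond = 0.8, 0.2
    for scale in (1.0, 1.1, 4.0):  # 1.1 and 4 match the Stage B / Stage C settings
        print(scale, cfg_combine(cond, uncond, scale))
```

Read this way, Stage B's `cfg` of 1.1 leaves its output close to the purely conditional prediction, while Stage C's value of 4 follows the text prompt much more strongly.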

For more details, follow: 杰哥新技术
