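Table of Contents

- Project Background

- Code

- Imports

- Reading the Data

- Text Preprocessing

- Inspecting the Tokenizer with an Example

- Preparing the Datasets

- A Closer Look: the train_loader in Step [{i+1}/{len(train_loader)}]

- A Closer Look: the Original train_df Behind the train_loader in Step [{i+1}/{len(train_loader)}]

- Computing the Longest Text Length in the Dataset

- Defining the Model

- Hyperparameters

- A Closer Look at label_encoder.classes_

- Training the RNN Model

- Training the GRU Model

- Training the LSTM Model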

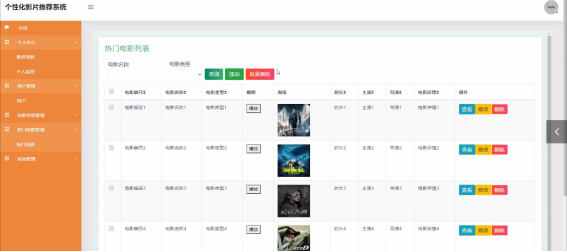

- Similar Projects

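Project Background

The goal of this project is to predict sentiment labels for a dataset of review comments. The data must be cleaned before training; that step was covered in an earlier post, NLP_情感分类_数据清洗 (data cleaning).

Another earlier post, NLP_情感分类_机器学习方案, tackled the same task with classical machine learning.

This post applies sequence models (RNN, GRU, and LSTM) to the cleaned dataset.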

Code

Imports

import warnings
warnings.filterwarnings('ignore')

import pandas as pd
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder
from torchtext.data.utils import get_tokenizer
from torchtext.vocab import build_vocab_from_iterator
from torch.nn.utils.rnn import pad_sequence
import torch.nn.functional as F

Reading the Data

df = pd.read_csv('data/sentiment_analysis_clean.csv')
df = df.dropna()  # drop rows with missing values

Text Preprocessing

tokenizer = get_tokenizer('basic_english')

def yield_tokens(data_iter):
    for text in data_iter:
        yield tokenizer(text)

# Register "<pad>" as a special token as well; if it is missing from the vocab,
# vocab['<pad>'] silently falls back to the "<unk>" index.
vocab = build_vocab_from_iterator(yield_tokens(df['text']), specials=["<unk>", "<pad>"])
vocab.set_default_index(vocab["<unk>"])

# Encode the labels as integers
label_encoder = LabelEncoder()
df['label'] = label_encoder.fit_transform(df['label'])

# Split into training and test sets
train_df, test_df = train_test_split(df, test_size=0.2, random_state=2024)

# Pipelines: text -> list of token indices, label -> int
def text_pipeline(x):
    return vocab(tokenizer(x))

def label_pipeline(x):
    return int(x)

Inspecting the Tokenizer with an Example

tokenizer('I like apple'), vocab(tokenizer('I like apple'))
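To make the two calls above more concrete, here is a minimal pure-Python sketch of the idea: lowercase and split the text, then map each token to an integer index, falling back to the `<unk>` index for unseen words. The names `simple_tokenize`, `toy_vocab`, and `lookup` are hypothetical, the vocabulary is made up, and the splitting rule is a simplified stand-in rather than torchtext's actual `basic_english` implementation.

```python
import re

def simple_tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())

# Hypothetical toy vocabulary; index 0 is reserved for unknown tokens.
toy_vocab = {"<unk>": 0, "i": 1, "like": 2}

def lookup(tokens, vocab, unk_index=0):
    """Map tokens to indices, using unk_index for out-of-vocabulary tokens."""
    return [vocab.get(tok, unk_index) for tok in tokens]

tokens = simple_tokenize("I like apple")
indices = lookup(tokens, toy_vocab)
print(tokens, indices)
```

In this toy setup "apple" is not in the vocabulary, so it maps to the `<unk>` index; the real vocab behaves the same way for words that never appeared in `df['text']`.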

Preparing the Datasets

class TextDataset(Dataset):
    def __init__(self, df):
        self.texts = df['text'].values
        self.labels = df['label'].values

    def __len__(self):
        return len(self.texts)

    def __getitem__(self, idx):
        text = torch.tensor(text_pipeline(self.texts[idx]), dtype=torch.long)
        label = torch.tensor(label_pipeline(self.labels[idx]), dtype=torch.long)
        return text, label

train_dataset = TextDataset(train_df)
test_dataset = TextDataset(test_df)

def collate_batch(batch):
    text_list, label_list = [], []
    for (text, label) in batch:
        text_list.append(text)
        label_list.append(label)
    # Pad every text in the batch to the length of the longest one: [batch, max_len]
    text_list = pad_sequence(text_list, batch_first=True, padding_value=vocab['<pad>'])
    # Labels are 0-dim tensors, so stack them into a single [batch] tensor
    label_list = torch.stack(label_list)
    return text_list, label_list

train_loader = DataLoader(train_dataset, batch_size=128, shuffle=True, collate_fn=collate_batch)
test_loader = DataLoader(test_dataset, batch_size=128, shuffle=False, collate_fn=collate_batch)
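The padding step inside `collate_batch` can be pictured with plain lists. The sketch below is a pure-Python illustration of what `pad_sequence(..., batch_first=True)` does to a batch of variable-length index sequences; `pad_batch` is a hypothetical helper and the token indices and padding value are made up.

```python
def pad_batch(sequences, pad_value):
    """Right-pad every sequence to the length of the longest one in the batch."""
    max_len = max(len(seq) for seq in sequences)
    return [list(seq) + [pad_value] * (max_len - len(seq)) for seq in sequences]

# Three texts of different lengths, already converted to token indices.
batch = [[5, 8, 2], [7], [4, 9]]
padded = pad_batch(batch, pad_value=1)
lengths = [len(seq) for seq in batch]

print(padded)   # every row now has the same length
print(lengths)  # the original lengths, before padding
```

Because padding is done per batch, the padded width varies from batch to batch and is bounded by the longest text in that batch, not by the longest text in the whole dataset.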

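A Closer Look: the train_loader in Step [{i+1}/{len(train_loader)}]

len(train_dataset)  # number of training samples; len(train_loader) is the number of batches

A Closer Look: the Original train_df Behind the train_loader in Step [{i+1}/{len(train_loader)}]

len(train_df)  # same as len(train_dataset): one sample per row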
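The relationship between these numbers is simple arithmetic: with the default `drop_last=False`, a `DataLoader` yields one batch per full group of `batch_size` samples plus one smaller batch for any remainder. The sketch below works this out with a hypothetical helper `num_batches` and a made-up sample count of 1000, since the real dataset size is not shown here.

```python
import math

def num_batches(num_samples, batch_size, drop_last=False):
    """Number of batches a loader yields for a dataset of num_samples items."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)

# Hypothetical dataset size; batch_size matches the loaders above.
print(num_batches(1000, 128))                  # 7 full batches + 1 partial batch
print(num_batches(1000, 128, drop_last=True))  # the partial batch is dropped
```

So the denominator printed in `Step [{i+1}/{len(train_loader)}]` is roughly `len(train_df) / 128`, rounded up.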

Computing the Longest Text Length in the Dataset

max_seq_len = 0
for text in df['text']:
    tokens = text_pipeline(text)
    if len(tokens) > max_seq_len:
        max_seq_len = len(tokens)

print(f'Max sequence length in the dataset: {max_seq_len}')

Defining the Model

class TextClassifier(nn.Module):
    def __init__(self, model_type, vocab_size, embed_dim, hidden_dim, output_dim, num_layers=1):
        super().__init__()
        self.model_type = model_type
        # '<pad>' must be a registered vocab token; otherwise this lookup returns the '<unk>' index
        self.embedding = nn.Embedding(vocab_size, embed_dim, padding_idx=vocab['<pad>'])
        if model_type == 'RNN':
            self.rnn = nn.RNN(embed_dim, hidden_dim, num_layers, batch_first=True)
        elif model_type == 'GRU':
            self.rnn = nn.GRU(embed_dim, hidden_dim, num_layers, batch_first=True)
        elif model_type == 'LSTM':
            self.rnn = nn.LSTM(embed_dim, hidden_dim, num_layers, batch_first=True)
        else:
            raise ValueError("model_type should be one of ['RNN', 'GRU', 'LSTM']")
        self.fc = nn.Linear(hidden_dim, output_dim)

    def forward(self, x):
        x = self.embedding(x)  # [batch, seq_len, embed_dim]
        # Zero initial hidden state: [num_layers, batch, hidden_dim]
        h0 = torch.zeros(self.rnn.num_layers, x.size(0), self.rnn.hidden_size, device=x.device)
        if self.model_type == 'LSTM':
            c0 = torch.zeros_like(h0)  # the LSTM also needs an initial cell state
            out, _ = self.rnn(x, (h0, c0))
        else:
            out, _ = self.rnn(x, h0)
        # out: [batch, seq_len, hidden_dim]
        # Use the last time step as the representation of the whole sequence.
        # Note: in a right-padded batch, the last step of a shorter text is a padding position.
        out = self.fc(out[:, -1, :])
        return out
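Reading `out[:, -1, :]` means that, in a right-padded batch, every text shorter than the longest one is represented by the output at a padding position. One possible refinement, shown here only as a sketch and not as part of the original model, is to pick the output at each sequence's last real token instead. The toy sizes, the padding index `pad_idx = 0`, and the variable names below are assumptions for illustration; the sketch also assumes that no real token uses the padding index and that every sequence has at least one token.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

pad_idx = 0  # assumed padding index for this toy example
batch = torch.tensor([[4, 7, 2, 5],
                      [3, 6, pad_idx, pad_idx]])   # [batch=2, seq_len=4]
lengths = (batch != pad_idx).sum(dim=1)             # real length of each row

embedding = nn.Embedding(num_embeddings=10, embedding_dim=8, padding_idx=pad_idx)
gru = nn.GRU(input_size=8, hidden_size=16, batch_first=True)

out, _ = gru(embedding(batch))                      # [2, 4, 16]

# For each row, index the time step of its last real token.
rows = torch.arange(out.size(0))
last_valid = out[rows, lengths - 1]                 # [2, 16]
print(last_valid.shape)
```

Using this in the classifier would require passing the lengths (or the padding mask) into `forward`. `torch.nn.utils.rnn.pack_padded_sequence` is another way to keep padding out of the recurrence.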

def train_model(model, train_loader, criterion, optimizer, num_epochs=2, device='cpu'):
    model.to(device)
    model.train()
    for epoch in range(num_epochs):
        running_loss = 0.0
        correct = 0
        total = 0
        for i, (texts, labels) in enumerate(train_loader):
            texts, labels = texts.to(device), labels.to(device)

            outputs = model(texts)
            loss = criterion(outputs, labels)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # Accumulate running statistics for this epoch
            running_loss += loss.item()
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

            if i % 10 == 0:  # log once every 10 batches
                accuracy = 100 * correct / total
                print(f'Epoch [{epoch+1}/{num_epochs}], Step [{i+1}/{len(train_loader)}], Loss: {loss.item():.4f}, Accuracy: {accuracy:.2f}%')

        epoch_loss = running_loss / len(train_loader)
        epoch_accuracy = 100 * correct / total
        print(f'Epoch [{epoch+1}/{num_epochs}], Average Loss: {epoch_loss:.4f}, Average Accuracy: {epoch_accuracy:.2f}%')

def evaluate_model(model, test_loader, device='cpu'):
    model.to(device)
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for texts, labels in test_loader:
            texts, labels = texts.to(device), labels.to(device)
            outputs = model(texts)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print(f'Accuracy: {100 * correct / total:.2f}%')

Hyperparameters

vocab_size = len(vocab)
embed_dim = 128
hidden_dim = 128
output_dim = len(label_encoder.classes_)  # one output logit per class
num_layers = 1
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

A Closer Look at label_encoder.classes_

len(label_encoder.classes_)  # number of distinct labels, used as output_dim
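`LabelEncoder` stores the sorted unique labels in `classes_` and encodes each label as its position in that array, which is why `len(label_encoder.classes_)` gives the number of output units. The pure-Python sketch below mimics that mapping; the label values are hypothetical, since the actual labels in the dataset are not shown here.

```python
# Hypothetical raw labels; the real dataset's label values may differ.
labels = ["positive", "negative", "neutral", "positive"]

classes = sorted(set(labels))                            # plays the role of classes_
label_to_index = {label: i for i, label in enumerate(classes)}
encoded = [label_to_index[label] for label in labels]    # plays the role of fit_transform

print(classes)
print(encoded)
print(len(classes))  # would be used as output_dim
```

Decoding a prediction back to its original label is the reverse lookup, `classes[index]`, which corresponds to `label_encoder.inverse_transform` in scikit-learn.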

Training the RNN Model

model_rnn = TextClassifier('RNN', vocab_size, embed_dim, hidden_dim, output_dim, num_layers)
criterion = nn.CrossEntropyLoss()
optimizer_rnn = optim.Adam(model_rnn.parameters(), lr=0.001)

train_model(model_rnn, train_loader, criterion, optimizer_rnn, num_epochs=2, device=device)
evaluate_model(model_rnn, test_loader, device=device)

Training the GRU Model

model_gru = TextClassifier('GRU', vocab_size, embed_dim, hidden_dim, output_dim, num_layers)
optimizer_gru = optim.Adam(model_gru.parameters(), lr=0.001)

train_model(model_gru, train_loader, criterion, optimizer_gru, num_epochs=2, device=device)
evaluate_model(model_gru, test_loader, device=device)

Training the LSTM Model

model_lstm = TextClassifier('LSTM', vocab_size, embed_dim, hidden_dim, output_dim, num_layers)
optimizer_lstm = optim.Adam(model_lstm.parameters(), lr=0.001)

train_model(model_lstm, train_loader, criterion, optimizer_lstm, num_epochs=2, device=device)
evaluate_model(model_lstm, test_loader, device=device)
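The three models share the same embedding and output layers and differ only in the recurrent layer, so it can help to compare how many parameters that layer has. The sketch below works this out by hand for a single-layer, unidirectional recurrent layer with PyTorch's default two bias vectors per gate block; `recurrent_params` and `GATES` are hypothetical names, and the sizes match `embed_dim = 128` and `hidden_dim = 128` used above.

```python
def recurrent_params(embed_dim, hidden_dim, gates):
    """Parameter count of one recurrent layer with `gates` gate blocks.

    Each block has an input weight matrix, a hidden weight matrix,
    and two bias vectors (input-side and hidden-side).
    """
    per_gate = hidden_dim * embed_dim + hidden_dim * hidden_dim + 2 * hidden_dim
    return gates * per_gate

# A plain RNN has 1 block, a GRU has 3 gates, an LSTM has 4.
GATES = {"RNN": 1, "GRU": 3, "LSTM": 4}

counts = {name: recurrent_params(128, 128, g) for name, g in GATES.items()}
for name, count in counts.items():
    print(f"{name}: {count} recurrent parameters")
```

With these sizes the GRU layer has three times and the LSTM layer four times the parameters of the plain RNN layer, which is one reason their training time per epoch can differ.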

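Similar Projects

阿里云-零基础入门NLP【基于机器学习的文本分类】 (Alibaba Cloud, NLP from scratch: text classification with machine learning)

阿里云-零基础入门NLP【基于深度学习的文本分类3-BERT】 (Alibaba Cloud, NLP from scratch: text classification with deep learning, part 3, BERT)

Both are also worth working through.

Reference material:

深度之眼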
