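LLMChain is a simple chain: it takes a prompt template, formats it with the user's input, and returns the LLM's response.

The prompt template is the key component. It is what makes such a minimal chain possible: the chain receives the user's input, uses it to fill in the prompt, and then sends that prompt to the LLM.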
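To make these three steps concrete, here is a plain-Python sketch of the same flow: fill the template, call the model, return the result. It is not LangChain's implementation. `fake_llm` and `run_chain` are hypothetical names, and `fake_llm` returns a canned string so the example runs without Ollama.

```python
from typing import Callable, Dict


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM: returns a canned answer."""
    return f"[model answer to: {prompt}]"


def run_chain(template: str, llm: Callable[[str], str], **inputs: str) -> Dict[str, str]:
    """Format the template with the user inputs, send it to the LLM,
    and return the inputs together with the model output under 'text'."""
    prompt = template.format(**inputs)  # 1. fill in the prompt template
    text = llm(prompt)                  # 2. call the model
    return {**inputs, "text": text}     # 3. return inputs plus output


result = run_chain(
    "What is a good name for a company that makes {product}?",
    fake_llm,
    product="colorful socks",
)
print(result)
```

Replacing `fake_llm` with a function that actually queries a model gives a working chain; the returned dict has the same `{'product': ..., 'text': ...}` shape that LLMChain produces when it is called directly.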

1. Configure Ollama

Before using LLMChain, you need to set up Ollama, a tool for running large language models locally. The available model names are listed at:

https://ollama.com/library

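Each model has its own strengths and typical use cases:

- Llama 2: a pretrained large language model released in three sizes: 7B, 13B and 70B. Llama 2 was trained on a larger pretraining corpus than its predecessor, and its context length grew from 2048 to 4096 tokens, so it can understand and generate longer text.

- OpenHermes: focuses on code generation and programming tasks, making it suitable for software development and scripting.

- Solar: a fine-tuned model based on Llama 2 and optimized for dialogue. Solar was refined through human evaluation of safety and helpfulness, with the goal of being an effective alternative to closed-source models.

- Qwen:7B: a model fine-tuned for Chinese, especially suited to processing Chinese text. Inference needs at least 8 GB of memory; 16 GB is recommended for smooth operation.

In short, each of these models has a different focus, so choose the one that fits your needs.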

To see which models have already been downloaded, run:

```shell
ollama list
```

```
NAME                 ID              SIZE      MODIFIED
llama2:latest        78e26419b446    3.8 GB    38 hours ago
llama2-chinese:13b   990f930d55c5    7.4 GB    2 days ago
qwen:7b              2091ee8c8d8f    4.5 GB    7 days ago
qwen:latest          d53d04290064    2.3 GB    2 days ago
```
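To use this listing from a script, the text output can be parsed by splitting on whitespace. The sketch below assumes the column layout shown above (NAME, ID, SIZE as a number plus a unit, MODIFIED) and uses two of its rows as sample input. `parse_ollama_list` is a hypothetical helper. The CLI's text layout may change between versions, so Ollama's HTTP API (`/api/tags`) is likely the more robust option for programmatic use.

```python
from typing import Dict, List

# Sample rows copied from the `ollama list` output above
SAMPLE = """NAME                 ID              SIZE      MODIFIED
llama2:latest        78e26419b446    3.8 GB    38 hours ago
qwen:7b              2091ee8c8d8f    4.5 GB    7 days ago
"""


def parse_ollama_list(text: str) -> List[Dict[str, str]]:
    """Parse `ollama list` output into one dict per model.

    Assumes whitespace-separated columns in the order
    NAME, ID, SIZE (number + unit), MODIFIED (free text).
    """
    models = []
    lines = text.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        # maxsplit=4 keeps a MODIFIED value such as "38 hours ago" in one piece
        name, model_id, size, unit, modified = line.split(maxsplit=4)
        models.append({
            "name": name,
            "id": model_id,
            "size": f"{size} {unit}",
            "modified": modified.strip(),
        })
    return models


models = parse_ollama_list(SAMPLE)
for m in models:
    print(m["name"], m["size"])
```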

1.1 Installation

Download and install Ollama from the official website: https://ollama.com/

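1.2 Download a model

Take the Tongyi Qianwen (Qwen) model as an example. The general form of the command is:

```shell
ollama run <model-name>
```

For Qwen 7B:

```shell
ollama run qwen:7b
```

![Downloading qwen](qwen下载.png)

![Using qwen](qwen使用.png)

The first run takes longer because the model has to be downloaded; later runs start directly without downloading it again.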

2. LangChain

2.1 Calling LLMChain

Goal: create an LLM chain. Suppose we want the model to come up with a name for a company.

English version

```python
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain
from langchain_community.llms import Ollama

prompt_template = "What is a good name for a company that makes {product}?"

# Use the local qwen:7b model served by Ollama
ollama_llm = Ollama(model="qwen:7b")

llm_chain = LLMChain(
    llm=ollama_llm,
    prompt=PromptTemplate.from_template(prompt_template),
)

# Calling the chain returns a dict with the input and the output under "text"
print(llm_chain("colorful socks"))
```

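Chinese version (the prompt asks the model to name a company that makes `{product}` and to reply with the company name only):

```python
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain
from langchain_community.llms import Ollama

# "Please name a company that makes {product}; reply with the company name only"
prompt_template = "请给制作 {product} 的公司起个名字,只回答公司名即可"

ollama_llm = Ollama(model="qwen:7b")

llm_chain = LLMChain(
    llm=ollama_llm,
    prompt=PromptTemplate.from_template(prompt_template),
)

# Calling the chain directly returns the input and the model output together
print(llm_chain("袜子"))  # 袜子 = "socks"
# Output: {'product': '袜子', 'text': '"棉语袜业公司"\n\n\n'}

# predict takes the template variables as keyword arguments and returns only the text
print(llm_chain.predict(product="袜子"))
# Output: 棉语袜业公司

# run also works and, like predict, returns only the text
# print(llm_chain.run("袜子"))
```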

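The calling styles differ in what they return:

- `llm_chain(...)`: returns a dict that combines the input `{product}` with the model output (under the `text` key)

- `llm_chain.run(...)` and `llm_chain.predict(...)`: return only the model's output string; `predict` expects the template variables as keyword arguments, e.g. `predict(product="袜子")`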
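The difference comes down to return types: in LangChain's `Chain` API, calling the chain returns its inputs merged with its outputs, while `run` and `predict` return just the output string. The hypothetical `MiniChain` class below mimics only those return types, using a stub model so it runs without Ollama; it is a simplified illustration, not LangChain's code.

```python
from typing import Any, Callable, Dict


class MiniChain:
    """Hypothetical, simplified model of LLMChain's three calling styles.

    Not LangChain's implementation: it only mirrors what each style returns.
    """

    def __init__(self, template: str, input_key: str, llm: Callable[[str], str]):
        self.template = template
        self.input_key = input_key
        self.llm = llm

    def __call__(self, value: str) -> Dict[str, Any]:
        # Like chain(...): the input plus the output under "text"
        text = self.llm(self.template.format(**{self.input_key: value}))
        return {self.input_key: value, "text": text}

    def run(self, value: str) -> str:
        # Like chain.run(...): only the output string
        return self(value)["text"]

    def predict(self, **kwargs: str) -> str:
        # Like chain.predict(product=...): keyword arguments, output string only
        return self(kwargs[self.input_key])["text"]


chain = MiniChain("Name a company that makes {product}.", "product", lambda p: "SockCo")
print(chain("socks"))                  # {'product': 'socks', 'text': 'SockCo'}
print(chain.run("socks"))              # SockCo
print(chain.predict(product="socks"))  # SockCo
```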

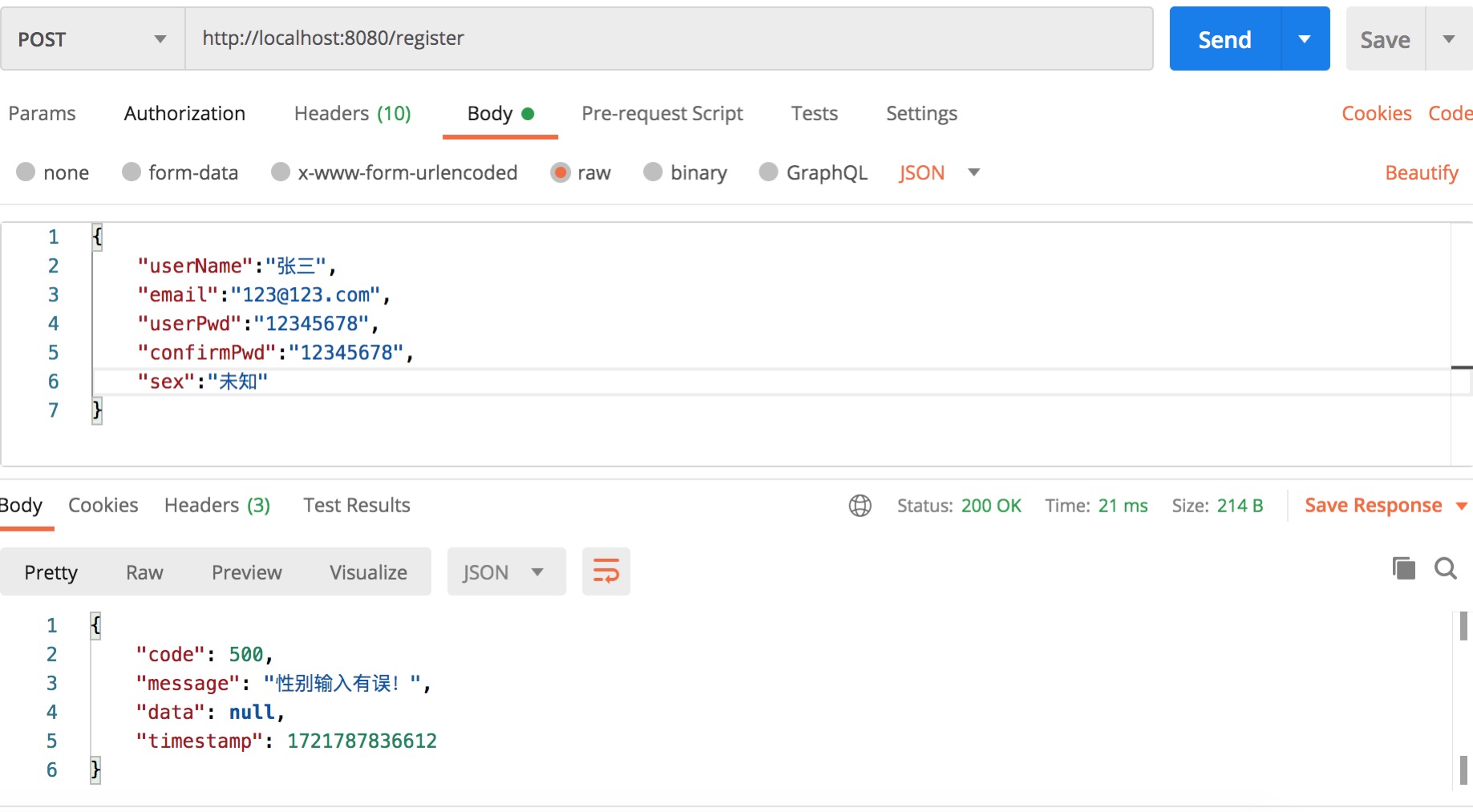

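2.2 Calling the model directly

Call the llama2 model directly, without a chain:

```python
from langchain_community.llms import Ollama

llm = Ollama(model="llama2")

# invoke sends the prompt to the model and returns the generated text
response = llm.invoke("Who are you")

print(response)
```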

Output:

```
I'm LLaMA, an AI assistant developed by Meta AI that can understand and respond to human input in a conversational manner. I'm here to help you with any questions or topics you'd like to discuss!

Is there anything specific you'd like to talk about?
```
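The LangChain `Ollama` wrapper talks to the local Ollama server over HTTP. A roughly equivalent direct call uses the server's `/api/generate` endpoint with `"stream": false`, in which case the generated text comes back in the `response` field. The sketch below uses only the standard library and assumes the default address `http://localhost:11434`; `build_generate_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # A non-streaming reply carries the full text in "response"
    return body["response"]
```

With the server running and llama2 downloaded, `generate("llama2", "Who are you")` should return text similar to the output above; the exact wording varies between runs.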

3. Running the LLM locally

As mentioned earlier, `ollama run llama2` gives you direct access to the model in an interactive session:

```
>>> hello
Hello! It's nice to meet you. Is there something I can help you with or would you like to chat?

>>> tell me a joke
Sure, here's one:

Why don't scientists trust atoms?
Because they make up everything!

I hope that brought a smile to your face 😄. Is there anything else I can assist you with?

>>> Send a message (/? for help)
```

LangChain integration

The same model can also be used from local Python code through LangChain:

```python
from langchain_community.llms import Ollama
from langchain.callbacks.manager import CallbackManager
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

# Address of the local Ollama server (11434 is Ollama's default port)
ollama_host = "localhost"
ollama_port = 11434
ollama_model = "llama2"

if __name__ == "__main__":
    llm = Ollama(
        base_url=f"http://{ollama_host}:{ollama_port}",
        model=ollama_model,
        # Print tokens to stdout as they are generated
        callback_manager=CallbackManager([StreamingStdOutCallbackHandler()]),
    )

    # Simple REPL: read a query, then stream the answer to the terminal
    while True:
        query = input("\n\n>>>Enter a query:")
        llm.invoke(query)
```

When run, the session looks like this:

```
>>>Enter a query:hello
Hello! It's nice to meet you. Is there something I can help you with or would you like to chat?

>>>Enter a query:tell me a joke
Sure! Here's one:

Why don't scientists trust atoms?
Because they make up everything!

I hope that made you smile! Do you want to hear another one?

>>>Enter a query:
```
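The answer appears token by token because Ollama can stream its reply: with streaming enabled (the default for `/api/generate`), the server returns one JSON object per line, each carrying a text fragment in `response`, until an object with `"done": true`. A streaming callback such as `StreamingStdOutCallbackHandler` prints fragments as they arrive. The sketch below assembles such a stream; `collect_stream` is a hypothetical helper and the sample lines are hand-written for illustration, not captured from a real server.

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[str], echo: bool = True) -> str:
    """Concatenate the 'response' fragments of an Ollama-style NDJSON stream.

    Each line is one JSON object; the stream ends with an object whose 'done' is true.
    """
    parts = []
    for line in lines:
        if not line.strip():
            continue  # ignore blank lines
        chunk = json.loads(line)
        fragment = chunk.get("response", "")
        if echo:
            print(fragment, end="", flush=True)  # show each fragment as it arrives
        parts.append(fragment)
        if chunk.get("done"):
            break
    return "".join(parts)


# Hand-written sample stream (illustrative only)
sample = [
    '{"model": "llama2", "response": "Hello", "done": false}',
    '{"model": "llama2", "response": "! Nice", "done": false}',
    '{"model": "llama2", "response": " to meet you.", "done": false}',
    '{"model": "llama2", "response": "", "done": true}',
]
text = collect_stream(sample)
```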

4. Customizing the LLM

4.1 Modelfile

A Modelfile lets you customize a model locally:

1. Create a Modelfile (saved here as `llama2-translator.Modelfile`):

```
FROM llama2

SYSTEM """
You are responsible for translating user's query to English. You should only respond with the following content:
1. The translated content.
2. Introduction to some key concepts or words in the translated content, to help users understand the context.
"""
```

2. Create the model:

```shell
ollama create llama-translator -f ./llama2-translator.Modelfile
```

After it has been created, `ollama list` shows the new model:

```
llama-translator:latest    40f41df44b0a    3.8 GB    53 minutes ago
```

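3. Run the model:

```shell
ollama run llama-translator
```

The output is shown below. Note that the pinyin in the model's answer is not reliable: 心情 is read xīn qíng and 不错 is read bù cuò.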

```
>>> 今天心情不错
Translation: "Today's mood is good."

Introduction to some key concepts or words in the translated content:
* 心情 (xīn jìng) - mood, state of mind
* 不错 (bù hǎo) - good, fine, well

So, "今天心情不错" means "Today's mood is good." It is a simple sentence that expresses a positive emotional state. The word "心情" is a key term in Chinese that refers to one's emotions or mood, and the word "不错" is an adverb that can be translated as "good," "fine," or "well."

>>> 我爱你中国
Translation: "I love you China."

Introduction to some key concepts or words in the translated content:
* 爱 (ài) - love, loving
* 中国 (zhōng guó) - China, People's Republic of China

So, "我爱你中国" means "I love you China." It is a simple sentence that expresses affection or fondness towards a country. The word "爱" is a key term in Chinese that refers to romantic love, while the word "中国" is a geographical term that refers to the People's Republic of China.

>>> Send a message (/? for help)
```
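Because a Modelfile is plain text, it can also be generated from a script, which is convenient when you maintain several variants of a system prompt. The sketch below renders only the `FROM`, `PARAMETER` and `SYSTEM` instructions; `make_modelfile` is a hypothetical helper, the system prompt is shortened from the one in step 1, and the `temperature` value is an arbitrary example rather than a recommendation.

```python
from typing import Dict, Optional


def make_modelfile(base: str, system: str,
                   parameters: Optional[Dict[str, str]] = None) -> str:
    """Render a minimal Modelfile: a FROM line, optional PARAMETER lines,
    and a SYSTEM prompt wrapped in triple quotes."""
    lines = [f"FROM {base}"]
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    lines.append(f'SYSTEM """\n{system.strip()}\n"""')
    return "\n".join(lines) + "\n"


# Shortened version of the translator prompt from step 1
system_prompt = "You translate the user's query into English."
content = make_modelfile("llama2", system_prompt, {"temperature": "0.3"})
print(content)
```

Writing `content` to `llama2-translator.Modelfile` and running the `ollama create` command from step 2 would then build the model.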

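4.2 Customizing the system prompt

Anyone who has used ChatGPT knows how much the system prompt matters: a good system prompt can effectively steer a large model into the behavior you need. Ollama offers several ways to customize it.

First, many Ollama front ends already provide a setting for the system prompt, and using it directly is the simplest option. Under the hood, these front ends usually talk to the Ollama server through its API, so we can also call the API ourselves and pass the system prompt in the request: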

```shell
curl http://localhost:11434/api/chat -d '{
  "model": "llama2-chinese:13b",
  "messages": [
    {
      "role": "system",
      "content": "以海盗的口吻简单作答。"
    },
    {
      "role": "user",
      "content": "天空为什么是蓝色的?"
    }
  ],
  "stream": false
}'
```

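The message whose `role` is `system` is the system prompt (here: "Answer briefly, in the voice of a pirate"; the user asks "Why is the sky blue?"). It serves much the same purpose as the `SYSTEM` instruction in a Modelfile.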
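The same request can be built from Python. The sketch below mirrors the curl body above: the `system` message carries the system prompt and the `user` message carries the question. It uses only the standard library; `build_chat_payload` and `chat` are hypothetical helper names, and `chat` needs a running Ollama server at the assumed default address.

```python
import json
import urllib.request
from typing import Dict, List

# Assumption: Ollama runs locally on its default port
OLLAMA_URL = "http://localhost:11434"


def build_chat_payload(model: str, system: str, user: str) -> Dict:
    """Build an /api/chat body; the 'system' message plays the role of SYSTEM in a Modelfile."""
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
    return {"model": model, "messages": messages, "stream": False}


def chat(payload: Dict) -> str:
    """POST the payload to /api/chat and return the assistant's reply text."""
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )  # supplying data makes this a POST request
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))["message"]["content"]


payload = build_chat_payload(
    "llama2-chinese:13b",
    "以海盗的口吻简单作答。",  # "Answer briefly, in the voice of a pirate."
    "天空为什么是蓝色的?",      # "Why is the sky blue?"
)
```

With the server running, `chat(payload)` returns the `message.content` field of the response, i.e. the assistant's reply.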

The output is:

```json
{
  "model": "llama2-chinese:13b",
  "created_at": "2024-04-29T01:32:08.448614864Z",
  "message": {
    "role": "assistant",
    "content": "好了,这个问题太简单了。蓝色是由于我们的视觉系统处理光线而有所改变造成的。在水平方向看到的天空大多为天际辐射,其中包括大量的紫外线和可见光线。这些光线会被散射,而且被大气层上的大量分子所吸收,进而变成蓝色或其他相似的颜色。\n"
  },
  "done": true,
  "total_duration": 31927183897,
  "load_duration": 522246,
  "prompt_eval_duration": 224460000,
  "eval_count": 149,
  "eval_duration": 31700862000
}
```
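The `*_duration` fields are reported in nanoseconds, so the numbers above can be turned into a generation speed by dividing `eval_count` (tokens generated) by `eval_duration`. For this response that is 149 tokens in about 31.7 s, roughly 4.7 tokens per second. `tokens_per_second` is a hypothetical helper name; the values are copied from the output above.

```python
# Fields copied from the response above; Ollama reports durations in nanoseconds
stats = {
    "total_duration": 31927183897,
    "load_duration": 522246,
    "prompt_eval_duration": 224460000,
    "eval_count": 149,
    "eval_duration": 31700862000,
}

NS_PER_S = 1_000_000_000


def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Generation speed: tokens generated divided by generation time in seconds."""
    return eval_count / eval_duration_ns * NS_PER_S


tps = tokens_per_second(stats["eval_count"], stats["eval_duration"])
total_s = stats["total_duration"] / NS_PER_S
print(f"{tps:.2f} tokens/s, total {total_s:.1f} s")  # 4.70 tokens/s, total 31.9 s
```

Almost all of the 31.9 s total went into generating the answer; loading the model and evaluating the prompt took well under a second here.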