Both the dataset and the code come from Kaggle: https://www.kaggle.com/datasets/himanshunakrani/iris-dataset?resource=download

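🚀 Unveiling the Power of Linear Classifiers: An Exploration of Linear Discriminant Analysis

Welcome to the world of linear classifiers and the fascinating field of linear discriminant analysis (LDA)! 🌟 In this notebook we set out on an exciting adventure to uncover the inner workings of these powerful algorithms, which form the backbone of many machine learning applications.

Linear classifiers are fundamental tools in machine learning and a cornerstone of tasks such as binary and multi-class classification. But have you ever wondered what goes on behind these seemingly simple yet highly effective models? 🧐 That is where linear discriminant analysis comes in.

Join me in digging into the principles behind linear classifiers and the magic behind linear discriminant analysis. 🎩 Get ready to unravel the mystery, gain insight, and deepen your understanding of these fundamental machine learning techniques!

So, without further ado, let's uncover the secrets of linear classifiers together! 💡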

Linear Classifiers

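At its core, a simple linear classifier aims to find a decision boundary that separates the different classes in feature space. Mathematically, for binary classification this boundary is a hyperplane: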

$$w_0 + w_1x_1 + w_2x_2 + \dots + w_mx_m = 0$$

where $(w_0, w_1, \dots, w_m)$ are called the weights ($w_0$ is the bias, or intercept) and $(x_1, x_2, \dots, x_m)$ are called the features.
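A minimal sketch of how the hyperplane acts as a decision rule: evaluate the left-hand side for a sample and assign the class from its sign. The helper names `linear_decision` and `classify`, the example weights, and the convention that a value of exactly 0 goes to class 1 are illustrative assumptions (the last one matches the `>= 0` rule used by the classifier later in this notebook).

```python
import numpy as np

def linear_decision(x, w0, w):
    """Evaluate w0 + w1*x1 + ... + wm*xm for a single sample x."""
    return w0 + np.dot(w, x)

def classify(x, w0, w):
    """Class 1 on the non-negative side of the hyperplane, class 0 otherwise."""
    return 1 if linear_decision(x, w0, w) >= 0 else 0

# Hypothetical 2-feature example: the boundary is the line x1 + x2 - 3 = 0
w0, w = -3.0, np.array([1.0, 1.0])
print(classify(np.array([2.5, 2.0]), w0, w))  # 4.5 - 3 = 1.5 >= 0 -> 1
print(classify(np.array([0.5, 1.0]), w0, w))  # 1.5 - 3 = -1.5 < 0 -> 0
```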

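Along the way we will look into the mathematics of linear classifiers, touching on optimization, loss functions and gradient descent. 🎓 Let's discover the simplicity and power of linear classifiers together! 💫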

Understanding Linear Discriminant Analysis (LDA)

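Linear discriminant analysis (LDA) is a powerful technique for both dimensionality reduction and classification. 📊 Unlike a simple linear classifier, LDA takes the distribution of the data points in each class into account when looking for the optimal decision boundary.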

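At its core, LDA seeks to maximize the separation between classes while minimizing the variance within each class. Mathematically, LDA looks for a projection that maximizes the between-class scatter and minimizes the within-class scatter.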

Given $N$ data points with $m$ features belonging to $K$ classes, LDA computes the optimal projection matrix $W$ by maximizing the following criterion:

$$J(W) = \frac{\text{Tr}(W^T S_B W)}{\text{Tr}(W^T S_W W)}$$

where $S_B$ is the between-class scatter matrix and $S_W$ is the within-class scatter matrix.
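The text does not spell out the scatter matrices, so the sketch below assumes the usual definitions, $S_W = \sum_k \sum_{x \in C_k} (x - \mu_k)(x - \mu_k)^T$ and $S_B = \sum_k N_k (\mu_k - \mu)(\mu_k - \mu)^T$, where $\mu_k$ and $N_k$ are the mean and size of class $k$ and $\mu$ is the overall mean. It computes both on synthetic data, evaluates $J(W)$ for two candidate directions, and checks the standard identity that the total scatter equals $S_W + S_B$. The function names are hypothetical.

```python
import numpy as np

def scatter_matrices(X, y):
    """Return (S_W, S_B) for data X of shape (N, m) and labels y of shape (N,)."""
    overall_mean = X.mean(axis=0)
    m = X.shape[1]
    S_W = np.zeros((m, m))
    S_B = np.zeros((m, m))
    for k in np.unique(y):
        Xk = X[y == k]
        mean_k = Xk.mean(axis=0)
        centered = Xk - mean_k
        S_W += centered.T @ centered                   # within-class scatter
        diff = (mean_k - overall_mean).reshape(-1, 1)
        S_B += len(Xk) * (diff @ diff.T)               # between-class scatter, weighted by class size
    return S_W, S_B

def fisher_criterion(W, S_W, S_B):
    """J(W) = Tr(W^T S_B W) / Tr(W^T S_W W)."""
    return np.trace(W.T @ S_B @ W) / np.trace(W.T @ S_W @ W)

# Synthetic data: three Gaussian blobs in 2-D (illustrative only)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(loc, 1.0, size=(50, 2)) for loc in ([0, 0], [4, 0], [0, 4])])
y = np.repeat([0, 1, 2], 50)

S_W, S_B = scatter_matrices(X, y)
centered_all = X - X.mean(axis=0)
S_T = centered_all.T @ centered_all
print(np.allclose(S_T, S_W + S_B))  # True: total scatter = S_W + S_B

for name, W in [("x-axis", np.array([[1.0], [0.0]])),
                ("diagonal", np.array([[1.0], [1.0]]) / np.sqrt(2))]:
    print(name, round(fisher_criterion(W, S_W, S_B), 3))
```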

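The optimal projection matrix $W$ can be obtained by solving the generalized eigenvalue problem:

$$S_B W = \lambda S_W W$$

The columns of $W$ are the eigenvectors with the largest eigenvalues $\lambda$. Because $S_B$ has rank at most $K-1$, at most $K-1$ of these eigenvalues are nonzero, so LDA yields at most $K-1$ useful directions.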
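One way to solve the generalized eigenvalue problem numerically is `scipy.linalg.eigh(a, b)`, which handles a symmetric `a` with a positive-definite `b` and returns the eigenvalues in ascending order. The sketch below (synthetic two-class data, hypothetical helper `lda_projection`) keeps the top eigenvector and checks that, with two classes, it points along $S_W^{-1}(\mu_1 - \mu_0)$, the same direction the `FishersLDA` class below computes directly. It assumes $S_W$ is invertible.

```python
import numpy as np
from scipy.linalg import eigh

def lda_projection(S_W, S_B, n_components):
    """Solve S_B w = lambda S_W w and keep the eigenvectors with the largest eigenvalues."""
    eigvals, eigvecs = eigh(S_B, S_W)        # generalized problem; eigenvalues ascending
    order = np.argsort(eigvals)[::-1]        # largest eigenvalue first
    return eigvals[order][:n_components], eigvecs[:, order[:n_components]]

# Two-class synthetic example (illustrative only)
rng = np.random.default_rng(1)
X0 = rng.normal([0.0, 0.0], [1.0, 2.0], size=(100, 2))
X1 = rng.normal([3.0, 1.0], [1.0, 2.0], size=(100, 2))
X = np.vstack([X0, X1])
mean0, mean1, mean_all = X0.mean(axis=0), X1.mean(axis=0), X.mean(axis=0)

S_W = (X0 - mean0).T @ (X0 - mean0) + (X1 - mean1).T @ (X1 - mean1)
S_B = sum(len(Xk) * np.outer(mk - mean_all, mk - mean_all)
          for Xk, mk in [(X0, mean0), (X1, mean1)])

vals, W = lda_projection(S_W, S_B, n_components=1)

# With two classes the leading eigenvector is parallel to S_W^{-1}(mean1 - mean0)
fisher_dir = np.linalg.solve(S_W, mean1 - mean0)
cosine = abs(W[:, 0] @ fisher_dir) / (np.linalg.norm(W[:, 0]) * np.linalg.norm(fisher_dir))
print(round(cosine, 6))
```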

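Once the projection matrix $W$ has been computed, LDA projects the original data onto this lower-dimensional subspace. Classification can then be carried out in the reduced space with a simple linear classifier.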
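For a quick look at the projection step itself, scikit-learn's `LinearDiscriminantAnalysis` exposes it as `transform`. The sketch below uses scikit-learn's bundled copy of the iris data with all three classes, so $K - 1 = 2$ is the maximum number of components. It only illustrates the shapes involved; `score` here reports training accuracy, not a held-out estimate.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)                # 150 samples, 4 features, 3 classes

lda = LinearDiscriminantAnalysis(n_components=2)  # at most K - 1 = 2 components
Z = lda.fit(X, y).transform(X)                    # project onto the discriminant subspace
print(X.shape, "->", Z.shape)                     # (150, 4) -> (150, 2)

# predict()/score() apply the fitted LDA decision rule; this is training accuracy
print(lda.score(X, y))
```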

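By exploiting the statistical properties of the data, LDA offers a robust approach to both classification and dimensionality reduction. 🎓 Let's explore the intricacies of LDA together and unlock its potential!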

Import the required dependencies

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

from sklearn.linear_model import Lasso

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

from sklearn.linear_model import LogisticRegression

from sklearn.preprocessing import StandardScaler

import warnings

warnings.filterwarnings('ignore')

Load the dataset

df = pd.read_csv('./iris.csv')

# Check the number of rows and columns

df.shape

(150, 5)

df.head()

| | sepal_length | sepal_width | petal_length | petal_width | species |
|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

# Inspect the class labels

df['species'].unique()

array(['setosa', 'versicolor', 'virginica'], dtype=object)

# Count the null values in each column

df.isnull().sum()

sepal_length 0

sepal_width 0

petal_length 0

petal_width 0

species 0

dtype: int64

Data preprocessing

# For simplicity, keep only the rows whose species is setosa or versicolor

df = df[df['species'].isin(['setosa', 'versicolor'])].copy()

# Encode versicolor as 0 and setosa as 1

df['species'] = df['species'].map({'versicolor': 0, 'setosa': 1})

# Split the data into the feature matrix X and the target y

columns_y = ['species']

X = df.drop(columns=columns_y, inplace=False)

y = df['species']

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

Basic linear classifier

class LinearClassifier:

    def __init__(self):
        self.w = None
        self.bias = None

    def fit(self, X, y):
        class_0 = X[y == 0]
        class_1 = X[y == 1]

        # Compute each class mean (two equivalent ways)
        centroid_0 = np.mean(class_0, axis=0)
        centroid_1 = np.sum(class_1, axis=0) / len(class_1)

        # Normal vector w: the difference between the two class centroids
        self.w = centroid_1 - centroid_0

        # Intercept w0
        # Note: plain (unsquared) norms, so this boundary does not pass through
        # the midpoint of the two centroids
        dist_to_centroid_0 = np.linalg.norm(centroid_0)
        dist_to_centroid_1 = np.linalg.norm(centroid_1)
        self.bias = 0.5 * (dist_to_centroid_0 - dist_to_centroid_1)

    def predict(self, X):
        # Predict class 1 where the linear decision function is non-negative
        predictions = np.dot(X, self.w) + self.bias
        return np.where(predictions >= 0, 1, 0)

classifier = LinearClassifier()

classifier.fit(X_train, y_train)

y_pred = classifier.predict(X_test)

accuracy = np.mean(y_pred == y_test)

print("Accuracy:", accuracy)

Accuracy: 0.44
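The 0.44 above equals 11/25, the share of versicolor (class 0) in this test split: the LDA section below reuses the same rows, `test_size` and `random_state`, and its classification report shows 11 versicolor samples out of 25. That is exactly the accuracy of predicting class 0 for every test point, which is consistent with the intercept using plain rather than squared norms. For the boundary to pass through the midpoint of the centroids $c_0$ and $c_1$, the intercept has to satisfy $w \cdot \frac{c_0 + c_1}{2} + b = 0$ with $w = c_1 - c_0$, i.e. $b = \frac{1}{2}(\lVert c_0 \rVert^2 - \lVert c_1 \rVert^2)$. A minimal sketch of that variant follows, on synthetic data and with a hypothetical `CentroidClassifier` name; it was not run on iris here, so no iris accuracy is claimed for it.

```python
import numpy as np

class CentroidClassifier:
    """Minimum-distance-to-centroid classifier for labels 0/1."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        c0 = X[y == 0].mean(axis=0)
        c1 = X[y == 1].mean(axis=0)
        self.w = c1 - c0
        # Squared norms put the boundary through the midpoint (c0 + c1) / 2
        self.bias = 0.5 * (np.dot(c0, c0) - np.dot(c1, c1))
        return self

    def decision_function(self, X):
        return np.asarray(X, dtype=float) @ self.w + self.bias

    def predict(self, X):
        return (self.decision_function(X) >= 0).astype(int)

# Synthetic, well-separated blobs (illustrative only)
rng = np.random.default_rng(2)
X = np.vstack([rng.normal([6.0, 3.0], 0.2, size=(50, 2)),   # class 0
               rng.normal([5.0, 3.5], 0.2, size=(50, 2))])  # class 1
y = np.repeat([0, 1], 50)

clf = CentroidClassifier().fit(X, y)
midpoint = (X[y == 0].mean(axis=0) + X[y == 1].mean(axis=0)) / 2
print(clf.decision_function(midpoint[None, :]))  # ~0: the midpoint lies on the boundary
print(np.mean(clf.predict(X) == y))
```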

LDA

df = pd.read_csv('./iris.csv')

df = df[df['species'].isin(['setosa', 'versicolor'])].copy()

# Encode versicolor as -1 and setosa as 1

df['species'] = df['species'].map({'versicolor': -1, 'setosa': 1})

columns_to_drop = ['species']

X = df.drop(columns=columns_to_drop, inplace=False)

y = df['species']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

y_test.unique()

array([-1, 1])

class FishersLDA:

    def __init__(self):
        self.w = None
        self.b = None

    def fit(self, X, y):
        # Split the data by class
        X0 = X[y == -1]
        X1 = X[y == 1]

        # Compute the mean of each class
        mean0 = np.mean(X0, axis=0)
        mean1 = np.mean(X1, axis=0)

        # Compute the within-class scatter matrix
        Sw = np.dot((X0 - mean0).T, (X0 - mean0)) + np.dot((X1 - mean1).T, (X1 - mean1))
        Sw_inv = np.linalg.inv(Sw)

        # Fisher's linear discriminant direction: w = Sw^{-1} (mean1 - mean0)
        self.w = np.dot(Sw_inv, mean1 - mean0)

        # Threshold: the projection of the midpoint of the two class means
        self.b = -0.5 * (np.dot(mean0, np.dot(Sw_inv, mean0)) - np.dot(mean1, np.dot(Sw_inv, mean1)))

    def predict(self, X):
        # Classify by the sign of the discriminant function
        f_x = self.get_z_projection(X)
        return np.sign(f_x).astype(int)

    def get_z_projection(self, X):
        if self.w is None or self.b is None:
            raise RuntimeError("Model not trained yet!")
        # Evaluate the discriminant function f(x) = w·x - b
        return np.dot(X, self.w) - self.b

# Instantiate and fit the Fisher LDA model

flda = FishersLDA()

flda.fit(X_train.values, y_train)

# Predict on the test set

y_pred = flda.predict(X_test.values)

y_pred

array([-1, -1, -1, 1, 1, 1, 1, -1, 1, 1, 1, 1, -1, 1, -1, 1, -1,

-1, 1, 1, -1, -1, 1, 1, -1])

# Compute the accuracy

accuracy = accuracy_score(y_test, y_pred)

print("Accuracy:", accuracy)

# Other classification metrics

print("Classification Report:")

print(classification_report(y_test, y_pred))

Accuracy: 1.0

Classification Report:

precision recall f1-score support

-1 1.00 1.00 1.00 11

1 1.00 1.00 1.00 14

accuracy 1.00 25

macro avg 1.00 1.00 1.00 25

weighted avg 1.00 1.00 1.00 25
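As a cross-check of `FishersLDA`, the direction $w = S_W^{-1}(\mu_1 - \mu_0)$ should be parallel to the `coef_` of scikit-learn's `LinearDiscriminantAnalysis` with `solver="lsqr"`, which solves against the pooled within-class covariance (a rescaled $S_W$). The sketch below uses scikit-learn's bundled copy of iris rather than `iris.csv` and fits on all 100 setosa/versicolor rows rather than the training split, so it checks the direction only, not the accuracy above.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, target = load_iris(return_X_y=True)
X, target = X[target < 2], target[target < 2]   # keep setosa (0) and versicolor (1)
y = np.where(target == 0, 1, -1)                # same coding as above: setosa = 1, versicolor = -1

# Fisher direction, computed as in FishersLDA.fit
X0, X1 = X[y == -1], X[y == 1]
mean0, mean1 = X0.mean(axis=0), X1.mean(axis=0)
Sw = (X0 - mean0).T @ (X0 - mean0) + (X1 - mean1).T @ (X1 - mean1)
w = np.linalg.solve(Sw, mean1 - mean0)

# scikit-learn's weight vector for the positive class (classes_[1] == 1)
skl = LinearDiscriminantAnalysis(solver="lsqr").fit(X, y)
coef = skl.coef_[0]
cosine = w @ coef / (np.linalg.norm(w) * np.linalg.norm(coef))
print(round(cosine, 6))
```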

Try using the two features most correlated with the target

df = pd.read_csv('./iris.csv')

df = df[df['species'].isin(['setosa', 'versicolor'])].copy()

# Encode versicolor as -1 and setosa as 1

df['species'] = df['species'].map({'versicolor': -1, 'setosa': 1})

df.head()

| | sepal_length | sepal_width | petal_length | petal_width | species |
|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | 1 |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | 1 |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | 1 |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | 1 |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | 1 |

# Compute the correlation matrix

correlation_matrix = df.corr()

correlation_with_target = correlation_matrix['species'].drop('species')

correlation_with_target

sepal_length -0.728290

sepal_width 0.684019

petal_length -0.969955

petal_width -0.960158

Name: species, dtype: float64

# Select the two features with the highest absolute correlation with the target

selected_features = correlation_with_target.abs().nlargest(2).index

# Extract the features and the target

X_reduced = df[selected_features]

y = df['species']

X_reduced

| | petal_length | petal_width |
|---|---|---|
| 0 | 1.4 | 0.2 |
| 1 | 1.4 | 0.2 |
| 2 | 1.3 | 0.2 |
| 3 | 1.5 | 0.2 |
| 4 | 1.4 | 0.2 |
| ... | ... | ... |
| 95 | 4.2 | 1.2 |
| 96 | 4.2 | 1.3 |
| 97 | 4.3 | 1.3 |
| 98 | 3.0 | 1.1 |
| 99 | 4.1 | 1.3 |

100 rows × 2 columns

# Split the data into training and test sets

X_train_reduced, X_test_reduced, y_train, y_test = train_test_split(X_reduced, y, test_size=0.25, random_state=42)

lda = FishersLDA()

lda.fit(X_train_reduced.values, y_train)

y_pred = lda.predict(X_test_reduced.values)

# Evaluate the accuracy

accuracy = accuracy_score(y_test, y_pred)

print("Accuracy:", accuracy)

Accuracy: 1.0

z_projection = lda.get_z_projection(X_train_reduced)

# print(z_projection)

ind_pos = y_train.values==1

ind_neg = y_train.values==-1

z_pos = z_projection[ind_pos]

z_neg = z_projection[ind_neg]

hist_p, bin_edges_p = np.histogram(z_pos, bins=10)

hist_n, bin_edges_n = np.histogram(z_neg, bins=10)

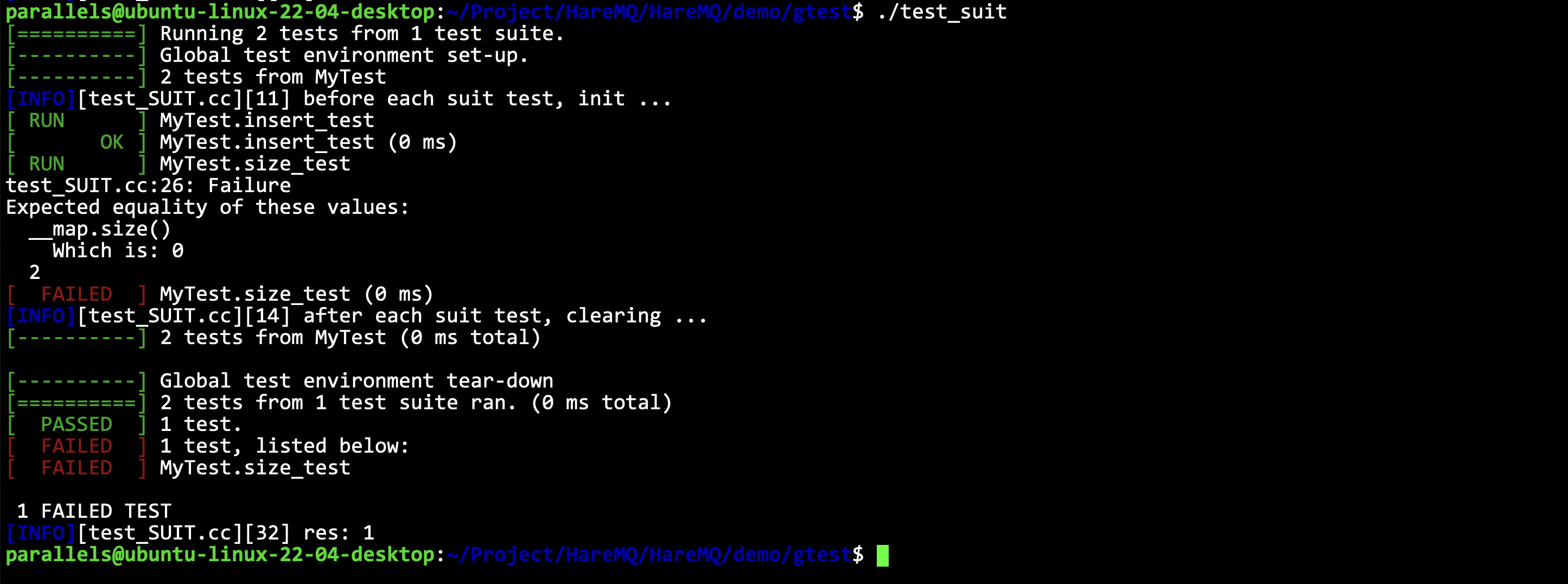

# 类分布的直方图

plt.bar(bin_edges_p[:-1], hist_p, color=['blue'], width=0.02)

plt.bar(bin_edges_n[:-1], hist_n, color=['orange'], width=0.02)

plt.xlabel('Class')

plt.ylabel('Frequency')

plt.title('Histogram of Class Distribution')

plt.show()
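The bars above are drawn at each bin's left edge with a fixed width of 0.02, which may not match the actual bin widths of the projected values. An alternative sketch lets matplotlib's `hist` do the binning and marks the decision threshold $f(x) = 0$ used by `FishersLDA.predict`. It runs on synthetic stand-ins for `z_pos`/`z_neg` (an assumption, so the block is self-contained) and selects the non-interactive Agg backend; in the notebook you would pass the real arrays and call `plt.show()`.

```python
import matplotlib
matplotlib.use("Agg")                    # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
import numpy as np

# Synthetic stand-ins for the projected values of each class (illustrative only)
rng = np.random.default_rng(3)
z_pos = rng.normal(3.0, 1.0, size=40)    # class +1 (setosa)
z_neg = rng.normal(-3.0, 1.0, size=35)   # class -1 (versicolor)

fig, ax = plt.subplots()
counts_p, edges_p, _ = ax.hist(z_pos, bins=10, alpha=0.6, label="setosa (+1)")
counts_n, edges_n, _ = ax.hist(z_neg, bins=10, alpha=0.6, label="versicolor (-1)")
ax.axvline(0.0, color="black", linestyle="--", label="threshold f(x) = 0")
ax.set_xlabel("Projected value f(x)")
ax.set_ylabel("Frequency")
ax.set_title("Histogram of Class Distribution")
ax.legend()
```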

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

clf = LinearDiscriminantAnalysis()

clf.fit(X_train_reduced, y_train)

y_pred_sklearn = clf.predict(X_test_reduced)

# Evaluate the accuracy

accuracy = accuracy_score(y_test, y_pred_sklearn)

print("Accuracy:", accuracy)

Accuracy: 1.0

Try LASSO regression for feature selection

df = pd.read_csv('./iris.csv')

df = df[df['species'].isin(['setosa', 'versicolor'])].copy()

# Encode versicolor as -1 and setosa as 1

df['species'] = df['species'].map({'versicolor': -1, 'setosa': 1})

columns_to_drop = ['species']

X = df.drop(columns=columns_to_drop, inplace=False)

y = df['species']

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

# A range of values for the regularization parameter alpha

alphas = np.logspace(-4, 2, 100)

coefs = []

for alpha in alphas:
    lasso = Lasso(alpha=alpha)
    lasso.fit(X_scaled, y)
    coefs.append(lasso.coef_)

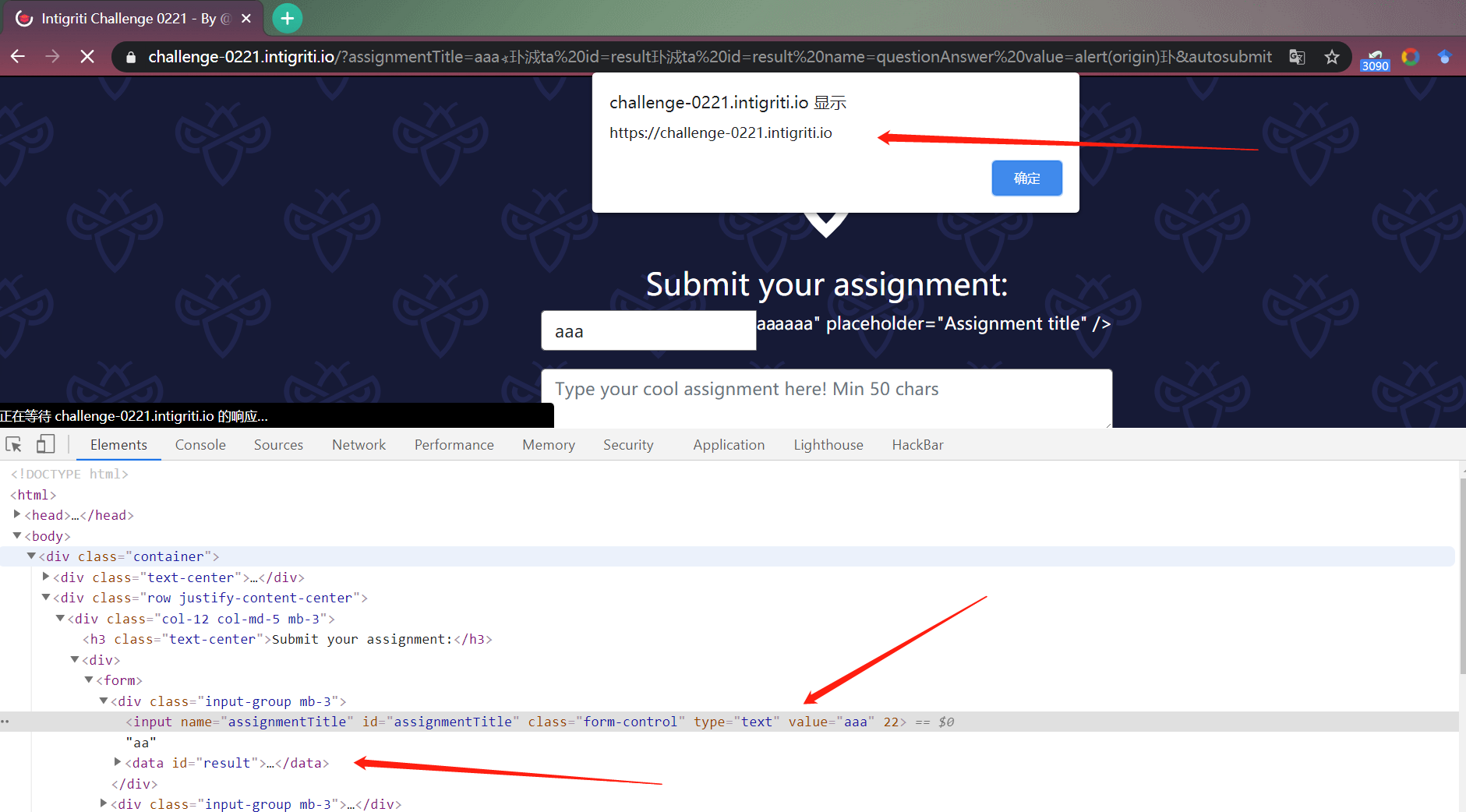

plt.figure(figsize=(10, 6))

for i in range(4):

plt.plot(alphas, [coef[i] for coef in coefs], label=f'Feature {i+1}')

plt.xscale('log')

plt.xlabel('Alpha')

plt.ylabel('Coefficient Value')

plt.title('LASSO Regression Coefficients Shrinkage')

plt.legend()

plt.grid(True)

plt.show()
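Instead of refitting `Lasso` in a loop, scikit-learn's `lasso_path` computes the whole coefficient path in one call. Unlike `Lasso`, it fits no intercept, so the sketch centers `y` first (a no-op here because the two classes are balanced). It uses scikit-learn's bundled copy of iris and then ranks features by the largest alpha at which each coefficient is still nonzero; reading "survives longest" as "most useful" is a common heuristic, not something the original notebook states.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import lasso_path
from sklearn.preprocessing import StandardScaler

X, target = load_iris(return_X_y=True)
X, target = X[target < 2], target[target < 2]
y = np.where(target == 0, 1.0, -1.0)          # setosa = 1, versicolor = -1, as above

X_scaled = StandardScaler().fit_transform(X)
y_centered = y - y.mean()                     # lasso_path fits no intercept, so center y

alphas = np.logspace(2, -4, 100)              # passed in decreasing order
alphas_out, coefs, _ = lasso_path(X_scaled, y_centered, alphas=alphas)
print(coefs.shape)                            # (4, 100): one row per feature

# Rank features by the largest alpha at which each coefficient is still nonzero
names = ["sepal_length", "sepal_width", "petal_length", "petal_width"]
last_active = [alphas_out[np.flatnonzero(row)].max() if np.any(row) else 0.0 for row in coefs]
for name, a in sorted(zip(names, last_active), key=lambda t: -t[1]):
    print(f"{name}: nonzero up to alpha ~ {a:.4g}")
```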

Full code and data

Link: https://pan.baidu.com/s/1igLuxvmivgGbr_8-SfYymg?pwd=mj24 (extraction code: mj24)
