目录

1.回忆

2.System::System

1.回忆

上篇博客中我们讲述了DynaSLAM中初始化Mask R-CNN网络部分的代码。

这篇博客我们讲述初始化DynaSLAM除Mask R-CNN网络部分以外的代码。

2.System::System

初始化Mask R-CNN网络后,程序执行:

// Create SLAM system. It initializes all system threads and gets ready to process frames. ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::STEREO,true);其中参数1为path_to_vocabulary,即ORB字典所在文件。

参数2为path_to_settings,即相机配置参数所在文件。

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer):mSensor(sensor), mpViewer(static_cast<Viewer*>(NULL)), mbReset(false),mbActivateLocalizationMode(false), mbDeactivateLocalizationMode(false)在其构造参数我们可以看出,其初始化了变量:

@mSensor:设置为stereo,即双目传感器模式。

@mpViewer:绘图句柄,设置为空。

@mbReset:复位标志,设置为false

@mbActivateLocalizationMode:是否仅定位不建图模式,设置为false。

@bUseViewer:是否使用viewer线程即显示建图,默认设置为true。

@mbDeactivateLocalizationMode:关闭定位模式的标志,默认设置为false。

本函数内我们要初始化一系列的东西:

①mpVocabulary:ORB字典的句柄

②mpKeyFrameDatabase:关键帧数据库的句柄,在这里初始化了倒排索引mvInvertedFile的大小为ORB字典的大小。

③mpMap:地图的句柄,在这里初始化了地图点中最大关键帧id mnMaxKFid为0,初始化了与地图中的重大变化相关的索引mnBigChangeIdx(循环闭合,全局BA)。

④mpFrameDrawer:帧绘制器的句柄,初始化追踪线程的状态mState为SYSTEM_NOT_READY(系统没有准备好),初始化关键帧画布mIm为480 * 640。

⑤mpMapDrawer:地图绘制器的句柄,读取yaml文件的内容初始化下列变量:mKeyFrameSize、mKeyFrameLineWidth、mGraphLineWidth、mPointSize、mCameraSize、mCameraLineWidth。

⑥mpTracker:追踪线程的句柄,初始化追踪线程并计算图像金字塔的参数:

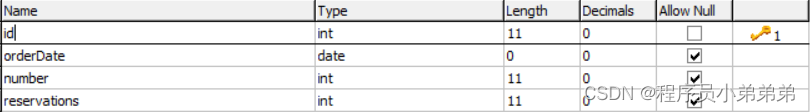

Tracking::Tracking(System *pSys, ORBVocabulary* pVoc, FrameDrawer *pFrameDrawer, MapDrawer *pMapDrawer, Map *pMap, KeyFrameDatabase* pKFDB, const string &strSettingPath, const int sensor): mState(NO_IMAGES_YET), mSensor(sensor), mbOnlyTracking(false), mbVO(false), mpORBVocabulary(pVoc), mpKeyFrameDB(pKFDB), mpInitializer(static_cast<Initializer*>(NULL)), mpSystem(pSys), mpViewer(NULL), mpFrameDrawer(pFrameDrawer), mpMapDrawer(pMapDrawer), mpMap(pMap), mnLastRelocFrameId(0) { // Load camera parameters from settings file cv::FileStorage fSettings(strSettingPath, cv::FileStorage::READ); float fx = fSettings["Camera.fx"]; float fy = fSettings["Camera.fy"]; float cx = fSettings["Camera.cx"]; float cy = fSettings["Camera.cy"]; cv::Mat K = cv::Mat::eye(3,3,CV_32F); K.at<float>(0,0) = fx; K.at<float>(1,1) = fy; K.at<float>(0,2) = cx; K.at<float>(1,2) = cy; K.copyTo(mK); cv::Mat DistCoef(4,1,CV_32F); DistCoef.at<float>(0) = fSettings["Camera.k1"]; DistCoef.at<float>(1) = fSettings["Camera.k2"]; DistCoef.at<float>(2) = fSettings["Camera.p1"]; DistCoef.at<float>(3) = fSettings["Camera.p2"]; const float k3 = fSettings["Camera.k3"]; if(k3!=0) { DistCoef.resize(5); DistCoef.at<float>(4) = k3; } DistCoef.copyTo(mDistCoef); mbf = fSettings["Camera.bf"]; float fps = fSettings["Camera.fps"]; if(fps==0) fps=30; // Max/Min Frames to insert keyframes and to check relocalisation mMinFrames = 0; mMaxFrames = fps; cout << endl << "Camera Parameters: " << endl; cout << "- fx: " << fx << endl; cout << "- fy: " << fy << endl; cout << "- cx: " << cx << endl; cout << "- cy: " << cy << endl; cout << "- k1: " << DistCoef.at<float>(0) << endl; cout << "- k2: " << DistCoef.at<float>(1) << endl; if(DistCoef.rows==5) cout << "- k3: " << DistCoef.at<float>(4) << endl; cout << "- p1: " << DistCoef.at<float>(2) << endl; cout << "- p2: " << DistCoef.at<float>(3) << endl; cout << "- fps: " << fps << endl; int nRGB = fSettings["Camera.RGB"]; mbRGB = nRGB; if(mbRGB) cout << "- color order: RGB (ignored if grayscale)" << endl; else cout << "- color order: BGR (ignored if grayscale)" << endl; // Load ORB parameters int nFeatures = fSettings["ORBextractor.nFeatures"]; float fScaleFactor = fSettings["ORBextractor.scaleFactor"]; int nLevels = fSettings["ORBextractor.nLevels"]; int fIniThFAST = fSettings["ORBextractor.iniThFAST"]; int fMinThFAST = fSettings["ORBextractor.minThFAST"]; mpORBextractorLeft = new ORBextractor(nFeatures,fScaleFactor,nLevels,fIniThFAST,fMinThFAST); if(sensor==System::STEREO) mpORBextractorRight = new ORBextractor(nFeatures,fScaleFactor,nLevels,fIniThFAST,fMinThFAST); if(sensor==System::MONOCULAR) mpIniORBextractor = new ORBextractor(2*nFeatures,fScaleFactor,nLevels,fIniThFAST,fMinThFAST); cout << endl << "ORB Extractor Parameters: " << endl; cout << "- Number of Features: " << nFeatures << endl; cout << "- Scale Levels: " << nLevels << endl; cout << "- Scale Factor: " << fScaleFactor << endl; cout << "- Initial Fast Threshold: " << fIniThFAST << endl; cout << "- Minimum Fast Threshold: " << fMinThFAST << endl; if(sensor==System::STEREO || sensor==System::RGBD) { mThDepth = mbf*(float)fSettings["ThDepth"]/fx; cout << endl << "Depth Threshold (Close/Far Points): " << mThDepth << endl; } if(sensor==System::RGBD) { mDepthMapFactor = fSettings["DepthMapFactor"]; if(fabs(mDepthMapFactor)<1e-5) mDepthMapFactor=1; else mDepthMapFactor = 1.0f/mDepthMapFactor; } }读取了相机的参数fx、fy、cx、cy组成相机内参矩阵K、相机畸变参数、mMinFrames、mMaxFrames。

初始化ORB提取器的句柄mpORBextractorLeft和mpORBextractorRight:

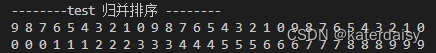

ORBextractor::ORBextractor(int _nfeatures, float _scaleFactor, int _nlevels, int _iniThFAST, int _minThFAST): nfeatures(_nfeatures), scaleFactor(_scaleFactor), nlevels(_nlevels), iniThFAST(_iniThFAST), minThFAST(_minThFAST) { mvScaleFactor.resize(nlevels); mvLevelSigma2.resize(nlevels); mvScaleFactor[0]=1.0f; mvLevelSigma2[0]=1.0f; for(int i=1; i<nlevels; i++) { mvScaleFactor[i]=mvScaleFactor[i-1]*scaleFactor; mvLevelSigma2[i]=mvScaleFactor[i]*mvScaleFactor[i]; } mvInvScaleFactor.resize(nlevels); mvInvLevelSigma2.resize(nlevels); for(int i=0; i<nlevels; i++) { mvInvScaleFactor[i]=1.0f/mvScaleFactor[i]; mvInvLevelSigma2[i]=1.0f/mvLevelSigma2[i]; } mvImagePyramid.resize(nlevels); mnFeaturesPerLevel.resize(nlevels); float factor = 1.0f / scaleFactor; float nDesiredFeaturesPerScale = nfeatures*(1 - factor)/(1 - (float)pow((double)factor, (double)nlevels)); int sumFeatures = 0; for( int level = 0; level < nlevels-1; level++ ) { mnFeaturesPerLevel[level] = cvRound(nDesiredFeaturesPerScale); sumFeatures += mnFeaturesPerLevel[level]; nDesiredFeaturesPerScale *= factor; } mnFeaturesPerLevel[nlevels-1] = std::max(nfeatures - sumFeatures, 0); const int npoints = 512; const Point* pattern0 = (const Point*)bit_pattern_31_; std::copy(pattern0, pattern0 + npoints, std::back_inserter(pattern)); //This is for orientation // pre-compute the end of a row in a circular patch umax.resize(HALF_PATCH_SIZE + 1); int v, v0, vmax = cvFloor(HALF_PATCH_SIZE * sqrt(2.f) / 2 + 1); int vmin = cvCeil(HALF_PATCH_SIZE * sqrt(2.f) / 2); const double hp2 = HALF_PATCH_SIZE*HALF_PATCH_SIZE; for (v = 0; v <= vmax; ++v) umax[v] = cvRound(sqrt(hp2 - v * v)); // Make sure we are symmetric for (v = HALF_PATCH_SIZE, v0 = 0; v >= vmin; --v) { while (umax[v0] == umax[v0 + 1]) ++v0; umax[v] = v0; ++v0; } }这里和ORB-SLAM2中相同,不再赘述,详细的解释见我的文章:

ORB-SLAM2 ---- ORBextractor::ORBextractor类解析

https://blog.csdn.net/qq_41694024/article/details/126288776

初始化深度阈值mThDepth,用于判定离群点。

⑦初始化局部建图线程句柄:初始了有关于一些局部建图进程的变量。

⑧初始化回环检测线程句柄:初始了有关于一些回环检测进程的变量。

@mpMatchedKF:最终检测出来的,和当前关键帧形成闭环的闭环关键帧。

@mLastLoopKFid:上一次闭环帧的id

@mbFixScale:是否固定尺度的标志,单目固定其他不固定。

⑨执行线程mptLocalMapping、mptLoopClosing

⑩我们默认使用绘图器,因为传入SLAM系统的时候设置bUseViewer = true了

因为要绘图,因此设置mbFinishRequested、mbFinished、mbStopped、mbStopRequested设置为true。设置fps、图片的宽高...。并设置线程mptViewer执行它,赋予跟踪线程,现在就有画布了。

⑪最后设置三大线程间的指针。