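Table of Contents

- Introduction to Inception
- InceptionV3 code implementation
  - Step 1: Define the basic convolution module
  - Step 2: Define the InceptionV3 modules
    - InceptionA
    - InceptionB
    - InceptionC
    - InceptionD
    - InceptionE
  - Step 3: Define the auxiliary classifier InceptionAux
  - Step 4: Build the GoogLeNet network
  - Step 5*: Initialize the network parameters
  - Complete code
- Paper reproduction code
  - Structure in the paper
  - Code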

Introduction to Inception

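The Inception network is an important milestone in the history of CNNs. Before Inception, most popular CNNs simply stacked more and more convolutional layers, making the network ever deeper in the hope of better performance. This approach has the following problems: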

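- The salient parts of an image can vary enormously in size.

- Because the position and extent of the information vary so much, it is hard to choose the right kernel size for a convolution: images whose information is distributed more globally favor larger kernels, while images whose information is more local favor smaller kernels.

- Very deep networks are more prone to overfitting, and it is hard to propagate gradient updates through the whole network.

- Naively stacking large convolutional layers is computationally very expensive.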

Inception module

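Solution:

Why not run filters of several sizes at the same level? The network then becomes somewhat "wider" rather than "deeper". This is the idea behind the authors' Inception module.

Inception module: it convolves the input with filters of 3 different sizes (1x1, 3x3, 5x5) and also applies max pooling. The outputs of all sub-layers are concatenated along the channel dimension and passed on to the next Inception module.

- On one hand this increases the width of the network; on the other hand it makes the network better at handling different scales.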

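Inception module with dimensionality reduction: as mentioned above, deep neural networks consume a lot of compute. To reduce the cost, the authors add an extra 1x1 convolution before the 3x3 and 5x5 convolutions to limit the number of input channels. Adding extra convolutions may seem counterintuitive, but a 1x1 convolution is much cheaper than a 5x5 convolution, and the reduced number of input channels lowers the cost of the following convolution as well.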
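As a rough illustration of the saving, the sketch below counts multiplications for a 5x5 convolution with and without a 1x1 reduction in front of it. The shapes (a 28x28x192 input, 32 output channels, a 16-channel 1x1 reduction) are illustrative assumptions, not values from this article, and the helper `conv_mults` is hypothetical; it ignores bias, BN and border effects.

```python
def conv_mults(h, w, c_in, c_out, k):
    """Multiplications for a stride-1 'same' conv: one k*k*c_in dot product per output element."""
    return h * w * c_out * (k * k * c_in)


# Assumed shapes: 28x28 feature map with 192 channels, 5x5 conv producing 32 channels
direct = conv_mults(28, 28, 192, 32, 5)

# Same output, but a 1x1 conv first reduces 192 -> 16 channels (assumed reduction width)
bottleneck = conv_mults(28, 28, 192, 16, 1) + conv_mults(28, 28, 16, 32, 5)

print(f"direct 5x5:       {direct:,}")
print(f"1x1 then 5x5:     {bottleneck:,}")
print(f"reduction factor: {direct / bottleneck:.1f}x")
```

With these assumed shapes the bottleneck version needs roughly a tenth of the multiplications, even though it adds a layer.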

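InceptionV1 (GoogLeNet)

- GoogLeNet is built from 9 stacked Inception modules and is 22 layers deep;

- To mitigate vanishing gradients, the network adds 2 auxiliary softmax classifiers that feed extra gradient into the earlier layers (used only during training).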

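Inception V2 adds Batch Normalization (BN) to the inputs of its layers:

- BN normalizes the data over each mini-batch to approximately zero mean and unit standard deviation (followed by a learnable scale and shift). This keeps the values in the sensitive region of the activation function, which helps avoid vanishing gradients and speeds up convergence.

- It speeds up model convergence and also has some regularizing effect that helps generalization.

- It reduces the need for dropout.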

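- The authors propose replacing a single 5x5 convolution with a small network of two consecutive 3x3 convolutions (stride = 1); this is the Inception V2 structure.

- A 5x5 kernel has 25/9 ≈ 2.78 times as many parameters as a 3x3 kernel.

- In addition, the authors factorize an n x n convolution into two convolutions, 1 x n and n x 1.

- Parallel branches are computationally more efficient than serial ones.

- The three principles above are used to build three different types of Inception modules.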
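The parameter claims above can be checked with a few lines of arithmetic. This sketch counts weights per input/output channel pair (bias ignored); the helper names are hypothetical, and the receptive-field formula assumes stride-1 convolutions stacked along one axis.

```python
def weights(kh, kw):
    """Weights of a kh x kw kernel for one input/output channel pair (bias ignored)."""
    return kh * kw


def receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1 convs along one axis: 1 + sum(k - 1)."""
    return 1 + sum(k - 1 for k in kernel_sizes)


# 5x5 vs. two stacked 3x3 convs: same 5x5 receptive field, fewer weights
five = weights(5, 5)
two_threes = 2 * weights(3, 3)
print(five, two_threes, receptive_field([3, 3]))  # 25 18 5
print(f"5x5 / 3x3 = {five / weights(3, 3):.2f}")  # 2.78

# n x n vs. 1 x n followed by n x 1
for n in (3, 5, 7):
    full = weights(n, n)
    factorized = weights(1, n) + weights(n, 1)
    print(n, full, factorized, f"saving {1 - factorized / full:.0%}")
```

The saving of the asymmetric factorization grows with n (2n weights instead of n²), which is why Inception V3 applies it to 7x7 convolutions.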

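InceptionV3 network architecture

- InceptionV3 incorporates all of the Inception v2 upgrades described above, and also uses 7x7 convolutions (factorized into 1x7 and 7x1).

- InceptionV3 is currently one of the most widely used Inception variants.

Design ideas and tricks of Inception V3:

(1) Factorizing into small convolutions is very effective: it reduces the number of parameters, mitigates overfitting, and increases the network's nonlinear expressive power.

(2) From input to output, a convolutional network should gradually shrink the feature maps spatially while increasing the number of output channels; that is, it progressively simplifies the spatial structure and converts spatial information into high-level abstract features.

(3) Using an Inception module whose branches extract high-level features at different levels of abstraction is very effective and enriches the network's expressive power.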

InceptionV4

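- The left figure shows the basic Inception v2/v3 module: it replaces the 5x5 convolution with two 3x3 convolutions and uses average pooling. This module mainly processes 35x35 feature maps.

- The middle module replaces n x n convolutions with 1 x n and n x 1 convolutions, also uses average pooling, and mainly processes 17x17 feature maps.

- The right figure replaces the 3x3 convolution with a 1x3 and a 3x1 convolution.

Overall, the basic Inception module in Inception v4 still follows the Inception v2/v3 structure; the architecture just looks more concise and uniform, and using more Inception modules gives better experimental results.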

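Advantages of the Inception models:

- They use 1x1 convolutions, which are cost-effective: with very little computation they add an extra layer of feature transformation and nonlinearity.

- They introduced Batch Normalization, which pulls the distribution of each layer's inputs toward a normal distribution with mean 0 and variance 1, keeping the values in the sensitive region of the activation function, avoiding vanishing gradients and speeding up convergence.

- They introduced the Inception module, a structure that combines 4 branches.

Transfer learning with CNNs

- The structures most commonly used in engineering today are still VGG, ResNet and Inception. Designers usually first train the original models directly on their data, then pick the better-performing model for fine-tuning and slimming.

- Models used in production must be fast as well as accurate.

- Common ways to slim a model are reducing the number of convolutions and reducing the number of ResNet blocks.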

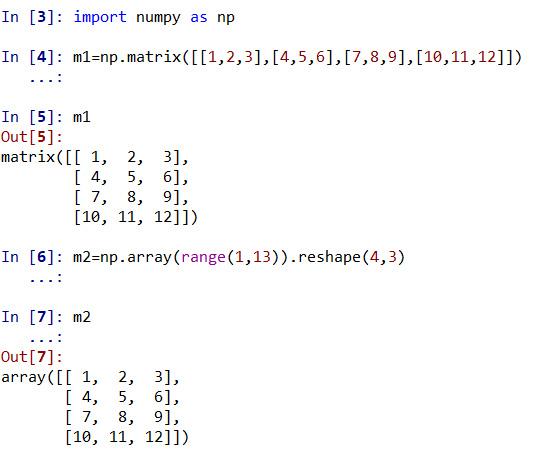

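InceptionV3 code implementation

The first example is based on the following article:

Original article: GoogLeNet InceptionV3代码复现+超详细注释(PyTorch) (GoogLeNet InceptionV3 code reproduction with detailed comments, PyTorch)

Many thanks to the author!

Step 1: Define the basic convolution module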

BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

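Purpose: a BatchNorm2d layer follows every convolution to normalize its output, so that the values fed into the ReLU do not grow so large that they destabilize the network.

- num_features: the input usually has shape batch_size x num_features x height x width; num_features is the number of features, i.e. the number of input channels of the BN layer;

- eps: a value added to the denominator for numerical stability, to avoid dividing by 0; default 1e-5 (BasicConv2d below uses eps=0.001);

- momentum: the factor used to update the running estimates of the mean and variance during training, running = (1 - momentum) x running + momentum x batch statistic; default 0.1 (note that this convention differs from the momentum of an SGD optimizer);

- affine: when True, the layer has learnable per-channel scale and shift parameters gamma and beta;

- track_running_stats: when True, the layer keeps running estimates of the mean and variance and uses them instead of the batch statistics in eval mode.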
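To see what these parameters control, here is a plain-Python sketch of training-mode batch normalization for a single channel, plus the running-mean update that `momentum` drives. The function names are hypothetical; this illustrates the formulas and is not PyTorch's implementation. gamma and beta default to 1 and 0, the values `affine=True` starts from.

```python
import math


def batch_norm_channel(values, eps=1e-5, gamma=1.0, beta=0.0):
    """Training-mode BN for one channel: normalize with batch mean/var, then scale and shift."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance is used to normalize
    out = [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]
    return out, mean, var


def update_running_mean(running_mean, batch_mean, momentum=0.1):
    """PyTorch's convention: new = (1 - momentum) * old + momentum * batch statistic."""
    return (1 - momentum) * running_mean + momentum * batch_mean


out, mean, var = batch_norm_channel([1.0, 2.0, 3.0, 4.0])
print(mean, var)                       # 2.5 1.25
print([round(v, 3) for v in out])      # zero mean, roughly unit variance
print(update_running_mean(0.0, mean))  # running_mean starts at 0 and moves 10% toward 2.5
```

One detail the sketch leaves out: PyTorch's documentation states that the running variance is updated with the unbiased batch variance (5/3 for this batch), while the normalization itself uses the biased one (1.25).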

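```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BasicConv2d(nn.Module):
    """Conv2d (no bias) -> BatchNorm2d -> ReLU."""

    def __init__(self, in_channels, out_channels, **kwargs):
        super(BasicConv2d, self).__init__()
        # bias=False: the following BatchNorm already has a learnable shift (beta)
        self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
        self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        return F.relu(x, inplace=True)
```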

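Step 2: Define the InceptionV3 modules

PyTorch (torchvision) provides five basic Inception modules, InceptionA through InceptionE, plus the auxiliary classifier InceptionAux.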

InceptionA

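Output: a feature map with the same spatial size as the input and 224 + pool_features channels.

Suppose the input is (35, 35, 192):

First branch:

branch1x1 has 64 1x1 kernels, producing the first feature map (35, 35, 64);

Second branch:

branch5x5_1 has 48 1x1 kernels, giving (35, 35, 48);

branch5x5_2 then has 64 5x5 kernels with padding 2, so the spatial size is unchanged and the second feature map is finally (35, 35, 64);

Third branch:

branch3x3dbl_1 has 64 1x1 kernels, giving (35, 35, 64);

branch3x3dbl_2 has 96 3x3 kernels with padding 1; the size is unchanged, giving (35, 35, 96);

finally branch3x3dbl_3 has 96 3x3 kernels with padding 1, leaving both size and channel count unchanged, so the third feature map is finally (35, 35, 96);

Fourth branch:

avg_pool2d with a 3x3 window, stride 1 and padding 1 keeps both size and channel count unchanged: (35, 35, 192);

branch_pool then applies pool_features 1x1 convolutions, so the fourth feature map is finally (35, 35, pool_features);

Finally the four feature maps are concatenated along the channel dimension, giving a (35, 35, 64+64+96+pool_features) feature map.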
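The channel bookkeeping above can be checked in plain Python. The (in_channels, pool_features) pairs below are taken from Mixed_5b, Mixed_5c and Mixed_5d in the GoogLeNet code of Step 4; the helper name is hypothetical.

```python
def inception_a_out_channels(pool_features):
    """InceptionA output channels: 1x1 (64) + 5x5 (64) + double 3x3 (96) + pool branch."""
    return 64 + 64 + 96 + pool_features


# (in_channels, pool_features) of Mixed_5b, Mixed_5c, Mixed_5d
blocks = [(192, 32), (256, 64), (288, 64)]
outs = [inception_a_out_channels(pf) for _, pf in blocks]
print(outs)  # [256, 288, 288]

# Each block's output channels must equal the next block's in_channels
for out_ch, (next_in, _) in zip(outs, blocks[1:]):
    assert out_ch == next_in, (out_ch, next_in)
```

Mixed_5d ends with 288 channels, which is why the following InceptionB is built as `inception_b(288)`.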

```python
# --- InceptionA: keeps H x W, outputs 224 + pool_features channels ---
class InceptionA(nn.Module):
    def __init__(self, in_channels, pool_features, conv_block=None):
        super(InceptionA, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        # Branch 1: 1x1 conv
        self.branch1x1 = conv_block(in_channels, 64, kernel_size=1)
        # Branch 2: 1x1 reduction -> 5x5 conv
        self.branch5x5_1 = conv_block(in_channels, 48, kernel_size=1)
        self.branch5x5_2 = conv_block(48, 64, kernel_size=5, padding=2)
        # Branch 3: 1x1 reduction -> two stacked 3x3 convs
        self.branch3x3dbl_1 = conv_block(in_channels, 64, kernel_size=1)
        self.branch3x3dbl_2 = conv_block(64, 96, kernel_size=3, padding=1)
        self.branch3x3dbl_3 = conv_block(96, 96, kernel_size=3, padding=1)
        # Branch 4: 3x3 average pooling -> 1x1 conv
        self.branch_pool = conv_block(in_channels, pool_features, kernel_size=1)

    def _forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch5x5, branch3x3dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        # Concatenate along the channel dimension
        return torch.cat(outputs, 1)
```

InceptionB

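Output: a feature map with half the spatial size of the input and 480 + in_channels channels (768 for a 288-channel input).

Suppose the input is (35, 35, 288):

First branch:

branch3x3 has 384 3x3 kernels with stride 2; since ⌊(35 - 3 + 2x0)/2⌋ + 1 = 17, the first feature map is (17, 17, 384);

Second branch:

branch3x3dbl_1 has 64 1x1 kernels; the size is unchanged: (35, 35, 64);

branch3x3dbl_2 has 96 3x3 kernels with padding 1; the size is unchanged: (35, 35, 96);

branch3x3dbl_3 has 96 3x3 kernels with stride 2; ⌊(35 - 3 + 2x0)/2⌋ + 1 = 17, so the second feature map is (17, 17, 96);

Third branch:

max_pool2d with a 3x3 window and stride 2 downsamples by a factor of 2 while keeping the channel count, so the third feature map is (17, 17, 288);

Finally the three feature maps are concatenated, giving a (17 (i.e. Hin/2), 17 (i.e. Win/2), 384+96+288 (Cin) = 768) feature map.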
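The downsampling arithmetic in this walkthrough follows the usual output-size rule for Conv2d and max_pool2d (dilation 1, no ceil mode): out = ⌊(in + 2 x padding - kernel) / stride⌋ + 1. A small sketch with a hypothetical helper name, checking that all three InceptionB branches agree on the spatial size (otherwise `torch.cat` would fail):

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output length along one spatial axis for Conv2d / max_pool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


h = 35  # InceptionB input: 35 x 35 x 288
branch3x3 = out_size(h, 3, stride=2)  # 3x3 conv, stride 2
# 1x1 -> 3x3 (padding 1) -> 3x3 (stride 2)
branch3x3dbl = out_size(out_size(out_size(h, 1), 3, padding=1), 3, stride=2)
branch_pool = out_size(h, 3, stride=2)  # 3x3 max pool, stride 2
print(branch3x3, branch3x3dbl, branch_pool)  # 17 17 17

in_channels = 288
print(384 + 96 + in_channels)  # 768
```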

```python
# --- InceptionB: halves H x W, outputs 384 + 96 + in_channels channels ---
class InceptionB(nn.Module):
    def __init__(self, in_channels, conv_block=None):
        super(InceptionB, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        # Branch 1: 3x3 conv, stride 2
        self.branch3x3 = conv_block(in_channels, 384, kernel_size=3, stride=2)
        # Branch 2: 1x1 -> 3x3 -> 3x3 (stride 2)
        self.branch3x3dbl_1 = conv_block(in_channels, 64, kernel_size=1)
        self.branch3x3dbl_2 = conv_block(64, 96, kernel_size=3, padding=1)
        self.branch3x3dbl_3 = conv_block(96, 96, kernel_size=3, stride=2)

    def _forward(self, x):
        branch3x3 = self.branch3x3(x)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

        # Branch 3: 3x3 max pooling, stride 2 (channel count unchanged)
        branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

        outputs = [branch3x3, branch3x3dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)
```

InceptionC

Output: a feature map with the same spatial size as the input and 768 channels.

Suppose the input is (17, 17, 768):

First branch:

branch1x1 has 192 1x1 kernels, producing the first feature map (17, 17, 192);

Second branch:

branch7x7_1 has c7 1x1 kernels, giving (17, 17, c7);

branch7x7_2 has c7 1x7 kernels with padding (0, 3); the size is unchanged: (17, 17, c7);

branch7x7_3 has 192 7x1 kernels with padding (3, 0); the size is unchanged, so the second feature map is finally (17, 17, 192);

Third branch:

branch7x7dbl_1 has c7 1x1 kernels, giving (17, 17, c7);

branch7x7dbl_2 has c7 7x1 kernels with padding (3, 0); the size is unchanged: (17, 17, c7);

branch7x7dbl_3 has c7 1x7 kernels with padding (0, 3); the size is unchanged: (17, 17, c7);

branch7x7dbl_4 has c7 7x1 kernels with padding (3, 0); the size is unchanged: (17, 17, c7);

branch7x7dbl_5 has 192 1x7 kernels with padding (0, 3); the size is unchanged, so the third feature map is finally (17, 17, 192);

Fourth branch:

avg_pool2d with a 3x3 window, stride 1 and padding 1 keeps both size and channel count unchanged: (17, 17, 768);

branch_pool then applies 192 1x1 convolutions, so the fourth feature map is finally (17, 17, 192);

Finally the four feature maps are concatenated, giving a (17, 17, 192+192+192+192=768) feature map.
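To quantify what the 1x7/7x1 factorization buys in InceptionC, this sketch counts the weights of `branch7x7` for `channels_7x7=128` (the value used by Mixed_6b) and compares them with a single 7x7 convolution mapping c7 to 192 channels. That single 7x7 layer is a hypothetical alternative for comparison only; it does not appear in the code. Both options cover a 7x7 receptive field.

```python
def weights(kh, kw, c_in, c_out):
    """Conv weights, bias ignored (BasicConv2d uses bias=False anyway)."""
    return kh * kw * c_in * c_out


c7 = 128  # channels_7x7 of Mixed_6b

# branch7x7 in InceptionC: 1x7 (c7 -> c7) followed by 7x1 (c7 -> 192)
factorized = weights(1, 7, c7, c7) + weights(7, 1, c7, 192)

# Hypothetical alternative: one 7x7 conv from c7 to 192 channels
full_7x7 = weights(7, 7, c7, 192)

print(factorized, full_7x7, f"{factorized / full_7x7:.1%}")
```

Under these assumptions the factorized branch uses less than a quarter of the weights of the single 7x7 layer.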

```python
# --- InceptionC: keeps H x W, outputs 4 x 192 = 768 channels ---
class InceptionC(nn.Module):
    def __init__(self, in_channels, channels_7x7, conv_block=None):
        super(InceptionC, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        # Branch 1: 1x1 conv
        self.branch1x1 = conv_block(in_channels, 192, kernel_size=1)

        c7 = channels_7x7
        # Branch 2: 1x1 -> 1x7 -> 7x1 (one factorized 7x7)
        self.branch7x7_1 = conv_block(in_channels, c7, kernel_size=1)
        self.branch7x7_2 = conv_block(c7, c7, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7_3 = conv_block(c7, 192, kernel_size=(7, 1), padding=(3, 0))
        # Branch 3: 1x1 -> 7x1 -> 1x7 -> 7x1 -> 1x7 (two factorized 7x7s)
        self.branch7x7dbl_1 = conv_block(in_channels, c7, kernel_size=1)
        self.branch7x7dbl_2 = conv_block(c7, c7, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_3 = conv_block(c7, c7, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7dbl_4 = conv_block(c7, c7, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_5 = conv_block(c7, 192, kernel_size=(1, 7), padding=(0, 3))
        # Branch 4: 3x3 average pooling -> 1x1 conv
        self.branch_pool = conv_block(in_channels, 192, kernel_size=1)

    def _forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch7x7 = self.branch7x7_1(x)
        branch7x7 = self.branch7x7_2(branch7x7)
        branch7x7 = self.branch7x7_3(branch7x7)

        branch7x7dbl = self.branch7x7dbl_1(x)
        branch7x7dbl = self.branch7x7dbl_2(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_3(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_4(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_5(branch7x7dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch7x7, branch7x7dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)
```

InceptionD

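Output: a feature map with half the spatial size of the input and 512 + in_channels channels (1280 for a 768-channel input).

Suppose the input is (17, 17, 768):

First branch:

branch3x3_1 has 192 1x1 kernels, giving (17, 17, 192);

branch3x3_2 then has 320 3x3 kernels with stride 2; since ⌊(17 - 3 + 2x0)/2⌋ + 1 = 8, the first feature map is finally (8, 8, 320);

Second branch:

branch7x7x3_1 has 192 1x1 kernels; the size is unchanged: (17, 17, 192);

branch7x7x3_2 has 192 1x7 kernels with padding (0, 3); the size is unchanged: (17, 17, 192);

branch7x7x3_3 has 192 7x1 kernels with padding (3, 0); the size is unchanged: (17, 17, 192);

finally branch7x7x3_4 has 192 3x3 kernels with stride 2, so the second feature map is finally (8, 8, 192);

Third branch:

max_pool2d with a 3x3 window and stride 2 downsamples by a factor of 2 while keeping the channel count, so the third feature map is (8, 8, 768);

Finally the three feature maps are concatenated, giving an (8 (i.e. Hin/2), 8 (i.e. Win/2), 320+192+768 (Cin) = 1280) feature map.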
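The same output-size rule confirms that the three InceptionD branches agree on 8x8, and that, as in InceptionB, the max-pool branch passes its input channels straight through. This sketch traces the height axis only (the width axis gives the same numbers with the 1x7/7x1 roles swapped); the helper names are hypothetical.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output length along one axis for Conv2d / max_pool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


h = 17  # InceptionD input: 17 x 17 x 768

# Branch 1 (height axis): 1x1 -> 3x3 stride 2
b1 = out_size(out_size(h, 1), 3, stride=2)

# Branch 2 (height axis): 1x1 -> 1x7 (kernel height 1, pad 0) -> 7x1 (kernel height 7, pad 3) -> 3x3 stride 2
b2 = h
for k, p in [(1, 0), (1, 0), (7, 3)]:
    b2 = out_size(b2, k, padding=p)
b2 = out_size(b2, 3, stride=2)

# Branch 3: 3x3 max pool, stride 2
b3 = out_size(h, 3, stride=2)
print(b1, b2, b3)  # 8 8 8


def inception_d_out_channels(in_channels):
    """320 (branch 1) + 192 (branch 2) + in_channels (the max-pool branch keeps its channels)."""
    return 320 + 192 + in_channels


print(inception_d_out_channels(768))  # 1280
```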

```python
# --- InceptionD: halves H x W, outputs 320 + 192 + in_channels channels ---
class InceptionD(nn.Module):
    def __init__(self, in_channels, conv_block=None):
        super(InceptionD, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        # Branch 1: 1x1 -> 3x3 (stride 2)
        self.branch3x3_1 = conv_block(in_channels, 192, kernel_size=1)
        self.branch3x3_2 = conv_block(192, 320, kernel_size=3, stride=2)
        # Branch 2: 1x1 -> 1x7 -> 7x1 -> 3x3 (stride 2)
        self.branch7x7x3_1 = conv_block(in_channels, 192, kernel_size=1)
        self.branch7x7x3_2 = conv_block(192, 192, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7x3_3 = conv_block(192, 192, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7x3_4 = conv_block(192, 192, kernel_size=3, stride=2)

    def _forward(self, x):
        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)

        branch7x7x3 = self.branch7x7x3_1(x)
        branch7x7x3 = self.branch7x7x3_2(branch7x7x3)
        branch7x7x3 = self.branch7x7x3_3(branch7x7x3)
        branch7x7x3 = self.branch7x7x3_4(branch7x7x3)

        # Branch 3: 3x3 max pooling, stride 2 (channel count unchanged)
        branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

        outputs = [branch3x3, branch7x7x3, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)
```

InceptionE

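Output: a feature map with the same spatial size as the input and 2048 channels.

Suppose the input is (8, 8, 1280):

First branch:

branch1x1 has 320 1x1 kernels, producing the first feature map (8, 8, 320);

Second branch:

branch3x3_1 has 384 1x1 kernels, giving (8, 8, 384);

sub-branch branch3x3_2a has 384 1x3 kernels with padding (0, 1); the size is unchanged: (8, 8, 384);

sub-branch branch3x3_2b has 384 3x1 kernels with padding (1, 0); the size is unchanged: (8, 8, 384);

both sub-branches take the output of branch3x3_1 as input, and their outputs are concatenated, so the second feature map is finally (8, 8, 384+384=768);

Third branch:

branch3x3dbl_1 has 448 1x1 kernels, giving (8, 8, 448);

branch3x3dbl_2 has 384 3x3 kernels with padding 1; the size is unchanged: (8, 8, 384);

sub-branch branch3x3dbl_3a has 384 1x3 kernels with padding (0, 1); the size is unchanged: (8, 8, 384);

sub-branch branch3x3dbl_3b has 384 3x1 kernels with padding (1, 0); the size is unchanged: (8, 8, 384);

the two sub-branch outputs are concatenated, so the third feature map is finally (8, 8, 384+384=768);

Fourth branch:

avg_pool2d with a 3x3 window, stride 1 and padding 1 keeps both size and channel count unchanged: (8, 8, 1280);

branch_pool then applies 192 1x1 convolutions, so the fourth feature map is finally (8, 8, 192);

Finally the four feature maps are concatenated, giving an (8, 8, 320+768+768+192=2048) feature map.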
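Unlike InceptionB and InceptionD, every InceptionE branch (including the pooling branch) ends in a convolution with a fixed width, so the output channel count does not depend on in_channels. That is why Mixed_7b (1280 in) and Mixed_7c (2048 in) both produce 2048 channels. A quick check, using a hypothetical helper for the output-size rule:

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output length along one axis for Conv2d (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


h = w = 8  # InceptionE input: 8 x 8
# 1x3 conv, padding (0, 1): kernel height 1 / pad 0, kernel width 3 / pad 1
print(out_size(h, 1, padding=0), out_size(w, 3, padding=1))  # 8 8
# 3x1 conv, padding (1, 0): kernel height 3 / pad 1, kernel width 1 / pad 0
print(out_size(h, 3, padding=1), out_size(w, 1, padding=0))  # 8 8

branches = {
    "branch1x1": 320,
    "branch3x3 (2a + 2b)": 384 + 384,
    "branch3x3dbl (3a + 3b)": 384 + 384,
    "branch_pool": 192,
}
total = sum(branches.values())
print(total)  # 2048, whether in_channels is 1280 or 2048
```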

```python
# --- InceptionE: keeps H x W, outputs 320 + 768 + 768 + 192 = 2048 channels ---
class InceptionE(nn.Module):
    def __init__(self, in_channels, conv_block=None):
        super(InceptionE, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        # Branch 1: 1x1 conv
        self.branch1x1 = conv_block(in_channels, 320, kernel_size=1)
        # Branch 2: 1x1 -> parallel (1x3, 3x1)
        self.branch3x3_1 = conv_block(in_channels, 384, kernel_size=1)
        self.branch3x3_2a = conv_block(384, 384, kernel_size=(1, 3), padding=(0, 1))
        self.branch3x3_2b = conv_block(384, 384, kernel_size=(3, 1), padding=(1, 0))
        # Branch 3: 1x1 -> 3x3 -> parallel (1x3, 3x1)
        self.branch3x3dbl_1 = conv_block(in_channels, 448, kernel_size=1)
        self.branch3x3dbl_2 = conv_block(448, 384, kernel_size=3, padding=1)
        self.branch3x3dbl_3a = conv_block(384, 384, kernel_size=(1, 3), padding=(0, 1))
        self.branch3x3dbl_3b = conv_block(384, 384, kernel_size=(3, 1), padding=(1, 0))
        # Branch 4: 3x3 average pooling -> 1x1 conv
        self.branch_pool = conv_block(in_channels, 192, kernel_size=1)

    def _forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = [
            self.branch3x3_2a(branch3x3),
            self.branch3x3_2b(branch3x3),
        ]
        branch3x3 = torch.cat(branch3x3, 1)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = [
            self.branch3x3dbl_3a(branch3x3dbl),
            self.branch3x3dbl_3b(branch3x3dbl),
        ]
        branch3x3dbl = torch.cat(branch3x3dbl, 1)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch3x3, branch3x3dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)
```

Step 3: Define the auxiliary classifier InceptionAux

```python
class InceptionAux(nn.Module):
    def __init__(self, in_channels, num_classes, conv_block=None):
        super(InceptionAux, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.conv0 = conv_block(in_channels, 128, kernel_size=1)
        self.conv1 = conv_block(128, 768, kernel_size=5)
        # The stddev attributes come from torchvision, where they are hints for its own
        # truncated-normal weight init; the Step 5 initializer in this article does not read them
        self.conv1.stddev = 0.01
        self.fc = nn.Linear(768, num_classes)
        self.fc.stddev = 0.001

    def forward(self, x):
        # N x 768 x 17 x 17
        x = F.avg_pool2d(x, kernel_size=5, stride=3)
        # N x 768 x 5 x 5
        x = self.conv0(x)
        # N x 128 x 5 x 5
        x = self.conv1(x)
        # N x 768 x 1 x 1
        # Adaptive average pooling: a no-op for 299 x 299 inputs, but handles larger feature maps
        x = F.adaptive_avg_pool2d(x, (1, 1))
        # N x 768 x 1 x 1
        x = torch.flatten(x, 1)
        # N x 768
        x = self.fc(x)
        # N x num_classes
        return x
```
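The shape comments in InceptionAux can be reproduced with the output-size rule. The sketch below traces the spatial size through the auxiliary head; the helper names are hypothetical, and the 23x23 case is an assumed larger feature map used only to show when `adaptive_avg_pool2d` actually does something.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output length along one axis for Conv2d / avg_pool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


def aux_spatial_trace(size):
    """Spatial size through InceptionAux: avg_pool(5, stride 3) -> 1x1 conv -> 5x5 conv (no padding)."""
    after_pool = out_size(size, 5, stride=3)
    after_conv0 = out_size(after_pool, 1)
    after_conv1 = out_size(after_conv0, 5)
    return after_pool, after_conv0, after_conv1


print(aux_spatial_trace(17))  # (5, 5, 1): the adaptive pooling that follows is a no-op
print(aux_spatial_trace(23))  # assumed larger input: conv1 output is larger than 1x1
```

The trace also shows the lower limit: the unpadded 5x5 conv1 needs at least a 5x5 input, so the aux head requires a feature map of at least 17x17.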

Step 4: Build the GoogLeNet network

```python
# ----------------------- Build the GoogLeNet (torchvision-style Inception v3) network -----------------------
class GoogLeNet(nn.Module):
    def __init__(self, num_classes=1000, aux_logits=True, transform_input=False,
                 inception_blocks=None):
        super(GoogLeNet, self).__init__()
        if inception_blocks is None:
            inception_blocks = [
                BasicConv2d, InceptionA, InceptionB, InceptionC,
                InceptionD, InceptionE, InceptionAux
            ]
        assert len(inception_blocks) == 7
        conv_block = inception_blocks[0]
        inception_a = inception_blocks[1]
        inception_b = inception_blocks[2]
        inception_c = inception_blocks[3]
        inception_d = inception_blocks[4]
        inception_e = inception_blocks[5]
        inception_aux = inception_blocks[6]

        self.aux_logits = aux_logits
        # Kept for compatibility with torchvision's signature; not used in this simplified version
        self.transform_input = transform_input

        # Stem
        self.Conv2d_1a_3x3 = conv_block(3, 32, kernel_size=3, stride=2)
        self.Conv2d_2a_3x3 = conv_block(32, 32, kernel_size=3)
        self.Conv2d_2b_3x3 = conv_block(32, 64, kernel_size=3, padding=1)
        self.Conv2d_3b_1x1 = conv_block(64, 80, kernel_size=1)
        self.Conv2d_4a_3x3 = conv_block(80, 192, kernel_size=3)
        # 35 x 35 stage
        self.Mixed_5b = inception_a(192, pool_features=32)
        self.Mixed_5c = inception_a(256, pool_features=64)
        self.Mixed_5d = inception_a(288, pool_features=64)
        # 17 x 17 stage
        self.Mixed_6a = inception_b(288)
        self.Mixed_6b = inception_c(768, channels_7x7=128)
        self.Mixed_6c = inception_c(768, channels_7x7=160)
        self.Mixed_6d = inception_c(768, channels_7x7=160)
        self.Mixed_6e = inception_c(768, channels_7x7=192)
        if aux_logits:
            self.AuxLogits = inception_aux(768, num_classes)
        # 8 x 8 stage
        self.Mixed_7a = inception_d(768)
        self.Mixed_7b = inception_e(1280)
        self.Mixed_7c = inception_e(2048)
        self.fc = nn.Linear(2048, num_classes)

    def _forward(self, x):
        # Stem: a 299 x 299 x 3 input passes through 5 convolutions and 2 max-pooling layers
        # N x 3 x 299 x 299
        x = self.Conv2d_1a_3x3(x)
        # N x 32 x 149 x 149
        x = self.Conv2d_2a_3x3(x)
        # N x 32 x 147 x 147
        x = self.Conv2d_2b_3x3(x)
        # N x 64 x 147 x 147
        x = F.max_pool2d(x, kernel_size=3, stride=2)
        # N x 64 x 73 x 73
        x = self.Conv2d_3b_1x1(x)
        # N x 80 x 73 x 73
        x = self.Conv2d_4a_3x3(x)
        # N x 192 x 71 x 71
        x = F.max_pool2d(x, kernel_size=3, stride=2)
        # N x 192 x 35 x 35

        # Then 3 x InceptionA, 1 x InceptionB, 4 x InceptionC, 1 x InceptionD and 2 x InceptionE;
        # the auxiliary classifier AuxLogits takes the output of the last InceptionC as its input.
        x = self.Mixed_5b(x)  # InceptionA(192, pool_features=32)
        # N x 256 x 35 x 35
        x = self.Mixed_5c(x)  # InceptionA(256, pool_features=64)
        # N x 288 x 35 x 35
        x = self.Mixed_5d(x)  # InceptionA(288, pool_features=64)
        # N x 288 x 35 x 35
        x = self.Mixed_6a(x)  # InceptionB(288)
        # N x 768 x 17 x 17
        x = self.Mixed_6b(x)  # InceptionC(768, channels_7x7=128)
        # N x 768 x 17 x 17
        x = self.Mixed_6c(x)  # InceptionC(768, channels_7x7=160)
        # N x 768 x 17 x 17
        x = self.Mixed_6d(x)  # InceptionC(768, channels_7x7=160)
        # N x 768 x 17 x 17
        x = self.Mixed_6e(x)  # InceptionC(768, channels_7x7=192)
        # N x 768 x 17 x 17
        # aux only exists in training mode with aux_logits=True; default to None so the return always works
        aux = None
        if self.training and self.aux_logits:
            aux = self.AuxLogits(x)  # InceptionAux(768, num_classes)
        # N x 768 x 17 x 17
        x = self.Mixed_7a(x)  # InceptionD(768)
        # N x 1280 x 8 x 8
        x = self.Mixed_7b(x)  # InceptionE(1280)
        # N x 2048 x 8 x 8
        x = self.Mixed_7c(x)  # InceptionE(2048)
        # N x 2048 x 8 x 8

        # Classifier: global average pooling + dropout + flatten + fully connected layer
        x = F.adaptive_avg_pool2d(x, (1, 1))
        # N x 2048 x 1 x 1
        x = F.dropout(x, training=self.training)
        # N x 2048 x 1 x 1
        x = torch.flatten(x, 1)  # flatten each sample into a 1D vector before the FC layer
        # N x 2048
        x = self.fc(x)
        # N x num_classes
        return x, aux

    def forward(self, x):
        # Returns (logits, aux_logits); aux_logits is None in eval mode or when aux_logits=False
        x, aux = self._forward(x)
        return x, aux
```
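The shape comments in `_forward` follow mechanically from the kernel, stride and padding of each layer. This plain-Python sketch recomputes them for a 299x299 input; the helper name and the layer table are this sketch's own, transcribed from the `__init__` and `_forward` code above.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Conv2d / max_pool2d output size along one axis (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, out_channels) for the stem layers
stem = [
    ("Conv2d_1a_3x3", 3, 2, 0, 32),
    ("Conv2d_2a_3x3", 3, 1, 0, 32),
    ("Conv2d_2b_3x3", 3, 1, 1, 64),
    ("max_pool2d", 3, 2, 0, 64),
    ("Conv2d_3b_1x1", 1, 1, 0, 80),
    ("Conv2d_4a_3x3", 3, 1, 0, 192),
    ("max_pool2d", 3, 2, 0, 192),
]

sizes = []
size = 299
for name, k, s, p, c in stem:
    size = out_size(size, k, s, p)
    sizes.append(size)
    print(f"{name:<14} -> N x {c} x {size} x {size}")

# InceptionB (Mixed_6a) and InceptionD (Mixed_7a) each downsample with a 3x3, stride-2 step
after_6a = out_size(size, 3, 2)
after_7a = out_size(after_6a, 3, 2)
print(size, after_6a, after_7a)  # 35 17 8
```

Changing the 299 at the top is a quick way to see which input sizes still reach the classifier with valid shapes.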

Step 5*: Initialize the network parameters

```python
# ----------------------- Network parameter initialization -----------------------
# Goal: help the network converge better and reach higher accuracy.
# Written as a GoogLeNet method: place it inside the class and call
# self._initialize_weights() at the end of __init__, after all layers exist.
def _initialize_weights(self):
    # Iterate over every submodule of the network
    for m in self.modules():
        # isinstance(object, type) returns True if the object is of the given type
        if isinstance(m, nn.Conv2d):
            # Kaiming (He) normal initialization for conv weights
            nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            # If the layer has a bias, initialize it to 0
            # (BasicConv2d uses bias=False, so this branch is skipped there)
            if m.bias is not None:
                # nn.init.constant_(tensor, val) fills the whole tensor with val
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, nn.Linear):
            # nn.init.normal_(tensor, mean=0.0, std=1.0) fills the tensor with values drawn
            # from a normal distribution with the given mean and standard deviation
            nn.init.normal_(m.weight, 0, 0.01)
            nn.init.constant_(m.bias, 0)
```
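To make `kaiming_normal_(mode='fan_out', nonlinearity='relu')` less of a black box, this sketch computes the standard deviation it uses for a Conv2d weight, following the formula in PyTorch's documentation: std = gain / sqrt(fan_out), with gain = sqrt(2) for ReLU and fan_out = out_channels x kernel_height x kernel_width. The helper name is hypothetical and the layer shapes come from the GoogLeNet code above.

```python
import math


def kaiming_fan_out_std(out_channels, kh, kw, gain=math.sqrt(2.0)):
    """Std used by kaiming_normal_(mode='fan_out', nonlinearity='relu') for a Conv2d weight."""
    fan_out = out_channels * kh * kw
    return gain / math.sqrt(fan_out)


# Conv2d_1a_3x3: 3 -> 32 channels, 3x3 kernel: sqrt(2 / 288) = 1/12
print(kaiming_fan_out_std(32, 3, 3))
# Mixed_7c.branch_pool: 2048 -> 192 channels, 1x1 kernel
print(kaiming_fan_out_std(192, 1, 1))
```

The fully connected layers, by contrast, get a fixed std of 0.01 regardless of their size.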

Complete code

```python
from __future__ import division  # no effect on Python 3; kept from the original

import torch
import torch.nn as nn
import torch.nn.functional as F


# ------------------------- Step 1: Define the basic convolution module -------------------------
class BasicConv2d(nn.Module):
    """Conv2d (no bias) -> BatchNorm2d -> ReLU."""

    def __init__(self, in_channels, out_channels, **kwargs):
        super(BasicConv2d, self).__init__()
        self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
        self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        return F.relu(x, inplace=True)


# ------------------------- Step 2: Define the InceptionV3 modules -------------------------
# --- InceptionA ---
class InceptionA(nn.Module):
    def __init__(self, in_channels, pool_features, conv_block=None):
        super(InceptionA, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.branch1x1 = conv_block(in_channels, 64, kernel_size=1)

        self.branch5x5_1 = conv_block(in_channels, 48, kernel_size=1)
        self.branch5x5_2 = conv_block(48, 64, kernel_size=5, padding=2)

        self.branch3x3dbl_1 = conv_block(in_channels, 64, kernel_size=1)
        self.branch3x3dbl_2 = conv_block(64, 96, kernel_size=3, padding=1)
        self.branch3x3dbl_3 = conv_block(96, 96, kernel_size=3, padding=1)

        self.branch_pool = conv_block(in_channels, pool_features, kernel_size=1)

    def _forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch5x5, branch3x3dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)


# --- InceptionB ---
class InceptionB(nn.Module):
    def __init__(self, in_channels, conv_block=None):
        super(InceptionB, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.branch3x3 = conv_block(in_channels, 384, kernel_size=3, stride=2)

        self.branch3x3dbl_1 = conv_block(in_channels, 64, kernel_size=1)
        self.branch3x3dbl_2 = conv_block(64, 96, kernel_size=3, padding=1)
        self.branch3x3dbl_3 = conv_block(96, 96, kernel_size=3, stride=2)

    def _forward(self, x):
        branch3x3 = self.branch3x3(x)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

        branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

        outputs = [branch3x3, branch3x3dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)


# --- InceptionC ---
class InceptionC(nn.Module):
    def __init__(self, in_channels, channels_7x7, conv_block=None):
        super(InceptionC, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.branch1x1 = conv_block(in_channels, 192, kernel_size=1)

        c7 = channels_7x7
        self.branch7x7_1 = conv_block(in_channels, c7, kernel_size=1)
        self.branch7x7_2 = conv_block(c7, c7, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7_3 = conv_block(c7, 192, kernel_size=(7, 1), padding=(3, 0))

        self.branch7x7dbl_1 = conv_block(in_channels, c7, kernel_size=1)
        self.branch7x7dbl_2 = conv_block(c7, c7, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_3 = conv_block(c7, c7, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7dbl_4 = conv_block(c7, c7, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_5 = conv_block(c7, 192, kernel_size=(1, 7), padding=(0, 3))

        self.branch_pool = conv_block(in_channels, 192, kernel_size=1)

    def _forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch7x7 = self.branch7x7_1(x)
        branch7x7 = self.branch7x7_2(branch7x7)
        branch7x7 = self.branch7x7_3(branch7x7)

        branch7x7dbl = self.branch7x7dbl_1(x)
        branch7x7dbl = self.branch7x7dbl_2(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_3(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_4(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_5(branch7x7dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch7x7, branch7x7dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)


# --- InceptionD ---
class InceptionD(nn.Module):
    def __init__(self, in_channels, conv_block=None):
        super(InceptionD, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.branch3x3_1 = conv_block(in_channels, 192, kernel_size=1)
        self.branch3x3_2 = conv_block(192, 320, kernel_size=3, stride=2)

        self.branch7x7x3_1 = conv_block(in_channels, 192, kernel_size=1)
        self.branch7x7x3_2 = conv_block(192, 192, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7x3_3 = conv_block(192, 192, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7x3_4 = conv_block(192, 192, kernel_size=3, stride=2)

    def _forward(self, x):
        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)

        branch7x7x3 = self.branch7x7x3_1(x)
        branch7x7x3 = self.branch7x7x3_2(branch7x7x3)
        branch7x7x3 = self.branch7x7x3_3(branch7x7x3)
        branch7x7x3 = self.branch7x7x3_4(branch7x7x3)

        branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

        outputs = [branch3x3, branch7x7x3, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)


# --- InceptionE ---
class InceptionE(nn.Module):
    def __init__(self, in_channels, conv_block=None):
        super(InceptionE, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.branch1x1 = conv_block(in_channels, 320, kernel_size=1)

        self.branch3x3_1 = conv_block(in_channels, 384, kernel_size=1)
        self.branch3x3_2a = conv_block(384, 384, kernel_size=(1, 3), padding=(0, 1))
        self.branch3x3_2b = conv_block(384, 384, kernel_size=(3, 1), padding=(1, 0))

        self.branch3x3dbl_1 = conv_block(in_channels, 448, kernel_size=1)
        self.branch3x3dbl_2 = conv_block(448, 384, kernel_size=3, padding=1)
        self.branch3x3dbl_3a = conv_block(384, 384, kernel_size=(1, 3), padding=(0, 1))
        self.branch3x3dbl_3b = conv_block(384, 384, kernel_size=(3, 1), padding=(1, 0))

        self.branch_pool = conv_block(in_channels, 192, kernel_size=1)

    def _forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = [
            self.branch3x3_2a(branch3x3),
            self.branch3x3_2b(branch3x3),
        ]
        branch3x3 = torch.cat(branch3x3, 1)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = [
            self.branch3x3dbl_3a(branch3x3dbl),
            self.branch3x3dbl_3b(branch3x3dbl),
        ]
        branch3x3dbl = torch.cat(branch3x3dbl, 1)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch3x3, branch3x3dbl, branch_pool]
        return outputs

    def forward(self, x):
        outputs = self._forward(x)
        return torch.cat(outputs, 1)


# ------------------- Step 3: Define the auxiliary classifier InceptionAux -----------------------
class InceptionAux(nn.Module):
    def __init__(self, in_channels, num_classes, conv_block=None):
        super(InceptionAux, self).__init__()
        if conv_block is None:
            conv_block = BasicConv2d
        self.conv0 = conv_block(in_channels, 128, kernel_size=1)
        self.conv1 = conv_block(128, 768, kernel_size=5)
        self.conv1.stddev = 0.01  # torchvision init hint; not read by _initialize_weights below
        self.fc = nn.Linear(768, num_classes)
        self.fc.stddev = 0.001

    def forward(self, x):
        # N x 768 x 17 x 17
        x = F.avg_pool2d(x, kernel_size=5, stride=3)
        # N x 768 x 5 x 5
        x = self.conv0(x)
        # N x 128 x 5 x 5
        x = self.conv1(x)
        # N x 768 x 1 x 1
        x = F.adaptive_avg_pool2d(x, (1, 1))
        # N x 768 x 1 x 1
        x = torch.flatten(x, 1)
        # N x 768
        x = self.fc(x)
        # N x num_classes
        return x


# ----------------------- Step 4: Build the GoogLeNet network --------------------------
class GoogLeNet(nn.Module):
    def __init__(self, num_classes=1000, aux_logits=True, transform_input=False,
                 inception_blocks=None):
        super(GoogLeNet, self).__init__()
        if inception_blocks is None:
            inception_blocks = [
                BasicConv2d, InceptionA, InceptionB, InceptionC,
                InceptionD, InceptionE, InceptionAux
            ]
        assert len(inception_blocks) == 7
        conv_block = inception_blocks[0]
        inception_a = inception_blocks[1]
        inception_b = inception_blocks[2]
        inception_c = inception_blocks[3]
        inception_d = inception_blocks[4]
        inception_e = inception_blocks[5]
        inception_aux = inception_blocks[6]

        self.aux_logits = aux_logits
        self.transform_input = transform_input  # not used in this simplified version

        self.Conv2d_1a_3x3 = conv_block(3, 32, kernel_size=3, stride=2)
        self.Conv2d_2a_3x3 = conv_block(32, 32, kernel_size=3)
        self.Conv2d_2b_3x3 = conv_block(32, 64, kernel_size=3, padding=1)
        self.Conv2d_3b_1x1 = conv_block(64, 80, kernel_size=1)
        self.Conv2d_4a_3x3 = conv_block(80, 192, kernel_size=3)
        self.Mixed_5b = inception_a(192, pool_features=32)
        self.Mixed_5c = inception_a(256, pool_features=64)
        self.Mixed_5d = inception_a(288, pool_features=64)
        self.Mixed_6a = inception_b(288)
        self.Mixed_6b = inception_c(768, channels_7x7=128)
        self.Mixed_6c = inception_c(768, channels_7x7=160)
        self.Mixed_6d = inception_c(768, channels_7x7=160)
        self.Mixed_6e = inception_c(768, channels_7x7=192)
        if aux_logits:
            self.AuxLogits = inception_aux(768, num_classes)
        self.Mixed_7a = inception_d(768)
        self.Mixed_7b = inception_e(1280)
        self.Mixed_7c = inception_e(2048)
        self.fc = nn.Linear(2048, num_classes)

        # Step 5: initialize the parameters once all layers exist
        self._initialize_weights()

    def _forward(self, x):
        # Stem: N x 3 x 299 x 299 -> 5 convolutions and 2 max-pooling layers -> N x 192 x 35 x 35
        x = self.Conv2d_1a_3x3(x)                      # N x 32 x 149 x 149
        x = self.Conv2d_2a_3x3(x)                      # N x 32 x 147 x 147
        x = self.Conv2d_2b_3x3(x)                      # N x 64 x 147 x 147
        x = F.max_pool2d(x, kernel_size=3, stride=2)   # N x 64 x 73 x 73
        x = self.Conv2d_3b_1x1(x)                      # N x 80 x 73 x 73
        x = self.Conv2d_4a_3x3(x)                      # N x 192 x 71 x 71
        x = F.max_pool2d(x, kernel_size=3, stride=2)   # N x 192 x 35 x 35

        # 3 x InceptionA, 1 x InceptionB, 4 x InceptionC, 1 x InceptionD, 2 x InceptionE;
        # AuxLogits takes the output of the last InceptionC as its input.
        x = self.Mixed_5b(x)  # N x 256 x 35 x 35
        x = self.Mixed_5c(x)  # N x 288 x 35 x 35
        x = self.Mixed_5d(x)  # N x 288 x 35 x 35
        x = self.Mixed_6a(x)  # N x 768 x 17 x 17
        x = self.Mixed_6b(x)  # N x 768 x 17 x 17
        x = self.Mixed_6c(x)  # N x 768 x 17 x 17
        x = self.Mixed_6d(x)  # N x 768 x 17 x 17
        x = self.Mixed_6e(x)  # N x 768 x 17 x 17
        aux = None  # only computed in training mode with aux_logits=True
        if self.training and self.aux_logits:
            aux = self.AuxLogits(x)  # N x num_classes
        x = self.Mixed_7a(x)  # N x 1280 x 8 x 8
        x = self.Mixed_7b(x)  # N x 2048 x 8 x 8
        x = self.Mixed_7c(x)  # N x 2048 x 8 x 8

        # Classifier: global average pooling + dropout + flatten + fully connected layer
        x = F.adaptive_avg_pool2d(x, (1, 1))      # N x 2048 x 1 x 1
        x = F.dropout(x, training=self.training)  # N x 2048 x 1 x 1
        x = torch.flatten(x, 1)                   # N x 2048
        x = self.fc(x)                            # N x num_classes
        return x, aux

    def forward(self, x):
        # Returns (logits, aux_logits); aux_logits is None in eval mode or when aux_logits=False
        x, aux = self._forward(x)
        return x, aux

    # ----------------------- Step 5: Initialize the network parameters -----------------------
    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # Kaiming (He) normal initialization
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                # Normal distribution with mean 0 and std 0.01
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)


# --------------------------------------- Display the network structure -------------------------------
if __name__ == '__main__':
    from torchsummary import summary  # third-party package: pip install torchsummary

    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    net = GoogLeNet(1000).to(device)
    summary(net, (3, 299, 299), device=device)
```

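Paper reproduction code

The implementation above follows the Inception v3 structure in torchvision, which differs somewhat from the one described in the paper.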
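One concrete difference shows up in the stem. This sketch traces the spatial size and channel count through the torchvision-style stem of Step 4 and through the paper-style stem used in the `nn.Sequential` of the reproduction code in this section. The layer tables are transcribed from those two code listings; the helper names are hypothetical.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Conv2d / MaxPool2d output size along one axis (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(layers, size=299):
    """Return [(out_channels, size), ...] for layers given as (kernel, stride, padding, out_channels)."""
    shapes = []
    for k, s, p, c in layers:
        size = out_size(size, k, s, p)
        shapes.append((c, size))
    return shapes


# torchvision-style stem (Step 4): the 1x1 to 80 channels and a max pool at the end
torchvision_stem = [(3, 2, 0, 32), (3, 1, 0, 32), (3, 1, 1, 64), (3, 2, 0, 64),
                    (1, 1, 0, 80), (3, 1, 0, 192), (3, 2, 0, 192)]
# Paper-style stem (reproduction code): 3x3 convs only, the last one padded to 288 channels
paper_stem = [(3, 2, 0, 32), (3, 1, 0, 32), (3, 1, 1, 64), (3, 2, 0, 64),
              (3, 1, 0, 80), (3, 2, 0, 192), (3, 1, 1, 288)]

print(trace(torchvision_stem)[-1])  # (192, 35)
print(trace(paper_stem)[-1])        # (288, 35)
```

Both stems reach 35x35, but the first Inception block receives 192 channels in the torchvision version and 288 in the paper-style version.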

GITHUB论文复现代码链接

论文中结构

代码

import torch

import torch.nn as nn

from functools import partial

# functools.partial():减少某个函数的参数个数。 partial() 函数允许你给一个或多个参数设置固定的值,减少接下来被调用时的参数个数

'''-----------------------第一步:定义卷积模块-----------------------'''

#基础卷积模块

class Conv2d(nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, output=False):

super(Conv2d, self).__init__()

'''卷积层'''

self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding)

'''输出层'''

self.output = output

if self.output == False:

'''bn层'''

self.bn = nn.BatchNorm2d(out_channels)

'''relu层'''

self.relu = nn.ReLU(inplace=True)

def forward(self, x):

x = self.conv(x)

if self.output:

return x

else:

x = self.bn(x)

x = self.relu(x)

return x

class Separable_Conv2d(nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):

super(Separable_Conv2d, self).__init__()

self.conv_h = nn.Conv2d(in_channels, in_channels, (kernel_size, 1), stride=(stride, 1), padding=(padding, 0))

self.conv_w = nn.Conv2d(in_channels, out_channels, (1, kernel_size), stride=(1, stride), padding=(0, padding))

self.bn = nn.BatchNorm2d(out_channels)

self.relu = nn.ReLU(inplace=True)

def forward(self, x):

x = self.conv_h(x)

x = self.conv_w(x)

x = self.bn(x)

x = self.relu(x)

return x

# Parallel asymmetric convolutions: (k x 1) and (1 x k) are applied to the SAME input and
# concatenated along the channel dimension, so the output has out_channels * 2 channels
class Concat_Separable_Conv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(Concat_Separable_Conv2d, self).__init__()
        self.conv_h = nn.Conv2d(in_channels, out_channels, (kernel_size, 1), stride=(stride, 1), padding=(padding, 0))
        self.conv_w = nn.Conv2d(in_channels, out_channels, (1, kernel_size), stride=(1, stride), padding=(0, padding))
        # BN over the concatenated channels
        self.bn = nn.BatchNorm2d(out_channels * 2)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x_h = self.conv_h(x)
        x_w = self.conv_w(x)
        x = torch.cat([x_h, x_w], dim=1)
        x = self.bn(x)
        x = self.relu(x)
        return x
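`Concat_Separable_Conv2d` applies its 3x1 and 1x3 convolutions to the same input and concatenates the results, which is why its BatchNorm is built with `out_channels * 2` channels. The sketch below reproduces just that step with plain `nn.Conv2d` layers on an assumed 8x8x384 input (the size seen inside block 5a); the batch size of 2 is arbitrary.

```python
import torch
import torch.nn as nn

x = torch.randn(2, 384, 8, 8)  # batch of 2, 384 channels, 8x8 grid

# Two parallel asymmetric convolutions applied to the same input
conv_h = nn.Conv2d(384, 384, kernel_size=(3, 1), padding=(1, 0))  # 3x1
conv_w = nn.Conv2d(384, 384, kernel_size=(1, 3), padding=(0, 1))  # 1x3

with torch.no_grad():
    y_h, y_w = conv_h(x), conv_w(x)
    y = torch.cat([y_h, y_w], dim=1)  # concatenate along channels

print(y_h.shape, y_w.shape)  # both torch.Size([2, 384, 8, 8])
print(y.shape)               # torch.Size([2, 768, 8, 8])
```

The padding of 1 along the kernel's long axis keeps the 8x8 grid, so the two outputs can be concatenated directly.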

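# Flatten() flattens 2D feature maps into a 1D feature vector before a fully connected layer;
# defining it as a class lets it be placed inside nn.Sequential (torch.nn.Flatten does the same)
class Flatten(nn.Module):
    # No constructor arguments are needed
    def __init__(self):
        # Call the parent constructor
        super(Flatten, self).__init__()

    # Implement forward
    def forward(self, x):
        # Keep the batch dimension and flatten all the remaining ones
        return torch.flatten(x, 1)


# Squeeze(): drop the 1x1 spatial dimensions, (N, C, 1, 1) -> (N, C)
class Squeeze(nn.Module):
    def __init__(self):
        super(Squeeze, self).__init__()

    def forward(self, x):
        # Squeeze only H and W; a bare torch.squeeze(x) would also drop the batch dimension when N == 1
        return x.squeeze(3).squeeze(2)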
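`torch.squeeze` called without a `dim` argument removes every size-1 axis, including the batch axis when the batch size is 1. The sketch below compares it with squeezing only the spatial axes and with `torch.flatten(x, 1)`, on a tensor shaped like the output of the final 1x1 convolution (batch size 1 and 1000 classes assumed).

```python
import torch

logits = torch.randn(1, 1000, 1, 1)  # (N, C, H, W) with N = 1

squeezed_all = torch.squeeze(logits)        # removes EVERY size-1 dim, batch included
squeezed_hw = logits.squeeze(3).squeeze(2)  # removes only W and H
flattened = torch.flatten(logits, 1)        # keeps dim 0, flattens the rest

print(squeezed_all.shape)  # torch.Size([1000])
print(squeezed_hw.shape)   # torch.Size([1, 1000])
print(flattened.shape)     # torch.Size([1, 1000])
```

Losing the batch axis is easy to miss because it only happens at batch size 1, which is typical at inference time; a loss such as `nn.CrossEntropyLoss` expects the `(N, C)` shape.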

'''----------------------- Build the GoogLeNet network --------------------------'''

class GoogLeNet(nn.Module):
    def __init__(self, num_classes, mode='train'):
        super(GoogLeNet, self).__init__()
        self.num_classes = num_classes
        # mode is fixed at construction time and is independent of net.train() / net.eval()
        self.mode = mode
        self.layers = nn.Sequential(
            # Stem: 299x299x3 -> 35x35x288
            Conv2d(3, 32, 3, stride=2),
            Conv2d(32, 32, 3, stride=1),
            Conv2d(32, 64, 3, stride=1, padding=1),
            nn.MaxPool2d(kernel_size=3, stride=2),
            Conv2d(64, 80, kernel_size=3),
            Conv2d(80, 192, kernel_size=3, stride=2),
            Conv2d(192, 288, kernel_size=3, stride=1, padding=1),
            # Input: 35x35x288. The 5x5 convolution is replaced by two 3x3 convolutions
            Inceptionv3(288, 64, 48, 64, 64, 96, 64, mode='1'),  # 3a
            Inceptionv3(288, 64, 48, 64, 64, 96, 64, mode='1'),  # 3b
            Inceptionv3(288, 0, 128, 384, 64, 96, 0, stride=2, pool_type='MAX', mode='1'),  # 3c
            # Input: 17x17x768
            Inceptionv3(768, 192, 128, 192, 128, 192, 192, mode='2'),  # 4a
            Inceptionv3(768, 192, 160, 192, 160, 192, 192, mode='2'),  # 4b
            Inceptionv3(768, 192, 160, 192, 160, 192, 192, mode='2'),  # 4c
            Inceptionv3(768, 192, 192, 192, 192, 192, 192, mode='2'),  # 4d
            Inceptionv3(768, 0, 192, 320, 192, 192, 0, stride=2, pool_type='MAX', mode='2'),  # 4e
            # Input: 8x8x1280
            Inceptionv3(1280, 320, 384, 384, 448, 384, 192, mode='3'),  # 5a
            Inceptionv3(2048, 320, 384, 384, 448, 384, 192, pool_type='MAX', mode='3'),  # 5b
            # 8x8x2048 -> 1x1x2048 -> 1x1xnum_classes -> (N, num_classes)
            nn.AvgPool2d(8, 1),
            Conv2d(2048, num_classes, kernel_size=1, output=True),
            Squeeze(),
        )
        if mode == 'train':
            # Auxiliary classifier on the 17x17x768 feature map
            self.aux = InceptionAux(768, num_classes)
        # Initialize the weights once all submodules exist
        self._initialize_weights()

    def forward(self, x):
        for idx, layer in enumerate(self.layers):
            # layers[14] is block 4e, so x here is the 17x17x768 output of 4d
            if idx == 14 and self.mode == 'train':
                aux = self.aux(x)
            x = layer(x)
        if self.mode == 'train':
            return x, aux
        return x

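    '''----------------------- Network parameter initialization --------------------------'''

    # Goal: help the network converge better and reach higher accuracy
    def _initialize_weights(self):  # gather the initialization rules in one method, to run once the model is built
        # Iterate over every submodule of the network
        for m in self.modules():
            # isinstance(object, type) returns True if the object is of the given type
            # Convolution layers (nn.Conv2d)
            if isinstance(m, nn.Conv2d):
                # Kaiming (He) normal initialization of the weights
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                # If the layer has a bias, initialize it to 0
                if m.bias is not None:
                    # torch.nn.init.constant_(tensor, val) fills the whole tensor with the constant val
                    nn.init.constant_(m.bias, 0)
            # Fully connected layers (this model has none; kept for completeness)
            elif isinstance(m, nn.Linear):
                # init.normal_(tensor, mean=0.0, std=1.0): fills the input tensor with values drawn from a normal distribution
                # Arguments: tensor: an n-dimensional Tensor; mean: mean of the normal distribution; std: its standard deviation
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)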
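The spatial sizes noted in the comments of the network definition can be verified with the output-size formula floor((size + 2p − k) / s) + 1. The sketch below traces a 299x299 input through every layer that changes the spatial size; `out_size` is a hypothetical helper, dilation is assumed to be 1, and the stride-1 Inception blocks (3a, 3b, 4a to 4d, 5a, 5b) are omitted because their padding keeps the size unchanged.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size of a conv/pool layer without dilation: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# (description, kernel, stride, padding), read off the GoogLeNet definition
layers = [
    ("Conv2d(3, 32, 3, stride=2)", 3, 2, 0),
    ("Conv2d(32, 32, 3)", 3, 1, 0),
    ("Conv2d(32, 64, 3, padding=1)", 3, 1, 1),
    ("MaxPool2d(3, stride=2)", 3, 2, 0),
    ("Conv2d(64, 80, 3)", 3, 1, 0),
    ("Conv2d(80, 192, 3, stride=2)", 3, 2, 0),
    ("Conv2d(192, 288, 3, padding=1)", 3, 1, 1),
    ("3c: 3x3 conv / max pool, stride=2", 3, 2, 0),
    ("4e: 7x1 and 1x7 convs, stride=2, padding=2", 7, 2, 2),
    ("AvgPool2d(8, 1)", 8, 1, 0),
]

size = 299
trace = [size]
for name, k, s, p in layers:
    size = out_size(size, k, s, p)
    trace.append(size)
    print(f"{name:45s} -> {size}x{size}")

print(trace)  # [299, 149, 147, 147, 73, 71, 35, 35, 17, 8, 1]
```

In block 4e the 7x1 convolution reduces only the height and the 1x7 convolution only the width, so each axis goes through the k=7, s=2, p=2 reduction exactly once.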

'''---------------------Inceptionv3-------------------------------------'''

'''
Inceptionv3 is built from three consecutive groups of Inception modules (mode '1', '2' and '3')
'''

class Inceptionv3(nn.Module):
    def __init__(self, input_channel, conv1_channel, conv3_reduce_channel,
                 conv3_channel, conv3_double_reduce_channel, conv3_double_channel, pool_reduce_channel, stride=1,
                 pool_type='AVG', mode='1'):
        super(Inceptionv3, self).__init__()
        self.stride = stride
        # stride=2 marks a grid-reduction block: no padding for 3x3 kernels, padding 2 for 7-tap kernels
        if stride == 2:
            padding_conv3 = 0
            padding_conv7 = 2
        else:
            padding_conv3 = 1
            padding_conv7 = 3
        # 1x1 branch (absent in the grid-reduction blocks, where conv1_channel == 0)
        if conv1_channel != 0:
            self.conv1 = Conv2d(input_channel, conv1_channel, kernel_size=1)
        else:
            self.conv1 = None
        # 1x1 reduction in front of the single-conv branch
        self.conv3_reduce = Conv2d(input_channel, conv3_reduce_channel, kernel_size=1)
        # Module type 1: input feature map 35x35x288; follows Figure 5 of the paper, replacing the 5x5 conv with two 3x3 convs
        if mode == '1':
            self.conv3 = Conv2d(conv3_reduce_channel, conv3_channel, kernel_size=3, stride=stride,
                                padding=padding_conv3)
            self.conv3_double1 = Conv2d(conv3_double_reduce_channel, conv3_double_channel, kernel_size=3, padding=1)
            self.conv3_double2 = Conv2d(conv3_double_channel, conv3_double_channel, kernel_size=3, stride=stride,
                                        padding=padding_conv3)
        # Module type 2: input 17x17x768; uses the asymmetric n x 1 + 1 x n convolutions of Figure 6 (n = 7)
        elif mode == '2':
            self.conv3 = Separable_Conv2d(conv3_reduce_channel, conv3_channel, kernel_size=7, stride=stride,
                                          padding=padding_conv7)
            self.conv3_double1 = Separable_Conv2d(conv3_double_reduce_channel, conv3_double_channel, kernel_size=7,
                                                  padding=3)
            self.conv3_double2 = Separable_Conv2d(conv3_double_channel, conv3_double_channel, kernel_size=7,
                                                  stride=stride, padding=padding_conv7)
        # Module type 3: input 8x8x1280; uses the parallel (expanded) branches shown in Figure 7
        elif mode == '3':
            self.conv3 = Concat_Separable_Conv2d(conv3_reduce_channel, conv3_channel, kernel_size=3, stride=stride,
                                                 padding=1)
            self.conv3_double1 = Conv2d(conv3_double_reduce_channel, conv3_double_channel, kernel_size=3, padding=1)
            self.conv3_double2 = Concat_Separable_Conv2d(conv3_double_channel, conv3_double_channel, kernel_size=3,
                                                         stride=stride, padding=1)
        # 1x1 reduction in front of the double-conv branch
        self.conv3_double_reduce = Conv2d(input_channel, conv3_double_reduce_channel, kernel_size=1)
        # Pooling branch
        if pool_type == 'MAX':
            self.pool = nn.MaxPool2d(kernel_size=3, stride=stride, padding=padding_conv3)
        elif pool_type == 'AVG':
            self.pool = nn.AvgPool2d(kernel_size=3, stride=stride, padding=padding_conv3)
        # Optional 1x1 projection after pooling (skipped when pool_reduce_channel == 0)
        if pool_reduce_channel != 0:
            self.pool_reduce = Conv2d(input_channel, pool_reduce_channel, kernel_size=1)
        else:
            self.pool_reduce = None

    def forward(self, x):
        output_conv3 = self.conv3(self.conv3_reduce(x))
        output_conv3_double = self.conv3_double2(self.conv3_double1(self.conv3_double_reduce(x)))
        if self.pool_reduce is not None:
            output_pool = self.pool_reduce(self.pool(x))
        else:
            output_pool = self.pool(x)
        # Concatenate all branches along the channel dimension
        if self.conv1 is not None:
            output_conv1 = self.conv1(x)
            outputs = torch.cat([output_conv1, output_conv3, output_conv3_double, output_pool], dim=1)
        else:
            outputs = torch.cat([output_conv3, output_conv3_double, output_pool], dim=1)
        return outputs
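The channel numbers passed to `Inceptionv3` must add up to the `input_channel` of the next block. The sketch below does that bookkeeping by hand, following the branch logic of `forward`: a zero `conv1_channel` adds nothing, a zero `pool_reduce_channel` lets the pooled input pass through unchanged, and in mode '3' both 3x3 branches end in `Concat_Separable_Conv2d`, which doubles their width. `inception_out_channels` is a hypothetical helper, not part of the original code.

```python
def inception_out_channels(input_channel, conv1_channel, conv3_reduce_channel,
                           conv3_channel, conv3_double_reduce_channel,
                           conv3_double_channel, pool_reduce_channel, mode='1'):
    """Output channels of one Inceptionv3 block (hypothetical helper mirroring forward())."""
    # Mode '3': Concat_Separable_Conv2d outputs out_channels * 2
    widen = 2 if mode == '3' else 1
    # pool_reduce_channel == 0: pooled features keep all input_channel channels
    pool = pool_reduce_channel if pool_reduce_channel != 0 else input_channel
    # conv1_channel == 0 means the 1x1 branch is absent, contributing 0 channels
    return conv1_channel + widen * conv3_channel + widen * conv3_double_channel + pool


# Constructor arguments copied from GoogLeNet.layers (stride and pool_type do not affect channels)
blocks = {
    "3a": ((288, 64, 48, 64, 64, 96, 64), '1'),
    "3c": ((288, 0, 128, 384, 64, 96, 0), '1'),
    "4a": ((768, 192, 128, 192, 128, 192, 192), '2'),
    "4e": ((768, 0, 192, 320, 192, 192, 0), '2'),
    "5a": ((1280, 320, 384, 384, 448, 384, 192), '3'),
    "5b": ((2048, 320, 384, 384, 448, 384, 192), '3'),
}

for name, (args, mode) in blocks.items():
    print(name, inception_out_channels(*args, mode=mode))
# 3a 288, 3c 768, 4a 768, 4e 1280, 5a 2048, 5b 2048
```

The results 768, 1280 and 2048 match the `input_channel` of the following stages, which makes this a quick consistency check when editing the channel numbers.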

'''------------ Auxiliary classifier ---------------------------'''

class InceptionAux(nn.Module):
    def __init__(self, input_channel, num_classes):
        super(InceptionAux, self).__init__()
        self.layers = nn.Sequential(
            nn.AvgPool2d(5, 3),                                      # 17x17 -> 5x5
            Conv2d(input_channel, 128, 1),                           # 1x1 reduction to 128 channels
            Conv2d(128, 1024, kernel_size=5),                        # 5x5 -> 1x1
            Conv2d(1024, num_classes, kernel_size=1, output=True),   # class logits, no BN/ReLU
            Squeeze()
        )

    def forward(self, x):
        x = self.layers(x)
        return x
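The auxiliary head relies on its 17x17 input being reduced to exactly 1x1 before `Squeeze`. A quick hand check with the same output-size formula, using a hypothetical `out_size` helper and assuming no dilation:

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size of a conv/pool layer without dilation."""
    return (size + 2 * padding - kernel) // stride + 1


size = 17  # spatial size of the 4d output that feeds the auxiliary classifier
steps = [
    ("AvgPool2d(5, 3)", 5, 3),
    ("Conv2d(768, 128, 1)", 1, 1),
    ("Conv2d(128, 1024, 5)", 5, 1),
    ("Conv2d(1024, num_classes, 1)", 1, 1),
]

sizes = [size]
for name, k, s in steps:
    size = out_size(size, k, s)
    sizes.append(size)
    print(f"{name:30s} -> {size}x{size}")

print(sizes)  # [17, 5, 5, 1, 1]
```

With an input resolution other than 299x299, the feature map reaching this head would no longer be 17x17, and the 5x5 convolution would not end at 1x1.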

'''------------------- Display the network structure -------------------------------'''

if __name__ == '__main__':
    # .cuda() moves the model to the GPU, so a CUDA device is required here
    net = GoogLeNet(1000).cuda()
    from torchsummary import summary
    summary(net, (3, 299, 299))