C# CvDnn Deployment of CoupledTPS for Rotated Image Correction

Contents

Description

Results

Model Information

Project

Code

Download

Description

TPAMI2024 - Semi-Supervised Coupled Thin-Plate Spline Model for Rotation Correction and Beyond

GitHub: https://github.com/nie-lang/CoupledTPS

Implementation reference: https://github.com/hpc203/CoupledTPS-opencv-dnn

Results

Model Information

feature_extractor.onnx

Inputs
name: input
tensor: Float[1, 3, 384, 512]

Outputs
name: feature
tensor: Float[1, 256, 24, 32]
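The input above is a float NCHW tensor of shape [1, 3, 384, 512]. In CoupledTPS_RotationNet.detect it is produced by Cv2.Resize, Mat.ConvertTo with scale 1/127.5 and offset -1, and CvDnn.BlobFromImage. The sketch below reproduces only the normalization and the HWC-to-CHW reordering in plain C#, so the memory layout is easy to see; resizing and any channel reordering are deliberately left out, and the class and method names are hypothetical.

```csharp
using System;

// Hypothetical helper: interleaved HWC byte image -> planar CHW float tensor in [-1, 1].
// Mirrors the arithmetic of ConvertTo(..., 1/127.5, -1) followed by an HWC -> CHW reorder.
public static class PreprocessSketch
{
    public static float[] ToNormalizedChw(byte[] hwc, int height, int width, int channels)
    {
        if (hwc.Length != height * width * channels)
            throw new ArgumentException("Buffer size does not match height * width * channels.");

        var chw = new float[channels * height * width];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                for (int c = 0; c < channels; c++)
                {
                    byte v = hwc[(y * width + x) * channels + c];
                    // v / 127.5 - 1 maps 0 -> -1 and 255 -> +1
                    chw[c * height * width + y * width + x] = v / 127.5f - 1.0f;
                }
            }
        }
        return chw;
    }
}
```

Each channel ends up as one contiguous height × width plane, which is the layout the [1, 3, 384, 512] input expects.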

regressnet.onnx

Inputs
name: feature
tensor: Float[1, 256, 24, 32]

Outputs
name: mesh_motion
tensor: Float[1, 7, 9, 2]
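The mesh_motion output has shape [1, 7, 9, 2], i.e. (grid_h + 1) × (grid_w + 1) control points with an (x, y) offset each, matching grid_h = 6 and grid_w = 8 in CoupledTPS_RotationNet. The tps2flow helper that turns these offsets into a flow field is not shown in this post, so the following is only a sketch of a standard 2D thin-plate spline under stated assumptions: the control points start on a regular mesh spanning the image in pixel coordinates (the project itself appears to use normalized coordinates, judging by the name get_norm_rigid_mesh_inv_grid), the kernel is U(r) = r² log r², and the linear system is solved directly with Gauss-Jordan elimination instead of a precomputed inverse. All names are hypothetical.

```csharp
using System;

// Hypothetical sketch of a standard 2D thin-plate spline (not the project's tps2flow class).
public static class TpsSketch
{
    // Regular control-point mesh: (gridH + 1) x (gridW + 1) points spanning [0, width] x [0, height].
    public static double[,] RigidMesh(int height, int width, int gridH, int gridW)
    {
        var pts = new double[(gridH + 1) * (gridW + 1), 2];
        for (int i = 0; i <= gridH; i++)
            for (int j = 0; j <= gridW; j++)
            {
                int k = i * (gridW + 1) + j;
                pts[k, 0] = (double)width * j / gridW;   // x
                pts[k, 1] = (double)height * i / gridH;  // y
            }
        return pts;
    }

    // Radial basis U(r) = r^2 * log(r^2), with U(0) = 0.
    static double U(double dx, double dy)
    {
        double r2 = dx * dx + dy * dy;
        return r2 == 0 ? 0 : r2 * Math.Log(r2);
    }

    // Solves [[K, P], [P^T, 0]] * coeffs = [dst; 0] for both coordinates at once.
    // Returns an (n + 3) x 2 matrix: n kernel weights, then the affine terms a0, ax, ay.
    public static double[,] Fit(double[,] src, double[,] dst)
    {
        int n = src.GetLength(0), m = n + 3;
        var aug = new double[m, m + 2]; // system matrix augmented with two right-hand sides
        for (int i = 0; i < n; i++)
        {
            for (int j = 0; j < n; j++)
                aug[i, j] = U(src[i, 0] - src[j, 0], src[i, 1] - src[j, 1]);
            aug[i, n] = 1; aug[i, n + 1] = src[i, 0]; aug[i, n + 2] = src[i, 1];
            aug[n, i] = 1; aug[n + 1, i] = src[i, 0]; aug[n + 2, i] = src[i, 1];
            aug[i, m] = dst[i, 0]; aug[i, m + 1] = dst[i, 1];
        }
        // Gauss-Jordan elimination with partial pivoting
        for (int col = 0; col < m; col++)
        {
            int piv = col;
            for (int r = col + 1; r < m; r++)
                if (Math.Abs(aug[r, col]) > Math.Abs(aug[piv, col])) piv = r;
            for (int c = 0; c < m + 2; c++)
                (aug[col, c], aug[piv, c]) = (aug[piv, c], aug[col, c]);
            for (int r = 0; r < m; r++)
            {
                if (r == col) continue;
                double f = aug[r, col] / aug[col, col];
                for (int c = col; c < m + 2; c++) aug[r, c] -= f * aug[col, c];
            }
        }
        var coeffs = new double[m, 2];
        for (int i = 0; i < m; i++)
        {
            coeffs[i, 0] = aug[i, m] / aug[i, i];
            coeffs[i, 1] = aug[i, m + 1] / aug[i, i];
        }
        return coeffs;
    }

    // Maps point (x, y) through the fitted spline.
    public static (double X, double Y) Eval(double[,] src, double[,] coeffs, double x, double y)
    {
        int n = src.GetLength(0);
        double ox = coeffs[n, 0] + coeffs[n + 1, 0] * x + coeffs[n + 2, 0] * y;
        double oy = coeffs[n, 1] + coeffs[n + 1, 1] * x + coeffs[n + 2, 1] * y;
        for (int i = 0; i < n; i++)
        {
            double u = U(x - src[i, 0], y - src[i, 1]);
            ox += coeffs[i, 0] * u;
            oy += coeffs[i, 1] * u;
        }
        return (ox, oy);
    }
}
```

The system matrix depends only on the fixed source mesh, not on the predicted offsets, so its inverse can be computed once. This is consistent with the C# code building W_inv in the constructor and only multiplying it by tp inside the loop.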

Project

Code

Form1.cs

```csharp
using OpenCvSharp;
using System;
using System.Drawing;
using System.Drawing.Imaging;
using System.Windows.Forms;

namespace Onnx_Demo
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        string fileFilter = "Images|*.bmp;*.jpg;*.jpeg;*.tiff;*.png";
        string image_path = "";
        DateTime dt1 = DateTime.Now;
        DateTime dt2 = DateTime.Now;
        Mat image;
        CoupledTPS_RotationNet rotationNet;
        // Number of coupled TPS refinement iterations
        int iter_num = 3;

        private void button1_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.InitialDirectory = Application.StartupPath + "\\test_img\\";
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;

            pictureBox1.Image = null;
            image_path = ofd.FileName;
            pictureBox1.Image = new Bitmap(image_path);
            textBox1.Text = "";
            image = new Mat(image_path);
            pictureBox2.Image = null;
        }

        private void button2_Click(object sender, EventArgs e)
        {
            if (image_path == "")
            {
                return;
            }

            button2.Enabled = false;
            pictureBox2.Image = null;
            textBox1.Text = "";
            Application.DoEvents();

            try
            {
                // Read the image
                image = new Mat(image_path);

                dt1 = DateTime.Now;
                Mat result_image = rotationNet.detect(image, iter_num);
                dt2 = DateTime.Now;

                Cv2.CvtColor(result_image, result_image, ColorConversionCodes.BGR2RGB);
                pictureBox2.Image = new Bitmap(result_image.ToMemoryStream());
                textBox1.Text = "Inference time: " + (dt2 - dt1).TotalMilliseconds + " ms";
            }
            finally
            {
                // Re-enable the button even if inference throws
                button2.Enabled = true;
            }
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            rotationNet = new CoupledTPS_RotationNet("model/feature_extractor.onnx", "model/regressnet.onnx");
            image_path = "test_img/00150_-8.4.jpg";
            pictureBox1.Image = new Bitmap(image_path);
            image = new Mat(image_path);
        }

        private void pictureBox1_DoubleClick(object sender, EventArgs e)
        {
            Common.ShowNormalImg(pictureBox1.Image);
        }

        private void pictureBox2_DoubleClick(object sender, EventArgs e)
        {
            Common.ShowNormalImg(pictureBox2.Image);
        }

        SaveFileDialog sdf = new SaveFileDialog();

        private void button3_Click(object sender, EventArgs e)
        {
            if (pictureBox2.Image == null)
            {
                return;
            }

            sdf.Title = "Save";
            // Only formats GDI+ can encode; for EMF, WMF, Exif and Icon, Image.Save
            // silently falls back to PNG, so those options are not offered.
            sdf.Filter = "Images (*.jpg)|*.jpg|Images (*.png)|*.png|Images (*.bmp)|*.bmp|Images (*.gif)|*.gif|Images (*.tiff)|*.tiff";
            ImageFormat[] formats = { ImageFormat.Jpeg, ImageFormat.Png, ImageFormat.Bmp, ImageFormat.Gif, ImageFormat.Tiff };

            if (sdf.ShowDialog() == DialogResult.OK)
            {
                using (Bitmap output = new Bitmap(pictureBox2.Image))
                {
                    // FilterIndex is 1-based
                    output.Save(sdf.FileName, formats[sdf.FilterIndex - 1]);
                }
                MessageBox.Show("Saved to: " + sdf.FileName);
            }
        }
    }
}
```
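button2_Click times inference with two DateTime.Now readings. System.Diagnostics.Stopwatch is the usual .NET class for measuring elapsed intervals; the hypothetical helper below wraps a call with it and can run warm-up calls first, because the first forward pass of a DNN often includes one-time setup that a single measurement would otherwise count.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: times one call with Stopwatch after optional warm-up runs.
public static class TimingSketch
{
    public static T Measure<T>(Func<T> action, int warmupRuns, out double elapsedMs)
    {
        for (int i = 0; i < warmupRuns; i++)
            action(); // warm-up results are discarded

        var sw = Stopwatch.StartNew();
        T result = action();
        sw.Stop();
        elapsedMs = sw.Elapsed.TotalMilliseconds;
        return result;
    }
}
```

In Form1 it could be called as `TimingSketch.Measure(() => rotationNet.detect(image, iter_num), 0, out double ms)`. Warm-up calls to detect return Mat objects that are discarded without being disposed, so keep the warm-up count small or dispose the results explicitly.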


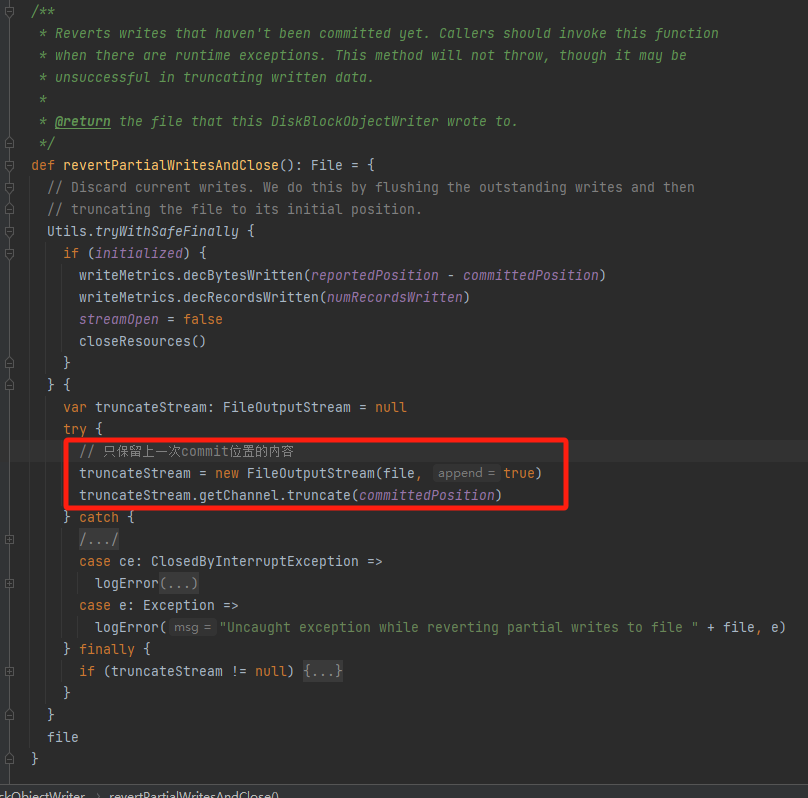

CoupledTPS_RotationNet.cs

```csharp
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System.Collections.Generic;
using System.Linq;

namespace Onnx_Demo
{
    public class CoupledTPS_RotationNet
    {
        // Network input size (feature_extractor.onnx expects [1, 3, 384, 512])
        int input_height = 384;
        int input_width = 512;
        // TPS mesh: 6 x 8 cells, i.e. 7 x 9 control points (mesh_motion is [1, 7, 9, 2])
        int grid_h = 6;
        int grid_w = 8;
        Mat grid = new Mat();
        Mat W_inv = new Mat();
        Net feature_extractor;
        Net regressNet;

        public CoupledTPS_RotationNet(string modelpatha, string modelpathb)
        {
            feature_extractor = CvDnn.ReadNet(modelpatha);
            regressNet = CvDnn.ReadNet(modelpathb);
            // Precompute the sampling grid and the inverse TPS system matrix of the rigid mesh
            tps2flow.get_norm_rigid_mesh_inv_grid(ref grid, ref W_inv, input_height, input_width, grid_h, grid_w);
        }

        unsafe public Mat detect(Mat srcimg, int iter_num)
        {
            // Resize to the network size and normalize pixels to [-1, 1]
            Mat img = new Mat();
            Cv2.Resize(srcimg, img, new Size(input_width, input_height));
            img.ConvertTo(img, MatType.CV_32FC3, 1.0 / 127.5d, -1.0d);
            Mat input_tensor = CvDnn.BlobFromImage(img);

            // Extract features once: [1, 256, 24, 32]
            feature_extractor.SetInput(input_tensor);
            Mat[] feature_oris = new Mat[1] { new Mat() };
            string[] outBlobNames = feature_extractor.GetUnconnectedOutLayersNames().ToArray();
            feature_extractor.Forward(feature_oris, outBlobNames);
            Mat feature = feature_oris[0].Clone();

            // Accumulated flow field: [1, 2, H, W]
            int[] shape = { 1, 2, input_height, input_width };
            Mat flow = Mat.Zeros(MatType.CV_32FC1, shape);
            List<Mat> flow_list = new List<Mat>();

            for (int i = 0; i < iter_num; i++)
            {
                // Predict control-point offsets: [1, 7, 9, 2]
                regressNet.SetInput(feature);
                Mat[] mesh_motions = new Mat[1] { new Mat() };
                regressNet.Forward(mesh_motions, regressNet.GetUnconnectedOutLayersNames().ToArray());
                float* offset = (float*)mesh_motions[0].Data;

                // Solve the TPS system and turn it into a dense flow field
                Mat tp = new Mat();
                tps2flow.get_ori_rigid_mesh_tp(ref tp, offset, input_height, input_width, grid_h, grid_w);
                Mat T = W_inv * tp;  // _solve_system
                T = T.T();           // transpose; the batch dimension is dropped
                Mat T_g = T * grid;
                Mat delta_flow = new Mat();
                tps2flow._transform(T_g, grid, input_height, input_width, ref delta_flow);

                // Couple the new flow with the accumulated one
                if (i == 0)
                {
                    flow += delta_flow;
                }
                else
                {
                    Mat warped_flow = new Mat();
                    grid_sample.warp_with_flow(flow, delta_flow, ref warped_flow);
                    flow = delta_flow + warped_flow;
                }
                flow_list.Add(flow.Clone());

                if (i < (iter_num - 1))
                {
                    // Resample the flow to the feature-map size and rescale the vectors
                    int fea_h = feature.Size(2);
                    int fea_w = feature.Size(3);
                    float scale_h = (float)fea_h / flow.Size(2);
                    float scale_w = (float)fea_w / flow.Size(3);
                    Mat down_flow = new Mat();
                    upsample.UpSamplingBilinear(flow, ref down_flow, fea_h, fea_w, true, scale_h, scale_w);
                    for (int h = 0; h < fea_h; h++)
                    {
                        // Channel 0 is scaled by the width ratio, channel 1 by the height ratio
                        float* p_w = (float*)down_flow.Ptr(0, 0, h);
                        float* p_h = (float*)down_flow.Ptr(0, 1, h);
                        for (int w = 0; w < fea_w; w++)
                        {
                            p_w[w] *= scale_w;
                            p_h[w] *= scale_h;
                        }
                    }

                    // Warp the original features with the accumulated flow for the next iteration
                    feature.Release();
                    feature = new Mat();
                    grid_sample.warp_with_flow(feature_oris[0], down_flow, ref feature);
                }
            }

            // Warp the input tensor with the final flow and convert it back to an image
            Mat correction_final = new Mat();
            grid_sample.warp_with_flow(input_tensor, flow_list[iter_num - 1], ref correction_final);
            Mat correction_img = grid_sample.convert4dtoimage(correction_final);
            return correction_img;
        }
    }
}
```
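The loop above relies on grid_sample.warp_with_flow, whose source is not included in this post. Assuming it performs backward warping, out(y, x) = in(y + flow_y, x + flow_x) with bilinear interpolation, where channel 0 holds the x displacement (consistent with channel 0 being scaled by scale_w) and samples outside the image read as zero, the coupling step flow = delta_flow + warp(flow, delta_flow) composes the two flows: warping with the old flow and then with delta equals, away from the image border, a single warp with the combined flow. The plain C# sketch below demonstrates this on [C, H, W] arrays in pixel units; the real helper may use normalized coordinates, a different padding mode or align_corners convention, and all names here are hypothetical.

```csharp
using System;

// Hypothetical sketch of backward warping with a dense flow and of the flow coupling step.
// Assumes flow[0] is the x (width) displacement and flow[1] the y displacement, in pixels.
public static class FlowSketch
{
    // Bilinear sample of channel c at (x, y); neighbours outside the image count as zero.
    static float Sample(float[,,] img, int c, float x, float y)
    {
        int h = img.GetLength(1), w = img.GetLength(2);
        int x0 = (int)Math.Floor(x), y0 = (int)Math.Floor(y);
        float ax = x - x0, ay = y - y0;
        float v = 0;
        for (int dy = 0; dy <= 1; dy++)
            for (int dx = 0; dx <= 1; dx++)
            {
                int xi = x0 + dx, yi = y0 + dy;
                if (xi < 0 || yi < 0 || xi >= w || yi >= h) continue;
                float wt = (dx == 1 ? ax : 1 - ax) * (dy == 1 ? ay : 1 - ay);
                v += wt * img[c, yi, xi];
            }
        return v;
    }

    // Backward warp: out(c, y, x) = img(c, y + flow_y, x + flow_x)
    public static float[,,] Warp(float[,,] img, float[,,] flow)
    {
        int ch = img.GetLength(0), h = img.GetLength(1), w = img.GetLength(2);
        var outImg = new float[ch, h, w];
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                float sx = x + flow[0, y, x], sy = y + flow[1, y, x];
                for (int c = 0; c < ch; c++)
                    outImg[c, y, x] = Sample(img, c, sx, sy);
            }
        return outImg;
    }

    // Coupling step: F_new = delta + Warp(F_old, delta)
    public static float[,,] Compose(float[,,] oldFlow, float[,,] delta)
    {
        var warped = Warp(oldFlow, delta);
        int h = delta.GetLength(1), w = delta.GetLength(2);
        var result = new float[2, h, w];
        for (int c = 0; c < 2; c++)
            for (int y = 0; y < h; y++)
                for (int x = 0; x < w; x++)
                    result[c, y, x] = delta[c, y, x] + warped[c, y, x];
        return result;
    }
}
```

This is also why only the last entry of flow_list is needed at the end: it already encodes every iteration, so the input tensor is resampled once instead of once per iteration.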

Download

Source code download